Decision trees are simple, interpretable models that split data based on feature values to create a tree-like structure for classification or regression tasks. Random forests improve predictive performance by constructing multiple decision trees using different subsets of data and features, then aggregating their outputs to reduce overfitting and increase accuracy. This ensemble approach enhances robustness against noise and variance compared to single decision trees.

Table of Comparison

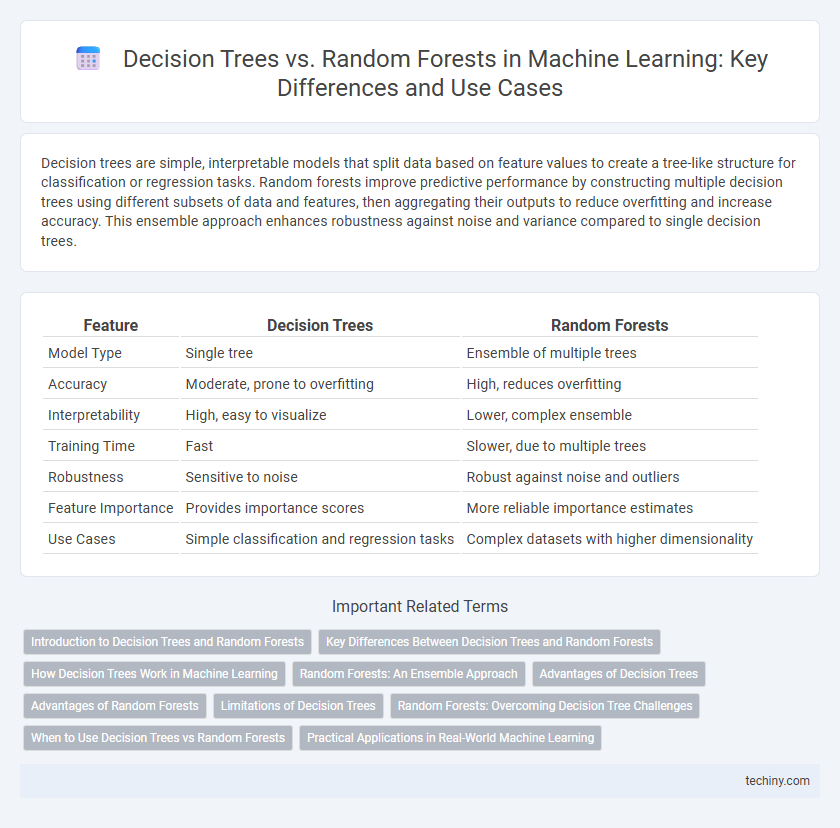

| Feature | Decision Trees | Random Forests |

|---|---|---|

| Model Type | Single tree | Ensemble of multiple trees |

| Accuracy | Moderate, prone to overfitting | High, reduces overfitting |

| Interpretability | High, easy to visualize | Lower, complex ensemble |

| Training Time | Fast | Slower, due to multiple trees |

| Robustness | Sensitive to noise | Robust against noise and outliers |

| Feature Importance | Provides importance scores | More reliable importance estimates |

| Use Cases | Simple classification and regression tasks | Complex datasets with higher dimensionality |

Introduction to Decision Trees and Random Forests

Decision trees create a flowchart-like model of decisions based on feature values, making them intuitive for classification and regression tasks in machine learning. Random forests build on decision trees by aggregating the predictions of multiple trees, enhancing accuracy and reducing overfitting through ensemble learning. This combination leverages the simplicity of decision trees and the robustness of collective wisdom for improved predictive performance.

Key Differences Between Decision Trees and Random Forests

Decision trees use a single model to make decisions by splitting data based on feature values, while random forests aggregate multiple decision trees to improve predictive accuracy and reduce overfitting. Random forests introduce randomness through bootstrap sampling and feature selection, enhancing model robustness compared to the deterministic structure of individual decision trees. Key differences also include interpretability, with decision trees being more interpretable and random forests delivering higher performance on complex datasets.

How Decision Trees Work in Machine Learning

Decision Trees in machine learning partition data by recursively splitting features into branches based on criteria like Gini impurity or information gain to create a tree structure of decision rules. Each leaf node represents a class label or continuous value prediction, enabling interpretable and straightforward classification or regression. This hierarchical approach allows efficient handling of both categorical and numerical data while capturing complex feature interactions.

Random Forests: An Ensemble Approach

Random Forests leverage an ensemble of decision trees to improve predictive accuracy and reduce overfitting by averaging multiple tree outputs. This approach enhances model robustness and generalization, especially in complex datasets with high dimensionality or noisy features. Random Forests are widely used for classification and regression tasks due to their ability to handle large-scale data and provide feature importance insights.

Advantages of Decision Trees

Decision trees offer clear interpretability, allowing users to easily visualize and understand the decision-making process. They require less computational power compared to random forests, making them faster for training and prediction on smaller datasets. Additionally, decision trees can handle both numerical and categorical data without extensive preprocessing, enhancing their flexibility in various machine learning applications.

Advantages of Random Forests

Random forests improve prediction accuracy by aggregating the results of multiple decision trees, reducing the risk of overfitting commonly seen in single decision trees. The ensemble method enhances model robustness and generalization through bootstrapped sampling and feature randomness, capturing complex data patterns effectively. Random forests also provide reliable feature importance metrics, aiding interpretability and feature selection in machine learning tasks.

Limitations of Decision Trees

Decision trees often suffer from overfitting, leading to poor generalization on unseen data due to their high variance. They are sensitive to small changes in the training dataset, causing significant fluctuations in the tree structure and predictions. Additionally, decision trees tend to create biased splits when dealing with imbalanced data or features with varying scales, limiting their robustness.

Random Forests: Overcoming Decision Tree Challenges

Random Forests overcome decision tree challenges by aggregating multiple decision trees to improve accuracy and reduce overfitting. This ensemble method leverages bootstrapped samples and feature randomness, leading to robust predictions even on complex datasets. Random Forests excel in handling high-dimensional data and noisy features, enhancing model stability and generalization.

When to Use Decision Trees vs Random Forests

Decision trees excel in scenarios requiring quick, interpretable models with straightforward decision rules, particularly in smaller datasets or when model explainability is paramount. Random forests are preferred for larger, more complex datasets where improved accuracy and robustness against overfitting are critical due to their ensemble approach averaging multiple decision trees. In cases involving noisy data or non-linear relationships, random forests provide better generalization and predictive performance compared to single decision trees.

Practical Applications in Real-World Machine Learning

Decision trees offer straightforward interpretability and quick model training, making them ideal for applications like customer segmentation and credit scoring where transparency is crucial. Random forests improve predictive accuracy and reduce overfitting by aggregating multiple decision trees, which is advantageous in complex tasks such as fraud detection and medical diagnosis. In real-world machine learning, random forests are preferred for their robustness on noisy datasets, while decision trees excel in scenarios demanding fast, explainable results.

decision trees vs random forests Infographic

techiny.com

techiny.com