Cross-validation provides a more reliable estimate of model performance by partitioning the data into multiple subsets, ensuring each subset is used for both training and validation, which reduces the risk of overfitting. Train-test split divides the data once into separate training and testing sets, making it faster but often less robust to variance in performance evaluation. Cross-validation is preferred for small datasets, while train-test split is suitable for larger datasets where computational efficiency is prioritized.

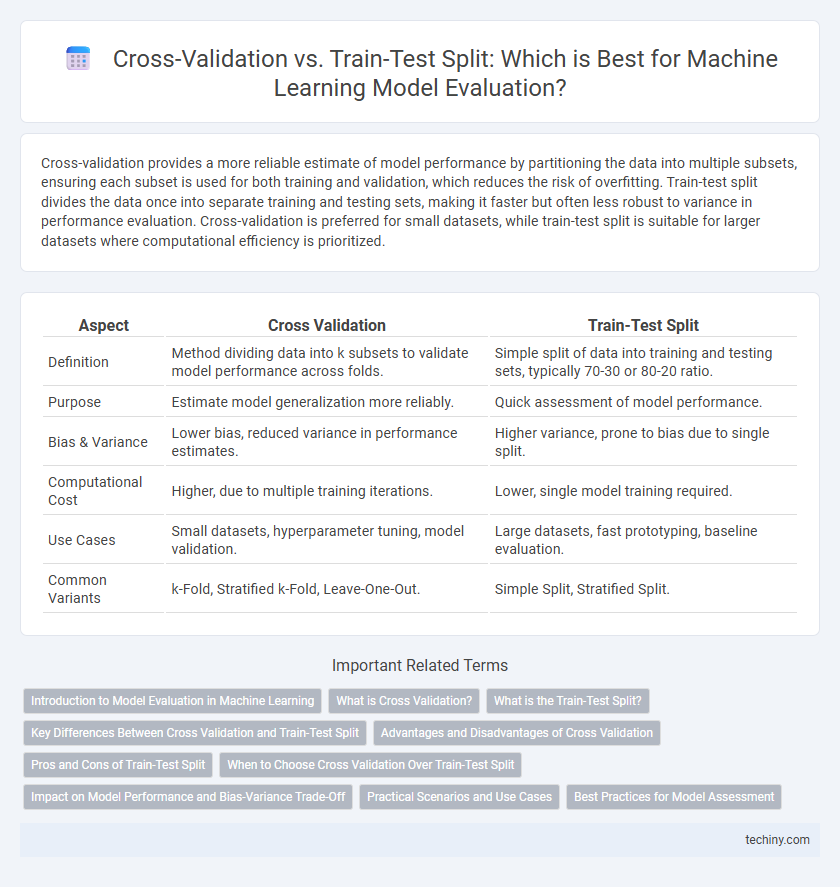

Table of Comparison

| Aspect | Cross Validation | Train-Test Split |

|---|---|---|

| Definition | Method dividing data into k subsets to validate model performance across folds. | Simple split of data into training and testing sets, typically 70-30 or 80-20 ratio. |

| Purpose | Estimate model generalization more reliably. | Quick assessment of model performance. |

| Bias & Variance | Lower bias, reduced variance in performance estimates. | Higher variance, prone to bias due to single split. |

| Computational Cost | Higher, due to multiple training iterations. | Lower, single model training required. |

| Use Cases | Small datasets, hyperparameter tuning, model validation. | Large datasets, fast prototyping, baseline evaluation. |

| Common Variants | k-Fold, Stratified k-Fold, Leave-One-Out. | Simple Split, Stratified Split. |

Introduction to Model Evaluation in Machine Learning

Cross validation provides a more reliable estimate of a machine learning model's performance by partitioning the data into multiple folds, ensuring that each data point is used for both training and testing. In contrast, the train-test split divides the dataset into two distinct sets, which can lead to higher variance in performance evaluation depending on the specific split. Effective model evaluation techniques are essential for detecting overfitting and improving the generalization ability of machine learning models.

What is Cross Validation?

Cross validation is a robust machine learning technique used to assess a model's generalization performance by partitioning the dataset into multiple subsets, typically k folds, to iteratively train and validate the model. This method reduces variance in performance estimation compared to a single train-test split, providing a more reliable evaluation metric. Common variants include k-fold cross validation, stratified k-fold, and leave-one-out cross validation, each optimizing for balanced bias-variance trade-offs.

What is the Train-Test Split?

The train-test split is a fundamental technique in machine learning used to evaluate model performance by dividing the dataset into two parts: a training set and a testing set. Typically, 70-80% of the data is allocated for training the model, while the remaining 20-30% is reserved for testing its generalization ability on unseen data. This method helps prevent overfitting by providing an unbiased evaluation of the model's accuracy and robustness.

Key Differences Between Cross Validation and Train-Test Split

Cross validation involves partitioning the dataset into multiple folds to train and validate models iteratively, providing a more reliable estimate of model performance by reducing variance. Train-test split divides the data once into separate training and testing sets, which is straightforward but may result in higher variance and biased performance estimates due to a single split. Cross validation is preferred for model tuning and evaluation when dataset size is limited, while train-test split is commonly used for a quick, less computationally intensive assessment.

Advantages and Disadvantages of Cross Validation

Cross-validation offers a robust method for assessing machine learning model performance by using multiple training and validation sets, which reduces the risk of overfitting and provides a more reliable estimate of model accuracy compared to a single train-test split. It is computationally intensive and can be time-consuming, especially with large datasets or complex models, limiting its practicality in some scenarios. Cross-validation also helps in hyperparameter tuning by leveraging all data for training and validation, enhancing model generalization across diverse data distributions.

Pros and Cons of Train-Test Split

Train-Test Split offers a straightforward and fast approach for evaluating machine learning models by partitioning data into distinct training and testing sets, enabling quick performance assessment. This method provides simplicity and works well with large datasets but risks high variance in performance estimates due to the random division and potential data imbalance. Moreover, it may lead to overfitting or underfitting if the split does not represent the full data distribution accurately, limiting the model's generalization capabilities.

When to Choose Cross Validation Over Train-Test Split

Cross-validation is preferred over train-test split when maximizing model reliability and minimizing variance in performance evaluation, especially with limited data samples. It provides a more comprehensive assessment by repeatedly partitioning the dataset, reducing the risk of overfitting and selection bias compared to a single train-test split. For complex models or smaller datasets, cross-validation ensures robust hyperparameter tuning and generalization accuracy.

Impact on Model Performance and Bias-Variance Trade-Off

Cross validation provides a more reliable estimate of model performance by reducing variance compared to a single train-test split, helping to mitigate overfitting and underfitting. Train-test split offers faster results but can lead to higher variance in performance metrics due to data partition randomness. The bias-variance trade-off is better managed with k-fold cross validation, which balances bias reduction and variance control, enhancing the generalizability of machine learning models.

Practical Scenarios and Use Cases

Cross-validation offers robust model evaluation by repeatedly partitioning the dataset, making it ideal for small or imbalanced datasets where maximizing data usage is critical. Train-test split is preferred in large-scale applications or real-time scenarios due to its computational efficiency and simplicity, providing quick insights into model performance. Practical use cases for cross-validation include hyperparameter tuning and model selection in academic research, while train-test split suits production environments with time constraints and large volumes of data.

Best Practices for Model Assessment

Cross validation offers a more reliable estimate of machine learning model performance by averaging results across multiple folds, reducing variance and mitigating overfitting risks compared to a single train-test split. Implementing k-fold cross validation, especially with stratification for classification tasks, ensures balanced class distributions in each fold and robust model evaluation. While train-test split is faster for initial assessments, best practices recommend cross validation for thorough model validation and hyperparameter tuning to achieve optimal generalization.

Cross Validation vs Train-Test Split Infographic

techiny.com

techiny.com