Bias refers to errors introduced by approximating a real-world problem with a simplified model, often leading to underfitting, while variance indicates the model's sensitivity to fluctuations in the training data, causing overfitting. Achieving an optimal balance between bias and variance is crucial for developing models that generalize well to unseen data. Techniques such as cross-validation and regularization help mitigate these issues by fine-tuning model complexity and improving predictive performance.

Table of Comparison

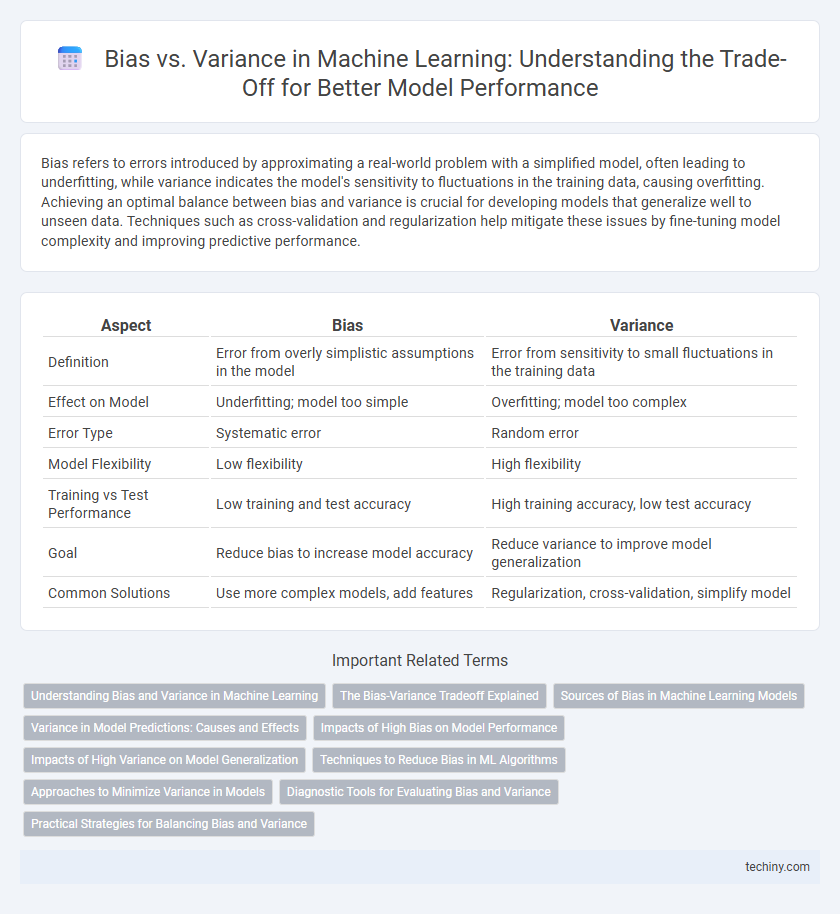

| Aspect | Bias | Variance |

|---|---|---|

| Definition | Error from overly simplistic assumptions in the model | Error from sensitivity to small fluctuations in the training data |

| Effect on Model | Underfitting; model too simple | Overfitting; model too complex |

| Error Type | Systematic error | Random error |

| Model Flexibility | Low flexibility | High flexibility |

| Training vs Test Performance | Low training and test accuracy | High training accuracy, low test accuracy |

| Goal | Reduce bias to increase model accuracy | Reduce variance to improve model generalization |

| Common Solutions | Use more complex models, add features | Regularization, cross-validation, simplify model |

Understanding Bias and Variance in Machine Learning

Understanding bias in machine learning involves recognizing errors caused by overly simplistic models that fail to capture underlying data patterns, leading to underfitting. Variance refers to model sensitivity to small fluctuations in training data, causing overfitting and poor generalization on unseen data. Balancing bias and variance is crucial for creating models that perform well on both training and test datasets, ensuring optimal predictive accuracy.

The Bias-Variance Tradeoff Explained

The bias-variance tradeoff in machine learning highlights the balance between model simplicity and complexity, where high bias causes underfitting and poor predictive accuracy, while high variance leads to overfitting and sensitivity to noise. Minimizing total error requires finding an optimal point where both bias and variance are balanced, enabling the model to generalize well on unseen data. Understanding this tradeoff is crucial for selecting appropriate algorithms and tuning hyperparameters to improve model performance.

Sources of Bias in Machine Learning Models

Sources of bias in machine learning models primarily stem from underrepresented or imbalanced training data, flawed data collection methods, and oversimplified model assumptions. Limited diversity in data samples causes the model to learn patterns that do not generalize well to real-world scenarios, resulting in systematic prediction errors. Model bias can also arise from feature selection processes that exclude relevant information, thereby restricting the model's ability to capture complex relationships within the data.

Variance in Model Predictions: Causes and Effects

Variance in model predictions arises when a machine learning model is excessively complex, capturing noise and fluctuations in the training data that do not generalize to new inputs. High variance leads to overfitting, causing significant prediction errors on unseen datasets and reducing the model's overall robustness. Techniques like cross-validation, regularization, and pruning help mitigate variance by simplifying the model and improving generalization performance.

Impacts of High Bias on Model Performance

High bias in machine learning models leads to systematic errors by oversimplifying the underlying data patterns, causing underfitting and poor generalization on both training and test datasets. Models with high bias typically exhibit low complexity and fail to capture important features, resulting in consistently inaccurate predictions. This limitation reduces the model's ability to learn from data variance, impairing overall performance and predictive reliability.

Impacts of High Variance on Model Generalization

High variance in machine learning models leads to overfitting, where the model captures noise in the training data as if it were a true pattern. This results in poor generalization and significant performance drops on unseen test data. Managing variance is crucial to ensure a balanced bias-variance tradeoff and improve model robustness across different datasets.

Techniques to Reduce Bias in ML Algorithms

Techniques to reduce bias in machine learning algorithms include increasing model complexity by using more sophisticated models like deep neural networks or ensemble methods such as Random Forests and Gradient Boosting Machines. Incorporating feature engineering and selecting relevant features enhance model expressiveness and reduce underfitting. Moreover, using larger and more diverse training datasets helps the model capture complex patterns and decreases systematic errors caused by biased assumptions.

Approaches to Minimize Variance in Models

Minimizing variance in machine learning models involves techniques such as regularization, which includes L1 (Lasso) and L2 (Ridge) penalties that constrain model complexity and prevent overfitting. Ensemble methods like bagging aggregate predictions from multiple models, reducing variance by averaging out errors from individual models. Cross-validation also helps by providing estimates of model performance on unseen data, enabling better tuning of hyperparameters to achieve a balance between bias and variance.

Diagnostic Tools for Evaluating Bias and Variance

Diagnostic tools such as learning curves and residual plots are essential for evaluating bias and variance in machine learning models. Learning curves help identify whether a model is underfitting (high bias) or overfitting (high variance) by plotting training and validation errors over varying sample sizes. Residual analysis provides insight into systematic errors, highlighting if the model struggles to capture underlying patterns or is overly sensitive to noise.

Practical Strategies for Balancing Bias and Variance

Optimizing model performance involves carefully tuning hyperparameters like tree depth or regularization strength to balance bias and variance. Techniques such as cross-validation help detect overfitting or underfitting by evaluating model accuracy on unseen data. Employing ensemble methods like bagging and boosting effectively reduces variance without substantially increasing bias, improving generalization.

Bias vs Variance Infographic

techiny.com

techiny.com