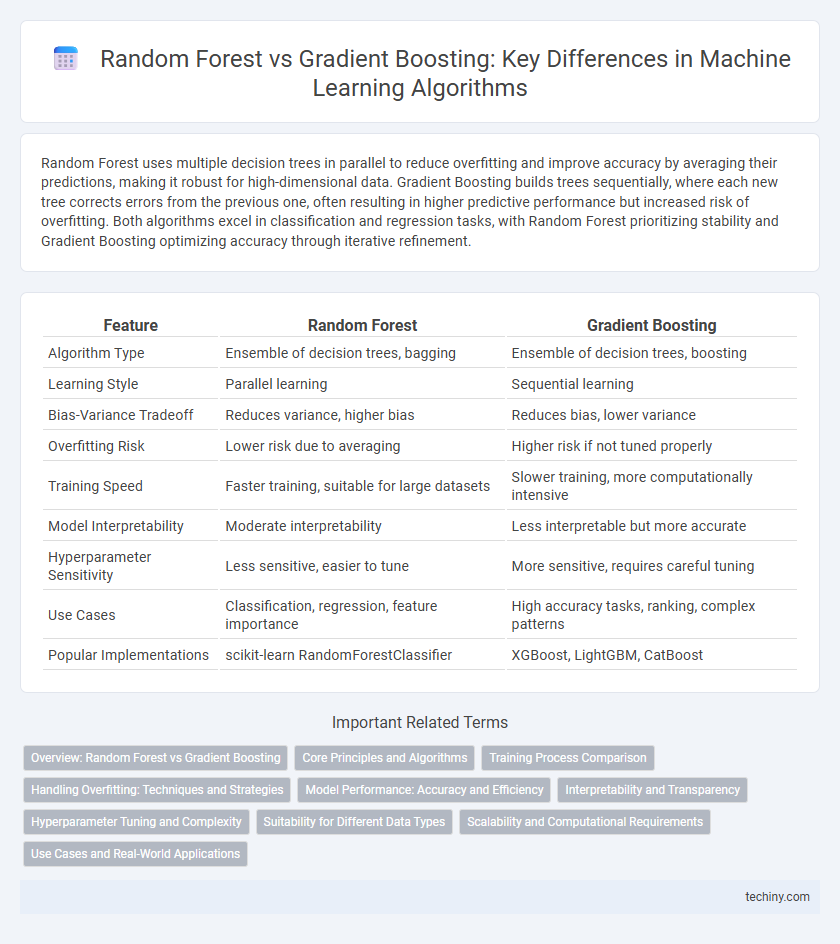

Random Forest uses multiple decision trees in parallel to reduce overfitting and improve accuracy by averaging their predictions, making it robust for high-dimensional data. Gradient Boosting builds trees sequentially, where each new tree corrects errors from the previous one, often resulting in higher predictive performance but increased risk of overfitting. Both algorithms excel in classification and regression tasks, with Random Forest prioritizing stability and Gradient Boosting optimizing accuracy through iterative refinement.

Table of Comparison

| Feature | Random Forest | Gradient Boosting |

|---|---|---|

| Algorithm Type | Ensemble of decision trees, bagging | Ensemble of decision trees, boosting |

| Learning Style | Parallel learning | Sequential learning |

| Bias-Variance Tradeoff | Reduces variance, higher bias | Reduces bias, lower variance |

| Overfitting Risk | Lower risk due to averaging | Higher risk if not tuned properly |

| Training Speed | Faster training, suitable for large datasets | Slower training, more computationally intensive |

| Model Interpretability | Moderate interpretability | Less interpretable but more accurate |

| Hyperparameter Sensitivity | Less sensitive, easier to tune | More sensitive, requires careful tuning |

| Use Cases | Classification, regression, feature importance | High accuracy tasks, ranking, complex patterns |

| Popular Implementations | scikit-learn RandomForestClassifier | XGBoost, LightGBM, CatBoost |

Overview: Random Forest vs Gradient Boosting

Random Forest and Gradient Boosting are powerful ensemble machine learning techniques primarily used for classification and regression tasks. Random Forest builds multiple decision trees independently and aggregates their results to reduce overfitting and improve accuracy, while Gradient Boosting constructs trees sequentially, optimizing for errors in prior models to enhance predictive performance. Gradient Boosting often achieves higher accuracy on complex datasets but is more sensitive to hyperparameter tuning and computationally intensive compared to the more robust and faster Random Forest.

Core Principles and Algorithms

Random Forest operates on the principle of bagging, where numerous decision trees are independently trained on bootstrapped data samples, and predictions are aggregated through majority voting for classification or averaging for regression. Gradient Boosting builds models sequentially by optimizing a loss function using gradient descent, where each new tree corrects errors made by previous trees, enhancing prediction accuracy over iterations. While Random Forest emphasizes variance reduction via parallel tree construction, Gradient Boosting focuses on bias reduction through additive model updates guided by gradients.

Training Process Comparison

Random Forest trains multiple decision trees independently using bootstrap samples and aggregates their outputs through majority voting or averaging, which enables parallelization and typically faster training times. Gradient Boosting sequentially builds trees where each new tree corrects errors from the previous ensemble, requiring careful tuning of learning rates and often resulting in longer, more resource-intensive training processes. The independent nature of Random Forest allows for easier scalability, while Gradient Boosting's sequential training produces more refined models at the cost of increased complexity and time.

Handling Overfitting: Techniques and Strategies

Random forest mitigates overfitting through extensive use of bootstrap aggregation (bagging) and random feature selection for tree construction, which promotes model diversity and reduces variance. Gradient boosting addresses overfitting by employing regularization techniques such as shrinkage (learning rate), early stopping, and tree constraints like maximum depth and minimum samples per leaf. Both methods incorporate cross-validation and hyperparameter tuning to optimize generalization and minimize model complexity.

Model Performance: Accuracy and Efficiency

Random Forest algorithms excel in accuracy through ensemble averaging, reducing overfitting and enhancing generalization on diverse datasets, while Gradient Boosting achieves higher predictive accuracy by sequentially correcting errors but at the cost of increased computational intensity and training time. Random Forest offers faster training and prediction efficiency due to parallel tree construction, whereas Gradient Boosting's iterative approach demands more resources but often yields superior fine-tuned models. The choice between the two depends on the trade-off between desired model precision and available computational efficiency.

Interpretability and Transparency

Random Forest offers better interpretability by averaging multiple decision trees, making feature importance clearer and model behavior easier to understand for domain experts. Gradient Boosting, while often more accurate, builds sequential trees that complicate transparency due to intricate dependencies and non-linear interactions. Tools like SHAP values partially improve interpretability for Gradient Boosting but still require more computational resources and expertise compared to Random Forest explanations.

Hyperparameter Tuning and Complexity

Random Forest typically requires fewer hyperparameters to tune, focusing mainly on the number of trees, maximum depth, and minimum samples per leaf, resulting in lower model complexity and faster training times. Gradient Boosting involves more sensitive hyperparameters such as learning rate, number of boosting stages, and subsample ratio, which demand careful optimization to prevent overfitting and improve predictive performance. Complexity in Gradient Boosting is inherently higher due to sequential tree building and iterative error correction, making hyperparameter tuning critical for balancing bias-variance tradeoff.

Suitability for Different Data Types

Random forest excels in handling high-dimensional datasets and is robust to noisy data, making it suitable for diverse and unstructured inputs such as images and text. Gradient boosting performs exceptionally well on structured tabular data by iteratively minimizing errors, but it requires careful tuning to avoid overfitting on noisy or imbalanced datasets. Choosing between the two depends on the data complexity, with random forest offering stability and gradient boosting providing higher predictive accuracy on well-preprocessed, clean datasets.

Scalability and Computational Requirements

Random forest algorithms are highly scalable due to their parallelizable structure, allowing simultaneous training of multiple decision trees, which reduces computational time on large datasets. Gradient boosting involves sequential tree construction, increasing computational requirements and training time but often yielding higher predictive accuracy. When managing resource constraints, random forests provide more efficient scalability, whereas gradient boosting requires optimized hardware or distributed computing for handling extensive data effectively.

Use Cases and Real-World Applications

Random Forest excels in handling large datasets with high dimensionality, making it ideal for applications like fraud detection, medical diagnosis, and customer segmentation where interpretability and robustness are crucial. Gradient Boosting offers superior predictive accuracy in scenarios like credit scoring, risk assessment, and sales forecasting by sequentially correcting errors of previous models. Both methods are widely used in real-world applications but differ in their approach to bias-variance trade-off and computational complexity.

random forest vs gradient boosting Infographic

techiny.com

techiny.com