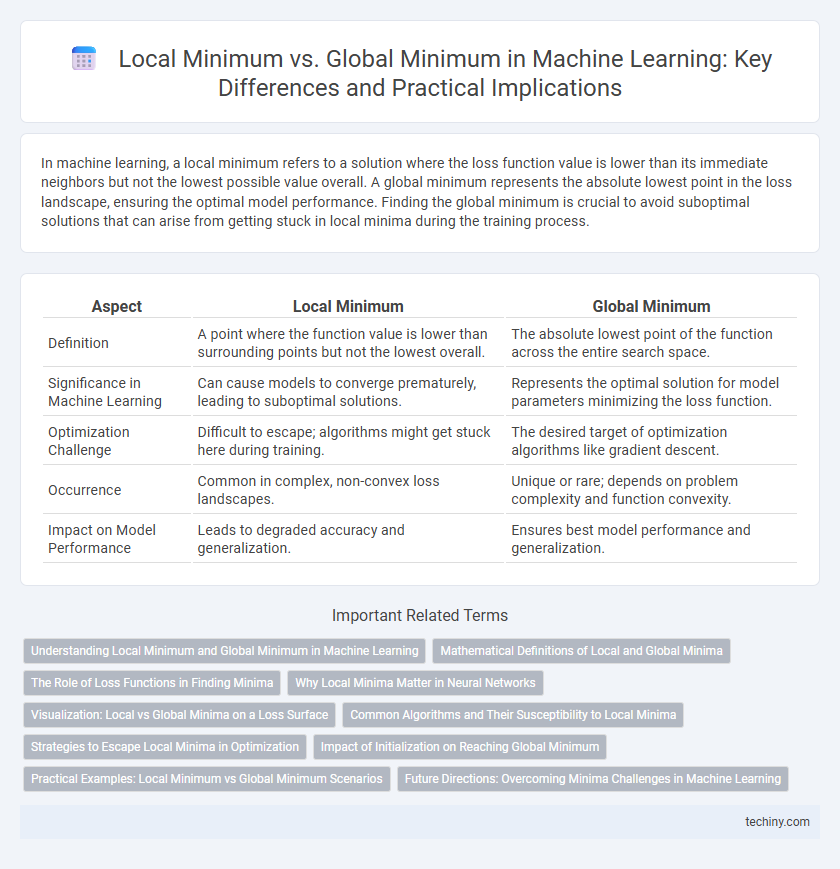

In machine learning, a local minimum refers to a solution where the loss function value is lower than its immediate neighbors but not the lowest possible value overall. A global minimum represents the absolute lowest point in the loss landscape, ensuring the optimal model performance. Finding the global minimum is crucial to avoid suboptimal solutions that can arise from getting stuck in local minima during the training process.

Table of Comparison

| Aspect | Local Minimum | Global Minimum |

|---|---|---|

| Definition | A point where the function value is lower than surrounding points but not the lowest overall. | The absolute lowest point of the function across the entire search space. |

| Significance in Machine Learning | Can cause models to converge prematurely, leading to suboptimal solutions. | Represents the optimal solution for model parameters minimizing the loss function. |

| Optimization Challenge | Difficult to escape; algorithms might get stuck here during training. | The desired target of optimization algorithms like gradient descent. |

| Occurrence | Common in complex, non-convex loss landscapes. | Unique or rare; depends on problem complexity and function convexity. |

| Impact on Model Performance | Leads to degraded accuracy and generalization. | Ensures best model performance and generalization. |

Understanding Local Minimum and Global Minimum in Machine Learning

Local minimum in machine learning refers to a solution point where the error or loss function has a lower value than neighboring points, but it is not the lowest possible value across the entire parameter space. Global minimum represents the absolute lowest value of the loss function, indicating the best possible model performance achievable during training. Understanding the distinction between local and global minima is critical for optimizing algorithms, as many optimization methods like gradient descent risk getting stuck in local minima rather than converging to the global minimum.

Mathematical Definitions of Local and Global Minima

A local minimum of a function f(x) is a point x = a where f(a) <= f(x) for all x in a neighborhood around a, meaning no nearby points have a lower function value. A global minimum is a point x = b where f(b) <= f(x) for all x in the entire domain, representing the absolute lowest value of the function. In optimization problems within machine learning, distinguishing between local minima and the global minimum is critical for ensuring the best model performance.

The Role of Loss Functions in Finding Minima

Loss functions play a critical role in guiding optimization algorithms toward minima by quantifying the error between predicted and true values. The shape and complexity of a loss function's surface determine whether the algorithm converges to a local minimum, representing a suboptimal solution, or the global minimum, which offers the lowest possible error. Carefully designing and selecting loss functions helps in navigating the optimization landscape to achieve better model performance and generalization.

Why Local Minima Matter in Neural Networks

Local minima significantly impact neural networks by trapping the optimization process in suboptimal weight configurations, resulting in poorer model performance. These points can hinder convergence to the global minimum, where the loss function achieves its lowest possible value, thereby limiting generalization accuracy. Understanding the landscape of the loss function and employing techniques like stochastic gradient descent or regularization helps mitigate the adverse effects of local minima in training deep neural networks.

Visualization: Local vs Global Minima on a Loss Surface

Visualizing local and global minima on a loss surface reveals the complexity of optimizing machine learning models, where local minima represent suboptimal points and the global minimum corresponds to the best possible solution. The loss surface often exhibits multiple valleys and peaks, illustrating how gradient descent algorithms can become trapped in local minima, hindering model performance. Effective visualization techniques like contour plots and 3D surface graphs help identify these minima, guiding the design of optimization strategies to achieve more accurate, generalized models.

Common Algorithms and Their Susceptibility to Local Minima

Gradient descent and its variants, such as stochastic gradient descent (SGD), are widely used optimization algorithms in machine learning that often converge to local minima due to non-convex loss landscapes in neural networks. Algorithms like simulated annealing and genetic algorithms incorporate stochastic elements or population-based search to better explore the solution space and avoid local minima, aiming for the global minimum. However, despite these techniques, high-dimensional models like deep networks remain prone to suboptimal local minima, influencing model performance and generalization.

Strategies to Escape Local Minima in Optimization

Techniques such as stochastic gradient descent introduce randomness to help models escape local minima by exploring diverse regions of the loss landscape. Incorporating momentum accelerates convergence and allows the optimization process to overcome shallow local minima by maintaining directionality in parameter updates. Advanced methods like simulated annealing and adaptive learning rates dynamically adjust optimization pathways to enhance the chances of reaching the global minimum rather than settling in suboptimal local minima.

Impact of Initialization on Reaching Global Minimum

Initialization plays a crucial role in determining whether a machine learning algorithm converges to a local minimum or the global minimum of the loss function. Poor initialization can cause gradient-based optimization methods, such as stochastic gradient descent, to become trapped in suboptimal local minima, resulting in reduced model accuracy. Techniques like Xavier or He initialization improve the chances of reaching the global minimum by providing better starting points in high-dimensional parameter spaces.

Practical Examples: Local Minimum vs Global Minimum Scenarios

In machine learning, local minimum and global minimum scenarios often arise during model training when optimizing loss functions. For example, in training deep neural networks, the optimizer may get stuck in a local minimum, leading to suboptimal model performance, whereas reaching the global minimum corresponds to the lowest possible error and best generalization. Practical cases like tuning hyperparameters for support vector machines or gradient descent in linear regression highlight the importance of escaping local minima to achieve improved predictive accuracy and model robustness.

Future Directions: Overcoming Minima Challenges in Machine Learning

Future directions in overcoming minima challenges in machine learning emphasize advanced optimization algorithms such as stochastic gradient descent variants, adaptive learning rates, and meta-heuristic methods to escape local minima traps. Research into loss surface topology through visualization and curvature analysis enhances understanding of global minima accessibility in deep neural networks. Integration of reinforcement learning and evolutionary strategies demonstrates promising potential for navigating complex error landscapes toward optimal model convergence.

Local Minimum vs Global Minimum Infographic

techiny.com

techiny.com