Early stopping prevents overfitting by halting training as soon as the model's performance on a validation set deteriorates, effectively controlling model complexity. Weight decay adds a regularization term to the loss function, penalizing large weights and encouraging simpler models with better generalization. Both techniques improve model robustness but target overfitting through different mechanisms: early stopping monitors training progress, while weight decay adjusts the optimization landscape.

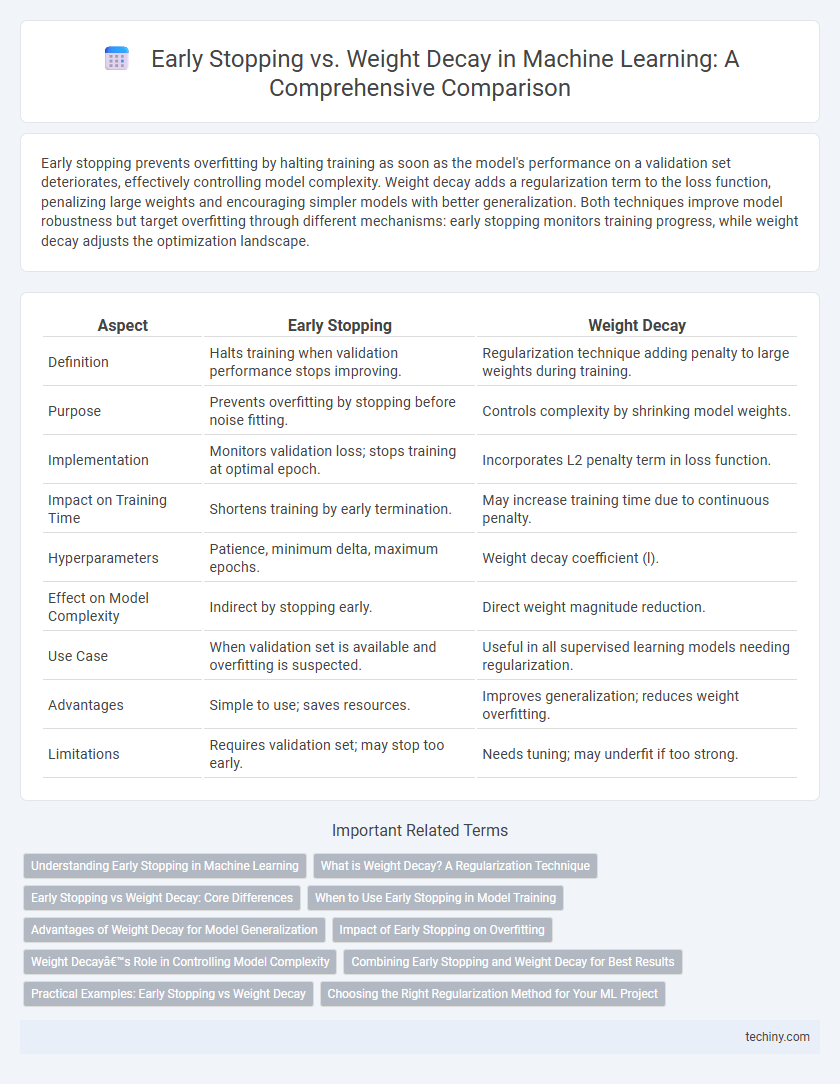

Table of Comparison

| Aspect | Early Stopping | Weight Decay |

|---|---|---|

| Definition | Halts training when validation performance stops improving. | Regularization technique adding penalty to large weights during training. |

| Purpose | Prevents overfitting by stopping before noise fitting. | Controls complexity by shrinking model weights. |

| Implementation | Monitors validation loss; stops training at optimal epoch. | Incorporates L2 penalty term in loss function. |

| Impact on Training Time | Shortens training by early termination. | May increase training time due to continuous penalty. |

| Hyperparameters | Patience, minimum delta, maximum epochs. | Weight decay coefficient (l). |

| Effect on Model Complexity | Indirect by stopping early. | Direct weight magnitude reduction. |

| Use Case | When validation set is available and overfitting is suspected. | Useful in all supervised learning models needing regularization. |

| Advantages | Simple to use; saves resources. | Improves generalization; reduces weight overfitting. |

| Limitations | Requires validation set; may stop too early. | Needs tuning; may underfit if too strong. |

Understanding Early Stopping in Machine Learning

Early stopping in machine learning is a regularization technique used to prevent overfitting by halting training when the model's performance on a validation set starts to degrade. This method monitors the validation loss during training and stops the process once the loss increases, thereby preserving generalization. Unlike weight decay, which adds a penalty to the loss function to restrict model complexity, early stopping directly limits the number of training iterations based on validation metrics.

What is Weight Decay? A Regularization Technique

Weight decay is a regularization technique in machine learning that penalizes large weights by adding a term proportional to the magnitude of the weights to the loss function, thereby preventing overfitting. This method effectively constrains the model complexity and encourages simpler models by shrinking weights toward zero during training. By reducing model variance, weight decay improves generalization performance on unseen data compared to early stopping alone.

Early Stopping vs Weight Decay: Core Differences

Early stopping and weight decay are two regularization techniques in machine learning aimed at preventing overfitting but differ fundamentally in approach. Early stopping halts training once the model's performance on a validation set ceases to improve, effectively controlling complexity by limiting training time. Weight decay adds a penalty to the loss function proportional to the magnitude of model weights, encouraging smaller weights and smoother models.

When to Use Early Stopping in Model Training

Early stopping is most effective during model training when overfitting is detected early in the learning process, as it halts training once validation performance begins to degrade. This technique is particularly useful for neural networks and deep learning models where lengthy training runs risk memorizing noise instead of generalizing patterns. In contrast, weight decay serves as a regularization method throughout training by penalizing large weights but does not inherently determine the optimal stopping point.

Advantages of Weight Decay for Model Generalization

Weight decay improves model generalization by penalizing large weights, which reduces overfitting and helps maintain simpler models that better capture underlying patterns in data. It continuously regularizes the training process, ensuring robustness across different datasets without halting training prematurely. This method effectively balances model complexity and training duration, leading to more reliable performance on unseen data.

Impact of Early Stopping on Overfitting

Early stopping effectively reduces overfitting by halting training once the model's performance on a validation set begins to decline, preventing it from fitting noise in the training data. Unlike weight decay, which penalizes large weights to control model complexity, early stopping directly monitors generalization performance, making it a practical and adaptive regularization technique. Empirical studies demonstrate that early stopping often results in better generalization compared to fixed regularization parameters such as weight decay.

Weight Decay’s Role in Controlling Model Complexity

Weight decay acts as a regularization technique by adding a penalty term proportional to the magnitude of model weights, effectively discouraging overly complex models that may overfit training data. It constrains the growth of parameter values, promoting simpler hypotheses that generalize better on unseen datasets. By controlling the L2 norm of weights, weight decay stabilizes training dynamics and improves model robustness compared to early stopping, which halts training based on validation performance without directly modifying model complexity.

Combining Early Stopping and Weight Decay for Best Results

Combining early stopping with weight decay enhances model generalization by balancing training duration and parameter regularization, preventing overfitting more effectively than either technique alone. Early stopping monitors validation performance to halt training at the optimal point, while weight decay adds an L2 penalty to model weights, encouraging simpler (lower norm) solutions. This synergy reduces both variance and bias, improving predictive accuracy in complex machine learning tasks such as deep neural network training.

Practical Examples: Early Stopping vs Weight Decay

Early stopping monitors validation loss during training to halt the process once performance degrades, effectively preventing overfitting in models like neural networks and gradient boosting machines. Weight decay, implemented as L2 regularization, adds a penalty proportional to the squared magnitude of weights, encouraging smaller parameter values and improving generalization in deep learning architectures. In practice, early stopping suits scenarios with limited epochs or costly computation, while weight decay is preferred for continuous training environments requiring robust regularization across layers.

Choosing the Right Regularization Method for Your ML Project

Early stopping halts training when validation performance stops improving, effectively preventing overfitting by limiting model complexity. Weight decay adds a penalty term to the loss function, encouraging smaller weights and promoting generalization by controlling model capacity. Choosing between early stopping and weight decay depends on your dataset size, model architecture, and computational resources to achieve optimal regularization and performance.

Early stopping vs Weight decay Infographic

techiny.com

techiny.com