The curse of dimensionality refers to the exponential increase in data sparsity and computational complexity as the number of features grows, hindering learning efficiency and model performance. The manifold hypothesis posits that high-dimensional data often lie on a lower-dimensional manifold embedded within the ambient space, allowing algorithms to exploit this structure for improved generalization. Leveraging manifold learning techniques helps mitigate the curse of dimensionality by uncovering intrinsic data geometry and reducing effective dimensionality.

Table of Comparison

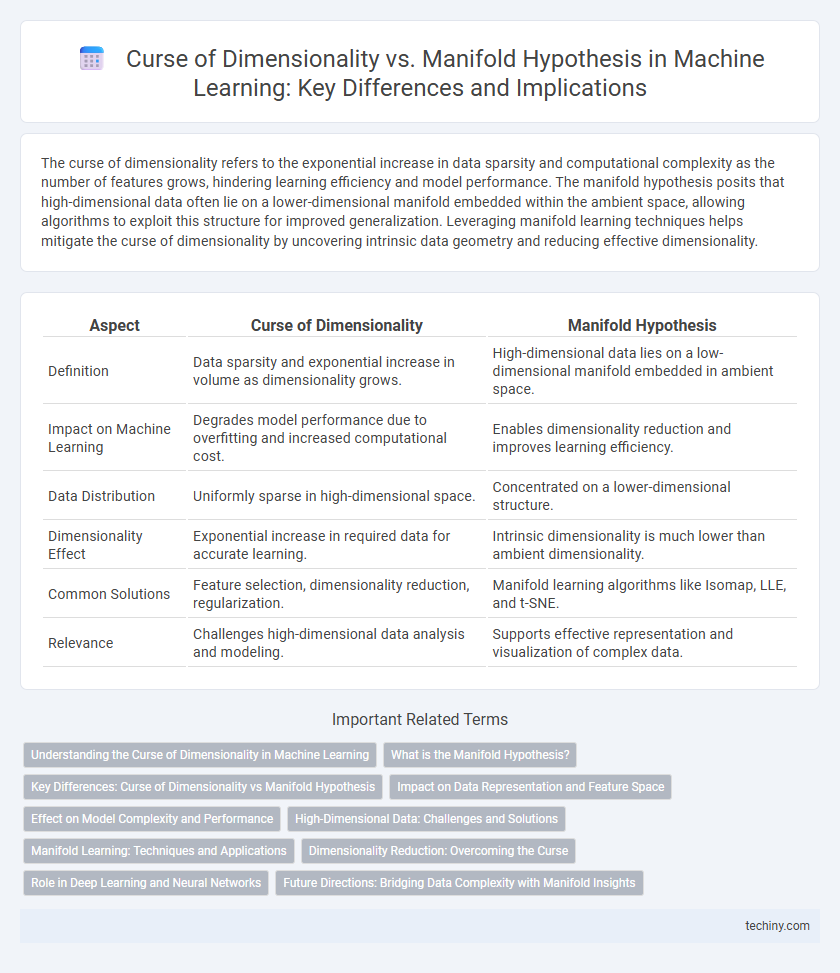

| Aspect | Curse of Dimensionality | Manifold Hypothesis |

|---|---|---|

| Definition | Data sparsity and exponential increase in volume as dimensionality grows. | High-dimensional data lies on a low-dimensional manifold embedded in ambient space. |

| Impact on Machine Learning | Degrades model performance due to overfitting and increased computational cost. | Enables dimensionality reduction and improves learning efficiency. |

| Data Distribution | Uniformly sparse in high-dimensional space. | Concentrated on a lower-dimensional structure. |

| Dimensionality Effect | Exponential increase in required data for accurate learning. | Intrinsic dimensionality is much lower than ambient dimensionality. |

| Common Solutions | Feature selection, dimensionality reduction, regularization. | Manifold learning algorithms like Isomap, LLE, and t-SNE. |

| Relevance | Challenges high-dimensional data analysis and modeling. | Supports effective representation and visualization of complex data. |

Understanding the Curse of Dimensionality in Machine Learning

The curse of dimensionality in machine learning refers to the exponential increase in data sparsity as the number of features grows, which undermines model accuracy and increases computational complexity. Manifold hypothesis suggests high-dimensional data often lies on lower-dimensional manifolds, enabling more effective learning and dimensionality reduction techniques like PCA and t-SNE. Understanding this relationship guides the design of algorithms that overcome sparsity and improve generalization in complex data spaces.

What is the Manifold Hypothesis?

The Manifold Hypothesis posits that high-dimensional data in machine learning often lies on a lower-dimensional manifold embedded within the ambient space, allowing models to exploit intrinsic data structures. This contrasts with the curse of dimensionality, which highlights challenges like sparse data and increased computational complexity in high-dimensional settings. Understanding the manifold structure enables more efficient learning algorithms and improved generalization by focusing on relevant features in reduced dimensions.

Key Differences: Curse of Dimensionality vs Manifold Hypothesis

The curse of dimensionality refers to the exponential increase in data sparsity and computational complexity as the number of features grows, often leading to overfitting and poor model generalization in high-dimensional spaces. In contrast, the manifold hypothesis suggests that high-dimensional data actually lies on a lower-dimensional manifold embedded within the higher-dimensional space, enabling machine learning models to leverage intrinsic data structure to improve learning efficiency and accuracy. Key differences include the curse of dimensionality emphasizing challenges in high dimensions due to sparsity, while the manifold hypothesis focuses on exploiting inherent low-dimensional geometries to overcome those challenges.

Impact on Data Representation and Feature Space

The curse of dimensionality significantly increases data sparsity in high-dimensional feature spaces, making distance metrics less meaningful and hindering model performance due to overfitting and computational inefficiency. The manifold hypothesis addresses this by suggesting that high-dimensional data often lies on lower-dimensional manifolds, enabling more efficient data representation and improved learning by capturing the intrinsic structure of the data. Leveraging manifold learning techniques reduces the effective dimensionality of the feature space, enhancing generalization and mitigating the negative impacts of the curse of dimensionality.

Effect on Model Complexity and Performance

The curse of dimensionality exponentially increases model complexity and degrades performance by causing sparse data distributions in high-dimensional spaces, leading to overfitting and poor generalization. The manifold hypothesis suggests that high-dimensional data lie on lower-dimensional manifolds, enabling models to learn more efficiently by focusing on relevant intrinsic features, reducing complexity and improving predictive accuracy. Leveraging manifold structures helps mitigate dimensionality issues, enhancing robustness and scalability in machine learning algorithms.

High-Dimensional Data: Challenges and Solutions

High-dimensional data often suffers from the curse of dimensionality, where the exponential increase in feature space volume leads to sparse data representations and degraded model performance. The manifold hypothesis addresses this by suggesting that high-dimensional data lies on low-dimensional manifolds, enabling more efficient learning through dimensionality reduction techniques such as t-SNE, UMAP, and autoencoders. Leveraging these approaches mitigates challenges like overfitting and computational complexity, improving model generalization and scalability in machine learning tasks.

Manifold Learning: Techniques and Applications

Manifold learning techniques such as Isomap, Locally Linear Embedding (LLE), and t-SNE address the curse of dimensionality by uncovering low-dimensional structures embedded in high-dimensional data. These methods leverage the manifold hypothesis, which assumes data lies on a smooth, lower-dimensional manifold, allowing for efficient representation and improved machine learning model performance. Applications of manifold learning span image recognition, natural language processing, and bioinformatics, where dimensionality reduction enhances visualization, clustering, and classification tasks.

Dimensionality Reduction: Overcoming the Curse

Dimensionality reduction techniques such as Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) effectively mitigate the curse of dimensionality by transforming high-dimensional data into lower-dimensional manifolds while preserving essential structural relationships. The manifold hypothesis posits that real-world high-dimensional data lies on low-dimensional manifolds embedded within the ambient space, enabling algorithms to learn meaningful patterns more efficiently. Leveraging this hypothesis, dimensionality reduction methods improve model generalization and computational efficiency by focusing on intrinsic data geometry instead of the full feature space.

Role in Deep Learning and Neural Networks

The curse of dimensionality refers to the exponential increase in data sparsity and computational complexity as feature dimensions grow, posing significant challenges in deep learning by leading to overfitting and inefficient training. The manifold hypothesis suggests that high-dimensional data in neural networks lies on low-dimensional manifolds, enabling models to learn meaningful representations by exploiting intrinsic data structures. Deep learning architectures leverage this hypothesis through techniques such as convolutional layers and autoencoders to reduce dimensionality, improve generalization, and mitigate the negative effects of the curse of dimensionality.

Future Directions: Bridging Data Complexity with Manifold Insights

Future directions in machine learning research emphasize bridging the curse of dimensionality with the manifold hypothesis by developing algorithms that exploit low-dimensional manifold structures within high-dimensional data. Techniques such as manifold learning, representation learning, and geometric deep learning enable more efficient data embedding, reducing computational complexity while preserving intrinsic data geometry. Integrating manifold insights with scalable methods promises advances in generalization, robustness, and interpretability across complex, high-dimensional datasets.

curse of dimensionality vs manifold hypothesis Infographic

techiny.com

techiny.com