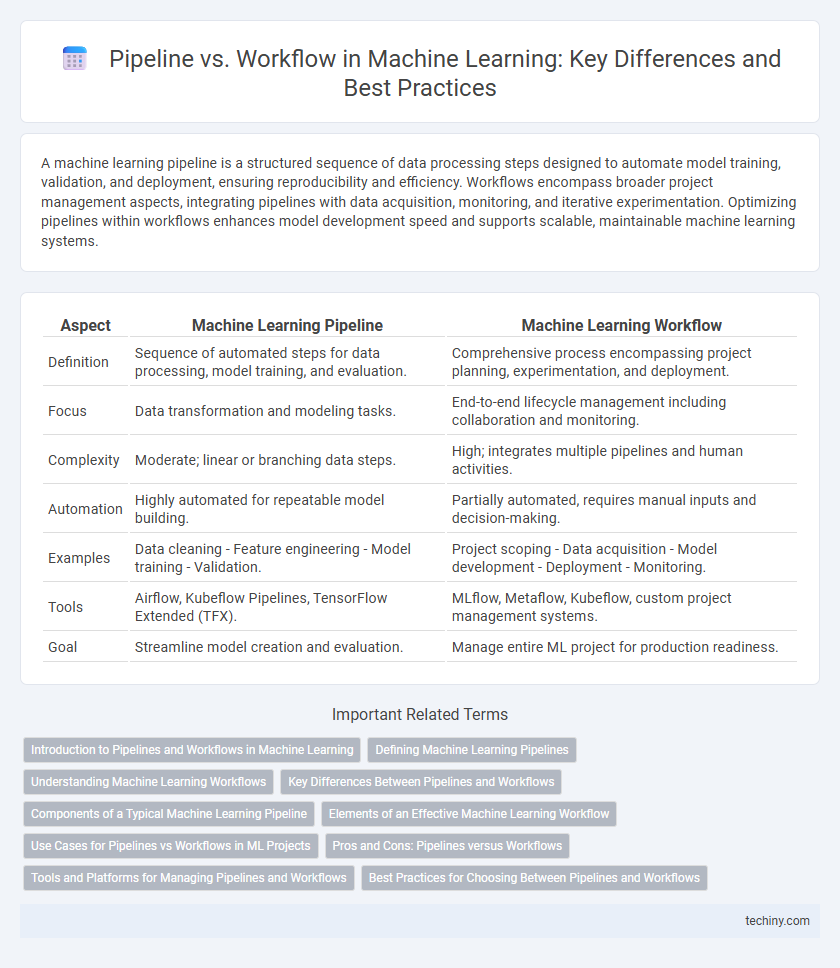

A machine learning pipeline is a structured sequence of data processing steps designed to automate model training, validation, and deployment, ensuring reproducibility and efficiency. Workflows encompass broader project management aspects, integrating pipelines with data acquisition, monitoring, and iterative experimentation. Optimizing pipelines within workflows enhances model development speed and supports scalable, maintainable machine learning systems.

Table of Comparison

| Aspect | Machine Learning Pipeline | Machine Learning Workflow |

|---|---|---|

| Definition | Sequence of automated steps for data processing, model training, and evaluation. | Comprehensive process encompassing project planning, experimentation, and deployment. |

| Focus | Data transformation and modeling tasks. | End-to-end lifecycle management including collaboration and monitoring. |

| Complexity | Moderate; linear or branching data steps. | High; integrates multiple pipelines and human activities. |

| Automation | Highly automated for repeatable model building. | Partially automated, requires manual inputs and decision-making. |

| Examples | Data cleaning - Feature engineering - Model training - Validation. | Project scoping - Data acquisition - Model development - Deployment - Monitoring. |

| Tools | Airflow, Kubeflow Pipelines, TensorFlow Extended (TFX). | MLflow, Metaflow, Kubeflow, custom project management systems. |

| Goal | Streamline model creation and evaluation. | Manage entire ML project for production readiness. |

Introduction to Pipelines and Workflows in Machine Learning

Pipelines in machine learning automate data preprocessing, feature engineering, model training, and evaluation steps to ensure reproducibility and streamline experiment cycles. Workflows encompass broader processes including data collection, model deployment, continuous integration, and monitoring, integrating both automated pipelines and manual interventions. Understanding the distinction between pipelines and workflows enhances efficient model development and operationalization.

Defining Machine Learning Pipelines

Machine learning pipelines consist of a sequence of automated steps that process data, train models, and deploy solutions, ensuring consistency and efficiency in model development. Unlike broader workflows, pipelines specifically emphasize the structured flow of data transformations and model training tasks, enabling reproducibility and scalability. Key components include data ingestion, preprocessing, feature engineering, model training, validation, and deployment, all integrated into a seamless, repeatable process.

Understanding Machine Learning Workflows

Machine learning workflows encompass the entire process from data collection and preprocessing to model training, evaluation, and deployment, ensuring a structured approach to building predictive models. Pipelines refer to specific, automated sequences within this workflow that handle tasks like feature engineering and model training, enabling reproducibility and efficiency. Understanding the distinction between workflows and pipelines is crucial for optimizing machine learning projects and streamlining model development cycles.

Key Differences Between Pipelines and Workflows

Pipelines in machine learning are sequential processes that automate data transformation and model training steps, ensuring efficient execution and reproducibility. Workflows encompass broader, often nonlinear orchestration of multiple pipelines, integrating tasks such as data preprocessing, feature engineering, model evaluation, and deployment within a single framework. The key difference lies in pipelines focusing on streamlined, linear task sequences, while workflows manage complex, interdependent tasks across various stages of the ML lifecycle.

Components of a Typical Machine Learning Pipeline

A typical machine learning pipeline consists of several key components including data collection, data preprocessing, feature engineering, model training, model evaluation, and deployment. Each stage ensures data flows systematically from raw input to actionable predictions, optimizing efficiency and reproducibility. Incorporating validation and monitoring mechanisms within the pipeline enhances model performance and detects data drift or degradation over time.

Elements of an Effective Machine Learning Workflow

An effective machine learning workflow integrates data preprocessing, model training, validation, and deployment phases to ensure seamless progression from raw data to actionable insights. Key elements include data ingestion, feature engineering, algorithm selection, hyperparameter tuning, and model evaluation, which collectively optimize performance and robustness. Incorporating automation tools and monitoring systems enhances reproducibility and real-time adaptability throughout the workflow lifecycle.

Use Cases for Pipelines vs Workflows in ML Projects

Pipelines in machine learning are optimized for automating sequential data processing tasks such as data preprocessing, feature extraction, and model training, ensuring reproducibility and efficiency in model development. Workflows provide a broader framework, managing complex orchestration of interdependent tasks including model evaluation, hyperparameter tuning, and deployment across distributed environments. Use cases for pipelines include streamlined ETL and model retraining, while workflows excel in coordinating multi-stage experiments and integration with continuous delivery systems.

Pros and Cons: Pipelines versus Workflows

Machine learning pipelines streamline data processing and model training through a sequential, automated series of steps, enhancing reproducibility and reducing human error but may lack flexibility for complex, parallel tasks. Workflows provide greater customization and orchestration capabilities, accommodating heterogeneous tasks and diverse tools but often require more manual configuration and complex management. Choosing between pipelines and workflows depends on the project's scale, complexity, and the need for automation versus adaptability.

Tools and Platforms for Managing Pipelines and Workflows

Kubeflow, Apache Airflow, and MLflow are leading platforms for managing machine learning pipelines and workflows, each offering unique features for orchestration, tracking, and deployment. Kubeflow excels in Kubernetes-native pipeline management, providing scalability and seamless integration with cloud environments. Apache Airflow offers a workflow orchestration engine with flexible scheduling and monitoring, while MLflow focuses on managing the machine learning lifecycle, including experiment tracking and model registry.

Best Practices for Choosing Between Pipelines and Workflows

Selecting between machine learning pipelines and workflows depends on project complexity, scalability, and team collaboration. Pipelines excel in automating sequential data processing and model training tasks, ensuring reproducibility and efficiency in well-defined, linear processes. Workflows offer flexibility to manage diverse, branching tasks and integrate human-in-the-loop interventions, making them ideal for complex projects requiring dynamic decision-making and iterative experimentation.

pipeline vs workflow Infographic

techiny.com

techiny.com