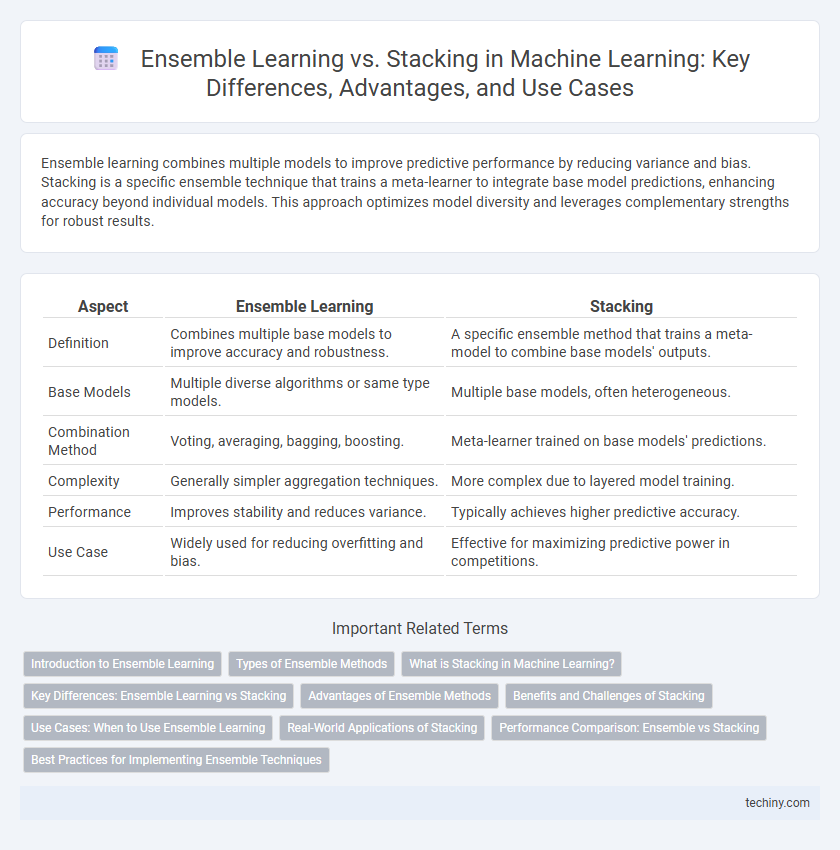

Ensemble learning combines multiple models to improve predictive performance by reducing variance and bias. Stacking is a specific ensemble technique that trains a meta-learner to integrate base model predictions, enhancing accuracy beyond individual models. This approach optimizes model diversity and leverages complementary strengths for robust results.

Table of Comparison

| Aspect | Ensemble Learning | Stacking |

|---|---|---|

| Definition | Combines multiple base models to improve accuracy and robustness. | A specific ensemble method that trains a meta-model to combine base models' outputs. |

| Base Models | Multiple diverse algorithms or same type models. | Multiple base models, often heterogeneous. |

| Combination Method | Voting, averaging, bagging, boosting. | Meta-learner trained on base models' predictions. |

| Complexity | Generally simpler aggregation techniques. | More complex due to layered model training. |

| Performance | Improves stability and reduces variance. | Typically achieves higher predictive accuracy. |

| Use Case | Widely used for reducing overfitting and bias. | Effective for maximizing predictive power in competitions. |

Introduction to Ensemble Learning

Ensemble learning combines multiple base models to improve predictive performance by leveraging their collective strengths and reducing individual weaknesses. Common ensemble methods include bagging, boosting, and stacking, each utilizing different strategies to aggregate model predictions. This approach enhances accuracy and robustness in machine learning tasks by mitigating overfitting and increasing generalization capabilities.

Types of Ensemble Methods

Ensemble learning techniques improve predictive performance by combining multiple models, with popular types including bagging, boosting, and stacking. Bagging methods like Random Forest create diverse models by training each on random subsets of data, reducing variance and overfitting. Stacking differs by training multiple base learners and using a meta-learner to optimally combine their predictions, leveraging the strengths of heterogeneous models for enhanced accuracy.

What is Stacking in Machine Learning?

Stacking in machine learning is an ensemble learning technique that combines multiple base models to improve predictive performance by training a meta-model on their outputs. Unlike simple averaging or voting methods, stacking leverages the strengths of diverse algorithms to reduce bias and variance. The meta-model learns how to best integrate base learner predictions, enhancing accuracy and robustness across various tasks.

Key Differences: Ensemble Learning vs Stacking

Ensemble learning combines multiple base models to improve overall performance by reducing variance, bias, or improving predictions through methods like bagging and boosting. Stacking is a specialized ensemble technique where predictions from base models serve as inputs to a meta-model, which learns to optimize the final output. The key difference lies in stacking's hierarchical structure using a meta-learner, while traditional ensemble methods aggregate base model outputs without an additional learning layer.

Advantages of Ensemble Methods

Ensemble learning improves predictive performance by combining multiple base models, reducing variance and bias compared to individual models. Methods like bagging enhance model stability, while boosting focuses on correcting errors from previous models, increasing overall accuracy. Stacking, a specific ensemble technique, leverages diverse algorithms to capture different data patterns, leading to superior generalization on complex datasets.

Benefits and Challenges of Stacking

Stacking enhances model performance by combining multiple base learners through a meta-learner, improving accuracy by leveraging diverse algorithms. Benefits include reduced overfitting and increased generalization, particularly in complex datasets. Challenges involve increased computational complexity, risk of data leakage, and the need for careful model selection and validation to prevent performance degradation.

Use Cases: When to Use Ensemble Learning

Ensemble learning is ideal for improving predictive performance in scenarios where individual models exhibit high variance or bias, such as in classification tasks involving imbalanced datasets or noisy data. It is widely used in industries like finance for credit scoring, healthcare for disease prediction, and marketing for customer segmentation, where accuracy and robustness are critical. Employing ensemble methods can enhance model stability and generalization when single models fail to capture complex patterns effectively.

Real-World Applications of Stacking

Stacking in ensemble learning combines multiple base models to improve predictive accuracy by leveraging their diverse strengths, making it highly effective in real-world applications like fraud detection, healthcare diagnosis, and credit scoring. This method outperforms individual models by training a meta-model to integrate predictions, enhancing robustness and generalization on complex datasets. Industries benefit from stacking's ability to reduce model bias and variance, resulting in more reliable outcomes in dynamic environments.

Performance Comparison: Ensemble vs Stacking

Ensemble learning combines multiple base models to improve prediction accuracy by reducing variance and bias, often leading to robust performance across diverse datasets. Stacking, a sophisticated ensemble technique, employs a meta-learner to integrate base model predictions, typically surpassing traditional averaging or voting methods in predictive performance. Empirical studies demonstrate stacking consistently achieves higher accuracy and lower error rates compared to standard ensemble methods, especially in complex machine learning tasks requiring model heterogeneity.

Best Practices for Implementing Ensemble Techniques

Ensemble learning improves predictive performance by combining multiple models, with stacking leveraging meta-models to learn optimal combinations of base learners. Best practices for implementing ensemble techniques include selecting diverse base models to reduce correlation, using cross-validation to prevent overfitting in stacking, and optimizing hyperparameters for both base and meta models. Balancing model complexity and computational efficiency ensures robust and scalable ensemble frameworks for real-world machine learning tasks.

Ensemble Learning vs Stacking Infographic

techiny.com

techiny.com