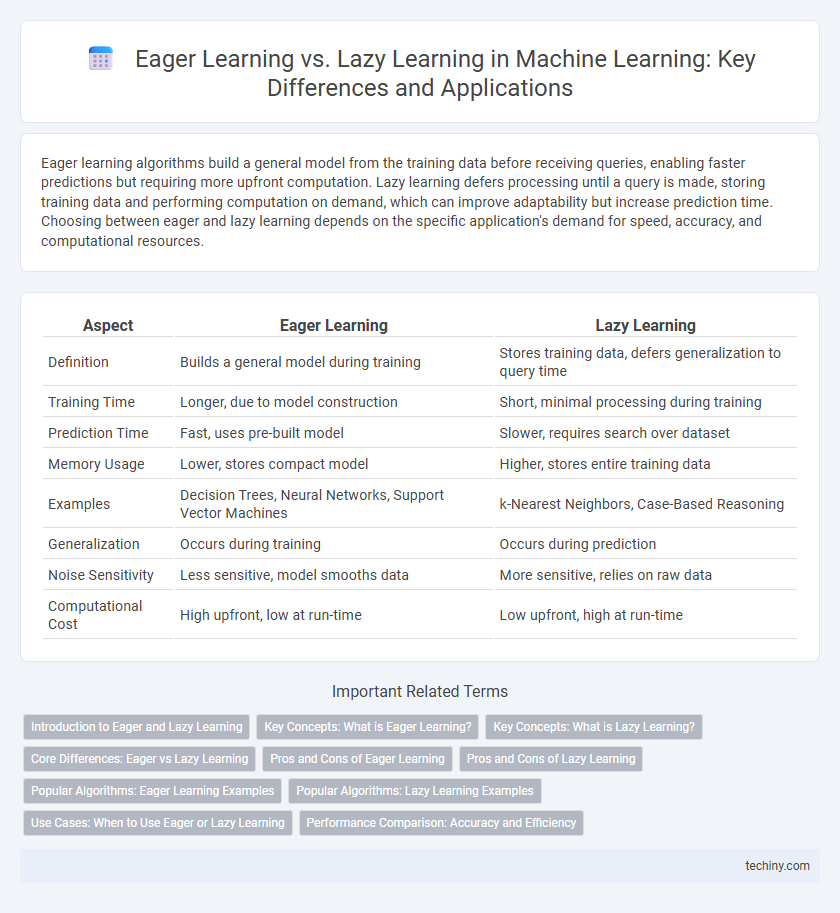

Eager learning algorithms build a general model from the training data before receiving queries, enabling faster predictions but requiring more upfront computation. Lazy learning defers processing until a query is made, storing training data and performing computation on demand, which can improve adaptability but increase prediction time. Choosing between eager and lazy learning depends on the specific application's demand for speed, accuracy, and computational resources.

Table of Comparison

| Aspect | Eager Learning | Lazy Learning |
|---|---|---|
| Definition | Builds a general model during training | Stores training data, defers generalization to query time |
| Training Time | Longer, due to model construction | Short, minimal processing during training |
| Prediction Time | Fast, uses pre-built model | Slower, requires search over dataset |
| Memory Usage | Lower, stores compact model | Higher, stores entire training data |
| Examples | Decision Trees, Neural Networks, Support Vector Machines | k-Nearest Neighbors, Case-Based Reasoning |
| Generalization | Occurs during training | Occurs during prediction |
| Noise Sensitivity | Less sensitive, model smooths data | More sensitive, relies on raw data |
| Computational Cost | High upfront, low at run-time | Low upfront, high at run-time |

Introduction to Eager and Lazy Learning

Eager learning builds a general model during the training phase, abstracting the input data into a function or decision boundary so that predictions are fast. Lazy learning defers generalization until a query is received: it stores the training instances and performs the computation at prediction time. The two approaches trade efficiency against adaptability. Eager learners suit static environments where the data distribution is stable, while lazy learners suit settings where the training data changes frequently and retraining a model would be costly.

Key Concepts: What is Eager Learning?

Eager learning refers to a machine learning approach where the model builds a generalization from the training data before receiving queries, enabling fast predictions during testing. Techniques such as decision trees, neural networks, and support vector machines exemplify eager learning by constructing a global model upfront. This method contrasts with lazy learning by investing computational effort early to produce a ready-to-use predictive model.
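To make the idea of building a model upfront concrete, here is a minimal sketch of an eager learner. It uses a nearest-centroid classifier, which is not one of the algorithms named above; it is chosen only because it is short enough to show the pattern. The class name `NearestCentroid` and the toy data are assumptions for illustration.

```python
from math import dist


class NearestCentroid:
    """Eager learner sketch: fit() compresses the training set into one centroid per class."""

    def fit(self, X, y):
        sums, counts = {}, {}
        for point, label in zip(X, y):
            acc = sums.setdefault(label, [0.0] * len(point))
            for i, value in enumerate(point):
                acc[i] += value
            counts[label] = counts.get(label, 0) + 1
        # The model is just the per-class means; the training rows are not kept.
        self.centroids = {
            label: [s / counts[label] for s in acc] for label, acc in sums.items()
        }
        return self

    def predict(self, point):
        # Query cost depends on the number of classes, not on the training set size.
        return min(self.centroids, key=lambda label: dist(point, self.centroids[label]))


# Hypothetical toy data: two well-separated clusters.
X = [(1.0, 1.0), (1.0, 2.0), (8.0, 8.0), (9.0, 8.0)]
y = ["a", "a", "b", "b"]
model = NearestCentroid().fit(X, y)
prediction = model.predict((2.0, 1.5))
```

All the generalization happens inside `fit`; `predict` only consults the compact model.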

Key Concepts: What is Lazy Learning?

Lazy learning is a machine learning approach where the model defers generalization until a query is made, storing training data without building an explicit model. It relies on instance-based methods such as k-Nearest Neighbors (k-NN) to classify new data points by comparing them directly with stored examples. This technique emphasizes minimal training time but incurs higher prediction latency due to on-demand computation.
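A minimal k-NN sketch illustrates the description above: `fit` only stores the instances, and the comparison with stored examples happens in `predict`. The class name `KNearestNeighbors`, the Euclidean distance metric, and the toy data are assumptions for illustration rather than a reference implementation.

```python
from collections import Counter
from math import dist


class KNearestNeighbors:
    """Lazy learner sketch: no model is built, training data is kept as-is."""

    def __init__(self, k=3):
        self.k = k

    def fit(self, X, y):
        # "Training" only stores the instances.
        self.X = list(X)
        self.y = list(y)
        return self

    def predict(self, point):
        # All the work happens at query time: rank every stored instance by distance.
        ranked = sorted(zip(self.X, self.y), key=lambda pair: dist(point, pair[0]))
        votes = Counter(label for _, label in ranked[: self.k])
        return votes.most_common(1)[0][0]


# Hypothetical toy data: two well-separated clusters.
X = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]
y = ["a", "a", "a", "b", "b", "b"]
knn = KNearestNeighbors(k=3).fit(X, y)
prediction = knn.predict((2.0, 2.0))
```

Sorting the full dataset on every query keeps the sketch short; it also makes the prediction-time cost of the approach easy to see.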

Core Differences: Eager vs Lazy Learning

Eager learning algorithms, such as neural networks and support vector machines, create a general model during the training phase, enabling faster predictions but requiring extensive upfront computation. Lazy learning methods, including k-nearest neighbors and case-based reasoning, delay generalization until query time, resulting in slower predictions but greater flexibility in adapting to new data. The core difference lies in when the model generalization occurs: eager learners build a model before seeing test data, while lazy learners perform minimal processing until prediction.

Pros and Cons of Eager Learning

Eager learning algorithms, such as decision trees and neural networks, build a generalized model during the training phase, enabling fast predictions on new data. Their main advantages are efficient real-time inference and reduced memory usage, since the model summarizes the training data. However, they can overfit when the model is too flexible for the data, they require substantial computation upfront, and they must be retrained or updated when the data distribution changes, which limits their adaptability.

Pros and Cons of Lazy Learning

Lazy learning algorithms, such as k-nearest neighbors, offer the advantage of quick training since they defer processing until query time, making them flexible and adaptable to new data without retraining. However, they face challenges with high computational cost during inference, increased memory requirements to store the entire dataset, and vulnerability to noise and irrelevant features, which can degrade prediction accuracy. The trade-off between training efficiency and prediction latency makes lazy learning suitable for applications with infrequent querying but less ideal for real-time or resource-constrained environments.
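The claim that a lazy learner adapts to new data without retraining can be sketched with a 1-nearest-neighbor lookup. The function name `predict_1nn` and the data points are hypothetical; the point is only that appending an instance changes the next prediction immediately.

```python
from math import dist

# Stored training instances as (features, label) pairs; hypothetical values.
stored = [((1.0, 1.0), "a"), ((9.0, 9.0), "b")]


def predict_1nn(point, instances):
    # Linear scan over every stored instance: this is the per-query cost.
    return min(instances, key=lambda pair: dist(point, pair[0]))[1]


query = (6.0, 6.0)
before = predict_1nn(query, stored)

# New data arrives: it is appended to the store, with no retraining step.
stored.append(((5.0, 5.0), "c"))
after = predict_1nn(query, stored)
```

The same property explains the noise sensitivity: a single mislabeled instance near the query would change the answer just as directly.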

Popular Algorithms: Eager Learning Examples

Well-known eager learning algorithms include Decision Trees, Support Vector Machines (SVM), and Neural Networks. Each builds a generalized model during the training phase by abstracting the underlying patterns in the data rather than storing the individual instances, which reduces computational cost at query time at the price of often extensive training. Eager learning excels in applications demanding rapid inference and structured model representations, distinguishing it from lazy learning methods like k-Nearest Neighbors (k-NN) that delay generalization until prediction.

Popular Algorithms: Lazy Learning Examples

Lazy learning algorithms, such as k-Nearest Neighbors (k-NN) and Locally Weighted Regression, delay the generalization process until a query is made, storing training data for instance-based reasoning. k-NN finds the closest stored examples to predict new data points, offering simplicity and effectiveness in classification and regression tasks without an explicit training phase. These algorithms excel in scenarios with dynamic data requiring flexible models but may suffer from high computational cost during prediction and sensitivity to noisy data.
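Locally weighted regression follows the same lazy pattern: nothing is fitted until a query arrives, and then a small model is fitted around that query only. The sketch below is a one-dimensional version under stated assumptions: a Gaussian kernel, a local straight-line fit, and a hypothetical `bandwidth` parameter controlling how local the fit is.

```python
from math import exp


def locally_weighted_predict(x_query, xs, ys, bandwidth=1.0):
    """Fit a weighted straight line around x_query and evaluate it there."""
    # Gaussian kernel: training points near the query get weights close to 1.
    weights = [exp(-((x - x_query) ** 2) / (2 * bandwidth ** 2)) for x in xs]
    total = sum(weights)
    x_mean = sum(w * x for w, x in zip(weights, xs)) / total
    y_mean = sum(w * y for w, y in zip(weights, ys)) / total
    # Closed-form weighted least squares for the local slope.
    sxx = sum(w * (x - x_mean) ** 2 for w, x in zip(weights, xs))
    sxy = sum(w * (x - x_mean) * (y - y_mean) for w, x, y in zip(weights, xs, ys))
    slope = sxy / sxx if sxx > 0 else 0.0
    return y_mean + slope * (x_query - x_mean)


# Hypothetical data sampled from y = x^2, which no single global line fits well.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [x * x for x in xs]
estimate = locally_weighted_predict(2.5, xs, ys, bandwidth=0.5)
```

Every call recomputes the weights and the fit from the stored data, so the cost per query grows with the number of training points, as with k-NN.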

Use Cases: When to Use Eager or Lazy Learning

Eager learning models, such as neural networks and support vector machines, are well suited to scenarios requiring fast prediction times and consistent performance on large, well-labeled datasets, such as image classification or medical diagnosis. Lazy learning techniques, including k-nearest neighbors and case-based reasoning, fit environments where the data evolves and new examples must be usable immediately, such as recommendation systems, provided the stored dataset is small enough to meet latency requirements. Because lazy learners are sensitive to noise, they work best when the stored instances are reasonably clean. Selecting between eager and lazy learning depends on computational resources, data size, and how often the model must be updated during deployment.

Performance Comparison: Accuracy and Efficiency

Eager learning models, such as decision trees and neural networks, typically provide faster prediction times due to their pre-trained structure, resulting in higher efficiency during inference compared to lazy learning methods like k-nearest neighbors (k-NN). However, lazy learning algorithms often achieve competitive accuracy by adapting to local data variations at query time, making them advantageous in dynamic or smaller datasets. The trade-off between accuracy and efficiency depends heavily on dataset size, feature dimensionality, and application requirements, with eager learning excelling in large-scale, real-time scenarios and lazy learning favoring flexibility and simplicity.
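The efficiency side of this trade-off can be sketched by counting distance evaluations per query instead of measuring wall-clock time. The example below compares a 1-NN lookup with a nearest-centroid model on synthetic one-dimensional data; the function name `count_distance_evals`, the data generator, and the choice of nearest centroid as the eager stand-in are all assumptions for illustration.

```python
from math import dist


def count_distance_evals(n_train, n_classes=2):
    """Return (lazy_evals, eager_evals, lazy_label, eager_label) for one query."""
    # Synthetic 1-D data (an assumption for illustration): class c sits near 10 * c.
    X = [(10.0 * (i % n_classes) + (i % 7) * 0.1,) for i in range(n_train)]
    y = [i % n_classes for i in range(n_train)]
    query = (0.3,)

    # Lazy (1-NN): one distance evaluation per stored instance, at query time.
    lazy_evals, best_dist, lazy_label = 0, float("inf"), None
    for point, label in zip(X, y):
        lazy_evals += 1
        d = dist(query, point)
        if d < best_dist:
            best_dist, lazy_label = d, label

    # Eager (nearest centroid): centroids are computed once, before any query.
    centroids = {}
    for c in range(n_classes):
        members = [p[0] for p, label in zip(X, y) if label == c]
        centroids[c] = (sum(members) / len(members),)

    # At query time, one distance evaluation per class.
    eager_evals, best_dist, eager_label = 0, float("inf"), None
    for label, centroid in centroids.items():
        eager_evals += 1
        d = dist(query, centroid)
        if d < best_dist:
            best_dist, eager_label = d, label

    return lazy_evals, eager_evals, lazy_label, eager_label


small = count_distance_evals(100)
large = count_distance_evals(10_000)
```

On this easy dataset both approaches return the same label, but the lazy query cost grows with the training set while the eager query cost stays fixed at the number of classes.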


techiny.com
