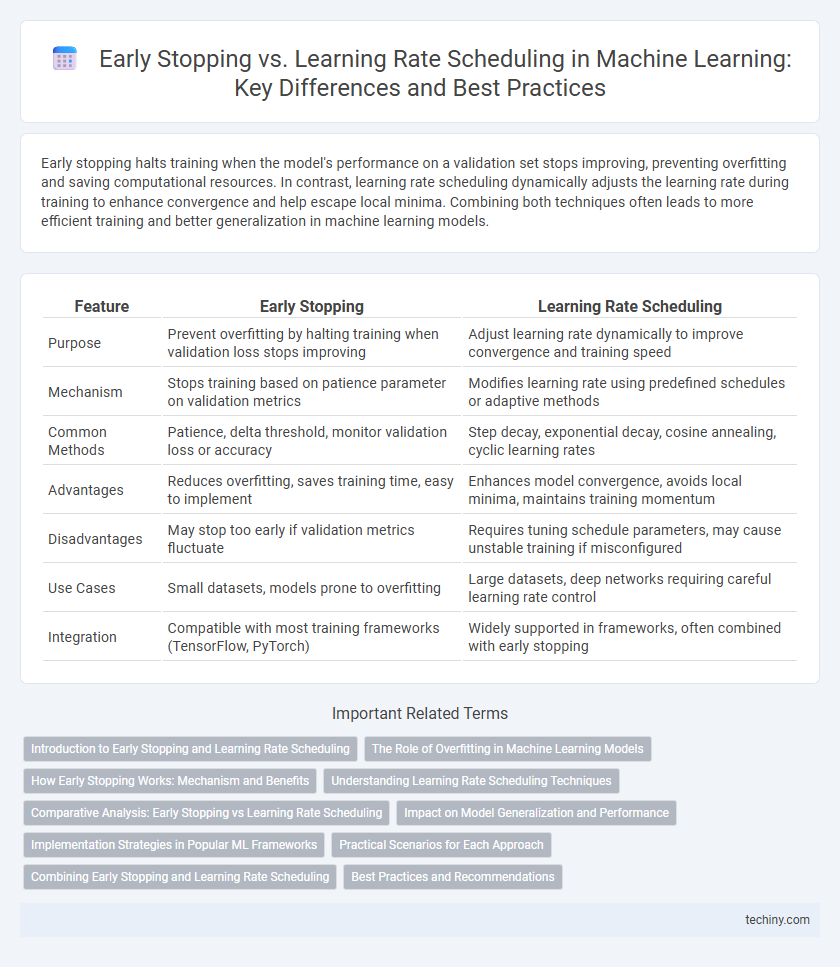

Early stopping halts training when the model's performance on a validation set stops improving, preventing overfitting and saving computational resources. In contrast, learning rate scheduling dynamically adjusts the learning rate during training to enhance convergence and help escape local minima. Combining both techniques often leads to more efficient training and better generalization in machine learning models.

Table of Comparison

| Feature | Early Stopping | Learning Rate Scheduling |
|---|---|---|
| Purpose | Prevent overfitting by halting training when validation loss stops improving | Adjust learning rate dynamically to improve convergence and training speed |
| Mechanism | Stops training based on patience parameter on validation metrics | Modifies learning rate using predefined schedules or adaptive methods |
| Common Methods | Patience, delta threshold, monitor validation loss or accuracy | Step decay, exponential decay, cosine annealing, cyclic learning rates |
| Advantages | Reduces overfitting, saves training time, easy to implement | Enhances model convergence, avoids local minima, maintains training momentum |
| Disadvantages | May stop too early if validation metrics fluctuate | Requires tuning schedule parameters, may cause unstable training if misconfigured |
| Use Cases | Small datasets, models prone to overfitting | Large datasets, deep networks requiring careful learning rate control |
| Integration | Compatible with most training frameworks (TensorFlow, PyTorch) | Widely supported in frameworks, often combined with early stopping |

Introduction to Early Stopping and Learning Rate Scheduling

Early stopping is a regularization technique in machine learning that halts training when the model's performance on a validation set stops improving, preventing overfitting and saving computational resources. Learning rate scheduling adjusts the learning rate during training, typically decreasing it to fine-tune model convergence and improve accuracy. Both methods optimize the training process, with early stopping controlling when to stop and learning rate scheduling controlling how fast the model learns.

The Role of Overfitting in Machine Learning Models

Early stopping halts training once validation error increases, directly preventing overfitting by stopping the model before it starts to memorize noise. Learning rate scheduling adjusts the step size during training to improve convergence but does not inherently stop overfitting, often requiring additional regularization techniques. Overfitting occurs when models capture noise rather than underlying patterns, making early stopping a more targeted method to mitigate this issue in machine learning models.

How Early Stopping Works: Mechanism and Benefits

Early stopping monitors model performance on a validation set during training and halts the process when improvement plateaus, which limits overfitting. It also reduces training time by skipping epochs that no longer improve generalization. When the parameters from the best-performing epoch are saved and restored, the final model is usually more robust and often more accurate than one trained for a fixed number of epochs.
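The mechanism can be sketched in a few lines of plain Python. This is a minimal illustration, not any framework's implementation: the `EarlyStopper` class, its `patience` and `min_delta` parameters, and the validation losses are all made up for the example, and the `params` dictionary stands in for real model weights.

```python
import copy


class EarlyStopper:
    """Tracks a validation loss and signals when to stop (hypothetical helper)."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience      # epochs to wait without improvement
        self.min_delta = min_delta    # smallest decrease that counts as improvement
        self.best_loss = float("inf")
        self.best_epoch = None
        self.best_params = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss, params):
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.best_params = copy.deepcopy(params)  # snapshot of the best model
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Simulated validation losses: improve, then drift upward (made-up numbers).
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.53, 0.56, 0.60]

stopper = EarlyStopper(patience=3, min_delta=0.01)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    params = {"epoch_trained": epoch}  # stand-in for real model weights
    if stopper.step(epoch, loss, params):
        stopped_at = epoch
        break

print(f"stopped at epoch {stopped_at}, best epoch {stopper.best_epoch}")
```

Keeping a copy of the best parameters matters because the stop signal arrives `patience` epochs after the best epoch, so the weights in memory at that point are no longer the best ones.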

Understanding Learning Rate Scheduling Techniques

Learning rate scheduling techniques adjust the learning rate during training to improve convergence. Common methods include step decay, exponential decay, and cosine annealing, each of which sets the learning rate as a function of the epoch or iteration count so that updates are large early in training and smaller as the model approaches a minimum. These schedules complement early stopping: they control how training progresses, while early stopping decides when it ends based on validation metrics.
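The three schedules named here can each be written as a small function of the epoch. The sketch below uses common textbook forms; the function names and default values (`drop`, `epochs_per_drop`, `decay_rate`, `min_lr`) are illustrative assumptions, not settings taken from any particular library.

```python
import math


def step_decay(base_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the rate by `drop` once every `epochs_per_drop` epochs."""
    return base_lr * drop ** (epoch // epochs_per_drop)


def exponential_decay(base_lr, epoch, decay_rate=0.05):
    """Shrink the rate smoothly by a factor exp(-decay_rate) per epoch."""
    return base_lr * math.exp(-decay_rate * epoch)


def cosine_annealing(base_lr, epoch, total_epochs, min_lr=0.0):
    """Follow half a cosine wave from base_lr down to min_lr."""
    cos = 0.5 * (1 + math.cos(math.pi * epoch / total_epochs))
    return min_lr + (base_lr - min_lr) * cos


# Compare the schedules at a few epochs of a 50-epoch run.
for epoch in (0, 10, 25, 50):
    print(
        epoch,
        round(step_decay(0.1, epoch), 5),
        round(exponential_decay(0.1, epoch), 5),
        round(cosine_annealing(0.1, epoch, total_epochs=50), 5),
    )
```

Step decay changes the rate in abrupt jumps, exponential decay shrinks it by a constant ratio each epoch, and cosine annealing stays near the base rate early on before dropping quickly through the middle of the run.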

Comparative Analysis: Early Stopping vs Learning Rate Scheduling

Early stopping halts training once the model's performance on validation data stops improving or begins to deteriorate, which limits overfitting, while learning rate scheduling adjusts the learning rate to improve convergence speed and final accuracy. Early stopping favors generalization by ending training near the best validation point, whereas decaying schedules reduce the step size so that gradient updates stay stable late in training; cyclic schedules and warm restarts periodically raise the rate again, which can help the optimizer move out of poor local minima. Combining both techniques can improve training efficiency and model robustness, because one controls how long training runs and the other controls how quickly the model learns.

Impact on Model Generalization and Performance

Early stopping prevents overfitting by halting training once the validation loss stops improving, directly enhancing model generalization. Learning rate scheduling adjusts the learning rate dynamically during training, enabling the model to converge more efficiently and potentially achieve better performance by escaping local minima. Combining both techniques often results in improved robustness and accuracy across diverse datasets in machine learning models.

Implementation Strategies in Popular ML Frameworks

Early stopping and learning rate scheduling are available in popular machine learning frameworks such as TensorFlow and PyTorch through built-in callbacks and scheduler classes. TensorFlow's Keras API provides the `EarlyStopping` callback, which monitors a validation metric and halts training, and the `LearningRateScheduler` callback, which sets the learning rate each epoch from a user-supplied function. PyTorch offers scheduler classes in `torch.optim.lr_scheduler`, while early stopping is typically written by hand in the training loop. Used well, these tools improve convergence and limit overfitting by either terminating training early or adjusting the learning rate throughout training.
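As an illustration of the PyTorch side, the sketch below pairs `torch.optim.lr_scheduler.StepLR` with hand-written early stopping in a training loop. The toy regression data, the model, and the values chosen for `patience`, `min_delta`, `step_size`, and `gamma` are assumptions made for the example, not recommended settings.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Toy regression data (hypothetical): y = 3x + noise.
x_train = torch.randn(64, 1)
y_train = 3 * x_train + 0.1 * torch.randn(64, 1)
x_val = torch.randn(32, 1)
y_val = 3 * x_val + 0.1 * torch.randn(32, 1)

model = nn.Linear(1, 1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
# Halve the learning rate every 10 epochs.
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.5)

patience, min_delta = 5, 1e-4  # illustrative early-stopping settings
best_val, best_state, bad_epochs = float("inf"), None, 0
epochs_run = 0

for epoch in range(200):
    # One full-batch training step.
    model.train()
    optimizer.zero_grad()
    loss_fn(model(x_train), y_train).backward()
    optimizer.step()
    scheduler.step()  # advance the schedule once per epoch

    # Evaluate on the validation set without tracking gradients.
    model.eval()
    with torch.no_grad():
        val_loss = loss_fn(model(x_val), y_val).item()
    epochs_run = epoch + 1

    # Manual early stopping: remember the best weights, count stalled epochs.
    if val_loss < best_val - min_delta:
        best_val, bad_epochs = val_loss, 0
        best_state = {k: v.clone() for k, v in model.state_dict().items()}
    else:
        bad_epochs += 1
        if bad_epochs >= patience:
            break

model.load_state_dict(best_state)  # restore the best checkpoint
print(f"ran {epochs_run} epochs, best validation loss {best_val:.4f}")
```

In Keras the same pairing is usually expressed by passing both callbacks to `model.fit` rather than writing the loop by hand.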

Practical Scenarios for Each Approach

Early stopping is effective in practical scenarios where overfitting occurs quickly, as it halts training once validation performance degrades, saving computational resources. Learning rate scheduling excels in environments requiring gradual convergence, adjusting the learning rate dynamically to avoid local minima and improve accuracy. Combining early stopping with learning rate schedules often yields robustness in training deep neural networks, balancing speed and precision.

Combining Early Stopping and Learning Rate Scheduling

Combining early stopping and learning rate scheduling enhances model training efficiency by preventing overfitting while optimizing convergence speed. Early stopping monitors validation loss to halt training when improvements plateau, whereas learning rate scheduling dynamically adjusts the learning rate to maintain effective gradient descent steps. Utilizing both techniques simultaneously allows for adaptive training control, improving generalization and reducing training time in machine learning models.
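One common way to combine the two is to cut the learning rate when validation loss stalls and to stop only if the stall continues after the cuts. The sketch below shows just that control logic on simulated losses. The function name, its parameters, and the loss values are hypothetical, and in real training the losses would respond to the lower learning rate rather than being fixed in advance.

```python
def run_training(val_losses, base_lr=0.1, lr_patience=2, stop_patience=5,
                 factor=0.5, min_delta=1e-3):
    """Cut the LR on short stalls, stop on long ones (illustrative sketch)."""
    lr = base_lr
    best = float("inf")
    since_best = 0  # epochs since the last meaningful improvement
    history = []
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, since_best = loss, 0
        else:
            since_best += 1
            # Every `lr_patience` stalled epochs, shrink the learning rate.
            if since_best % lr_patience == 0:
                lr *= factor
        history.append((epoch, loss, lr))
        # A stall of `stop_patience` epochs ends training.
        if since_best >= stop_patience:
            break
    return history, best


# Made-up validation losses with two plateaus.
losses = [1.0, 0.8, 0.7, 0.71, 0.70, 0.65, 0.66, 0.66, 0.67, 0.66, 0.68, 0.60]
history, best = run_training(losses)
for epoch, loss, lr in history:
    print(epoch, loss, lr)
```

Setting `stop_patience` larger than `lr_patience` gives each learning rate cut a few epochs to take effect before early stopping gives up on the run.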

Best Practices and Recommendations

Early stopping effectively prevents overfitting by halting training once validation performance stagnates, making it essential for models prone to noise and complex data. Learning rate scheduling, such as cosine annealing or step decay, optimizes convergence speed and helps escape local minima by gradually adjusting the learning rate during training. Combining early stopping with adaptive learning rate schedulers enhances model generalization, reduces training time, and is recommended for achieving robust performance on diverse datasets.

techiny.com
