K-Means clustering partitions data into a predefined number of clusters by minimizing variance within each cluster, making it efficient for large datasets with spherical cluster shapes. Hierarchical clustering builds a tree-like structure of nested clusters without needing a preset number of clusters, enabling more flexibility to capture complex data relationships. The choice between K-Means and hierarchical clustering depends on dataset size, cluster shape, and the need for interpretability in clustering results.

Table of Comparison

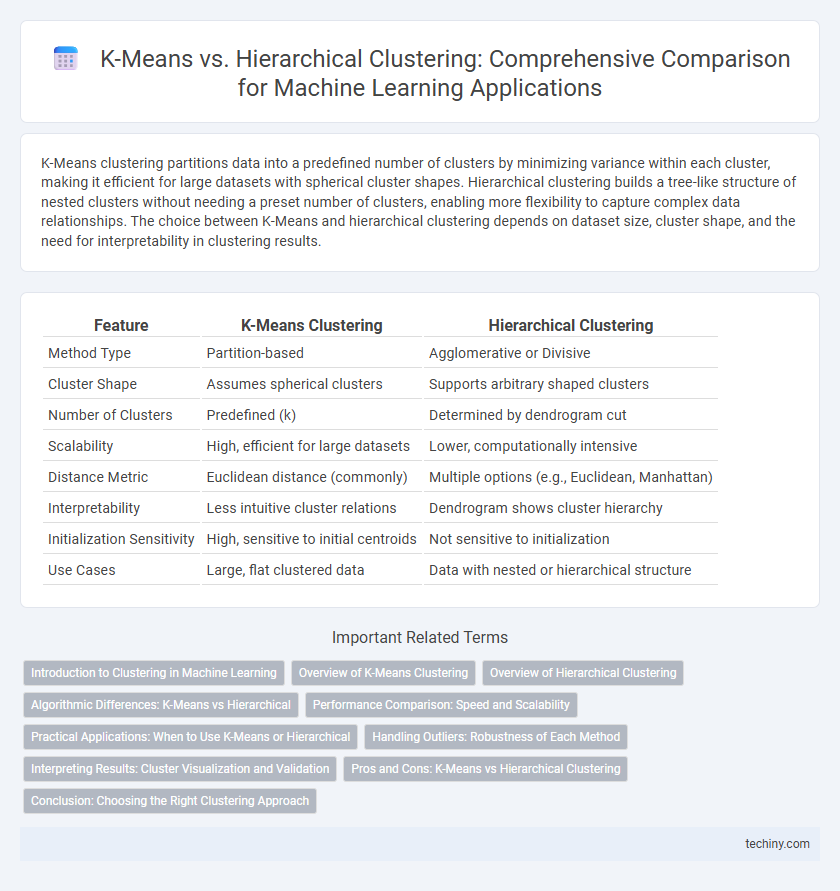

| Feature | K-Means Clustering | Hierarchical Clustering |

|---|---|---|

| Method Type | Partition-based | Agglomerative or Divisive |

| Cluster Shape | Assumes spherical clusters | Supports arbitrary shaped clusters |

| Number of Clusters | Predefined (k) | Determined by dendrogram cut |

| Scalability | High, efficient for large datasets | Lower, computationally intensive |

| Distance Metric | Euclidean distance (commonly) | Multiple options (e.g., Euclidean, Manhattan) |

| Interpretability | Less intuitive cluster relations | Dendrogram shows cluster hierarchy |

| Initialization Sensitivity | High, sensitive to initial centroids | Not sensitive to initialization |

| Use Cases | Large, flat clustered data | Data with nested or hierarchical structure |

Introduction to Clustering in Machine Learning

K-Means and Hierarchical Clustering are fundamental algorithms used in unsupervised machine learning for grouping data points based on similarity. K-Means partitions the dataset into a predefined number of clusters by minimizing the variance within each cluster, making it efficient for large datasets. Hierarchical Clustering builds nested clusters through agglomerative or divisive techniques, producing a dendrogram that reveals data structure at multiple granularity levels.

Overview of K-Means Clustering

K-Means clustering partitions data into k distinct clusters by minimizing the variance within each cluster, using an iterative algorithm that assigns points to the nearest centroid and updates centroids accordingly. It is computationally efficient for large datasets and performs well when clusters are spherical and evenly sized. Common applications include customer segmentation, image compression, and anomaly detection in machine learning.

Overview of Hierarchical Clustering

Hierarchical clustering builds nested clusters by either agglomerative (bottom-up) or divisive (top-down) approaches, creating a dendrogram that visually represents cluster relationships at various granularity levels. It does not require a predefined number of clusters, allowing more flexibility compared to K-Means, which depends on specifying cluster count beforehand. This method excels in capturing complex data structures and varying cluster sizes but can be computationally intensive for large datasets.

Algorithmic Differences: K-Means vs Hierarchical

K-Means clustering partitions data into k distinct non-overlapping clusters by minimizing within-cluster variance through iterative centroid recalculation, offering computational efficiency for large datasets. Hierarchical clustering builds a multilevel cluster tree (dendrogram) by either agglomerative bottom-up merging or divisive top-down splitting, enabling analysis of data structure without predefined cluster numbers. Unlike K-Means which requires specifying cluster count upfront, hierarchical clustering provides a richer representation of data relationships but is computationally more intensive, especially for large datasets.

Performance Comparison: Speed and Scalability

K-Means outperforms Hierarchical Clustering significantly in speed, especially on large datasets with high dimensionality, due to its iterative centroid updating mechanism. Hierarchical Clustering's time complexity, typically O(n3), limits scalability for big data, making it computationally expensive compared to the O(nkt) complexity of K-Means, where n is data points, k is clusters, and t is iterations. For applications requiring quick clustering and scalability to millions of samples, K-Means remains the preferred algorithm, while Hierarchical Clustering is more suitable for smaller datasets where interpretability of nested clusters is critical.

Practical Applications: When to Use K-Means or Hierarchical

K-Means clustering is ideal for large datasets with a predefined number of clusters, offering fast and scalable performance suitable for market segmentation and image compression. Hierarchical clustering excels in smaller datasets where the cluster number is unknown, providing detailed dendrograms useful in gene expression analysis and customer relationship management. Choosing between K-Means and Hierarchical depends on data size, desired cluster quantity, and the need for detailed cluster relationships.

Handling Outliers: Robustness of Each Method

K-Means clustering tends to be sensitive to outliers as they can distort the centroids, leading to less accurate cluster assignments. Hierarchical clustering, using methods like single linkage or complete linkage, often provides more robustness to outliers by considering proximity between individual points or clusters. Outlier handling in hierarchical clustering varies based on the linkage criterion, with average linkage offering a balanced sensitivity compared to K-Means.

Interpreting Results: Cluster Visualization and Validation

K-Means clustering produces distinct, non-overlapping clusters best visualized using scatter plots with clear centroids, facilitating straightforward interpretation of cluster boundaries. Hierarchical clustering offers dendrograms that reveal nested cluster relationships, providing insights into cluster similarity at various levels of granularity. Validation metrics like silhouette scores and Davies-Bouldin index assess cluster cohesion and separation in both methods, ensuring robust interpretation and model reliability in machine learning applications.

Pros and Cons: K-Means vs Hierarchical Clustering

K-Means clustering excels in scalability and efficiency, handling large datasets with lower computational complexity, but it requires predefining the number of clusters and performs poorly with non-spherical cluster shapes. Hierarchical clustering generates a dendrogram representing data relationships without preset cluster counts, enabling flexible cluster selection, though it suffers from high computational costs and limited scalability for large datasets. K-Means is preferred for quick partitioning in big data scenarios, while hierarchical clustering suits smaller datasets requiring detailed cluster structure analysis.

Conclusion: Choosing the Right Clustering Approach

K-Means clustering is ideal for large datasets with clearly defined, spherical clusters due to its scalability and efficiency. Hierarchical clustering excels in smaller datasets requiring a detailed dendrogram for understanding nested groupings and complex cluster shapes. Selecting the appropriate method depends on dataset size, cluster shape, and the need for interpretability in the clustering results.

K-Means vs Hierarchical Clustering Infographic

techiny.com

techiny.com