Feature scaling adjusts the range of data features to a standard scale, typically between 0 and 1 or -1 and 1, improving the performance of machine learning algorithms that rely on distance metrics. Feature normalization transforms data to have a mean of zero and a standard deviation of one, which stabilizes the variance and accelerates the convergence of gradient-based methods. Both techniques are essential for preprocessing, but the choice depends on the specific algorithm and the data distribution.

Table of Comparison

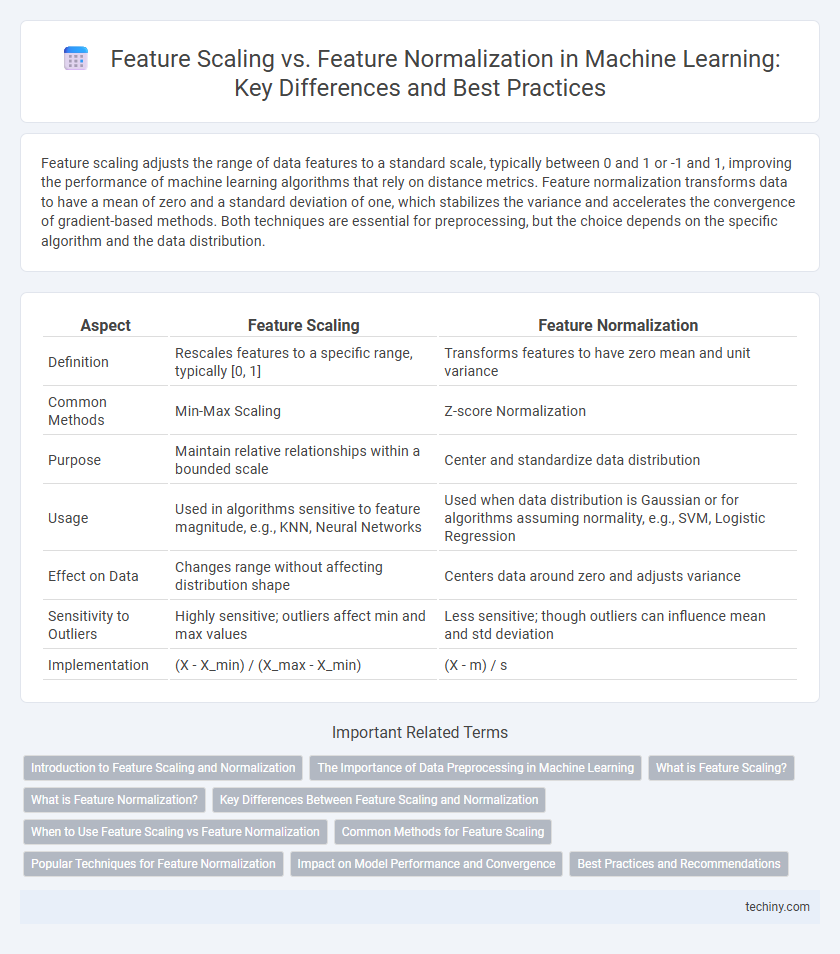

| Aspect | Feature Scaling | Feature Normalization |

|---|---|---|

| Definition | Rescales features to a specific range, typically [0, 1] | Transforms features to have zero mean and unit variance |

| Common Methods | Min-Max Scaling | Z-score Normalization |

| Purpose | Maintain relative relationships within a bounded scale | Center and standardize data distribution |

| Usage | Used in algorithms sensitive to feature magnitude, e.g., KNN, Neural Networks | Used when data distribution is Gaussian or for algorithms assuming normality, e.g., SVM, Logistic Regression |

| Effect on Data | Changes range without affecting distribution shape | Centers data around zero and adjusts variance |

| Sensitivity to Outliers | Highly sensitive; outliers affect min and max values | Less sensitive; though outliers can influence mean and std deviation |

| Implementation | (X - X_min) / (X_max - X_min) | (X - m) / s |

Introduction to Feature Scaling and Normalization

Feature scaling and feature normalization are essential preprocessing techniques in machine learning to ensure that data attributes contribute equally during model training. Feature scaling typically involves rescaling data to a fixed range, such as 0 to 1, using methods like min-max scaling, while feature normalization often refers to adjusting data to have a mean of zero and unit variance via techniques like z-score normalization. Proper implementation of these methods helps improve algorithm convergence speed, accuracy, and stability, especially in distance-based models and gradient descent optimization.

The Importance of Data Preprocessing in Machine Learning

Data preprocessing in machine learning significantly enhances model performance by ensuring consistent and comparable feature values through techniques like feature scaling and feature normalization. Feature scaling transforms data to a specific range, such as Min-Max scaling between 0 and 1, improving convergence in gradient-based algorithms. Feature normalization standardizes data to have a mean of zero and a standard deviation of one, which is crucial for algorithms sensitive to the variance of input features.

What is Feature Scaling?

Feature scaling is a preprocessing technique in machine learning that adjusts the range of independent variables or features to a standard scale without distorting differences in the ranges of values. Common methods for feature scaling include Min-Max scaling, which transforms features to a fixed range usually between 0 and 1, and Standardization, which centers features around the mean and scales to unit variance. This process improves the performance of gradient-based algorithms and distance-based models by ensuring that features contribute equally to the model training.

What is Feature Normalization?

Feature normalization is a preprocessing technique in machine learning that adjusts the range and distribution of feature values to improve model performance. It typically involves rescaling features to a common scale, such as transforming data to have zero mean and unit variance using methods like z-score normalization. This process helps algorithms converge faster and enhances the accuracy of models sensitive to feature scale, including gradient descent-based methods and distance-based classifiers.

Key Differences Between Feature Scaling and Normalization

Feature scaling typically refers to transforming features to a specific range, such as 0 to 1 in min-max scaling, while feature normalization standardizes features to have a mean of zero and a standard deviation of one. Feature scaling preserves the original distribution of data but compresses or stretches it to a desired range, whereas normalization adjusts data to follow a standard normal distribution. These key differences impact algorithm performance, as scaling is often preferred for algorithms sensitive to magnitude, like k-nearest neighbors, whereas normalization benefits algorithms assuming normally distributed inputs, such as logistic regression and neural networks.

When to Use Feature Scaling vs Feature Normalization

Feature scaling is essential when algorithms rely on distance measurements, such as k-nearest neighbors or support vector machines, where features need a consistent scale to avoid bias. Feature normalization is preferred in neural networks or gradient-based methods to accelerate convergence by ensuring input features have a mean of zero and unit variance. Choosing between feature scaling and feature normalization depends on the algorithm's sensitivity to feature magnitude and the data distribution characteristics.

Common Methods for Feature Scaling

Common methods for feature scaling in machine learning include Min-Max scaling, which transforms features to a fixed range typically between 0 and 1, and Standardization, which rescales features to have a mean of zero and a standard deviation of one. Robust scaling uses the median and interquartile range, making it effective for datasets with outliers. These scaling techniques improve the convergence of gradient-based algorithms and enhance model performance by ensuring all features contribute equally.

Popular Techniques for Feature Normalization

Popular techniques for feature normalization include Min-Max Scaling, which adjusts data to a fixed range typically between 0 and 1, and Z-Score Normalization that standardizes features based on mean and standard deviation, resulting in transformed values with zero mean and unit variance. Decimal Scaling and Robust Scaling are also widely used, with Decimal Scaling normalizing by moving the decimal point of values and Robust Scaling utilizing the median and interquartile range to reduce the impact of outliers. These methods improve model performance and training stability by ensuring that features contribute proportionally to the learning process.

Impact on Model Performance and Convergence

Feature scaling and feature normalization significantly impact model performance and convergence by ensuring that input data features operate on a comparable scale, which prevents biased weighting during algorithm training. Scaling methods like min-max scaling maintain the original data distribution, improving convergence speed in gradient-based algorithms, while normalization re-centers and rescales feature vectors to unit norm, enhancing performance in distance-based models like k-nearest neighbors and support vector machines. Models experience faster convergence and improved accuracy when features are appropriately scaled or normalized, reducing the risk of exploding or vanishing gradients in neural networks.

Best Practices and Recommendations

Feature scaling and feature normalization are essential preprocessing techniques in machine learning to ensure model convergence and stable performance. Best practices recommend using Min-Max scaling when preserving the range of data is critical and Standardization (z-score normalization) for algorithms sensitive to mean and variance, such as Support Vector Machines and Principal Component Analysis. Avoid applying scaling on categorical data and always fit the scaler on training data only to prevent data leakage and ensure model generalization.

feature scaling vs feature normalization Infographic

techiny.com

techiny.com