Hyperparameter tuning involves selecting the best configuration of external settings that govern the learning process, such as learning rate or batch size, to improve model performance. Parameter optimization focuses on adjusting internal weights and biases within a model during training to minimize error. Effective machine learning relies on a careful balance between hyperparameter tuning and parameter optimization to achieve optimal results.

Table of Comparison

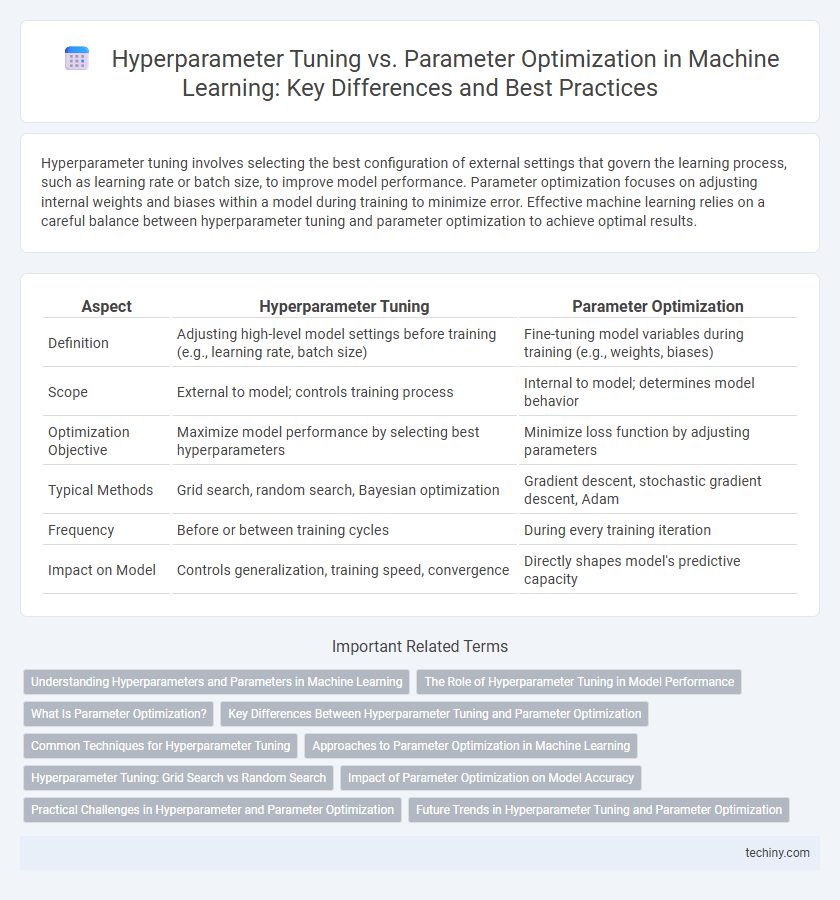

| Aspect | Hyperparameter Tuning | Parameter Optimization |

|---|---|---|

| Definition | Adjusting high-level model settings before training (e.g., learning rate, batch size) | Fine-tuning model variables during training (e.g., weights, biases) |

| Scope | External to model; controls training process | Internal to model; determines model behavior |

| Optimization Objective | Maximize model performance by selecting best hyperparameters | Minimize loss function by adjusting parameters |

| Typical Methods | Grid search, random search, Bayesian optimization | Gradient descent, stochastic gradient descent, Adam |

| Frequency | Before or between training cycles | During every training iteration |

| Impact on Model | Controls generalization, training speed, convergence | Directly shapes model's predictive capacity |

Understanding Hyperparameters and Parameters in Machine Learning

Hyperparameters in machine learning are external configurations set before the training process, such as learning rate, batch size, and number of layers, which control the overall behavior and performance of the model. Parameters, like weights and biases, are internal values learned by the model during training through optimization techniques such as gradient descent. Effective hyperparameter tuning enhances model accuracy by selecting the optimal hyperparameter values, while parameter optimization involves adjusting internal parameters to minimize the loss function.

The Role of Hyperparameter Tuning in Model Performance

Hyperparameter tuning is crucial for enhancing model performance by systematically adjusting external configurations like learning rate, batch size, or number of trees, which govern the training process without being learned from data. Unlike parameter optimization that aims to find the best internal model weights or coefficients, hyperparameter tuning controls the framework enabling effective learning and generalization. Efficient hyperparameter tuning techniques such as grid search, random search, and Bayesian optimization directly influence the model's accuracy, convergence speed, and robustness on unseen data.

What Is Parameter Optimization?

Parameter optimization in machine learning involves adjusting the internal parameters of a model, such as weights and biases in neural networks, to minimize the loss function and improve predictive accuracy. This process typically happens during training using algorithms like gradient descent, which iteratively update parameters based on error gradients. Unlike hyperparameter tuning, which adjusts external settings, parameter optimization directly refines the model's functional components to fit the training data.

Key Differences Between Hyperparameter Tuning and Parameter Optimization

Hyperparameter tuning involves selecting the best configuration of model settings such as learning rate, batch size, and number of epochs, which are set before training begins. Parameter optimization focuses on adjusting the internal weights and biases of a machine learning model during the training process to minimize loss functions. The key differences lie in hyperparameters being external, fixed variables controlling training behavior, while parameters are learned values updated iteratively within the model.

Common Techniques for Hyperparameter Tuning

Common techniques for hyperparameter tuning in machine learning include grid search, random search, and Bayesian optimization, each balancing exploration and computational efficiency differently. Grid search exhaustively evaluates predefined hyperparameter combinations, offering thorough but time-intensive results, while random search samples hyperparameter space randomly, often achieving comparable performance with fewer evaluations. Bayesian optimization employs surrogate models to predict promising hyperparameters, accelerating convergence to optimal values by focusing on regions with higher expected improvement.

Approaches to Parameter Optimization in Machine Learning

Approaches to parameter optimization in machine learning primarily involve techniques like grid search, random search, and Bayesian optimization, each designed to identify the best model settings for improved performance. Grid search exhaustively evaluates combinations of hyperparameters, while random search samples them stochastically, often yielding faster results. Bayesian optimization leverages probabilistic models to efficiently explore the hyperparameter space, adapting based on previous trials to optimize model accuracy and generalization.

Hyperparameter Tuning: Grid Search vs Random Search

Hyperparameter tuning in machine learning involves systematically searching for the optimal set of hyperparameters that maximize model performance, with grid search and random search being two prominent methods. Grid search exhaustively evaluates all possible hyperparameter combinations within a predefined range, ensuring thorough exploration but often at a high computational cost. Random search, on the other hand, samples hyperparameter combinations randomly, providing a more efficient and scalable approach that can discover competitive or superior solutions with fewer iterations.

Impact of Parameter Optimization on Model Accuracy

Parameter optimization significantly enhances model accuracy by fine-tuning algorithm-specific settings such as weights and biases that directly influence model predictions. Unlike hyperparameter tuning, which adjusts external settings like learning rates or tree depths, parameter optimization targets the internal components learned during training, leading to more precise generalization on unseen data. Efficient parameter optimization methods, including gradient descent and Bayesian optimization, help reduce error rates and improve the overall predictive performance of machine learning models.

Practical Challenges in Hyperparameter and Parameter Optimization

Hyperparameter tuning and parameter optimization face practical challenges including computational resource constraints, as exhaustive searches over hyperparameter spaces can be prohibitively expensive. The complexity of non-convex objective functions causes difficulties in finding global optima, often resulting in suboptimal model performance. Furthermore, the dependency between hyperparameters and parameters complicates optimization, requiring sophisticated techniques like Bayesian optimization or gradient-based methods to improve efficiency and accuracy.

Future Trends in Hyperparameter Tuning and Parameter Optimization

Emerging trends in hyperparameter tuning and parameter optimization emphasize automation through advanced techniques like Bayesian optimization, reinforcement learning, and meta-learning to enhance model performance and efficiency. The integration of quantum computing and distributed optimization promises to accelerate search processes for optimal hyperparameters and parameters. Future developments increasingly focus on scalability, adaptability to diverse model architectures, and real-time tuning in dynamic environments.

Hyperparameter Tuning vs Parameter Optimization Infographic

techiny.com

techiny.com