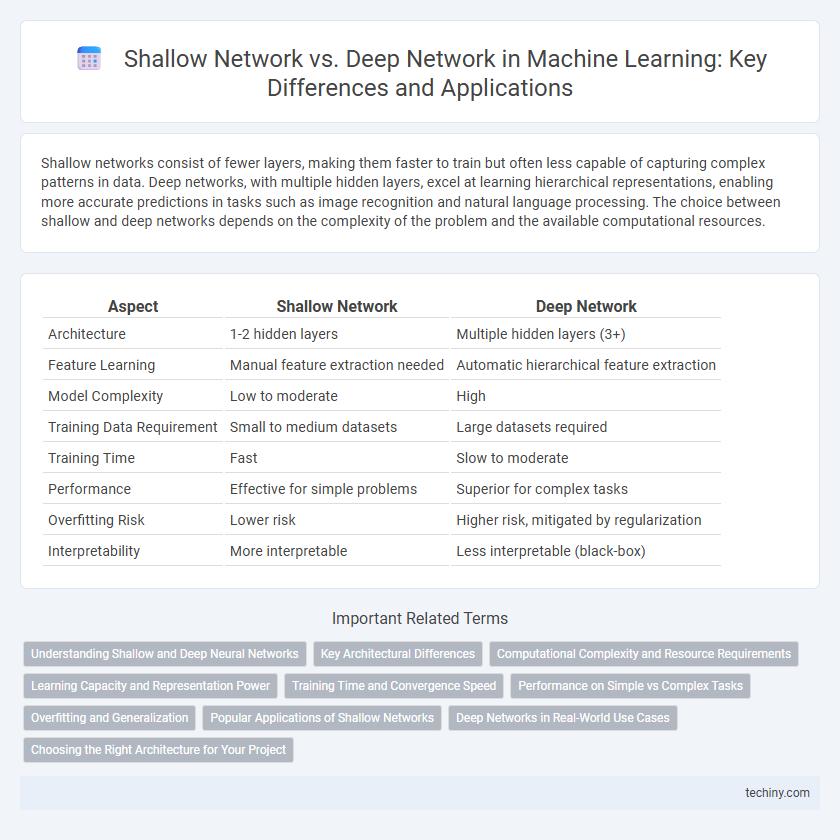

Shallow networks consist of fewer layers, making them faster to train but often less capable of capturing complex patterns in data. Deep networks, with multiple hidden layers, excel at learning hierarchical representations, enabling more accurate predictions in tasks such as image recognition and natural language processing. The choice between shallow and deep networks depends on the complexity of the problem and the available computational resources.

Table of Comparison

| Aspect | Shallow Network | Deep Network |

|---|---|---|

| Architecture | 1-2 hidden layers | Multiple hidden layers (3+) |

| Feature Learning | Manual feature extraction needed | Automatic hierarchical feature extraction |

| Model Complexity | Low to moderate | High |

| Training Data Requirement | Small to medium datasets | Large datasets required |

| Training Time | Fast | Slow to moderate |

| Performance | Effective for simple problems | Superior for complex tasks |

| Overfitting Risk | Lower risk | Higher risk, mitigated by regularization |

| Interpretability | More interpretable | Less interpretable (black-box) |

Understanding Shallow and Deep Neural Networks

Shallow neural networks consist of a single hidden layer, making them computationally efficient but limited in modeling complex patterns due to their reduced representational capacity. Deep neural networks feature multiple hidden layers, enabling hierarchical feature extraction and superior performance in tasks like image recognition and natural language processing. Understanding the trade-offs between shallow and deep architectures is essential for selecting the appropriate model complexity based on dataset size, computational resources, and problem complexity.

Key Architectural Differences

Shallow networks typically consist of one or two hidden layers, limiting their ability to model complex patterns, while deep networks contain multiple hidden layers that enable hierarchical feature extraction. Deep architectures utilize techniques like convolutional layers and pooling to capture spatial hierarchies and reduce dimensionality, enhancing performance on large-scale datasets. Shallow networks often rely on simpler activation functions and fewer parameters, which can lead to faster training but reduced representational power compared to deep networks.

Computational Complexity and Resource Requirements

Shallow networks typically require less computational power and memory due to their limited number of layers and fewer parameters, making them faster to train and deploy on resource-constrained devices. Deep networks involve multiple hidden layers that significantly increase computational complexity and demand extensive GPU or TPU resources for efficient training, especially with large datasets. Optimizing deep network architectures often includes techniques like dropout and batch normalization to manage resource usage and improve training efficiency.

Learning Capacity and Representation Power

Shallow networks possess limited learning capacity, often struggling with complex patterns due to their single or few hidden layers, which restricts their representation power. Deep networks leverage multiple hidden layers, enabling hierarchical feature extraction that significantly enhances their ability to model intricate data distributions and capture high-level abstractions. This increased depth empowers deep neural networks to achieve superior performance in tasks like image recognition, natural language processing, and speech synthesis compared to shallow architectures.

Training Time and Convergence Speed

Shallow networks typically require less training time due to their simpler architectures but may struggle with slower convergence on complex tasks compared to deep networks. Deep networks, with multiple hidden layers, often converge faster on large datasets by capturing intricate patterns and hierarchical features, although they demand more computational resources per epoch. Optimized training algorithms like Adam and techniques such as batch normalization further accelerate convergence speed in deep networks.

Performance on Simple vs Complex Tasks

Shallow networks excel in performance on simple tasks by efficiently learning linear or mildly nonlinear relationships with fewer parameters, leading to faster training and inference times. Deep networks outperform shallow ones on complex tasks by capturing hierarchical features and intricate patterns through multiple nonlinear layers, enabling superior generalization in image recognition, natural language processing, and speech analysis. However, deep networks require larger datasets and computational resources to avoid overfitting and optimize performance on high-dimensional, unstructured data.

Overfitting and Generalization

Shallow networks often struggle with underfitting complex data patterns due to their limited representational capacity, while deep networks can capture intricate features but are more prone to overfitting when not properly regularized. Techniques such as dropout, batch normalization, and early stopping are critical in deep networks to improve generalization and prevent memorization of training data. Effective generalization depends on balancing model complexity and training data size, where deep architectures excel in large datasets but require careful tuning to avoid overfitting.

Popular Applications of Shallow Networks

Shallow networks excel in applications such as image recognition, speech processing, and simple classification tasks due to their faster training times and lower computational requirements compared to deep networks. They are particularly effective in environments with limited data where overfitting is a concern, making them suitable for real-time and embedded systems. Popular use cases include spam detection, fraud identification, and basic sentiment analysis, where shallow architectures provide robust performance with reduced model complexity.

Deep Networks in Real-World Use Cases

Deep networks excel in real-world applications such as image recognition, natural language processing, and autonomous driving by leveraging multiple hidden layers to capture complex patterns and hierarchical features. Their ability to model non-linear relationships enables advancements in medical diagnosis, speech recognition, and recommendation systems. Compared to shallow networks, deep architectures provide higher accuracy and adaptability in handling large-scale, high-dimensional datasets.

Choosing the Right Architecture for Your Project

Choosing the right neural network architecture depends on the complexity of the data and the task requirements; shallow networks with fewer layers excel in simpler problems and smaller datasets due to faster training and reduced risk of overfitting. Deep networks, characterized by multiple hidden layers and millions of parameters, are better suited for complex tasks like image recognition and natural language processing, where hierarchical feature extraction is crucial. Evaluating factors such as computational resources, dataset size, and desired model interpretability helps determine whether a shallow or deep network optimally balances performance and efficiency for your machine learning project.

shallow network vs deep network Infographic

techiny.com

techiny.com