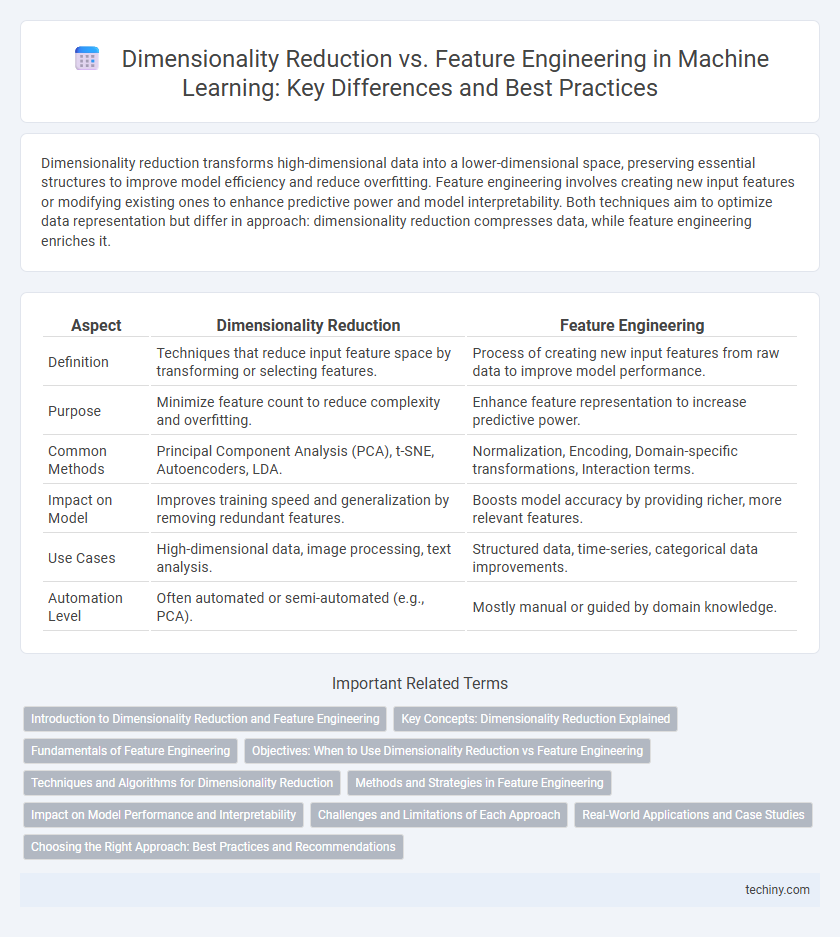

Dimensionality reduction transforms high-dimensional data into a lower-dimensional space, preserving essential structures to improve model efficiency and reduce overfitting. Feature engineering involves creating new input features or modifying existing ones to enhance predictive power and model interpretability. Both techniques aim to optimize data representation but differ in approach: dimensionality reduction compresses data, while feature engineering enriches it.

Table of Comparison

| Aspect | Dimensionality Reduction | Feature Engineering |

|---|---|---|

| Definition | Techniques that reduce input feature space by transforming or selecting features. | Process of creating new input features from raw data to improve model performance. |

| Purpose | Minimize feature count to reduce complexity and overfitting. | Enhance feature representation to increase predictive power. |

| Common Methods | Principal Component Analysis (PCA), t-SNE, Autoencoders, LDA. | Normalization, Encoding, Domain-specific transformations, Interaction terms. |

| Impact on Model | Improves training speed and generalization by removing redundant features. | Boosts model accuracy by providing richer, more relevant features. |

| Use Cases | High-dimensional data, image processing, text analysis. | Structured data, time-series, categorical data improvements. |

| Automation Level | Often automated or semi-automated (e.g., PCA). | Mostly manual or guided by domain knowledge. |

Introduction to Dimensionality Reduction and Feature Engineering

Dimensionality reduction techniques such as Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) simplify high-dimensional datasets by transforming features into a lower-dimensional space while preserving essential information. Feature engineering involves creating, selecting, and transforming variables to enhance model performance by capturing relevant patterns and relationships within the raw data. Both approaches optimize data representation but serve distinct purposes: dimensionality reduction targets compactness and noise reduction, whereas feature engineering emphasizes feature creativity and interpretability.

Key Concepts: Dimensionality Reduction Explained

Dimensionality reduction involves transforming high-dimensional data into a lower-dimensional space while preserving its essential structure and patterns, which helps improve computational efficiency and visualization. Techniques such as Principal Component Analysis (PCA), t-Distributed Stochastic Neighbor Embedding (t-SNE), and Linear Discriminant Analysis (LDA) are commonly used to identify and retain the most informative features by eliminating redundancy and noise. In contrast to feature engineering, which creates new features from raw data, dimensionality reduction focuses on reducing the number of existing features to optimize model performance.

Fundamentals of Feature Engineering

Feature engineering involves transforming raw data into meaningful features that enhance model performance by capturing essential patterns and relationships. It requires domain knowledge and creativity to create, select, and modify variables, ensuring that the dataset effectively represents the problem space. Unlike dimensionality reduction, which primarily focuses on reducing feature space while preserving variance, feature engineering aims to improve model accuracy by constructing informative inputs from existing data.

Objectives: When to Use Dimensionality Reduction vs Feature Engineering

Dimensionality reduction is used to simplify data by reducing the number of input variables while retaining essential information, ideal for handling high-dimensional datasets and improving model performance by mitigating the curse of dimensionality. Feature engineering focuses on creating new, meaningful features from raw data to enhance model accuracy and interpretability, especially when domain knowledge can be leveraged to extract relevant patterns. Use dimensionality reduction when the dataset has many correlated or redundant features, and choose feature engineering when you can derive informative features to better represent the underlying problem.

Techniques and Algorithms for Dimensionality Reduction

Dimensionality reduction techniques such as Principal Component Analysis (PCA), t-Distributed Stochastic Neighbor Embedding (t-SNE), and Uniform Manifold Approximation and Projection (UMAP) effectively reduce the number of features by transforming data into lower-dimensional spaces while preserving essential structures. Algorithms like Linear Discriminant Analysis (LDA) and Autoencoders leverage supervised and unsupervised learning to enhance feature representation and improve model performance. These methods address the curse of dimensionality, facilitate visualization, and enhance computational efficiency in complex machine learning tasks.

Methods and Strategies in Feature Engineering

Feature engineering methods in machine learning focus on transforming raw data into meaningful features using techniques such as normalization, encoding categorical variables, and extracting polynomial or interaction terms. Strategies include domain knowledge application, dimensionality reduction techniques like Principal Component Analysis (PCA) used to summarize inputs, and automated feature creation with algorithms like feature crosses or embeddings. These methods enhance model performance by improving feature relevance and reducing noise, complementing but distinct from dimensionality reduction's goal of lowering feature space complexity.

Impact on Model Performance and Interpretability

Dimensionality reduction techniques such as PCA and t-SNE enhance model performance by removing redundant features and lowering computational complexity, often improving generalization but potentially reducing interpretability due to transformed feature spaces. Feature engineering, involving the creation and selection of meaningful variables, directly boosts model accuracy and preserves or enhances interpretability by maintaining human-understandable features. Balancing these approaches depends on the trade-off between achieving high predictive accuracy and maintaining transparency in machine learning models.

Challenges and Limitations of Each Approach

Dimensionality reduction techniques, such as PCA and t-SNE, often struggle with preserving interpretability and can lead to loss of important information when reducing high-dimensional data. Feature engineering requires domain expertise and extensive trial-and-error to create meaningful features, which can be time-consuming and may introduce bias or redundancy. Both approaches face scalability challenges with very large datasets, impacting model performance and generalization.

Real-World Applications and Case Studies

Dimensionality reduction techniques like PCA and t-SNE improve model performance and computational efficiency by eliminating redundant features in image recognition and bioinformatics datasets. Feature engineering, such as creating interaction terms or extracting domain-specific features, boosts predictive accuracy in finance and healthcare applications by capturing complex relationships. Case studies show combining both methods enhances fraud detection and customer segmentation, balancing data simplification with informative feature extraction.

Choosing the Right Approach: Best Practices and Recommendations

Choosing between dimensionality reduction and feature engineering depends on the dataset size, feature correlation, and model interpretability requirements. Techniques like Principal Component Analysis (PCA) are effective for reducing feature space when multicollinearity is high, whereas feature engineering enhances meaningful attributes through domain knowledge and transformation methods. Best practices include evaluating model performance via cross-validation, ensuring features maintain relevance, and balancing model complexity with computational efficiency.

Dimensionality Reduction vs Feature Engineering Infographic

techiny.com

techiny.com