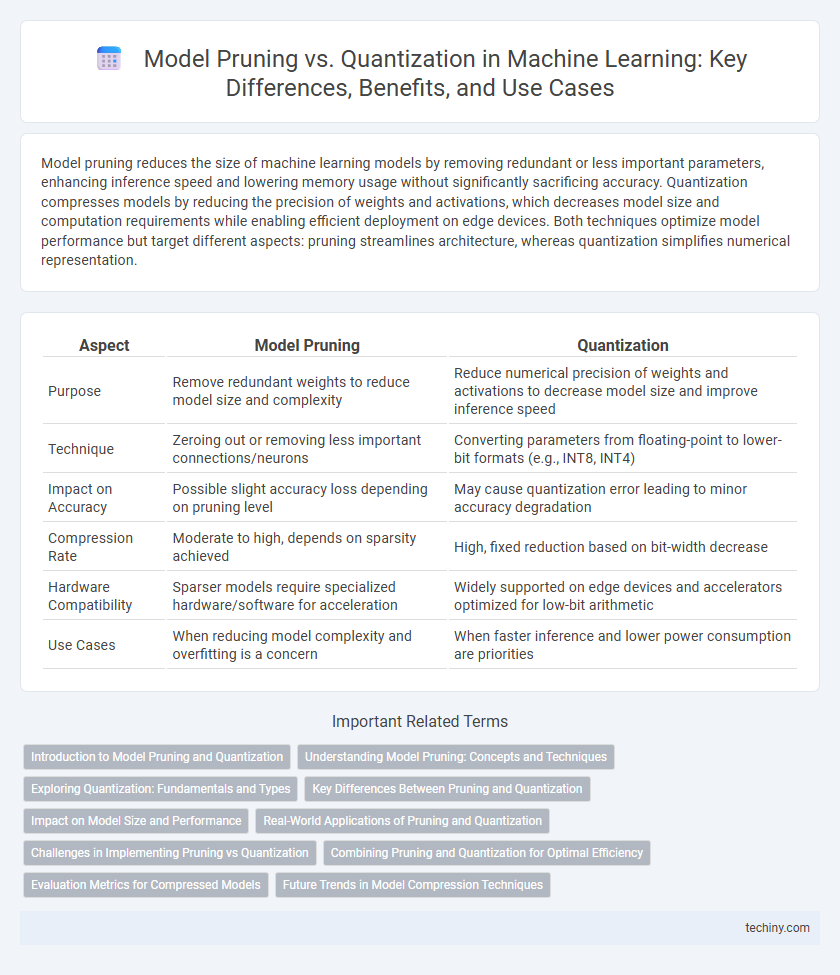

Model pruning reduces the size of machine learning models by removing redundant or less important parameters, enhancing inference speed and lowering memory usage without significantly sacrificing accuracy. Quantization compresses models by reducing the precision of weights and activations, which decreases model size and computation requirements while enabling efficient deployment on edge devices. Both techniques optimize model performance but target different aspects: pruning streamlines architecture, whereas quantization simplifies numerical representation.

Table of Comparison

| Aspect | Model Pruning | Quantization |

|---|---|---|

| Purpose | Remove redundant weights to reduce model size and complexity | Reduce numerical precision of weights and activations to decrease model size and improve inference speed |

| Technique | Zeroing out or removing less important connections/neurons | Converting parameters from floating-point to lower-bit formats (e.g., INT8, INT4) |

| Impact on Accuracy | Possible slight accuracy loss depending on pruning level | May cause quantization error leading to minor accuracy degradation |

| Compression Rate | Moderate to high, depends on sparsity achieved | High, fixed reduction based on bit-width decrease |

| Hardware Compatibility | Sparser models require specialized hardware/software for acceleration | Widely supported on edge devices and accelerators optimized for low-bit arithmetic |

| Use Cases | When reducing model complexity and overfitting is a concern | When faster inference and lower power consumption are priorities |

Introduction to Model Pruning and Quantization

Model pruning reduces the size of machine learning models by removing redundant or less important parameters, enhancing computational efficiency without significantly compromising accuracy. Quantization compresses models by lowering the precision of weights and activations, converting floating-point values into lower-bit representations such as INT8 or INT4. Both techniques are essential for deploying resource-efficient models on edge devices with limited memory and processing power.

Understanding Model Pruning: Concepts and Techniques

Model pruning systematically reduces the number of parameters in a neural network by eliminating redundant or less significant weights, which helps in decreasing model size and improving inference speed without substantial loss in accuracy. Techniques such as magnitude-based pruning remove weights with the smallest absolute values, while structured pruning targets entire neurons or filters to maintain hardware efficiency. Understanding these methods is essential for optimizing model deployment on resource-constrained devices.

Exploring Quantization: Fundamentals and Types

Quantization in machine learning compresses models by reducing the precision of weights and activations from floating-point to lower-bit representations, enhancing inference speed and reducing memory consumption. Common types include post-training quantization, which applies quantization after model training, and quantization-aware training, which simulates quantization effects during training to maintain higher accuracy. These techniques enable deployment on resource-constrained devices like smartphones and embedded systems without substantial performance degradation.

Key Differences Between Pruning and Quantization

Model pruning reduces the size of a neural network by removing less important or redundant weights, leading to sparsity and faster inference with minimal accuracy loss. Quantization compresses the model by lowering the precision of weights and activations, typically from 32-bit floating point to 8-bit integers, which decreases memory usage and accelerates computation on specialized hardware. Pruning targets model architecture simplification through weight elimination, whereas quantization focuses on numerical precision reduction without altering the network structure.

Impact on Model Size and Performance

Model pruning reduces model size by removing redundant neurons or weights, which leads to faster inference but may cause slight accuracy degradation if over-pruned. Quantization compresses the model by lowering precision of weights from 32-bit floating point to 8-bit or lower, significantly decreasing memory usage while maintaining near-original performance. Combining pruning and quantization often yields optimal balance between minimal model size and high inference speed without substantial loss in accuracy.

Real-World Applications of Pruning and Quantization

Model pruning significantly enhances real-world machine learning applications by reducing model size and inference latency, enabling deployment on resource-constrained devices such as smartphones and IoT sensors. Quantization facilitates efficient execution of neural networks by lowering numerical precision, which decreases memory footprint and speeds up inference without substantial loss in accuracy, benefiting edge computing and real-time systems. Combining pruning and quantization is critical in autonomous vehicles and mobile health monitoring, where optimized models deliver high performance under strict computational and power limitations.

Challenges in Implementing Pruning vs Quantization

Implementing model pruning faces challenges such as determining optimal sparsity levels without degrading accuracy and managing irregular memory access patterns that hinder hardware efficiency. Quantization encounters difficulties in maintaining model precision due to reduced numerical representation and requires specialized hardware support for low-bit computations. Both techniques demand careful tuning to balance compression rate and model performance in diverse deployment environments.

Combining Pruning and Quantization for Optimal Efficiency

Combining model pruning and quantization achieves optimal efficiency by reducing both the size and computational complexity of machine learning models. Pruning eliminates redundant neurons and weights, while quantization lowers the precision of weights and activations, enabling faster inference and reduced memory usage. This synergy enhances deployment on resource-constrained devices, maintaining accuracy while significantly improving speed and energy efficiency.

Evaluation Metrics for Compressed Models

Evaluation metrics for compressed models in machine learning focus primarily on accuracy, latency, and model size to balance performance and resource efficiency. Model pruning is typically assessed by sparsity levels and the impact on inference speed, while quantization evaluation emphasizes precision loss measured through metrics like top-1 accuracy and mean squared error. Compression ratio and energy consumption are also critical metrics used to compare the effectiveness of pruning versus quantization techniques.

Future Trends in Model Compression Techniques

Emerging model compression techniques increasingly combine pruning and quantization to enhance computational efficiency and reduce memory footprints in deep learning applications. Researchers focus on adaptive algorithms that dynamically adjust compression levels based on model architecture and deployment constraints, leveraging reinforcement learning and neural architecture search. Advances in hardware-aware compression also drive innovation, enabling real-time optimization tailored to edge devices and specialized AI accelerators.

Model Pruning vs Quantization Infographic

techiny.com

techiny.com