Mini-batch learning processes small subsets of data to balance computational efficiency against the stability of each update, which typically speeds up convergence when training neural networks. Online learning updates the model incrementally with each new data point, enabling real-time adaptation and effective handling of streaming data. The choice between the two depends on dataset size, computational resources, and the need for immediate model updates.

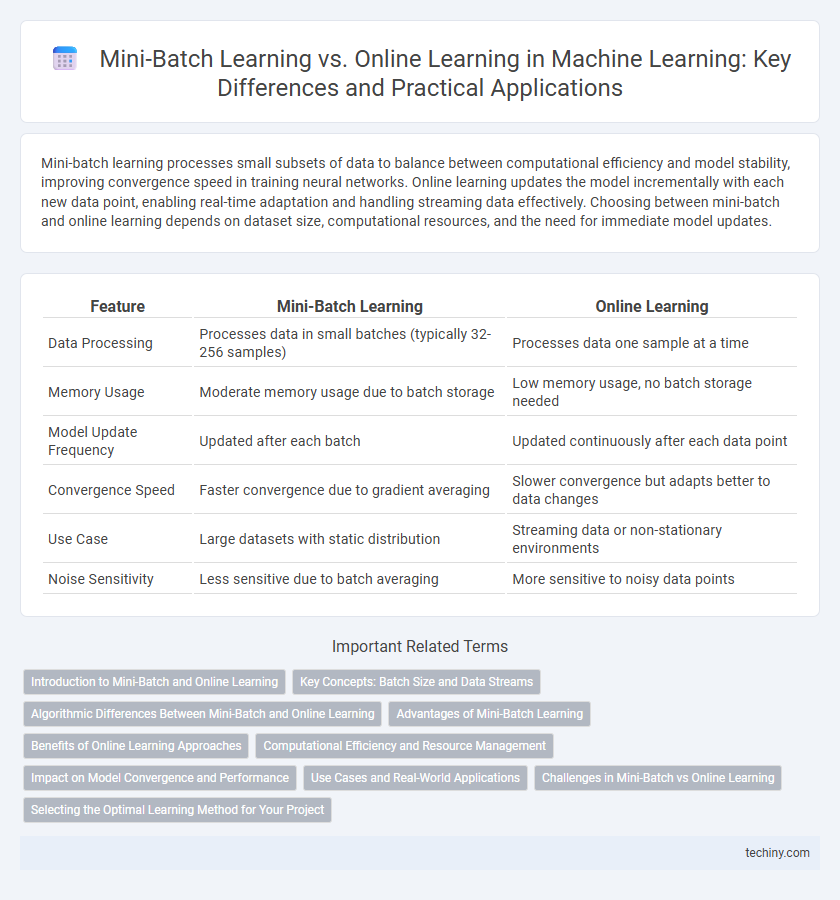

Table of Comparison

| Feature | Mini-Batch Learning | Online Learning |
|---|---|---|
| Data Processing | Processes data in small batches (typically 32-256 samples) | Processes data one sample at a time |
| Memory Usage | Moderate memory usage due to batch storage | Low memory usage, no batch storage needed |
| Model Update Frequency | Updated after each batch | Updated continuously after each data point |
| Convergence Speed | Faster convergence due to gradient averaging | Slower convergence but adapts better to data changes |
| Use Case | Large datasets with static distribution | Streaming data or non-stationary environments |
| Noise Sensitivity | Less sensitive due to batch averaging | More sensitive to noisy data points |

Introduction to Mini-Batch and Online Learning

Mini-batch learning processes data in small, fixed-size subsets, enabling efficient gradient estimation and faster convergence than using the entire dataset at once. Online learning updates model parameters incrementally with each new data point, making it suitable for real-time applications and streaming data scenarios. Both techniques trade computational efficiency against adaptability: mini-batch learning makes better use of hardware per update, while online learning keeps the model current with every observation.

Key Concepts: Batch Size and Data Streams

Mini-batch learning processes data in fixed-size batches, striking a balance between computational efficiency and model convergence by updating weights after each batch. Online learning handles data streams one instance at a time, enabling continuous model adaptation to incoming data without requiring storage of the entire dataset. Batch size influences memory usage and training speed, while data streams emphasize real-time learning and handling potentially infinite data sequences.
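The difference between a fixed batch size and an unbounded data stream can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `mini_batches` and `sensor_stream` are hypothetical names introduced here, and the stream simply emits synthetic values.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def mini_batches(data: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of up to `batch_size` items from `data`."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    it = iter(data)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def sensor_stream() -> Iterator[float]:
    """Hypothetical unbounded stream; it never ends and is never stored whole."""
    t = 0
    while True:
        yield 0.5 * t
        t += 1


# A finite dataset is cut into batches; the last one may be smaller.
finite_batches = list(mini_batches(range(10), 4))

# The same helper works on an unbounded stream because it only ever
# holds `batch_size` items in memory at a time.
first_batches = list(islice(mini_batches(sensor_stream(), 4), 2))

print(finite_batches)
print(first_batches)
```

Setting `batch_size=1` turns the same loop into one-sample-at-a-time processing, which is why online learning is often described as the limiting case of mini-batch learning.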

Algorithmic Differences Between Mini-Batch and Online Learning

Mini-batch learning processes a fixed-size subset of data in each training iteration, trading some update frequency for lower gradient noise. Online learning updates model parameters after every individual data point, which lets it adapt more quickly to streaming data. Mini-batch algorithms use vectorized operations to process a batch in parallel and average the resulting gradients, which stabilizes convergence. Online algorithms operate sequentially and typically amount to stochastic gradient descent with a batch size of one. The choice affects convergence speed, model accuracy, and resource utilization, depending on data volume and application requirements.
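As a rough sketch of the algorithmic difference, the function below fits a one-variable linear model with gradient descent, where only the batch size changes between the two modes. The function name `sgd_linear`, the synthetic data (y = 2x + 1, noise-free), and the learning rate are all assumptions made for illustration.

```python
from typing import List, Tuple

Sample = Tuple[float, float]


def make_data() -> List[Sample]:
    """Synthetic, noise-free samples of y = 2x + 1 on x in [-1, 1]."""
    return [(i / 10, 2.0 * (i / 10) + 1.0) for i in range(-10, 11)]


def sgd_linear(samples: List[Sample], batch_size: int,
               lr: float = 0.1, epochs: int = 200) -> Tuple[float, float, int]:
    """Fit y = w*x + b by gradient descent on squared error.

    batch_size=1 gives online-style updates (one per sample);
    larger values average the gradient over each mini-batch.
    Returns (w, b, number_of_updates).
    """
    w, b, updates = 0.0, 0.0, 0
    for _ in range(epochs):
        for start in range(0, len(samples), batch_size):
            batch = samples[start:start + batch_size]
            # Average gradient of 0.5 * (w*x + b - y)^2 over the batch.
            grad_w = sum((w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum((w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1
    return w, b, updates


data = make_data()
w_mb, b_mb, n_mb = sgd_linear(data, batch_size=7)   # mini-batch
w_on, b_on, n_on = sgd_linear(data, batch_size=1)   # online-style

print(f"mini-batch: w={w_mb:.4f} b={b_mb:.4f} updates={n_mb}")
print(f"online:     w={w_on:.4f} b={b_on:.4f} updates={n_on}")
```

On this noise-free toy problem both variants recover the same line; the visible difference is the number of parameter updates per pass over the data. A vectorized implementation would compute each batch gradient in a single array operation rather than a Python loop.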

Advantages of Mini-Batch Learning

Mini-batch learning offers a balanced approach by processing small subsets of data, which improves computational efficiency and accelerates convergence compared to online learning. It enhances gradient estimation stability, reducing the variance and helping models avoid noisy updates that can occur with single-instance processing. This method also benefits from parallel hardware optimization, leveraging GPUs effectively for faster training times in machine learning workflows.
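The variance reduction can be made precise under a simplifying assumption: the per-sample gradients $g_1, \dots, g_B$ in a batch are independent draws with mean $\nabla L(\theta)$ and covariance $\Sigma$. The symbols $B$, $g_i$, $\hat{g}_B$, and $\Sigma$ are notation introduced here, not taken from the original text.

$$
\hat{g}_B = \frac{1}{B}\sum_{i=1}^{B} g_i,
\qquad
\mathbb{E}\big[\hat{g}_B\big] = \nabla L(\theta),
\qquad
\operatorname{Cov}\big[\hat{g}_B\big] = \frac{1}{B}\,\Sigma
$$

Under this assumption the standard deviation of the gradient estimate shrinks as $1/\sqrt{B}$. Moving from $B = 1$ to $B = 64$ therefore cuts the noise by a factor of 8 while each update processes 64 times as many samples, which is one reason very large batches give diminishing returns.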

Benefits of Online Learning Approaches

Online learning approaches enable models to update continuously with new data, improving adaptability and responsiveness to real-time changes. Because only one sample or a few samples are processed at a time, memory consumption stays low, which suits large-scale or streaming data environments. The model also never needs to be retrained on the entire dataset, so it can keep pace with dynamic data and deliver timely predictions.
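A minimal sketch of this idea is a model that exposes one method to learn from a single sample and one to predict, and that stores only its weights. The class `OnlineLinearModel` and its `learn_one` / `predict_one` methods are hypothetical names chosen for this example, and the stream is synthetic.

```python
from typing import Iterator, List, Tuple


class OnlineLinearModel:
    """Linear regressor updated one sample at a time; no samples are stored."""

    def __init__(self, n_features: int, lr: float = 0.1) -> None:
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr
        self.n_seen = 0

    def predict_one(self, x: List[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def learn_one(self, x: List[float], y: float) -> None:
        # Single stochastic gradient step on 0.5 * (prediction - y)^2.
        err = self.predict_one(x) - y
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err
        self.n_seen += 1


def synthetic_stream(n: int) -> Iterator[Tuple[List[float], float]]:
    """Hypothetical stream following y = 2*x0 - x1 + 0.5 (noise-free)."""
    for t in range(n):
        x = [(t % 7) / 7, (t % 5) / 5]
        yield x, 2.0 * x[0] - x[1] + 0.5


model = OnlineLinearModel(n_features=2)
for x, y in synthetic_stream(5000):
    model.learn_one(x, y)   # the sample can be discarded after this call

print(model.n_seen, round(model.predict_one([1.0, 1.0]), 3))
```

Memory use is constant in the number of samples seen: the model holds two weights, a bias, and a counter regardless of how long the stream runs.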

Computational Efficiency and Resource Management

Mini-batch learning processes fixed-size subsets of data, which enables parallelization and requires far less memory than full-batch methods. Online learning needs even less memory because it holds only the current data point, and each update is cheap, although it performs many more updates per pass over the data and cannot exploit vectorized hardware as effectively. Mini-batch methods typically demand more consistent computational power, while online learning excels in streaming data scenarios with limited computational resources.
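The trade-off can be put into numbers with some back-of-the-envelope arithmetic. The dataset size, feature count, and 4-byte value size below are illustrative assumptions, and the helper names are hypothetical.

```python
import math


def updates_per_epoch(n_samples: int, batch_size: int) -> int:
    """Number of parameter updates in one pass over the data."""
    return math.ceil(n_samples / batch_size)


def batch_bytes(batch_size: int, n_features: int, bytes_per_value: int = 4) -> int:
    """Memory needed to hold the input features of one batch."""
    return batch_size * n_features * bytes_per_value


N_SAMPLES = 50_000    # assumed dataset size
N_FEATURES = 784      # assumed features per sample

for name, bs in [("mini-batch", 128), ("online", 1)]:
    print(f"{name:10s} batch_size={bs:3d} "
          f"updates/epoch={updates_per_epoch(N_SAMPLES, bs):6d} "
          f"batch memory={batch_bytes(bs, N_FEATURES):7d} bytes")
```

With these assumed figures, a batch size of 128 needs 391 updates per epoch and about 401 KB for one batch of inputs, while one-sample updates need 50,000 updates per epoch and about 3 KB per sample.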

Impact on Model Convergence and Performance

Mini-batch learning stabilizes gradient estimates by averaging over small data subsets, leading to faster and more reliable convergence than online learning, which updates parameters after each individual sample and can introduce high variance. This controlled gradient noise often results in improved performance and generalization on unseen data. Online learning excels in scenarios with streaming data and limited memory but may converge more slowly and less stably because of its noisy updates.
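The effect of noisy updates on the parameter trajectory can be observed in a small simulation. The sketch below estimates the mean of a noisy signal with a constant learning rate and measures how much the estimate keeps fluctuating once it has settled. The function name `final_jitter`, the Gaussian data (mean 3, standard deviation 1), and the fixed seed are assumptions made for this illustration.

```python
import random
import statistics
from typing import Tuple


def final_jitter(batch_size: int, n_updates: int = 2000,
                 lr: float = 0.1, seed: int = 0) -> Tuple[float, float]:
    """Run constant-step SGD on 0.5 * (theta - y)^2 with noisy y.

    Returns the mean and standard deviation of theta over the second
    half of the run, i.e. after the initial transient has died out.
    """
    rng = random.Random(seed)
    theta = 0.0
    trajectory = []
    for _ in range(n_updates):
        batch = [rng.gauss(3.0, 1.0) for _ in range(batch_size)]
        grad = theta - sum(batch) / batch_size   # averaged gradient
        theta -= lr * grad
        trajectory.append(theta)
    tail = trajectory[n_updates // 2:]
    return statistics.mean(tail), statistics.pstdev(tail)


online_mean, online_std = final_jitter(batch_size=1)
batch_mean, batch_std = final_jitter(batch_size=32)

print(f"online     (B=1):  mean={online_mean:.3f} jitter={online_std:.3f}")
print(f"mini-batch (B=32): mean={batch_mean:.3f} jitter={batch_std:.3f}")
```

Both runs settle near the true mean, but the one-sample run keeps fluctuating noticeably more around it. In practice this jitter is often reduced by decaying the learning rate over time, which the sketch deliberately omits.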

Use Cases and Real-World Applications

Mini-batch learning excels in scenarios with large datasets, such as image recognition and natural language processing, where it balances computational efficiency and model accuracy. Online learning is ideal for real-time applications like stock price prediction, fraud detection, and personalized recommendations, where data arrives continuously. The two methods differ mainly in how quickly they incorporate new data and in how that data must be stored and served.

Challenges in Mini-Batch vs Online Learning

Mini-batch learning faces challenges such as tuning the batch size to balance training stability and computational efficiency, while online learning must cope with streaming data that may be non-stationary and noisy. Mini-batch approaches require enough memory to hold multiple samples, whereas online learning must finish each update before the next sample arrives, which imposes a latency constraint rather than a memory one. Both methods have difficulty with concept drift: online learning reacts to it immediately, which helps it track genuine shifts but also makes it more sensitive to transient noise in the incoming stream.
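Concept drift can be illustrated with a deliberately simple, noise-free stream whose target value jumps halfway through. The sketch compares an estimate frozen after the first half with an estimate that is updated on every sample. All names, the drift point, and the learning rate are assumptions made for this example.

```python
from typing import Iterator

DRIFT_AT = 500      # assumed step at which the distribution changes
N_STEPS = 1000


def drifting_stream() -> Iterator[float]:
    """Hypothetical stream: value 0.0 before the drift, 5.0 afterwards."""
    for t in range(N_STEPS):
        yield 0.0 if t < DRIFT_AT else 5.0


def frozen_mean(stream: Iterator[float], n_train: int) -> float:
    """Fit once on the first n_train samples, then never update again."""
    seen = [y for _, y in zip(range(n_train), stream)]
    return sum(seen) / len(seen)


def online_mean(stream: Iterator[float], lr: float = 0.05) -> float:
    """Update the estimate after every sample with a constant step size."""
    estimate = 0.0
    for y in stream:
        estimate += lr * (y - estimate)
    return estimate


frozen_estimate = frozen_mean(drifting_stream(), DRIFT_AT)
online_estimate = online_mean(drifting_stream())

print(f"frozen after step {DRIFT_AT}: {frozen_estimate:.3f}")
print(f"updated on every sample: {online_estimate:.3f}")
```

The frozen estimate stays at the pre-drift value, while the continuously updated one ends close to the new value. The same constant step size that enables this tracking would also make the estimate follow outliers, which is the sensitivity to noise described above.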

Selecting the Optimal Learning Method for Your Project

Mini-batch learning balances stability and computational efficiency by processing small data subsets, making it a good fit for projects with large, relatively static datasets that can be stored and revisited over multiple passes. Online learning updates models incrementally with each new data point, offering adaptability for real-time applications and streaming data scenarios. Selecting the optimal learning method depends on dataset size, available computational resources, and the need for immediate model updates in dynamic environments.

techiny.com