Hyperparameter tuning involves optimizing the settings that govern the learning process, such as learning rate and regularization strength, to enhance model performance before training. Model training focuses on fitting the algorithm to the data by adjusting internal parameters based on the training dataset. Effective machine learning requires balancing hyperparameter tuning to achieve optimal generalization alongside thorough model training to ensure accurate predictions.

Table of Comparison

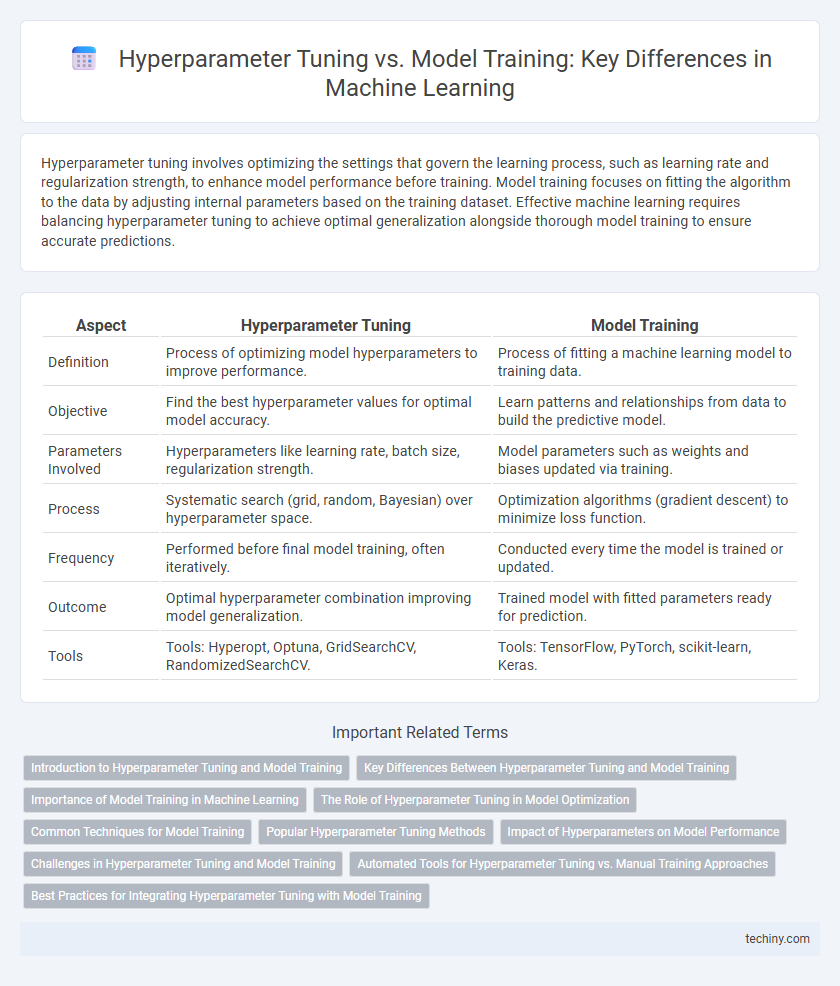

| Aspect | Hyperparameter Tuning | Model Training |

|---|---|---|

| Definition | Process of optimizing model hyperparameters to improve performance. | Process of fitting a machine learning model to training data. |

| Objective | Find the best hyperparameter values for optimal model accuracy. | Learn patterns and relationships from data to build the predictive model. |

| Parameters Involved | Hyperparameters like learning rate, batch size, regularization strength. | Model parameters such as weights and biases updated via training. |

| Process | Systematic search (grid, random, Bayesian) over hyperparameter space. | Optimization algorithms (gradient descent) to minimize loss function. |

| Frequency | Performed before final model training, often iteratively. | Conducted every time the model is trained or updated. |

| Outcome | Optimal hyperparameter combination improving model generalization. | Trained model with fitted parameters ready for prediction. |

| Tools | Tools: Hyperopt, Optuna, GridSearchCV, RandomizedSearchCV. | Tools: TensorFlow, PyTorch, scikit-learn, Keras. |

Introduction to Hyperparameter Tuning and Model Training

Hyperparameter tuning involves selecting the best set of parameters that govern the learning process in machine learning algorithms, such as learning rate, batch size, and number of epochs, to optimize model performance. Model training refers to the process where the algorithm learns patterns from the training data by adjusting internal weights, typically through gradient descent or similar optimization techniques. Effective hyperparameter tuning improves model accuracy by systematically exploring parameter configurations before or during model training to prevent overfitting and underfitting.

Key Differences Between Hyperparameter Tuning and Model Training

Hyperparameter tuning involves selecting the optimal settings for parameters that govern the learning process, such as learning rate, batch size, and number of epochs. Model training focuses on adjusting model weights using training data through algorithms like gradient descent to minimize loss functions. The key difference lies in tuning impacting algorithm performance and generalization, while training directly updates model parameters for pattern learning.

Importance of Model Training in Machine Learning

Model training is the foundational process in machine learning where algorithms learn from data to identify patterns and make accurate predictions. Effective model training directly impacts the model's generalization ability and performance on unseen data, making it crucial for real-world applications. While hyperparameter tuning optimizes model parameters, the quality and quantity of model training data largely determine the success of machine learning workflows.

The Role of Hyperparameter Tuning in Model Optimization

Hyperparameter tuning plays a crucial role in model optimization by systematically searching for the best set of hyperparameters that enhance a machine learning model's predictive performance. Unlike model training, which focuses on learning parameters from data, hyperparameter tuning adjusts external configurations such as learning rate, batch size, and regularization strength to prevent overfitting and improve generalization. Effective hyperparameter tuning leverages techniques like grid search, random search, and Bayesian optimization to fine-tune model accuracy and robustness across diverse datasets.

Common Techniques for Model Training

Common techniques for model training include batch gradient descent, stochastic gradient descent, and mini-batch gradient descent, which optimize model parameters by minimizing the loss function iteratively. Regularization methods such as L1 and L2 help prevent overfitting by adding penalty terms to the loss function during training. Early stopping and data augmentation are also widely applied to improve model generalization and robustness.

Popular Hyperparameter Tuning Methods

Popular hyperparameter tuning methods in machine learning include grid search, random search, and Bayesian optimization, each offering unique advantages in navigating the hyperparameter space to enhance model performance. Grid search systematically evaluates all combinations in a specified parameter grid, ensuring comprehensive coverage but often at a high computational cost. Random search improves efficiency by sampling random parameter combinations, while Bayesian optimization models the performance landscape to intelligently select promising hyperparameters, balancing exploration and exploitation.

Impact of Hyperparameters on Model Performance

Hyperparameter tuning plays a critical role in optimizing model performance by adjusting parameters such as learning rate, batch size, and regularization strength. These hyperparameters influence the model's ability to generalize, converge speed, and risk of overfitting, directly affecting metrics like accuracy, precision, and recall. Effective tuning can significantly enhance the predictive power and robustness of machine learning models compared to default or arbitrarily chosen settings.

Challenges in Hyperparameter Tuning and Model Training

Hyperparameter tuning presents challenges such as high computational cost and the risk of overfitting due to extensive search spaces. Model training struggles with issues like data quality, convergence speed, and the trade-off between bias and variance. Both processes require efficient resource management and careful validation strategies to optimize predictive performance.

Automated Tools for Hyperparameter Tuning vs. Manual Training Approaches

Automated tools for hyperparameter tuning leverage advanced algorithms such as Bayesian optimization and genetic algorithms to efficiently search the parameter space, significantly reducing the time and computational resources compared to manual tuning. These tools integrate seamlessly with machine learning frameworks like TensorFlow and PyTorch, enabling scalable experimentation and improved model performance. Manual training approaches, by contrast, often involve trial-and-error methods that can be time-consuming and less effective in navigating complex hyperparameter landscapes.

Best Practices for Integrating Hyperparameter Tuning with Model Training

Effective integration of hyperparameter tuning with model training involves using automated search methods like grid search, random search, or Bayesian optimization to systematically explore hyperparameter spaces. Employing cross-validation during tuning ensures robust performance evaluation and prevents overfitting by validating model generalizability across subsets of the training data. Prioritizing parallel processing and early stopping techniques optimizes computational efficiency while maintaining model accuracy throughout the combined tuning and training workflow.

Hyperparameter Tuning vs Model Training Infographic

techiny.com

techiny.com