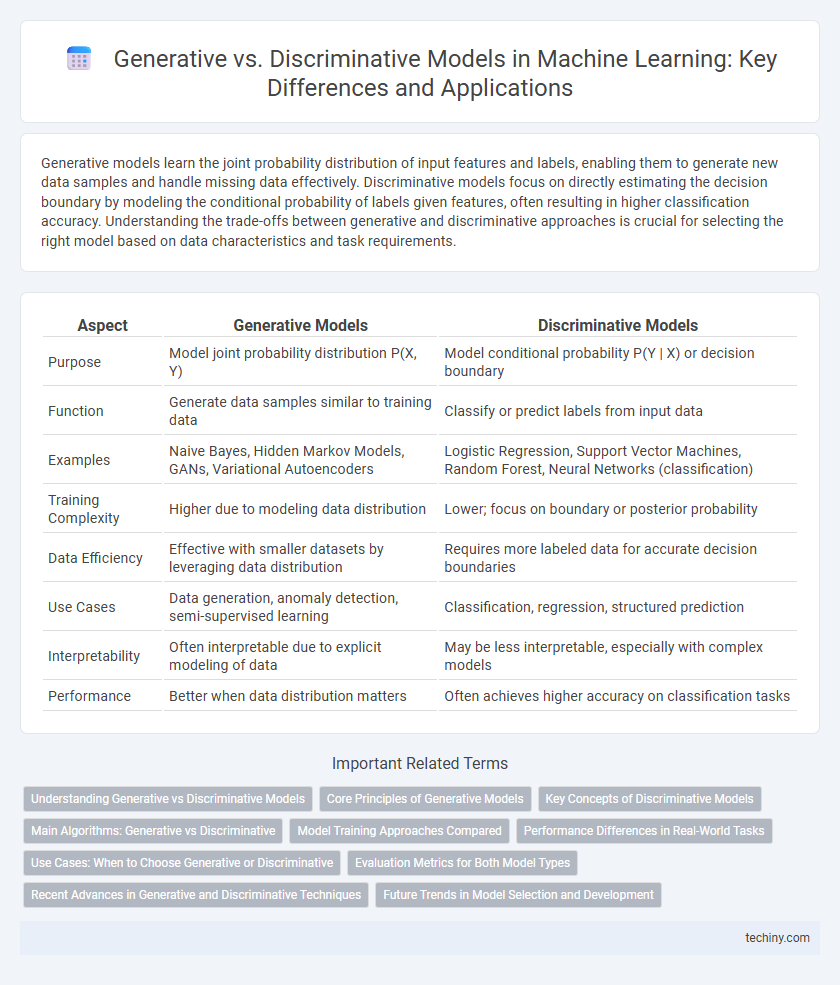

Generative models learn the joint probability distribution of input features and labels, enabling them to generate new data samples and handle missing data effectively. Discriminative models focus on directly estimating the decision boundary by modeling the conditional probability of labels given features, often resulting in higher classification accuracy. Understanding the trade-offs between generative and discriminative approaches is crucial for selecting the right model based on data characteristics and task requirements.

Table of Comparison

| Aspect | Generative Models | Discriminative Models |

|---|---|---|

| Purpose | Model joint probability distribution P(X, Y) | Model conditional probability P(Y | X) or decision boundary |

| Function | Generate data samples similar to training data | Classify or predict labels from input data |

| Examples | Naive Bayes, Hidden Markov Models, GANs, Variational Autoencoders | Logistic Regression, Support Vector Machines, Random Forest, Neural Networks (classification) |

| Training Complexity | Higher due to modeling data distribution | Lower; focus on boundary or posterior probability |

| Data Efficiency | Effective with smaller datasets by leveraging data distribution | Requires more labeled data for accurate decision boundaries |

| Use Cases | Data generation, anomaly detection, semi-supervised learning | Classification, regression, structured prediction |

| Interpretability | Often interpretable due to explicit modeling of data | May be less interpretable, especially with complex models |

| Performance | Better when data distribution matters | Often achieves higher accuracy on classification tasks |

Understanding Generative vs Discriminative Models

Generative models, such as Gaussian Mixture Models and Hidden Markov Models, learn the joint probability distribution P(x, y) by modeling how data is generated, enabling them to create new data samples. Discriminative models, including Logistic Regression and Support Vector Machines, focus on learning the conditional probability P(y|x) directly for classification tasks, often resulting in higher accuracy on labeled data. Understanding the differences between these models is crucial for selecting appropriate algorithms based on data characteristics and desired outcomes in machine learning applications.

Core Principles of Generative Models

Generative models learn the joint probability distribution \(P(X, Y)\), enabling them to generate new data samples by understanding the underlying data structure. Key principles include modeling the data generation process, capturing latent variables, and estimating the likelihood of observed data. Techniques like Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) exemplify these core concepts by producing realistic synthetic data based on learned probability distributions.

Key Concepts of Discriminative Models

Discriminative models in machine learning focus on modeling the decision boundary between classes by directly estimating the conditional probability P(y|x), where y represents the label and x the input features. These models excel in classification tasks by learning how to distinguish between different categories without requiring knowledge of the data distribution P(x). Common examples include logistic regression, support vector machines, and conditional random fields, which offer strong performance and efficient training in supervised learning scenarios.

Main Algorithms: Generative vs Discriminative

Generative algorithms, such as Naive Bayes and Hidden Markov Models, model the joint probability distribution P(X, Y) to generate data and perform classification by estimating how data is generated. Discriminative algorithms like Logistic Regression and Support Vector Machines focus directly on modeling the conditional probability P(Y|X), optimizing decision boundaries between classes for higher predictive accuracy. Understanding the distinction enhances model selection in applications ranging from natural language processing to image recognition.

Model Training Approaches Compared

Generative models learn the joint probability distribution p(x, y) and are trained by maximizing the likelihood of observed data, enabling them to generate new samples and capture underlying data distributions. Discriminative models focus on learning the conditional probability p(y | x) directly, optimizing the decision boundary between classes to improve classification accuracy. Training generative models often involves more complex inference and higher computational cost, whereas discriminative models typically achieve faster convergence and better performance in supervised learning tasks.

Performance Differences in Real-World Tasks

Generative models like Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) excel in creating realistic data samples, benefiting tasks such as image synthesis and data augmentation, but often require more computational resources. Discriminative models, including Support Vector Machines (SVMs) and deep neural networks, generally achieve higher accuracy and faster inference on classification problems by directly modeling decision boundaries. In real-world tasks like speech recognition and fraud detection, discriminative approaches typically outperform generative models due to their focus on optimizing predictive performance rather than data distribution.

Use Cases: When to Choose Generative or Discriminative

Generative models excel in use cases requiring data synthesis, anomaly detection, and unsupervised learning due to their ability to model joint probability distributions and generate new samples. Discriminative models are preferred for classification and regression tasks where prediction accuracy is paramount, as they directly model decision boundaries. Choosing between generative and discriminative depends on the availability of labeled data, complexity of the problem, and whether interpretability or data generation is the primary goal.

Evaluation Metrics for Both Model Types

Generative models are often evaluated using metrics such as log-likelihood, perplexity, and reconstruction error, which measure their ability to generate data distributions closely matching the real dataset. Discriminative models prioritize classification accuracy, precision, recall, F1-score, and area under the ROC curve (AUC) to assess their capability in differentiating between classes. In tasks like speech recognition or image classification, combining these metrics can provide nuanced insights into a model's performance across generative and discriminative aspects.

Recent Advances in Generative and Discriminative Techniques

Recent advances in generative techniques such as GANs (Generative Adversarial Networks) and VAEs (Variational Autoencoders) have significantly improved the ability to model complex data distributions for image synthesis and natural language generation. Discriminative models, particularly deep learning architectures like convolutional neural networks (CNNs) and transformers, continue to dominate tasks involving classification and structured prediction due to their superior accuracy and efficiency. Hybrid approaches integrating generative and discriminative models are emerging, offering promising results in semi-supervised learning and anomaly detection by leveraging the strengths of both paradigms.

Future Trends in Model Selection and Development

Future trends in model selection and development emphasize hybrid approaches that combine generative and discriminative models to leverage the strengths of both, enhancing predictive accuracy and interpretability. Advances in deep learning architectures and transfer learning enable scalable and efficient integration of generative capabilities for data augmentation alongside discriminative models for classification tasks. The growing importance of explainability and robustness drives research toward models that balance generative understanding with discriminative power to address complex real-world applications.

generative vs discriminative Infographic

techiny.com

techiny.com