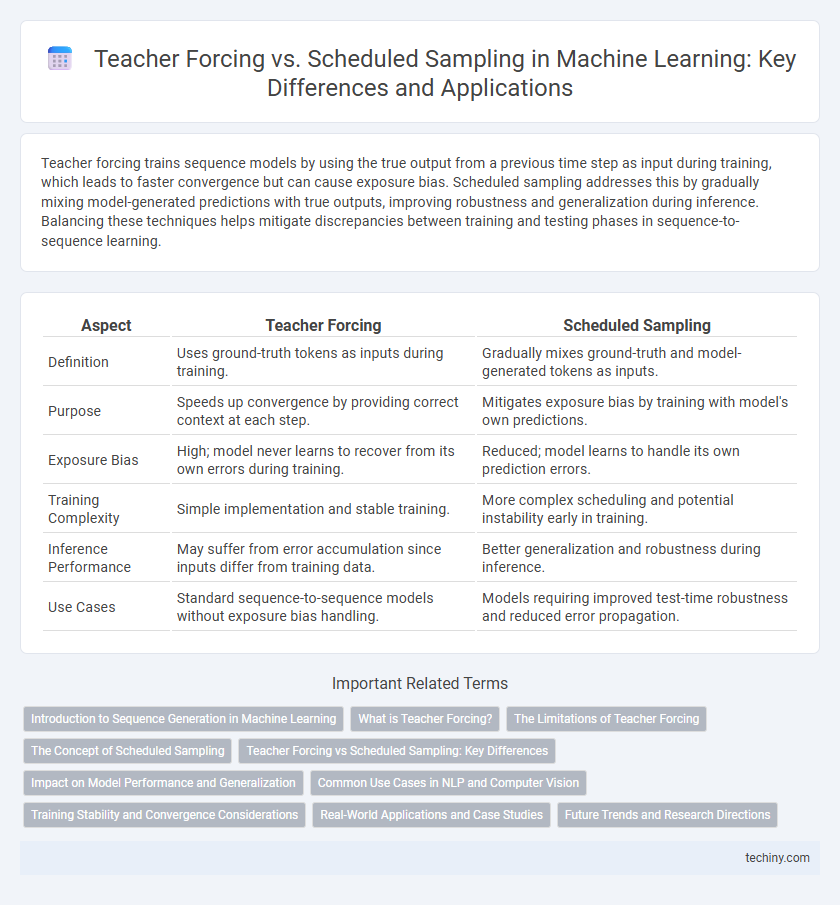

Teacher forcing trains sequence models by feeding the true output from the previous time step as the input to the current step, which leads to faster convergence but can cause exposure bias. Scheduled sampling addresses this by gradually mixing model-generated predictions with true outputs during training, which improves robustness and generalization at inference time. Balancing the two techniques helps reduce the mismatch between training and inference in sequence-to-sequence learning.

Table of Comparison

| Aspect | Teacher Forcing | Scheduled Sampling |
|---|---|---|
| Definition | Uses ground-truth tokens as inputs during training. | Gradually mixes ground-truth and model-generated tokens as inputs. |
| Purpose | Speeds up convergence by providing correct context at each step. | Mitigates exposure bias by training with model's own predictions. |
| Exposure Bias | High; model never learns to recover from its own errors during training. | Reduced; model learns to handle its own prediction errors. |
| Training Complexity | Simple implementation and stable training. | More complex scheduling and potential instability early in training. |
| Inference Performance | May suffer from error accumulation since inputs differ from training data. | Better generalization and robustness during inference. |
| Use Cases | Standard sequence-to-sequence models without exposure bias handling. | Models requiring improved test-time robustness and reduced error propagation. |

Introduction to Sequence Generation in Machine Learning

Teacher forcing accelerates training in sequence generation by using the ground-truth token as the next input, which keeps an early mistake from compounding over the rest of the sequence during training. Scheduled sampling gradually replaces ground-truth tokens with model-generated predictions, making the model more robust to the gap between the inputs it sees in training and the inputs it sees at inference. Scheduled sampling thereby helps mitigate exposure bias, improving performance on sequential tasks such as language modeling and machine translation.

What is Teacher Forcing?

Teacher forcing is a training technique in machine learning where the model receives the ground-truth output from the previous time step as input for the current step, rather than its own prediction. This approach accelerates convergence by providing accurate context at every step, so a mistake at one step does not corrupt the inputs to later steps during training. However, it can cause exposure bias: the model learns to depend on correct previous tokens, which are not available at inference, where it must condition on its own outputs.
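As a rough illustration of the input selection described above, the following sketch builds the decoder inputs for one target sequence under teacher forcing. The `BOS` marker and the function name `teacher_forced_inputs` are assumptions made for this example, not part of any particular library.

```python
BOS = "<bos>"  # hypothetical start-of-sequence marker (assumption)


def teacher_forced_inputs(target):
    """Return decoder inputs for a target sequence under teacher forcing.

    The input at step t is the ground-truth token from step t-1, so the
    inputs are the target shifted right by one position, starting with BOS.
    """
    return [BOS] + list(target[:-1])


target = ["the", "cat", "sat"]
inputs = teacher_forced_inputs(target)
for step, (x, y) in enumerate(zip(inputs, target)):
    print(f"step {step}: input={x!r} -> expected output={y!r}")
```

Note that the model's own predictions never appear in `inputs`; that is the property that later gives rise to exposure bias.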

The Limitations of Teacher Forcing

Teacher forcing accelerates sequence model training by providing ground-truth inputs at each step, but it causes exposure bias, where the model struggles to recover from its own prediction errors during inference. This discrepancy between training and testing phases limits the model's robustness and generalization in real-world scenarios. Scheduled sampling mitigates this issue by gradually introducing the model's own predictions as inputs, improving stability and reducing error accumulation over time.
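To make the effect of exposure bias concrete, here is a deliberately tiny sketch. A lookup table stands in for a trained model; it has a single wrong entry and no knowledge of contexts it never saw in training. The table, the tokens, and the function names are all invented for illustration.

```python
BOS, UNK = "<bos>", "<unk>"  # hypothetical special tokens (assumption)

target = ["a", "b", "c", "d", "e"]

# Toy next-token table standing in for a trained model. It has exactly one
# wrong entry ("b" -> "x") and returns UNK for any context it never saw.
model = {BOS: "a", "a": "b", "b": "x", "c": "d", "d": "e"}


def predict(prev_token):
    return model.get(prev_token, UNK)


def teacher_forced_errors(target):
    """Count mistakes when every input is the ground-truth previous token."""
    inputs = [BOS] + target[:-1]
    return sum(predict(x) != y for x, y in zip(inputs, target))


def free_running_errors(target):
    """Count mistakes when every input is the model's own previous output."""
    prev, errors = BOS, 0
    for y in target:
        prev = predict(prev)
        errors += prev != y
    return errors


print("teacher-forced errors:", teacher_forced_errors(target))
print("free-running errors:  ", free_running_errors(target))
```

With ground-truth inputs the single bad entry costs one mistake. When the model feeds on its own output, the same bad entry pushes it into a context it has never seen, and every later step is wrong as well.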

The Concept of Scheduled Sampling

Scheduled sampling addresses the exposure bias in sequence prediction models by gradually replacing ground truth inputs with model-generated predictions during training. This technique improves robustness by simulating inference conditions, allowing the model to better handle its own prediction errors. Unlike teacher forcing, scheduled sampling adapts the input distribution dynamically, enhancing sequence generation quality and reducing error accumulation.
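The per-step mixing can be sketched as a coin flip at each time step. This is a minimal illustration under stated assumptions: `teacher_prob` is the probability of feeding the ground truth, `toy_predict` is a stand-in for a real model that simply marks its input with a prime, and none of these names come from an existing library.

```python
import random

BOS = "<bos>"  # hypothetical start-of-sequence marker (assumption)


def toy_predict(prev_token):
    """Stand-in for a model: marks the token so generated inputs are visible."""
    return prev_token + "'"


def scheduled_sampling_rollout(target, predict, teacher_prob, rng):
    """Roll out one training sequence, mixing ground truth and predictions.

    At each step the next input is the ground-truth token with probability
    teacher_prob, otherwise the model's own prediction from this step.
    """
    inputs, outputs = [], []
    prev = BOS
    for truth in target:
        inputs.append(prev)
        pred = predict(prev)
        outputs.append(pred)
        # Coin flip that decides what the next step will see as input.
        prev = truth if rng.random() < teacher_prob else pred
    return inputs, outputs


rng = random.Random(0)
target = ["a", "b", "c", "d"]
for p in (1.0, 0.5, 0.0):
    inputs, _ = scheduled_sampling_rollout(target, toy_predict, p, rng)
    print(f"teacher_prob={p}: inputs={inputs}")
```

Setting `teacher_prob` to 1.0 recovers pure teacher forcing, and 0.0 gives a fully free-running rollout. In a real training loop the probability would be lowered over time rather than held fixed.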

Teacher Forcing vs Scheduled Sampling: Key Differences

Teacher Forcing trains sequence models by feeding the true previous output as input at each time step, which accelerates convergence but can cause exposure bias during inference. Scheduled Sampling addresses this by probabilistically mixing true previous outputs with model-predicted outputs during training, improving robustness against compounding errors. The key difference lies in input selection during training: Teacher Forcing uses ground truth exclusively, while Scheduled Sampling balances ground truth and model predictions to enhance generalization.

Impact on Model Performance and Generalization

Teacher forcing accelerates training convergence by guiding the model with ground-truth tokens, but it can lead to exposure bias that hampers generalization during inference. Scheduled sampling mitigates this by gradually integrating the model's own predictions during training, improving robustness and reducing error accumulation. In practice, scheduled sampling tends to help most on long sequences, where a single early mistake has more steps over which to compound, although the size of the gain depends on the task and on the schedule used.

Common Use Cases in NLP and Computer Vision

Teacher forcing accelerates training in NLP tasks like sequence-to-sequence models by feeding ground-truth tokens during decoding, improving convergence for applications such as machine translation and text summarization. Scheduled sampling mitigates exposure bias in both NLP and computer vision by gradually replacing ground-truth inputs with model predictions during training, enhancing performance in tasks like image captioning and speech recognition. Common use cases leverage teacher forcing for stable early training and adopt scheduled sampling to improve robustness and generalization in dynamic, real-world scenarios.

Training Stability and Convergence Considerations

Teacher forcing improves training stability by providing ground-truth inputs during sequence prediction, which reduces error accumulation within each training sequence and enables faster convergence. Scheduled sampling gradually introduces model-generated inputs, mitigating exposure bias but potentially causing instability and slower convergence if predictions are fed back too early, while the model's outputs are still close to random. Starting near pure teacher forcing and lowering the ground-truth probability over time helps keep gradients stable and learning effective in recurrent neural network training.
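One way to manage the stability trade-off is to make the probability of feeding the ground truth a decreasing function of the training step. The sketch below shows three commonly used decay shapes: linear, exponential, and inverse sigmoid. The function names and every default parameter value are illustrative assumptions and would need tuning for a real model.

```python
import math


def linear_decay(step, start=1.0, floor=0.0, slope=1e-4):
    """Decrease linearly from start, never going below floor."""
    return max(floor, start - slope * step)


def exponential_decay(step, k=0.9999):
    """Decay as k**step, with 0 < k < 1."""
    return k ** step


def inverse_sigmoid_decay(step, k=1000.0):
    """Decay as k / (k + exp(step / k)), with k >= 1.

    Stays near 1 for early steps, then drops off smoothly. The exponent is
    clamped so very large step counts do not overflow math.exp.
    """
    return k / (k + math.exp(min(step / k, 700.0)))


for step in (0, 2_000, 5_000, 10_000, 20_000):
    print(
        f"step {step:>6}: "
        f"linear={linear_decay(step):.3f}  "
        f"exponential={exponential_decay(step):.3f}  "
        f"inverse_sigmoid={inverse_sigmoid_decay(step):.3f}"
    )
```

All three start at or near 1.0, so early training behaves like teacher forcing, and they differ mainly in how long they stay there. The inverse sigmoid form holds the ground-truth probability high the longest, which may suit models that are slow to produce usable predictions.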

Real-World Applications and Case Studies

Teacher forcing often accelerates training convergence in sequence-to-sequence models but can lead to exposure bias, reducing performance in real-world applications like speech recognition and machine translation. Scheduled sampling mitigates exposure bias by gradually blending predicted outputs into the inputs during training, and it has been applied to tasks such as dialogue generation and robotic control systems. Because it exposes the model to its own mistakes before deployment, scheduled sampling can improve robustness and generalization, which makes it a useful option for noisy, dynamic environments.

Future Trends and Research Directions

Future trends in machine learning emphasize hybrid approaches combining teacher forcing and scheduled sampling to improve sequence prediction models' robustness. Research directions focus on adaptive sampling rates that dynamically balance ground truth input and model-generated data to mitigate exposure bias. Advances in reinforcement learning and curriculum learning are expected to further optimize training schedules, enhancing long-term dependency learning in recurrent neural networks and transformers.

teacher forcing vs scheduled sampling Infographic

techiny.com
