Mini-batch gradient descent balances the efficiency of full-batch gradient descent with the noise-induced robustness of stochastic gradient descent by processing small subsets of data at each iteration, leading to faster convergence and improved generalization. Full-batch gradient descent computes gradients on the entire dataset, offering precise updates but often resulting in slower training and higher memory consumption. Selecting the optimal batch size depends on the specific dataset and computational resources, impacting training speed and model performance.

Table of Comparison

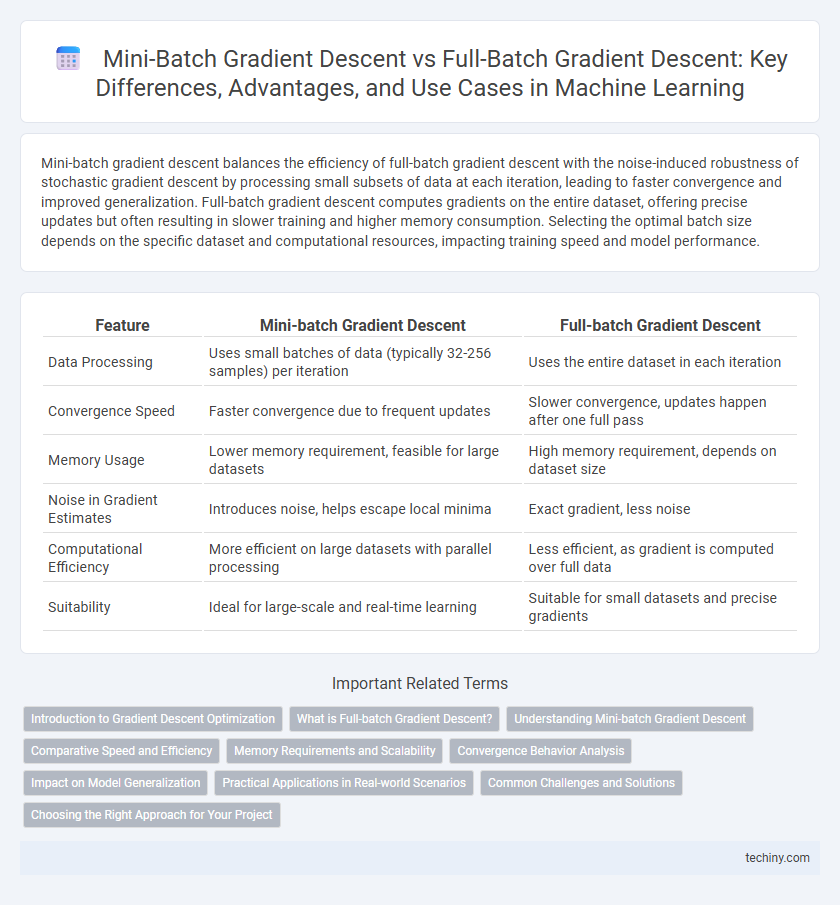

| Feature | Mini-batch Gradient Descent | Full-batch Gradient Descent |

|---|---|---|

| Data Processing | Uses small batches of data (typically 32-256 samples) per iteration | Uses the entire dataset in each iteration |

| Convergence Speed | Faster convergence due to frequent updates | Slower convergence, updates happen after one full pass |

| Memory Usage | Lower memory requirement, feasible for large datasets | High memory requirement, depends on dataset size |

| Noise in Gradient Estimates | Introduces noise, helps escape local minima | Exact gradient, less noise |

| Computational Efficiency | More efficient on large datasets with parallel processing | Less efficient, as gradient is computed over full data |

| Suitability | Ideal for large-scale and real-time learning | Suitable for small datasets and precise gradients |

Introduction to Gradient Descent Optimization

Gradient Descent optimization involves iteratively updating model parameters to minimize the loss function, with Mini-batch Gradient Descent using subsets of data for faster computation and reduced memory usage compared to Full-batch Gradient Descent, which processes the entire dataset at once for more stable but slower convergence. Mini-batch methods strike a balance between the noisy updates of Stochastic Gradient Descent and the computational intensity of Full-batch Gradient Descent, enhancing scalability for large datasets. Choosing between these methods depends on dataset size, available computational resources, and the desired convergence speed in training machine learning models.

What is Full-batch Gradient Descent?

Full-batch Gradient Descent calculates the gradient of the loss function using the entire training dataset in each iteration, ensuring accurate updates but often resulting in slower convergence and higher computational cost. This method is effective for smaller datasets where memory constraints are not an issue and precise gradient calculations can improve model stability. However, its inefficiency on large datasets limits its scalability compared to mini-batch approaches.

Understanding Mini-batch Gradient Descent

Mini-batch Gradient Descent optimizes model parameters by processing subsets of the training data, balancing between the noisy updates of stochastic gradient descent and the stability of full-batch gradient descent. This method significantly reduces computation time per iteration while maintaining convergence speed, making it ideal for large datasets in machine learning. Leveraging mini-batches enables parallel processing and better generalization, improving model accuracy and training efficiency.

Comparative Speed and Efficiency

Mini-batch Gradient Descent balances computational speed and convergence accuracy by processing smaller subsets of data, enabling faster iterations and more frequent updates compared to Full-batch Gradient Descent, which processes the entire dataset per update. Full-batch Gradient Descent offers stable and precise gradient estimates but suffers from slower training times and higher memory consumption, especially with large datasets. Mini-batch methods enhance efficiency by exploiting parallel hardware capabilities and reducing variance in gradient estimates, often leading to faster model convergence and better scalability in machine learning tasks.

Memory Requirements and Scalability

Mini-batch gradient descent significantly reduces memory requirements compared to full-batch gradient descent by processing smaller subsets of data, enabling efficient handling of large datasets that cannot fit entirely into memory. This approach enhances scalability, allowing models to be trained on distributed systems and GPUs with limited memory capacity. Full-batch gradient descent, while stable, is often impractical for massive datasets due to its high memory consumption and slower training iterations.

Convergence Behavior Analysis

Mini-batch Gradient Descent balances convergence speed and stability by updating parameters using subsets of data, reducing variance in gradient estimates compared to stochastic methods while promoting faster convergence than full-batch Gradient Descent. Full-batch Gradient Descent computes exact gradients over the entire dataset, resulting in stable but often slower convergence, particularly with large datasets due to computational overhead. The interplay between batch size and learning rate significantly influences convergence behavior, with mini-batch methods often finding a practical middle ground that enhances training efficiency and generalization.

Impact on Model Generalization

Mini-batch gradient descent often improves model generalization by introducing noise in gradient estimation, which helps escape local minima and prevents overfitting. Full-batch gradient descent uses the entire dataset for each update, leading to smoother convergence but increasing the risk of sharp minima and poorer generalization. The stochastic nature of mini-batches promotes better exploration of the loss landscape, enhancing the model's ability to generalize to unseen data.

Practical Applications in Real-world Scenarios

Mini-batch Gradient Descent accelerates training by processing subsets of data, making it ideal for large-scale datasets in applications like image recognition and natural language processing. Full-batch Gradient Descent uses the entire dataset to update model parameters, ensuring stable convergence but often becoming computationally expensive for big data tasks such as financial forecasting. Practical deployment prefers mini-batch methods for their balance between speed and accuracy in dynamic, real-time environments.

Common Challenges and Solutions

Mini-batch Gradient Descent faces challenges like noisy gradient estimates and potential convergence issues, mitigated by choosing appropriate batch sizes and adaptive learning rates such as Adam or RMSprop. Full-batch Gradient Descent struggles with high computational cost and slower updates, addressed by parallel processing and efficient hardware implementations like GPUs. Both methods require careful tuning of hyperparameters to balance convergence speed and model accuracy effectively.

Choosing the Right Approach for Your Project

Mini-batch gradient descent balances memory efficiency and convergence speed by processing small data subsets, making it ideal for large datasets and deep learning models. Full-batch gradient descent computes gradients using the entire dataset, providing stable updates but requiring significant computational resources, thus better suited for smaller datasets or when precise convergence is critical. Evaluating dataset size, available hardware, and model complexity is crucial to selecting between mini-batch and full-batch gradient descent for optimal training performance.

Mini-batch Gradient Descent vs Full-batch Gradient Descent Infographic

techiny.com

techiny.com