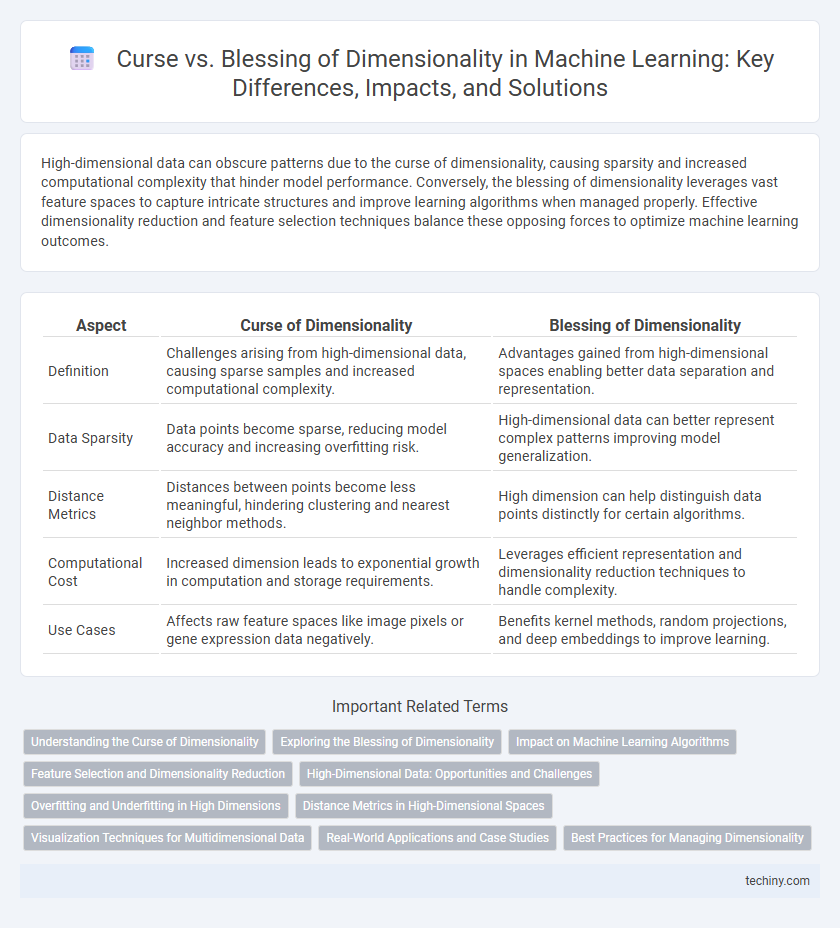

High-dimensional data can obscure patterns because of the curse of dimensionality: as features are added, samples become sparse and computation grows, which can hurt model performance. Conversely, the blessing of dimensionality describes how large feature spaces, when managed properly, can capture intricate structure and make learning easier. Effective dimensionality reduction and feature selection techniques balance these opposing forces to improve machine learning outcomes.

Comparison Table

| Aspect | Curse of Dimensionality | Blessing of Dimensionality |
|---|---|---|
| Definition | Challenges arising from high-dimensional data, such as sparse samples and increased computational cost. | Advantages of high-dimensional spaces, such as easier separation of classes and richer data representations. |
| Data Sparsity | Data points become sparse, which reduces model accuracy and increases the risk of overfitting. | High-dimensional data can represent complex patterns better, which can improve model generalization. |
| Distance Metrics | Distances between points become nearly equal, hindering clustering and nearest-neighbor methods. | For some algorithms, high dimensions make data points easier to tell apart, for example by making classes linearly separable. |
| Computational Cost | For grid- or partition-based methods, computation can grow exponentially with dimension, and storage grows with every added feature. | Efficient representations and dimensionality reduction techniques keep this complexity manageable. |
| Use Cases | Hurts learning directly on raw feature spaces such as image pixels or gene expression data. | Benefits kernel methods, random projections, and deep embeddings. |

Understanding the Curse of Dimensionality

The curse of dimensionality refers to how data become sparser and computation more demanding as the number of features grows: the volume of the feature space increases exponentially with dimension, so a fixed number of samples covers less and less of it, which makes models harder to train and less effective. In high-dimensional spaces, distances between points also tend to concentrate, so the nearest and farthest neighbors of a query end up at similar distances; this reduces the accuracy of clustering, classification, and nearest neighbor algorithms. Understanding this phenomenon is critical for feature selection, for dimensionality reduction techniques like PCA, and for designing models that generalize well despite high feature counts.
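
The loss of distance contrast can be checked numerically. The sketch below, assuming points drawn uniformly from the unit hypercube and plain Euclidean distance, measures the relative contrast (d_max - d_min) / d_min between one random query and 200 random points. `distance_contrast` is a hypothetical helper written for this illustration, not a library function.

```python
import math
import random


def distance_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """Relative contrast (d_max - d_min) / d_min between a random query and
    n_points samples drawn uniformly from the unit hypercube [0, 1]^dim."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))  # Euclidean distance
    return (max(dists) - min(dists)) / min(dists)


if __name__ == "__main__":
    for d in (2, 10, 100, 1000):
        print(f"d={d:5d}  relative contrast={distance_contrast(d):.3f}")
```

Running it should show the contrast dropping sharply as `d` grows: in two dimensions the nearest point is far closer than the farthest, while in a thousand dimensions all points sit at roughly the same distance. Exact values depend on the seed.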

Exploring the Blessing of Dimensionality

High-dimensional spaces in machine learning often reveal the Blessing of Dimensionality: data that cannot be separated in a few dimensions frequently become linearly separable once mapped into more dimensions, which can improve classification performance. Support vector machines with kernels exploit this by implicitly working in such higher-dimensional feature spaces. Exploiting this property requires careful feature selection and dimensionality-aware model design to harness the full predictive power of complex datasets.
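
A minimal illustration, assuming the classic XOR toy problem: four points that no straight line in the plane can separate become linearly separable once a third feature x1 * x2 is appended. `lift` and `linear_predict` are illustrative helpers; a kernel SVM achieves a similar effect implicitly instead of adding the feature by hand.

```python
# XOR-labelled points: no straight line in (x1, x2) separates the two classes.
POINTS = [((1.0, 1.0), 1), ((-1.0, -1.0), 1), ((1.0, -1.0), -1), ((-1.0, 1.0), -1)]


def lift(x1: float, x2: float) -> tuple[float, float, float]:
    """Map a 2-D point into 3-D by appending the product feature x1 * x2."""
    return (x1, x2, x1 * x2)


def linear_predict(w: tuple[float, ...], b: float, z: tuple[float, ...]) -> int:
    """Sign of the linear decision function w . z + b."""
    score = sum(wi * zi for wi, zi in zip(w, z)) + b
    return 1 if score >= 0 else -1


# In the lifted space the plane z3 = 0 (w = (0, 0, 1), b = 0) separates the classes.
W, B = (0.0, 0.0, 1.0), 0.0

if __name__ == "__main__":
    for (x1, x2), label in POINTS:
        print((x1, x2), "label:", label, "prediction:", linear_predict(W, B, lift(x1, x2)))
```

Every prediction matches its label, even though the classifier is purely linear in the lifted three-dimensional space.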

Impact on Machine Learning Algorithms

High-dimensional data significantly affects machine learning algorithms by increasing computational cost and the risk of overfitting, effects commonly grouped under the Curse of Dimensionality. Conversely, the Blessing of Dimensionality highlights that, with appropriate feature selection and regularization techniques, higher dimensions can improve model expressiveness and classification accuracy. Balancing dimensionality lets algorithms capture complex patterns while still generalizing to unseen data.
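
One way to quantify the harm to neighborhood-based algorithms such as k-nearest neighbors: for data spread uniformly over the unit hypercube, a sub-cube that captures a fraction r of the data needs an edge length of r^(1/d) along every axis. The uniform-data assumption is a textbook idealization, and `edge_length_for_fraction` is a hypothetical helper.

```python
def edge_length_for_fraction(fraction: float, dim: int) -> float:
    """Edge length of a sub-cube holding `fraction` of the volume of the unit
    hypercube [0, 1]^dim (i.e. that fraction of uniformly distributed data)."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    return fraction ** (1.0 / dim)


if __name__ == "__main__":
    for d in (1, 2, 10, 100):
        print(d, round(edge_length_for_fraction(0.1, d), 3))
```

With r = 0.1 the edge length rises from 0.1 in one dimension to about 0.79 in ten dimensions and about 0.98 in one hundred, so a "local" neighborhood ends up spanning almost the full range of every feature.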

Feature Selection and Dimensionality Reduction

High-dimensional feature spaces in machine learning often lead to the Curse of Dimensionality, where additional dimensions make data points sparse and hurt model generalization. Feature selection removes irrelevant or redundant attributes, mitigating overfitting and improving computational efficiency. Dimensionality reduction methods such as PCA transform the original features into a lower-dimensional representation that keeps most of the variance, which can improve model performance and interpretability; t-SNE also produces low-dimensional embeddings, but these are mainly used for visualization rather than as model inputs.
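
A simple filter-style feature selection can be sketched with the standard library, assuming the data are stored column-wise as lists of floats. It drops near-constant columns (treated as irrelevant) and columns whose absolute Pearson correlation with an already kept column exceeds a threshold (treated as redundant). The function names and both thresholds are illustrative assumptions, not values from the article.

```python
import math
import statistics


def pearson(a: list[float], b: list[float]) -> float:
    """Pearson correlation of two equal-length columns (0.0 if either is constant)."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return 0.0 if sa == 0 or sb == 0 else cov / (sa * sb)


def select_features(columns: list[list[float]], min_var: float = 1e-8,
                    max_abs_corr: float = 0.95) -> list[int]:
    """Return indices of kept columns: skip near-constant columns, then skip any
    column highly correlated with one that was already kept."""
    kept: list[int] = []
    for j, col in enumerate(columns):
        if statistics.pvariance(col) <= min_var:
            continue  # carries (almost) no information
        if any(abs(pearson(col, columns[k])) > max_abs_corr for k in kept):
            continue  # near-duplicate of a feature we already kept
        kept.append(j)
    return kept


if __name__ == "__main__":
    x = [0.1, 0.4, 0.35, 0.8, 0.6]
    cols = [x,                        # informative
            [1.0] * 5,                # constant, dropped
            [2 * v + 1 for v in x],   # linear copy of x, dropped
            [0.9, 0.1, 0.5, 0.3, 0.7]]
    print(select_features(cols))
```

Here only columns 0 and 3 survive. Filter methods like this are cheap because they ignore the model; wrapper and embedded methods trade extra computation for selections tuned to a specific model.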

High-Dimensional Data: Opportunities and Challenges

High-dimensional data presents both significant challenges and opportunities in machine learning, as the Curse of Dimensionality leads to sparsity and increased computational complexity that can degrade model performance. However, the Blessing of Dimensionality emerges when high dimensions reveal underlying data structures, enabling more powerful representations and improved generalization through techniques like manifold learning and feature extraction. Effective handling of high-dimensional data requires balancing dimensionality reduction methods with advanced algorithms that exploit intrinsic data geometry for optimal predictive accuracy.

Overfitting and Underfitting in High Dimensions

High-dimensional spaces often exacerbate the curse of dimensionality, where sparse data leads to overfitting as models capture noise instead of true patterns. Conversely, the blessing of dimensionality can emerge when added features increase model capacity, reducing underfitting by enabling better representation of complex data structures. Balancing feature selection and regularization is crucial to mitigate overfitting while leveraging high-dimensional data advantages in machine learning.
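
Polynomial feature expansion is used below as an assumed example of adding features to raise capacity. The number of monomials of total degree at most k in d inputs, including the constant term, is C(d + k, k), so capacity grows quickly. Comparing that count with the number of training samples is only a rough warning sign: a linear model with more coefficients than samples can generically fit the training set exactly, which is where regularization becomes essential. Both helper names are hypothetical.

```python
from math import comb


def n_polynomial_features(n_inputs: int, degree: int) -> int:
    """Number of monomials of total degree <= `degree` in `n_inputs` variables,
    including the constant term: C(n_inputs + degree, degree)."""
    return comb(n_inputs + degree, degree)


def more_features_than_samples(n_inputs: int, degree: int, n_samples: int) -> bool:
    """Rough overfitting warning: the expanded linear model has more
    coefficients than there are training samples."""
    return n_polynomial_features(n_inputs, degree) > n_samples


if __name__ == "__main__":
    n_samples = 10_000
    for n_inputs, degree in [(2, 2), (10, 3), (100, 3)]:
        p = n_polynomial_features(n_inputs, degree)
        print(n_inputs, degree, p, more_features_than_samples(n_inputs, degree, n_samples))
```

Two inputs at degree 2 give 6 features (1, x1, x2, x1², x1·x2, x2²), while a cubic expansion of 100 inputs already gives C(103, 3) = 176,851, far more than 10,000 samples.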

Distance Metrics in High-Dimensional Spaces

Distance metrics behave counterintuitively in high-dimensional spaces: under the Curse of Dimensionality, distances between points become nearly indistinguishable, which reduces the effectiveness of traditional distance-based algorithms. Conversely, the Blessing of Dimensionality can be put to work through feature extraction and dimensionality reduction techniques that keep distance calculations meaningful, improving model performance. Understanding the balance between these phenomena is critical for choosing similarity measures and obtaining robust machine learning results on high-dimensional datasets.
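
Random projection, one of the techniques listed in the comparison table, is a concrete case: by the Johnson-Lindenstrauss lemma, multiplying by a suitably scaled Gaussian random matrix approximately preserves pairwise Euclidean distances while cutting the dimension. The sketch below assumes entries drawn from N(0, 1/k) and uses illustrative function names; it is a teaching version, not an optimized implementation.

```python
import math
import random


def random_projection(points: list[list[float]], k: int, seed: int = 0) -> list[list[float]]:
    """Project points from D dimensions to k using a Gaussian random matrix with
    N(0, 1/k) entries, so squared lengths are preserved in expectation."""
    rng = random.Random(seed)
    dim = len(points[0])
    scale = 1.0 / math.sqrt(k)
    # k x D projection matrix, one row per output coordinate
    R = [[rng.gauss(0.0, 1.0) * scale for _ in range(dim)] for _ in range(k)]
    return [[sum(r_j * x_j for r_j, x_j in zip(row, x)) for row in R] for x in points]


def distance_ratios(high: list[list[float]], low: list[list[float]]) -> list[float]:
    """Ratio of projected to original Euclidean distance for every pair of points."""
    n = len(high)
    return [math.dist(low[i], low[j]) / math.dist(high[i], high[j])
            for i in range(n) for j in range(i + 1, n)]


if __name__ == "__main__":
    rng = random.Random(1)
    X = [[rng.random() for _ in range(500)] for _ in range(10)]
    Y = random_projection(X, k=200)
    ratios = distance_ratios(X, Y)
    print(f"min ratio={min(ratios):.3f}, max ratio={max(ratios):.3f}")
```

The ratios should cluster close to 1, meaning the 200-dimensional copy keeps the distance structure of the 500-dimensional data; a larger `k` tightens the spread further.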

Visualization Techniques for Multidimensional Data

Visualization techniques for multidimensional data address the curse of dimensionality by projecting the data into two or three dimensions. PCA keeps the directions of greatest variance, while t-SNE and UMAP focus on preserving local neighborhood structure. The resulting 2D or 3D views make clusters and other patterns easier to inspect, although any such projection loses some of the relationships present in the original space. These tools give clearer insight in machine learning applications where large feature sets make direct analysis difficult.
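
Of the three methods, PCA is the one small enough to sketch with the standard library. The version below, an illustrative assumption rather than a reference implementation, centers the data, builds the sample covariance matrix, and finds the top two principal components by power iteration with deflation, returning 2D coordinates ready for plotting.

```python
import math
import random


def pca_2d(rows: list[list[float]], iters: int = 200, seed: int = 0) -> list[tuple[float, ...]]:
    """Project rows onto their top two principal components, found by power
    iteration with deflation on the sample covariance matrix."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    X = [[r[j] - means[j] for j in range(d)] for r in rows]  # center each column
    cov = [[sum(x[a] * x[b] for x in X) / (n - 1) for b in range(d)] for a in range(d)]
    rng = random.Random(seed)
    components = []
    for _ in range(2):
        v = [rng.random() for _ in range(d)]
        for _ in range(iters):
            w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(t * t for t in w))
            if norm == 0.0:
                break  # remaining covariance is zero; keep the current direction
            v = [t / norm for t in w]
        # Rayleigh quotient v^T C v gives the eigenvalue of the found direction
        lam = sum(v[a] * cov[a][b] * v[b] for a in range(d) for b in range(d))
        # Deflate so the next pass converges to the next-largest component
        cov = [[cov[a][b] - lam * v[a] * v[b] for b in range(d)] for a in range(d)]
        components.append(v)
    # Coordinates of each centered row along the two components
    return [tuple(sum(x[j] * c[j] for j in range(d)) for c in components) for x in X]
```

The returned pairs can be passed straight to a scatter plot. In practice, `sklearn.decomposition.PCA(n_components=2)` does the same job more robustly, and `sklearn.manifold.TSNE` or the `umap-learn` package provide the neighborhood-preserving alternatives.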

Real-World Applications and Case Studies

High-dimensional data in machine learning often faces the curse of dimensionality, where increased features lead to sparse data and degraded model performance, particularly in image recognition and genomic data analysis. However, the blessing of dimensionality emerges in applications like natural language processing and recommendation systems, where richer feature spaces enhance model accuracy and capture complex patterns. Case studies in healthcare analytics demonstrate that careful feature selection and dimensionality reduction techniques can mitigate the curse while leveraging the benefits of high-dimensional data.

Best Practices for Managing Dimensionality

Managing dimensionality in machine learning relies on feature selection, dimensionality reduction algorithms such as PCA (with t-SNE and UMAP used mainly for visual exploration), and regularization methods to mitigate the curse of dimensionality. High-dimensional data can improve model performance when its intrinsic structure is preserved, which is the blessing of dimensionality in practice. Careful preprocessing and validation strategies help ensure that models generalize by balancing complexity and interpretability in high-dimensional spaces.
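
As one example of a validation strategy, k-fold cross-validation can be sketched as a function that shuffles sample indices and yields train/validation splits. The function name and the round-robin fold assignment are illustrative choices, not taken from any particular library.

```python
import random


def k_fold_indices(n_samples: int, k: int = 5, seed: int = 0) -> list[tuple[list[int], list[int]]]:
    """Shuffle sample indices, split them into k folds, and return one
    (train_indices, validation_indices) pair per fold."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # round-robin: fold sizes differ by at most 1
    splits = []
    for i, val in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        splits.append((train, val))
    return splits


if __name__ == "__main__":
    for train, val in k_fold_indices(10, k=3):
        print(len(train), sorted(val))
```

Within each split, fit feature selection, PCA, or any other dimensionality reduction on the training indices only and then apply it to the validation indices; fitting it on the full dataset first leaks information into validation and makes the scores optimistic.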

Curse of Dimensionality vs Blessing of Dimensionality Infographic

techiny.com
