Global minima represent the absolute lowest point of a loss function, ensuring the most optimal solution in machine learning models. Local minima, by contrast, are points where the loss function reaches a minimum relative to its neighbors but are not the lowest possible value globally. Navigating the loss landscape effectively is critical to avoid local minima and achieve superior model performance through global minima.

Table of Comparison

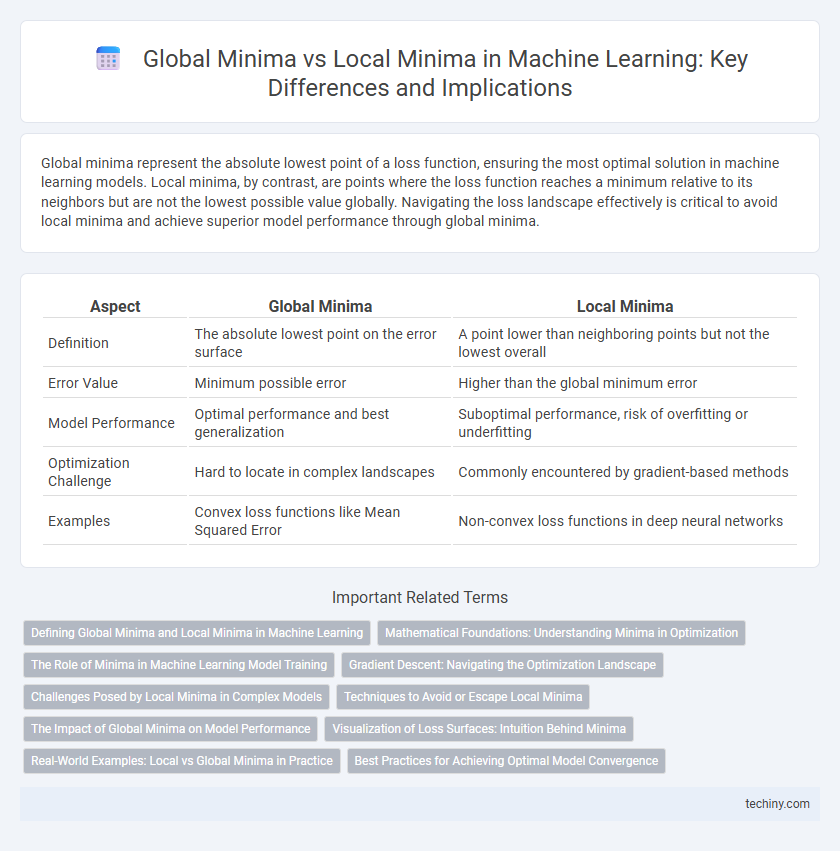

| Aspect | Global Minima | Local Minima |

|---|---|---|

| Definition | The absolute lowest point on the error surface | A point lower than neighboring points but not the lowest overall |

| Error Value | Minimum possible error | Higher than the global minimum error |

| Model Performance | Optimal performance and best generalization | Suboptimal performance, risk of overfitting or underfitting |

| Optimization Challenge | Hard to locate in complex landscapes | Commonly encountered by gradient-based methods |

| Examples | Convex loss functions like Mean Squared Error | Non-convex loss functions in deep neural networks |

Defining Global Minima and Local Minima in Machine Learning

Global minima in machine learning refer to points in the loss function landscape where the function attains its absolute lowest value, representing the most optimal solution for model parameters. Local minima are points where the loss function value is lower than neighboring points but not the absolute lowest, potentially leading to suboptimal model performance. Identifying global minima is crucial for achieving the best generalization and accuracy in machine learning models.

Mathematical Foundations: Understanding Minima in Optimization

Global minima represent the absolute lowest point on an optimization landscape, ensuring the most optimal solution in machine learning loss functions, while local minima are points where the function value is lower than neighboring points but not the absolute lowest. Understanding the mathematical properties of these minima involves analyzing gradient behaviors, Hessian matrices, and convexity of the cost function, which determine convergence characteristics during training. Techniques such as stochastic gradient descent and second-order methods help navigate complex loss surfaces to approximate or reach global minima, improving model accuracy and generalization.

The Role of Minima in Machine Learning Model Training

Global minima represent the absolute lowest point in the loss landscape, ensuring the best possible model performance by minimizing prediction error across the entire dataset. Local minima, while not the optimal solution, can still provide sufficiently low loss values, enabling faster convergence during training and preventing overfitting in some cases. Efficient optimization algorithms like gradient descent aim to navigate the complex loss surface to reach these minima, balancing the trade-off between accuracy and computational feasibility in machine learning model training.

Gradient Descent: Navigating the Optimization Landscape

Gradient Descent is a fundamental optimization algorithm in machine learning used to minimize the loss function by iteratively updating model parameters. It aims to find the global minimum, the absolute lowest point on the loss surface, but often converges to local minima due to non-convexity in complex models like deep neural networks. Techniques such as learning rate scheduling, momentum, and stochastic gradients help navigate the optimization landscape, improving the chances of escaping local minima and achieving better model performance.

Challenges Posed by Local Minima in Complex Models

Local minima in complex machine learning models create significant optimization challenges by trapping gradient-based algorithms, preventing convergence to the global minimum. These suboptimal points hinder model performance by producing higher error rates and unstable predictions. Overcoming local minima often requires advanced techniques such as stochastic optimization, adaptive learning rates, or ensemble methods to navigate the non-convex loss landscape effectively.

Techniques to Avoid or Escape Local Minima

Techniques to avoid or escape local minima in machine learning include using stochastic gradient descent (SGD) with momentum, which helps traverse shallow valleys by adding inertia to parameter updates. Another effective method is incorporating learning rate schedules or adaptive learning rates through optimizers like Adam or RMSprop, allowing the model to adjust step sizes dynamically and bypass deceptive local minima. Regularization methods such as dropout and batch normalization also aid in smoothing the loss landscape, reducing the likelihood of the model getting trapped in local minima.

The Impact of Global Minima on Model Performance

Global minima represent the absolute lowest points in the loss landscape, ensuring optimal model parameters that minimize error across the entire dataset. Achieving a global minimum typically results in superior generalization and robust performance on unseen data, reducing the risk of overfitting. In contrast, converging to local minima may trap the model in suboptimal solutions, leading to higher training and validation errors.

Visualization of Loss Surfaces: Intuition Behind Minima

Visualizing loss surfaces in machine learning reveals the complex topology of optimization landscapes, where global minima represent the absolute lowest points and local minima are suboptimal valleys. Intuition behind these minima helps in understanding why gradient descent may get trapped in local minima instead of reaching the global minimum. Tools like contour plots and 3D surface maps provide critical insight into the shape of loss functions, enabling better interpretation of convergence behavior during training.

Real-World Examples: Local vs Global Minima in Practice

In machine learning, training neural networks often encounters local minima where optimization algorithms settle but fail to reach the global minimum that represents the best solution. Real-world examples include image recognition tasks where local minima cause suboptimal model accuracy, while global minima achieve more precise feature extraction. Techniques like stochastic gradient descent and simulated annealing help escape local minima, thus improving performance by guiding models toward global minima in complex loss landscapes.

Best Practices for Achieving Optimal Model Convergence

Selecting appropriate optimization algorithms like Adam or RMSprop helps navigate complex loss landscapes and avoid entrapment in local minima, promoting convergence towards the global minimum. Employing techniques such as learning rate scheduling, batch normalization, and gradient clipping enhances training stability and mitigates issues like vanishing or exploding gradients. Regularizing models through dropout and early stopping further prevents overfitting, ensuring robust convergence to an optimal solution with better generalization.

global minima vs local minima Infographic

techiny.com

techiny.com