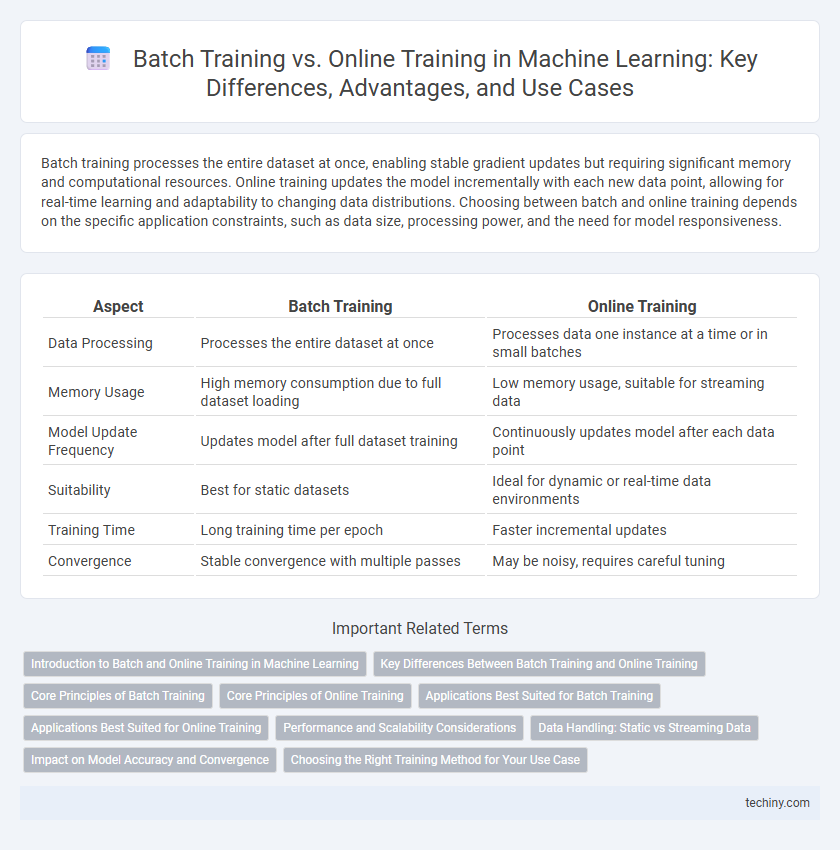

Batch training processes the entire dataset at once, enabling stable gradient updates but requiring significant memory and computational resources. Online training updates the model incrementally with each new data point, allowing for real-time learning and adaptability to changing data distributions. Choosing between batch and online training depends on the specific application constraints, such as data size, processing power, and the need for model responsiveness.

Comparison Table

| Aspect | Batch Training | Online Training |
|---|---|---|
| Data Processing | Processes the entire dataset at once | Processes data one instance at a time or in small batches |
| Memory Usage | High, since the full dataset is loaded | Low, suitable for streaming data |
| Model Update Frequency | Updates the model once per full pass over the dataset | Updates the model continuously, after each data point |
| Suitability | Best for static datasets | Ideal for dynamic or real-time data environments |
| Training Time | Long training time per epoch | Fast incremental updates |
| Convergence | Stable, over multiple passes | Can be noisy; requires careful tuning (e.g., of the learning rate) |

Introduction to Batch and Online Training in Machine Learning

Batch training in machine learning processes the entire dataset at once to update model parameters, which yields the exact gradient of the training loss at every step and therefore stable updates, but it requires substantial memory and computational resources. Online training updates the model incrementally with each new data point, enabling real-time learning and adaptability at the cost of noisier gradient updates. Selecting between batch and online training depends on dataset size, computational capacity, and the need for immediate model updates in dynamic environments.
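In gradient-descent terms, the distinction comes down to which gradient each update uses. As a sketch, writing $\theta$ for the model parameters, $\eta$ for the learning rate, and $\ell_i$ for the loss on example $i$ of $N$ (notation introduced here for illustration, not taken from a specific source):

$$
\text{Batch:}\quad \theta \leftarrow \theta - \frac{\eta}{N}\sum_{i=1}^{N} \nabla_\theta\, \ell_i(\theta)
\qquad
\text{Online:}\quad \theta \leftarrow \theta - \eta\, \nabla_\theta\, \ell_t(\theta)
$$

The batch rule makes one update per pass, using the exact average gradient over all $N$ examples. The online rule makes one update per arriving example $t$; if examples are drawn uniformly at random from the training set, the single-example gradient is an unbiased estimate of that average, but its variance makes individual steps noisy.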

Key Differences Between Batch Training and Online Training

Batch training processes the entire dataset simultaneously and optimizes model parameters in bulk, which produces stable but infrequent updates suited to static data. Online training updates the model incrementally with each new data point, enabling faster adaptation to changing data distributions but potentially causing less stable convergence. The choice between batch and online training hinges on factors like dataset size, computational resources, and the need for real-time model updating.

Core Principles of Batch Training

Batch training in machine learning processes the entire dataset for each parameter update, which makes convergence stable and predictable. This approach relies on epochs: each epoch is one full pass over the dataset, and repeated epochs refine the model with aggregated gradients that are less noisy than incremental, per-example updates. Batch training is especially effective for large, static datasets when computational resources permit extensive processing before deployment.
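A minimal sketch of this loop, assuming a one-feature linear model $y \approx wx + b$ trained on mean squared error (the model, data, learning rate, and epoch count are illustrative choices, not from the source). Each epoch accumulates the gradient over every example and then applies a single update:

```python
def batch_train(xs, ys, lr=0.1, epochs=200):
    """Full-batch gradient descent for y ~ w*x + b (illustrative sketch)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Accumulate mean-squared-error gradients over ALL examples.
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = (w * x + b) - y
            grad_w += 2 * err * x
            grad_b += 2 * err
        # Exactly one parameter update per epoch, using the averaged gradient.
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated by y = 2x + 1
w, b = batch_train(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")
```

Because every update sees all five points, the trajectory is deterministic; with this data the parameters approach $w = 2$, $b = 1$.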

Core Principles of Online Training

Online training processes data sequentially, updating model parameters incrementally with each new sample. This approach enables models to adapt continuously to evolving data distributions, making it ideal for real-time applications and streaming data scenarios. Core principles include computational efficiency, low-latency updates, and the ability to handle non-stationary environments without requiring access to the entire dataset.
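The sketch below shows an online counterpart under the same kind of illustrative linear model. The stream is a hypothetical generator (`data_stream`) producing noisy samples of $y = 2x + 1$; each sample triggers one stochastic-gradient update and is then discarded, so memory use does not grow with the number of examples:

```python
import random


def data_stream(n, seed=0):
    """Hypothetical stream: yields (x, y) from y = 2x + 1 plus small noise, one at a time."""
    rng = random.Random(seed)
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        yield x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.05)


def online_train(stream, lr=0.05):
    """Online SGD: one update per incoming example, no stored history."""
    w, b = 0.0, 0.0
    for x, y in stream:
        # Gradient of the squared error on THIS example only.
        err = (w * x + b) - y
        # Update immediately; the example can then be discarded.
        w -= lr * 2 * err * x
        b -= lr * 2 * err
    return w, b


w, b = online_train(data_stream(5000))
print(f"w={w:.2f}, b={b:.2f}")
```

With a constant learning rate the estimates keep fluctuating slightly around $w = 2$, $b = 1$ rather than settling exactly; that residual noise is the trade-off for learning from data that never has to fit in memory.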

Applications Best Suited for Batch Training

Batch training excels in applications where large datasets are available and consistent performance is crucial, such as image recognition, natural language processing, and fraud detection. It effectively handles complex models requiring extensive computational resources and multiple data passes to improve accuracy. Industries relying on periodic model updates rather than real-time learning, including finance and healthcare diagnostics, benefit significantly from batch training methodologies.

Applications Best Suited for Online Training

Real-time applications such as fraud detection, recommendation systems, and autonomous driving benefit significantly from online training due to its ability to continuously update models with streaming data. Online training excels in scenarios requiring rapid adaptation to changing data distributions, enabling models to maintain accuracy without retraining on entire datasets. This approach is ideal for environments with large-scale, dynamic data where immediate feedback and model refinement are critical for performance.
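To illustrate adaptation to a changing distribution, the sketch uses the simplest possible model, a single number $m$ that predicts the mean of the stream. The hypothetical `drifting_stream` jumps from mean 0 to mean 5 halfway through. The batch model is fit once on pre-drift history; the online model applies one gradient step on $\tfrac{1}{2}(m - y)^2$ per sample with a constant learning rate (an illustrative choice):

```python
import random


def drifting_stream(n, shift_at, seed=1):
    """Hypothetical stream whose mean jumps from 0.0 to 5.0 at index shift_at."""
    rng = random.Random(seed)
    for t in range(n):
        mean = 0.0 if t < shift_at else 5.0
        yield mean + rng.gauss(0.0, 1.0)


def batch_fit_mean(values):
    # Batch model: fit once on a fixed historical dataset.
    return sum(values) / len(values)


def online_fit_mean(stream, lr=0.05):
    # Online SGD on the loss 0.5 * (m - y)**2, whose gradient is (m - y).
    m = 0.0
    for y in stream:
        m -= lr * (m - y)
    return m


history = list(drifting_stream(1000, shift_at=1000))  # pre-drift data only
batch_model = batch_fit_mean(history)
online_model = online_fit_mean(drifting_stream(2000, shift_at=1000))
print(f"batch: {batch_model:.2f}, online: {online_model:.2f}")
```

The batch estimate stays near 0 until it is retrained on recent data, while the online estimate ends near 5. With a constant learning rate the online update behaves like an exponential moving average that weights recent samples more heavily, which is what lets it track the drift.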

Performance and Scalability Considerations

Batch training processes the entire dataset at once, which lets it exploit optimized matrix operations and can yield more accurate models, but it requires substantial memory and longer training times. Online training updates the model incrementally with each data point, enabling real-time adaptation and better scalability for large or streaming datasets while reducing memory consumption. Batch training benefits from parallel processing on GPUs, whereas online training excels in scenarios demanding continuous learning and rapid response to data changes.

Data Handling: Static vs Streaming Data

Batch training works on static datasets that are fully available in advance, processing the data all at once or in fixed-size mini-batches so the model learns from a comprehensive snapshot of the information. Online training handles streaming data continuously, updating the model incrementally with each new data point to adapt to evolving patterns in real time. The choice between batch and online training hinges on the nature of the data source and the need for immediate learning versus thorough analysis.
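The difference in data handling can be sketched with Python iterators. In this illustration (the function names are hypothetical), a static dataset is reshuffled and cut into fixed-size batches because its full length is known, while a stream, represented by the unbounded `itertools.count()`, is consumed one item at a time and summarized by a running mean that stands in for a model's state:

```python
import random
from itertools import count, islice


def epoch_batches(dataset, batch_size, seed=0):
    """Static data: shuffle the full in-memory dataset, then cut it into fixed-size batches."""
    order = list(dataset)
    random.Random(seed).shuffle(order)
    return [order[i:i + batch_size] for i in range(0, len(order), batch_size)]


def running_mean(stream, max_items):
    """Streaming data: update state item by item; only a counter and the mean are kept."""
    n, mean = 0, 0.0
    for x in islice(stream, max_items):  # cap only so this demo terminates
        n += 1
        mean += (x - mean) / n  # incremental update, no stored history
    return mean


batches = epoch_batches(range(10), batch_size=4)
print([len(batch) for batch in batches])  # [4, 4, 2]
print(running_mean(count(), 101))         # 50.0, the mean of 0..100
```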

Impact on Model Accuracy and Convergence

Because each batch update uses the gradient over the entire dataset, batch training produces stable updates and often higher model accuracy, but it can converge slowly on large datasets, where every update requires a full pass over the data. Online training updates model parameters incrementally with each data point, enabling faster convergence and adaptability to changing data distributions, but its noisier gradients can reduce accuracy. The choice between batch and online training depends on dataset size, real-time requirements, and the need for precise convergence in machine learning models.
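The noise in online updates can be made concrete. Assuming a one-parameter model $\hat{y} = wx$ with squared error and a small illustrative dataset, the sketch computes the gradient for each example separately. Their average is exactly the full-batch gradient, while the individual values, which are what single online steps would use, scatter widely around it:

```python
import statistics


def per_example_grads(w, xs, ys):
    """Gradient of (w*x - y)**2 with respect to w, computed for each example separately."""
    return [2 * (w * x - y) * x for x, y in zip(xs, ys)]


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]  # roughly y = 2x (illustrative data)
w = 2.0                           # current parameter value, close to the optimum

grads = per_example_grads(w, xs, ys)
full_batch_grad = sum(grads) / len(grads)  # what a batch update uses
spread = statistics.pstdev(grads)          # how much single-example (online) steps vary

print(f"full-batch gradient: {full_batch_grad:.2f}")
print(f"per-example gradients: {[round(g, 2) for g in grads]}")
print(f"std of per-example gradients: {spread:.2f}")
```

Near the optimum, the full-batch gradient is close to zero, yet single-example gradients are larger in magnitude and disagree in sign, which is why online training typically needs a smaller or decaying learning rate to converge precisely.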

Choosing the Right Training Method for Your Use Case

Batch training processes the entire dataset at once, making it ideal for large, static datasets where model updates occur infrequently. Online training updates the model incrementally with each new data point, which suits real-time applications and streaming data environments. Selecting the right training method depends on factors like data volume, latency requirements, and computational resources to optimize model performance and training efficiency.

Batch Training vs Online Training Infographic

techiny.com
