Ridge Regression applies L2 regularization, which shrinks coefficients towards zero but never exactly zero, making it effective for handling multicollinearity and retaining all features in the model. Lasso Regression uses L1 regularization, which can shrink some coefficients to exactly zero, enabling feature selection by effectively excluding less important variables. Choosing between Ridge and Lasso depends on whether the goal is coefficient shrinkage with all features retained or sparse models with automatic feature selection.

Table of Comparison

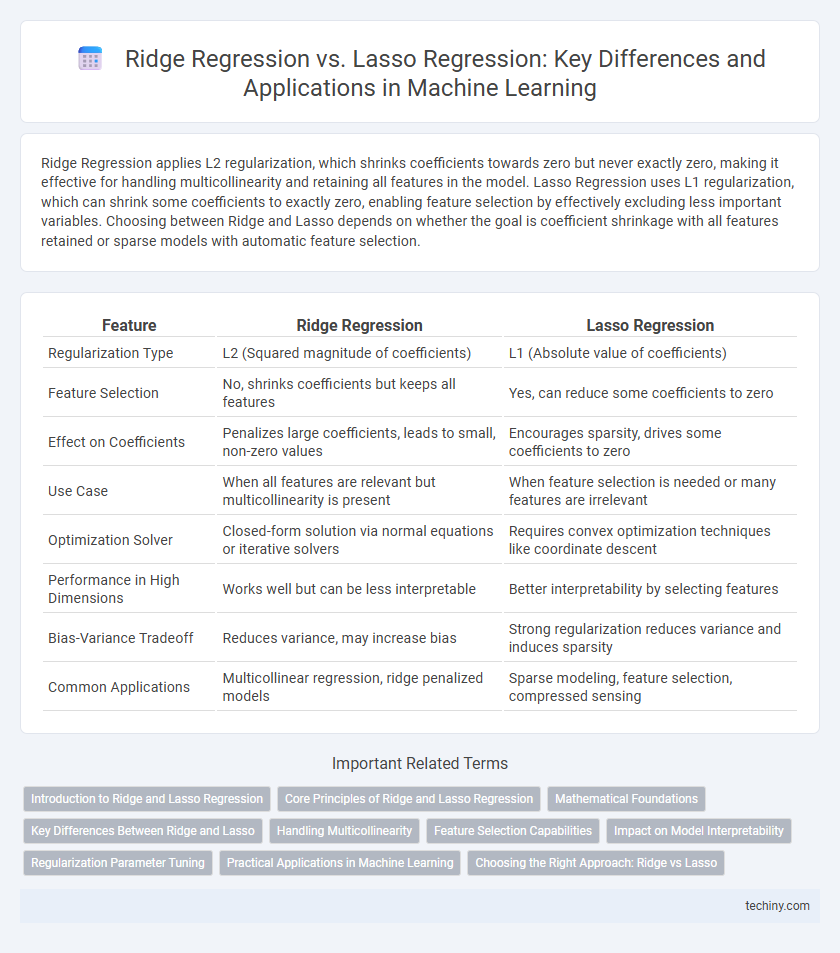

| Feature | Ridge Regression | Lasso Regression |
|---|---|---|
| Regularization Type | L2 (sum of squared coefficients) | L1 (sum of absolute values of coefficients) |
| Feature Selection | No, shrinks coefficients but keeps all features | Yes, can reduce some coefficients to exactly zero |
| Effect on Coefficients | Penalizes large coefficients, leads to small, non-zero values | Encourages sparsity, drives some coefficients to zero |
| Use Case | When all features are relevant but multicollinearity is present | When feature selection is needed or many features are irrelevant |
| Optimization Solver | Closed-form solution via regularized normal equations, or iterative solvers | No closed form in general; iterative convex solvers such as coordinate descent |
| Performance in High Dimensions | Works well but can be less interpretable | Better interpretability by selecting features |
| Bias-Variance Tradeoff | Reduces variance, may increase bias | Reduces variance, may increase bias, and induces sparsity |
| Common Applications | Regression with multicollinear predictors, many small effects | Sparse modeling, feature selection, compressed sensing |

Introduction to Ridge and Lasso Regression

Ridge regression introduces L2 regularization, adding a penalty proportional to the sum of the squared coefficients to the loss function, which shrinks the coefficients and helps prevent overfitting while retaining all variables. Lasso regression applies L1 regularization by adding a penalty proportional to the sum of the absolute values of the coefficients, promoting sparsity and performing feature selection by driving some coefficients to zero. Both techniques mitigate the effects of multicollinearity and improve model generalization in high-dimensional machine learning scenarios.

Core Principles of Ridge and Lasso Regression

Ridge regression applies L2 regularization by adding the squared magnitude of coefficients to the loss function, effectively shrinking coefficients toward zero without eliminating any, which reduces model complexity and stabilizes estimates when predictors are correlated. Lasso regression incorporates L1 regularization by adding the absolute values of coefficients to the loss function, promoting sparsity by driving some coefficients exactly to zero, thus performing feature selection. Both techniques improve model generalization by penalizing large coefficients but differ in their impact on coefficient shrinkage and variable selection.

Mathematical Foundations

Ridge Regression minimizes the sum of squared residuals plus an L2 penalty, shrinking coefficients toward zero but not exactly to zero, using the objective $\|y - X\beta\|_2^2 + \lambda\|\beta\|_2^2$. Lasso Regression replaces the penalty with an L1 term, minimizing $\|y - X\beta\|_2^2 + \lambda\|\beta\|_1$, which enables coefficient sparsity by driving some coefficients exactly to zero, effectively performing variable selection. Here $\beta$ is the coefficient vector and $\lambda \ge 0$ controls the penalty strength. Both objectives are convex; Ridge penalizes the squared Euclidean norm of the coefficients, while Lasso penalizes their Manhattan (L1) norm, and this difference in penalty geometry is what determines feature selection and model complexity.
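To make the two penalties concrete, the following worked equations may help. They write the coefficient vector as $\beta$ and the regularization strength as $\lambda \ge 0$. The second pair of formulas assumes an orthonormal design ($X^\top X = I$), which is an illustrative simplification rather than something the text above requires.

$$
\hat\beta^{\text{ridge}} = \arg\min_{\beta}\; \|y - X\beta\|_2^2 + \lambda\|\beta\|_2^2 = (X^\top X + \lambda I)^{-1} X^\top y
$$

$$
\hat\beta^{\text{lasso}} = \arg\min_{\beta}\; \|y - X\beta\|_2^2 + \lambda\|\beta\|_1 \quad \text{(no closed form in general)}
$$

Under the orthonormal-design assumption, with $z = X^\top y$, both problems separate by coordinate:

$$
\hat\beta^{\text{ridge}}_j = \frac{z_j}{1 + \lambda}, \qquad
\hat\beta^{\text{lasso}}_j = \operatorname{sign}(z_j)\,\max\!\left(|z_j| - \tfrac{\lambda}{2},\; 0\right)
$$

Ridge rescales every coordinate by the same factor and so never reaches zero for $z_j \neq 0$, whereas Lasso subtracts a fixed amount and sets any coordinate with $|z_j| \le \lambda/2$ exactly to zero.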

Key Differences Between Ridge and Lasso

Ridge Regression applies L2 regularization by adding the squared magnitude of coefficients to the loss function, which helps in shrinking coefficients but does not eliminate them, making it suitable for handling multicollinearity. Lasso Regression uses L1 regularization, adding the absolute values of coefficients to the loss, which can shrink some coefficients to exactly zero, enabling feature selection and producing sparse models. The key difference lies in their impact on model complexity and interpretability: Ridge stabilizes coefficients without feature elimination, while Lasso performs both shrinkage and variable selection.
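The contrast between shrinkage and elimination can be seen with a single coefficient. The sketch below is a minimal illustration, not a full regression: it assumes a one-dimensional problem where `z` stands for the unpenalized estimate, and the function names `ridge_1d` and `lasso_1d` are made up for this example.

```python
def ridge_1d(z, lam):
    """Minimizer of (z - b)**2 + lam * b**2 over b: proportional shrinkage."""
    return z / (1.0 + lam)


def lasso_1d(z, lam):
    """Minimizer of (z - b)**2 + lam * abs(b) over b: soft-thresholding."""
    t = lam / 2.0
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0  # small inputs are set exactly to zero


lam = 1.0
for z in (3.0, 1.0, 0.4, -0.2):
    print(f"z={z:+.1f}  ridge={ridge_1d(z, lam):+.3f}  lasso={lasso_1d(z, lam):+.3f}")
```

With `lam = 1.0`, Ridge halves every input and keeps it non-zero, while Lasso subtracts 0.5 from large inputs and maps any input with magnitude at most 0.5 to exactly zero.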

Handling Multicollinearity

Ridge Regression effectively addresses multicollinearity by applying L2 regularization, which shrinks coefficient estimates without forcing them to zero, thus stabilizing the model when predictors are highly correlated. Lasso Regression uses L1 regularization, promoting sparsity by driving some coefficients to zero, which performs feature selection but tends to keep one variable from a group of correlated predictors and drop the rest, and which variable survives can be unstable. In scenarios with strong multicollinearity, Ridge often outperforms Lasso by retaining all correlated variables with reduced magnitude, while Lasso simplifies the model by excluding features it treats as redundant.
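A small numeric sketch can show the stabilizing effect. It uses made-up data with two almost identical predictors and no intercept, and solves the two-feature regularized normal equations directly; the helper names (`solve_2x2`, `fit_two_features`) and the choice `lam=1.0` are assumptions for illustration only.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def solve_2x2(a, b, c, d, r1, r2):
    """Solve [[a, b], [c, d]] @ [u, v] = [r1, r2] by Cramer's rule."""
    det = a * d - b * c
    return ((d * r1 - b * r2) / det, (a * r2 - c * r1) / det)


def fit_two_features(x1, x2, y, lam):
    """Minimize ||y - b1*x1 - b2*x2||^2 + lam*(b1^2 + b2^2); lam=0 is OLS."""
    return solve_2x2(
        dot(x1, x1) + lam, dot(x1, x2),
        dot(x1, x2), dot(x2, x2) + lam,
        dot(x1, y), dot(x2, y),
    )


x1 = [1.0, 2.0, 3.0, 4.0]
x2 = [1.01, 1.99, 3.01, 3.99]  # almost a copy of x1
y = [2.1, 3.9, 6.1, 7.9]       # roughly x1 + x2

ols = fit_two_features(x1, x2, y, lam=0.0)
ridge = fit_two_features(x1, x2, y, lam=1.0)
print("OLS  :", ols)    # approximately (-8, 10): large, opposite-signed
print("ridge:", ridge)  # approximately (0.98, 0.98): small and similar
```

The unpenalized fit explains the tiny differences between the two predictors with large offsetting coefficients, whereas the L2 penalty spreads the weight almost evenly across the correlated pair.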

Feature Selection Capabilities

Ridge Regression employs L2 regularization, which shrinks coefficients but does not set them exactly to zero, making it less effective for feature selection in high-dimensional datasets. Lasso Regression uses L1 regularization, promoting sparse solutions by driving some coefficients to zero, thus performing automatic feature selection and simplifying model interpretability. In scenarios demanding identification of key predictors, Lasso is preferred for its ability to exclude irrelevant features, whereas Ridge is better suited for multicollinearity handling without feature elimination.
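How Lasso arrives at exact zeros can be sketched with cyclic coordinate descent, one common solver for the L1-penalized objective. The version below is a plain-Python illustration under stated assumptions: no intercept, a fixed number of sweeps instead of a convergence check, and a tiny made-up dataset with orthogonal columns in which only the second feature carries a strong signal.

```python
def soft_threshold(z, t):
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_sweeps=100):
    """Minimize ||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent."""
    n, p = len(X), len(X[0])
    b = [0.0] * p
    residual = list(y)  # equals y - X b, since b starts at zero
    for _ in range(n_sweeps):
        for j in range(p):
            col = [X[i][j] for i in range(n)]
            norm_sq = sum(c * c for c in col)
            # Correlation of column j with the residual that excludes feature j.
            rho = sum(c * r for c, r in zip(col, residual)) + norm_sq * b[j]
            new_bj = soft_threshold(rho, lam / 2.0) / norm_sq
            # Keep the residual consistent with the updated coefficient.
            residual = [r - c * (new_bj - b[j]) for c, r in zip(col, residual)]
            b[j] = new_bj
    return b


X = [[1.0, 1.0, 1.0],
     [1.0, -1.0, 1.0],
     [1.0, 1.0, -1.0],
     [1.0, -1.0, -1.0]]
y = [3.2, -2.8, 2.8, -3.2]  # 3 * column 2 + 0.2 * column 3

b = lasso_coordinate_descent(X, y, lam=2.0)
print(b)  # first and third coefficients are exactly 0.0, second is about 2.75
```

The weak third feature is removed entirely rather than merely shrunk, which is the automatic feature selection described above; a Ridge fit on the same data would keep a small non-zero coefficient for it.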

Impact on Model Interpretability

Ridge Regression applies L2 regularization, shrinking coefficients without setting them exactly to zero, which keeps all features in the model and can complicate interpretability. Lasso Regression uses L1 regularization, encouraging sparsity by driving some coefficients to exactly zero, effectively performing feature selection and enhancing model interpretability. The choice between Ridge and Lasso significantly affects the model's ability to highlight key predictors and simplify understanding in machine learning tasks.

Regularization Parameter Tuning

Ridge Regression utilizes L2 regularization to penalize large coefficients, shrinking them toward zero without driving them exactly to zero, which helps in handling multicollinearity. Lasso Regression employs L1 regularization, enabling feature selection by forcing some coefficients to zero, thus producing sparse models suited to high-dimensional data. Tuning the regularization parameter alpha (the weight on the penalty term) through cross-validation balances bias and variance: alpha = 0 recovers ordinary least squares, larger values shrink coefficients more strongly, and for Lasso larger values also zero out more coefficients, so alpha directly controls sparsity.
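The cross-validation procedure can be sketched in a few lines. This is a deliberately simplified illustration: it assumes a single predictor with no intercept, interleaved folds, and a made-up dataset and alpha grid; `fit_ridge_1d` and `cv_error` are hypothetical helper names.

```python
def fit_ridge_1d(xs, ys, alpha):
    """Slope minimizing sum((y - b*x)**2) + alpha * b**2 (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + alpha)


def cv_error(xs, ys, alpha, k):
    """Mean squared validation error over k interleaved folds."""
    total, count = 0.0, 0
    for fold in range(k):
        pairs = list(enumerate(zip(xs, ys)))
        train = [xy for i, xy in pairs if i % k != fold]
        valid = [xy for i, xy in pairs if i % k == fold]
        b = fit_ridge_1d([x for x, _ in train], [y for _, y in train], alpha)
        total += sum((y - b * x) ** 2 for x, y in valid)
        count += len(valid)
    return total / count


xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
ys = [1.2, 1.9, 3.3, 3.8, 5.4, 5.9]
alphas = [0.0, 0.01, 0.1, 1.0, 10.0]

scores = {a: cv_error(xs, ys, a, k=3) for a in alphas}
best_alpha = min(scores, key=scores.get)
print(scores)
print("best alpha:", best_alpha)
```

The value with the lowest held-out error is selected, and a very large alpha over-shrinks the slope and scores worse. In practice a library routine such as scikit-learn's `RidgeCV` or `LassoCV` would usually replace this hand-rolled loop.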

Practical Applications in Machine Learning

Ridge Regression excels in scenarios with multicollinearity where many features contribute small effects, effectively shrinking coefficients without forcing them to zero. Lasso Regression is preferable for feature selection in high-dimensional datasets by driving some coefficients exactly to zero, thus producing sparse models. In practical machine learning workflows, Ridge is often used when model interpretability is less critical, while Lasso suits applications requiring simplified models for improved interpretability and feature selection.

Choosing the Right Approach: Ridge vs Lasso

Ridge regression applies L2 regularization, which shrinks all coefficients smoothly toward zero and is effective when all features contribute to the outcome, remaining stable in the presence of multicollinearity. Lasso regression uses L1 regularization, driving some coefficients to zero, making it a good fit when a sparse model is desired. Selecting between Ridge and Lasso depends on the data structure: prefer Ridge for correlated predictors and Lasso for models requiring feature elimination.

techiny.com
