Model-Based Reinforcement Learning uses a dynamic model of the environment to predict future states and rewards, enabling more sample-efficient learning by planning and simulating outcomes before taking actions. In contrast, Model-Free Reinforcement Learning directly learns a policy or value function from interaction data without an explicit model, often requiring more data but providing robustness in complex or unknown environments. Balancing sample efficiency and computational complexity is crucial when choosing between these approaches for practical applications.

Table of Comparison

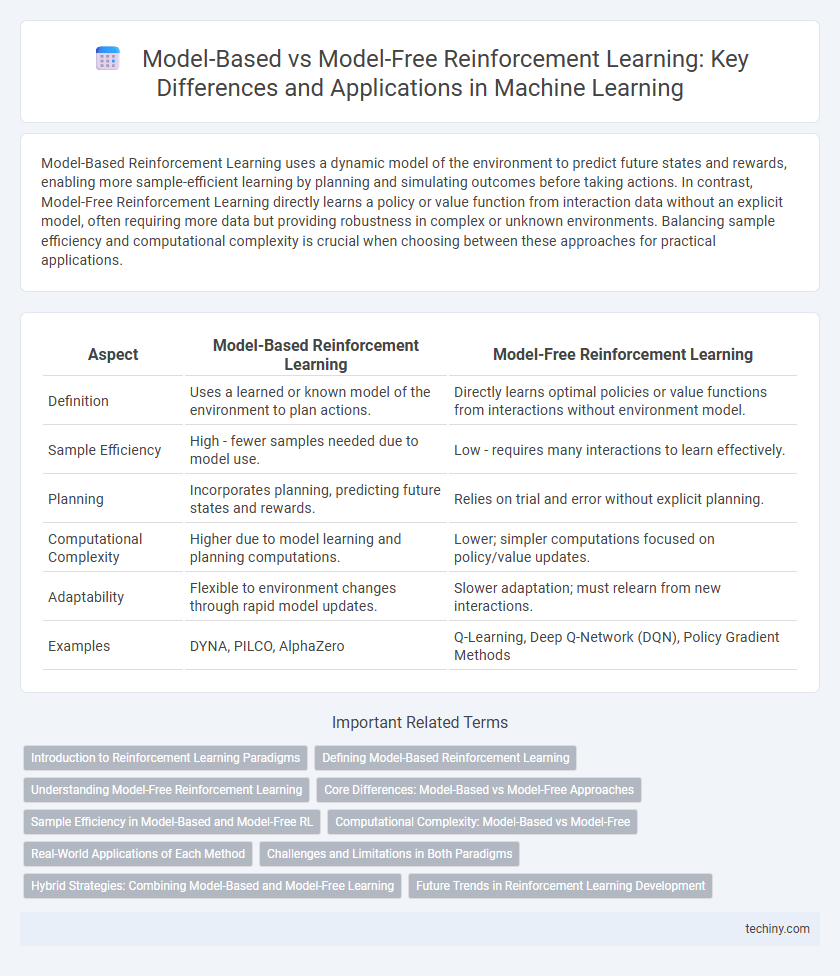

| Aspect | Model-Based Reinforcement Learning | Model-Free Reinforcement Learning |

|---|---|---|

| Definition | Uses a learned or known model of the environment to plan actions. | Directly learns optimal policies or value functions from interactions without environment model. |

| Sample Efficiency | High - fewer samples needed due to model use. | Low - requires many interactions to learn effectively. |

| Planning | Incorporates planning, predicting future states and rewards. | Relies on trial and error without explicit planning. |

| Computational Complexity | Higher due to model learning and planning computations. | Lower; simpler computations focused on policy/value updates. |

| Adaptability | Flexible to environment changes through rapid model updates. | Slower adaptation; must relearn from new interactions. |

| Examples | DYNA, PILCO, AlphaZero | Q-Learning, Deep Q-Network (DQN), Policy Gradient Methods |

Introduction to Reinforcement Learning Paradigms

Model-Based Reinforcement Learning leverages a learned or known environment model to simulate and plan optimal policies, enabling data efficiency and faster convergence. Model-Free Reinforcement Learning relies directly on trial-and-error interaction with the environment, optimizing policies based on observed rewards without need for explicit environment dynamics. Both paradigms address decision-making in Markov Decision Processes but differ fundamentally in their approach to policy evaluation and improvement.

Defining Model-Based Reinforcement Learning

Model-Based Reinforcement Learning (MBRL) involves creating an explicit model of the environment's dynamics, enabling agents to simulate future states and plan actions accordingly. This approach contrasts with Model-Free Reinforcement Learning, which relies solely on learning value functions or policies from experience without an internal model. By leveraging environment models, MBRL can achieve improved sample efficiency and faster adaptation to changes.

Understanding Model-Free Reinforcement Learning

Model-Free Reinforcement Learning relies on learning optimal policies directly from experiences without constructing a model of the environment's dynamics. Algorithms such as Q-Learning and Policy Gradient methods estimate value functions or policies based solely on observed state-action-reward sequences, enabling adaptability in complex or partially known environments. This approach emphasizes empirical learning efficiency and robustness, often at the cost of requiring larger amounts of interaction data compared to model-based methods.

Core Differences: Model-Based vs Model-Free Approaches

Model-Based Reinforcement Learning (MBRL) utilizes an explicit environment model to predict future states and rewards, enabling strategic planning and sample-efficient learning. In contrast, Model-Free Reinforcement Learning (MFRL) bypasses environment modeling by directly learning a policy or value function from interactions, often requiring more data but simplifying implementation. The core difference lies in MBRL's reliance on a dynamic model for decision-making, whereas MFRL depends solely on experience-derived mappings between states and actions.

Sample Efficiency in Model-Based and Model-Free RL

Model-based reinforcement learning achieves higher sample efficiency by using an explicit model of the environment to simulate outcomes and plan actions, reducing the need for extensive real-world interactions. In contrast, model-free reinforcement learning relies solely on trial-and-error experience, requiring significantly more samples to learn effective policies due to the absence of a predictive model. This fundamental difference makes model-based approaches preferable in environments where data collection is costly or limited.

Computational Complexity: Model-Based vs Model-Free

Model-Based Reinforcement Learning typically demands higher computational complexity due to the need to build and update an explicit model of the environment's dynamics, which involves planning algorithms and simulation steps. Model-Free Reinforcement Learning bypasses the model-building phase, relying directly on value functions or policies, resulting in generally lower computational overhead but often slower convergence. The trade-off between computational complexity and sample efficiency makes Model-Based approaches more suitable for environments where interactions are costly, while Model-Free methods excel in simpler or well-understood settings.

Real-World Applications of Each Method

Model-based reinforcement learning excels in robotics and autonomous systems where accurate environment models enable efficient planning and decision-making under uncertainty, reducing data requirements and improving safety. Model-free reinforcement learning is widely applied in complex, high-dimensional spaces such as game playing, recommendation systems, and real-time strategy optimization due to its ability to learn optimal policies directly from experience without explicit environmental models. Both approaches address distinct challenges in real-world applications, with model-based methods favoring scenarios demanding fast adaptation and model-free techniques thriving in dynamic, model-agnostic contexts.

Challenges and Limitations in Both Paradigms

Model-Based Reinforcement Learning struggles with model inaccuracies that lead to suboptimal policy decisions and increased computational complexity in dynamic environments. Model-Free Reinforcement Learning faces challenges such as sample inefficiency and slow convergence, making it less practical for real-world applications with limited data. Both paradigms require careful balancing between exploration and exploitation to overcome limitations in generalization and performance stability.

Hybrid Strategies: Combining Model-Based and Model-Free Learning

Hybrid strategies in reinforcement learning integrate model-based techniques, which utilize explicit environmental models, with model-free approaches that rely on value function approximation or policy gradients. This combination enhances sample efficiency by enabling planning via learned models while maintaining robustness through direct policy learning from experience. Recent advancements in algorithms like Dyna and MuZero demonstrate superior performance by effectively balancing the trade-off between exploration and exploitation.

Future Trends in Reinforcement Learning Development

Model-based reinforcement learning is anticipated to advance with enhanced model accuracy and efficient planning algorithms, enabling faster adaptation in dynamic environments. Model-free methods are improving through deep learning innovations that boost policy generalization and sample efficiency, driving their application in complex, high-dimensional tasks. Hybrid approaches combining model-based and model-free techniques are gaining traction, aiming to leverage the strengths of both paradigms for robust and scalable reinforcement learning solutions.

Model-Based Reinforcement Learning vs Model-Free Reinforcement Learning Infographic

techiny.com

techiny.com