Lazy learners store the training data and delay the generalization process until a query is received, making them highly flexible but often computationally expensive during prediction. Eager learners build a model immediately after training, enabling faster predictions at the cost of longer initial training times and potential overfitting. Understanding the trade-offs between lazy and eager learning approaches is crucial for selecting the appropriate algorithm based on dataset size, computational resources, and application requirements.

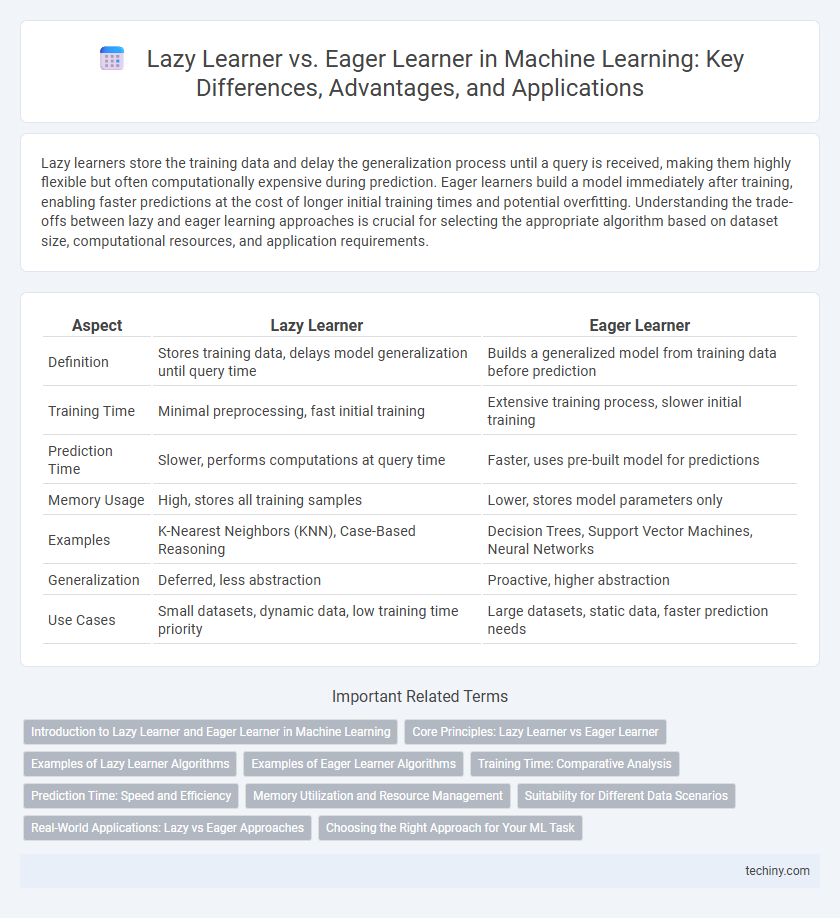

Table of Comparison

| Aspect | Lazy Learner | Eager Learner |
|---|---|---|
| Definition | Stores training data and delays generalization until query time | Builds a generalized model from the training data before any prediction |
| Training Time | Minimal preprocessing, so training is fast | Extensive model fitting, so training is slower |
| Prediction Time | Slower: computation happens at query time | Faster: predictions come from the pre-built model |
| Memory Usage | High: stores all training samples | Typically lower: stores model parameters only |
| Examples | k-Nearest Neighbors (k-NN), Case-Based Reasoning | Decision Trees, Support Vector Machines, Neural Networks |
| Generalization | Deferred, less abstraction | Performed upfront, higher abstraction |
| Use Cases | Small datasets, dynamic data, cases where low training time is a priority | Large datasets, static data, cases where fast predictions are needed |

Introduction to Lazy Learner and Eager Learner in Machine Learning

Lazy learners in machine learning delay the generalization process until a query is made, storing training data and performing computation only during prediction, which enables flexibility but often results in slower response times. Eager learners, conversely, build a generalized model during training by extracting patterns and relationships from the dataset, allowing for faster predictions but requiring more upfront processing. Common examples of lazy learners include k-Nearest Neighbors (k-NN), while decision trees and neural networks typically represent eager learners.

Core Principles: Lazy Learner vs Eager Learner

Lazy learners store training data and delay processing until a query is made, which allows fast model updates but slows predictions. Eager learners build a general model during training, which yields faster predictions but requires more upfront computation. Lazy learning methods, such as k-Nearest Neighbors, emphasize memorization and instance-based reasoning, whereas eager learners like decision trees and neural networks abstract patterns into models before inference. The core distinction lies in immediate generalization by eager learners versus deferred computation characteristic of lazy learners.
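To make the distinction concrete, here is a minimal pure-Python sketch, assuming small in-memory datasets. `LazyKNN` and `EagerCentroid` are hypothetical illustration classes (the `fit`/`predict_one` method names are a convention chosen here, not any library's API). The eager side uses a nearest-centroid classifier simply because it is the smallest model that visibly summarizes the data at training time.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length numeric sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class LazyKNN:
    """Hypothetical lazy learner: fit() only memorizes; the work happens in predict_one()."""

    def __init__(self, k=3):
        self.k = k

    def fit(self, X, y):
        # No generalization step: keep the raw training instances as-is.
        self.X = [tuple(x) for x in X]
        self.y = list(y)
        return self

    def predict_one(self, q):
        # Deferred computation: rank every stored instance by its distance to q.
        ranked = sorted(range(len(self.X)), key=lambda i: euclidean(self.X[i], q))
        votes = Counter(self.y[i] for i in ranked[: self.k])
        return votes.most_common(1)[0][0]


class EagerCentroid:
    """Hypothetical eager learner: fit() compresses the data into one mean vector per class."""

    def fit(self, X, y):
        sums, counts = {}, Counter()
        for x, label in zip(X, y):
            prev = sums.get(label, [0.0] * len(x))
            sums[label] = [s + v for s, v in zip(prev, x)]
            counts[label] += 1
        # The fitted model is only the centroids; the training set is not kept.
        self.centroids = {c: [s / counts[c] for s in sums[c]] for c in sums}
        return self

    def predict_one(self, q):
        # Prediction compares q with a handful of centroids, not with every sample.
        return min(self.centroids, key=lambda c: euclidean(self.centroids[c], q))


X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
y = ["a", "a", "a", "b", "b", "b"]
print(LazyKNN(k=3).fit(X, y).predict_one((4.5, 5)))    # b
print(EagerCentroid().fit(X, y).predict_one((4.5, 5)))  # b
```

Both classifiers use the same distance, so the only difference is when the work is done: `LazyKNN.fit` is a copy, while `EagerCentroid.fit` makes one pass over the data and never looks at it again.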

Examples of Lazy Learner Algorithms

Lazy learner algorithms like k-Nearest Neighbors (k-NN) and Locally Weighted Regression (LWR) delay generalizing beyond the training data, storing all instances for use during prediction. Case-Based Reasoning (CBR) is another example, solving new problems by adapting solutions from similar past cases. These algorithms typically offer faster training times but slower prediction speeds compared to eager learners.
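Locally Weighted Regression makes the deferred computation visible: a separate weighted least-squares line is solved for every query. The sketch below is a 1-D version assuming a Gaussian kernel with bandwidth `tau`; the function name `lwr_predict` and the default bandwidth are illustrative choices, not taken from a particular library.

```python
import math


def lwr_predict(xs, ys, query, tau=1.0):
    """Hypothetical 1-D locally weighted linear regression for a single query.

    Nothing is fitted ahead of time: a fresh weighted least-squares line is
    solved for each query, with nearby training points weighted more heavily.
    """
    # Gaussian kernel weights centred on the query (assumed kernel choice).
    w = [math.exp(-((x - query) ** 2) / (2 * tau ** 2)) for x in xs]
    sw = sum(w)
    if sw == 0:
        raise ValueError("all weights underflowed; try a larger tau")
    sx = sum(wi * x for wi, x in zip(w, xs))
    sy = sum(wi * y for wi, y in zip(w, ys))
    sxx = sum(wi * x * x for wi, x in zip(w, xs))
    sxy = sum(wi * x * y for wi, x, y in zip(w, xs, ys))
    denom = sw * sxx - sx * sx
    if abs(denom) < 1e-12:
        # Degenerate neighbourhood (effectively one x value): use the weighted mean.
        return sy / sw
    slope = (sw * sxy - sx * sy) / denom
    intercept = (sy - slope * sx) / sw
    return intercept + slope * query


# On curved data the local line tracks the curve near the query point.
xs = list(range(11))
ys = [x * x for x in xs]
print(lwr_predict(xs, ys, 5, tau=0.3))
```

A small `tau` makes the fit very local (more flexible, noisier); a large `tau` approaches one global straight line. Either way, the cost of solving the weighted fit is paid at every prediction rather than once during training.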

Examples of Eager Learner Algorithms

Eager learner algorithms include decision trees, support vector machines (SVM), and artificial neural networks, which build a comprehensive model during training before making predictions. These algorithms optimize the hypothesis function upfront, enabling faster classification or regression at test time compared to lazy learners like k-nearest neighbors (k-NN). Eager learners are well-suited for large datasets where pre-computation improves prediction efficiency and generalization performance.
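A decision stump (a decision tree of depth one) is about the smallest eager learner there is. In this hypothetical sketch, `fit` searches every feature and every midpoint threshold for the split with the fewest misclassifications, and `predict_one` is then a single comparison. Real decision-tree implementations typically use impurity measures such as Gini or entropy and grow deeper trees; misclassification count is used here only to keep the example short.

```python
from collections import Counter


class DecisionStump:
    """Hypothetical depth-1 decision tree: all the search happens in fit()."""

    def fit(self, X, y):
        best = None  # (errors, feature, threshold, left_label, right_label)
        for f in range(len(X[0])):
            values = sorted(set(row[f] for row in X))
            # Candidate split points: midpoints between consecutive distinct values.
            for lo, hi in zip(values, values[1:]):
                t = (lo + hi) / 2
                left = [lab for row, lab in zip(X, y) if row[f] <= t]
                right = [lab for row, lab in zip(X, y) if row[f] > t]
                l_lab, l_hits = Counter(left).most_common(1)[0]
                r_lab, r_hits = Counter(right).most_common(1)[0]
                errors = len(y) - l_hits - r_hits
                if best is None or errors < best[0]:
                    best = (errors, f, t, l_lab, r_lab)
        if best is None:
            raise ValueError("need at least two distinct values in some feature")
        _, self.feature, self.threshold, self.left_label, self.right_label = best
        return self

    def predict_one(self, q):
        # Prediction uses only the stored split; the training data is not needed.
        return self.left_label if q[self.feature] <= self.threshold else self.right_label


X = [(1, 10), (2, 20), (3, 10), (7, 20), (8, 10), (9, 20)]
y = [0, 0, 0, 1, 1, 1]
stump = DecisionStump().fit(X, y)
print(stump.feature, stump.threshold)  # 0 5.0
print(stump.predict_one((4, 20)), stump.predict_one((6, 10)))  # 0 1
```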

Training Time: Comparative Analysis

Lazy learners like k-nearest neighbors exhibit minimal training time as they defer computation until prediction, storing raw data instead of building explicit models. Eager learners such as decision trees and support vector machines invest significant time upfront to construct generalized models before making predictions. This trade-off impacts real-time responsiveness and resource utilization depending on application requirements.
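The training-time asymmetry can be observed with a rough timing harness. The sketch below assumes a 1-D binary task; `lazy_fit` simply memorizes the data, as a k-NN model would, while `eager_fit` searches exhaustively for the best threshold classifier. Both functions are hypothetical, and timings depend on the machine, so no particular numbers are claimed.

```python
import random
import time


def lazy_fit(xs, ys):
    """Hypothetical lazy 'training': memorize the data, as a 1-D k-NN would."""
    return list(xs), list(ys)


def eager_fit(xs, ys):
    """Hypothetical eager training: exhaustive search for the best threshold.

    Assumes labels are 0/1 and the model predicts 1 when x > threshold.
    """
    best_t, best_err = None, None
    for t in sorted(set(xs)):
        err = sum((x > t) != bool(label) for x, label in zip(xs, ys))
        if best_err is None or err < best_err:
            best_t, best_err = t, err
    return best_t


def time_call(fn, *args):
    """Run fn(*args) once and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


random.seed(0)
xs = [random.uniform(0, 10) for _ in range(1000)]
ys = [int(x > 6) for x in xs]

_, lazy_secs = time_call(lazy_fit, xs, ys)
threshold, eager_secs = time_call(eager_fit, xs, ys)
print(f"lazy fit:  {lazy_secs:.6f} s")
print(f"eager fit: {eager_secs:.6f} s (threshold = {threshold:.3f})")
```

The quadratic search in `eager_fit` is deliberately naive; sorting once and scanning candidate thresholds brings it to O(n log n), but the cost is still paid upfront rather than at query time.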

Prediction Time: Speed and Efficiency

Lazy learners, such as k-Nearest Neighbors, have slower prediction times because each query triggers computation and a search over the stored training data, resulting in higher latency. Eager learners, like decision trees and neural networks, generalize from the data during training, so predictions are fast and carry lower computational overhead. The trade-off between prediction speed and model complexity is crucial when selecting an algorithm for real-time machine learning applications.
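Rather than timing predictions, one can count the distance evaluations each query needs. In this sketch, assuming brute-force search (no KD-tree or ball-tree index), a k-NN query computes one distance per stored training point, while a nearest-centroid model computes one per class. `CountingDistance`, `knn_predict`, and `centroid_predict` are hypothetical helpers; the centroids are written out by hand as the class means of the 10 x 10 grid. `math.dist` requires Python 3.8 or later.

```python
import math


class CountingDistance:
    """Hypothetical helper: Euclidean distance that counts its own evaluations."""

    def __init__(self):
        self.calls = 0

    def __call__(self, a, b):
        self.calls += 1
        return math.dist(a, b)


def knn_predict(train_X, train_y, q, k, dist):
    # Lazy: every query computes a distance to every stored training point.
    nearest = sorted(zip(train_X, train_y), key=lambda p: dist(p[0], q))[:k]
    labels = [lab for _, lab in nearest]
    return max(set(labels), key=labels.count)


def centroid_predict(centroids, q, dist):
    # Eager: the data was summarized at training time; one distance per class remains.
    return min(centroids, key=lambda c: dist(centroids[c], q))


train_X = [(i % 10, i // 10) for i in range(100)]  # a 10 x 10 grid of points
train_y = ["left" if x < 5 else "right" for x, _ in train_X]
centroids = {"left": (2.0, 4.5), "right": (7.0, 4.5)}  # class means of the grid

d_lazy, d_eager = CountingDistance(), CountingDistance()
query = (8.2, 3.1)
lazy_label = knn_predict(train_X, train_y, query, 3, d_lazy)
eager_label = centroid_predict(centroids, query, d_eager)
print(lazy_label, d_lazy.calls)    # right 100
print(eager_label, d_eager.calls)  # right 2
```

The k-NN count grows linearly with the training set, while the centroid count depends only on the number of classes; spatial indexes can reduce the k-NN cost, but the search still happens at query time.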

Memory Utilization and Resource Management

Lazy learners, such as k-Nearest Neighbors (k-NN), defer processing until query time, resulting in high memory utilization due to storing the entire training dataset and increased computational load during inference. Eager learners like decision trees and neural networks preprocess data into a compact model, optimizing resource management by reducing memory footprint and enabling faster predictions. This trade-off highlights the balance between memory demands and computational efficiency in machine learning algorithms.
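A back-of-the-envelope calculation shows the scale of the memory gap. The sketch assumes every stored value (each label included) takes 8 bytes, and the sizes (1,000,000 samples, 100 features, 10 classes) are arbitrary illustrative numbers. Not every eager model is this small: kernel SVMs keep their support vectors, and large neural networks can have millions of parameters, so this compares brute-force k-NN with compact parametric models.

```python
BYTES_PER_VALUE = 8  # assumption: every stored number is a 64-bit float


def knn_footprint(n_samples, n_features):
    """Values a brute-force k-NN must keep: every feature vector plus its label."""
    return n_samples * n_features + n_samples


def centroid_footprint(n_classes, n_features):
    """Values a nearest-centroid model keeps: one mean vector and label per class."""
    return n_classes * n_features + n_classes


def linear_footprint(n_features, n_outputs):
    """Values a linear model keeps: one weight per feature plus a bias, per output."""
    return (n_features + 1) * n_outputs


n, d, c = 1_000_000, 100, 10  # illustrative dataset size, not from real data
for name, values in [
    ("k-NN", knn_footprint(n, d)),
    ("nearest centroid", centroid_footprint(c, d)),
    ("linear (one output per class)", linear_footprint(d, c)),
]:
    print(f"{name}: {values:,} values = {values * BYTES_PER_VALUE:,} bytes")
```

Under these assumptions the k-NN model holds 101,000,000 values (808,000,000 bytes), while either compact eager model holds 1,010 values (8,080 bytes).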

Suitability for Different Data Scenarios

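Lazy learners excel in scenarios with dynamic or changing data: because they delay generalization until query time, new instances can be added without retraining. Their prediction cost, however, grows with the size of the stored dataset, and distance-based methods such as k-NN tend to degrade as the number of features grows, because distances between points become less informative in high-dimensional spaces. Eager learners are more effective for static datasets, including large ones, where building a model upfront enables faster predictions and better generalization. In low-dimensional settings with complex local structure, lazy learners like k-NN can outperform eager models by adapting locally without extensive preprocessing.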
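The advantage of lazy learners on changing data is that new observations are absorbed simply by storing them. This hypothetical `Lazy1NN` class (one nearest neighbour, Euclidean distance via `math.dist`, Python 3.8+) shows a prediction changing as soon as a single new point is added, with no retraining step. An eager model fitted before that point arrived would have to be refit, or updated with an incremental algorithm, to reflect it.

```python
import math


class Lazy1NN:
    """Hypothetical 1-nearest-neighbour classifier whose 'model' is the stored data."""

    def __init__(self):
        self.points, self.labels = [], []

    def add(self, x, label):
        # Incorporating new data is an append; there is no retraining step.
        self.points.append(x)
        self.labels.append(label)

    def predict(self, q):
        # Every prediction scans all stored points, so its cost grows with each add().
        i = min(range(len(self.points)), key=lambda j: math.dist(self.points[j], q))
        return self.labels[i]


model = Lazy1NN()
model.add((0, 0), "A")
model.add((10, 10), "A")
model.add((0, 10), "B")
before = model.predict((9, 9))
model.add((9, 8), "B")  # a new observation arrives; no refit needed
after = model.predict((9, 9))
print(before, after)  # A B
```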

Real-World Applications: Lazy vs Eager Approaches

Lazy learners like k-Nearest Neighbors are well suited to real-time recommendation systems because they need minimal training and can use new data as soon as it arrives. Eager learners such as Support Vector Machines are widely used in fraud detection, where a robust model is built offline so that each transaction can be scored quickly. The choice between lazy and eager approaches hinges on the application's latency tolerance and data update frequency.
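A user-based k-NN recommender illustrates why lazy methods fit recommendation workloads: neighbours are found only when a user asks for recommendations, so ratings added since the last query are used immediately. The sketch below is a minimal, hypothetical version assuming ratings held in nested dicts, cosine similarity with unrated items treated as zero, and a similarity-weighted average of the neighbours' ratings. Production recommenders add rating normalization, indexing, and far larger data.

```python
import math


def cosine(u, v):
    """Cosine similarity of two sparse rating dicts (unrated items count as 0)."""
    common = set(u) & set(v)
    dot = sum(u[i] * v[i] for i in common)
    norm_u = math.sqrt(sum(r * r for r in u.values()))
    norm_v = math.sqrt(sum(r * r for r in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(ratings, user, k=2):
    """Hypothetical user-based k-NN: rank items the user has not rated yet."""
    target = ratings[user]
    # Lazy step: neighbours are computed from the current ratings at query time.
    neighbours = sorted(
        (other for other in ratings if other != user),
        key=lambda other: cosine(target, ratings[other]),
        reverse=True,
    )[:k]
    scores, weights = {}, {}
    for other in neighbours:
        sim = cosine(target, ratings[other])
        for item, r in ratings[other].items():
            if item not in target:
                scores[item] = scores.get(item, 0.0) + sim * r
                weights[item] = weights.get(item, 0.0) + sim
    # Similarity-weighted average rating per candidate item.
    predicted = {i: scores[i] / weights[i] for i in scores if weights[i] > 0}
    return sorted(predicted, key=predicted.get, reverse=True)


ratings = {
    "ana": {"m1": 5, "m2": 4},
    "ben": {"m1": 5, "m2": 5, "m3": 4},
    "cai": {"m1": 1, "m4": 5},
    "dev": {"m1": 4, "m2": 4, "m3": 5, "m4": 1},
}
print(recommend(ratings, "ana", k=2))  # ['m3', 'm4']
```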

Choosing the Right Approach for Your ML Task

Selecting the appropriate approach between lazy learners and eager learners depends on the specific machine learning task and data characteristics. Lazy learners, like k-Nearest Neighbors, delay model generalization until prediction time, making them suitable for dynamic or evolving datasets with lower training costs but higher query time. Eager learners, such as decision trees and neural networks, build comprehensive models during training, offering faster predictions and better performance on large, well-labeled datasets with stable underlying distributions.

Lazy Learner vs Eager Learner Infographic

techiny.com
