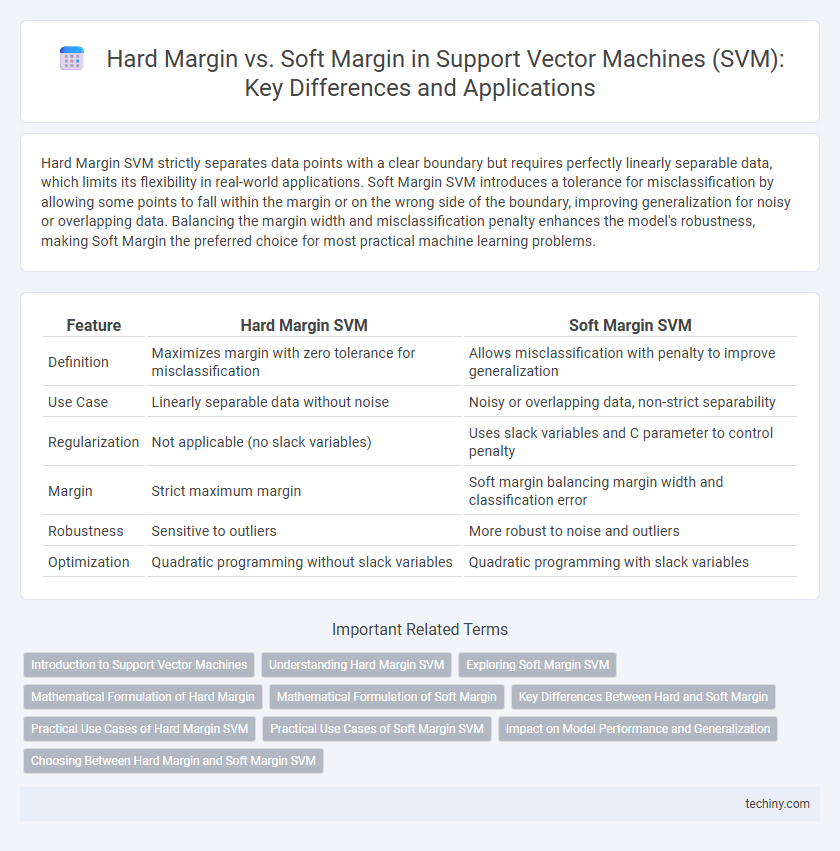

Hard Margin SVM strictly separates data points with a clear boundary but requires perfectly linearly separable data, which limits its flexibility in real-world applications. Soft Margin SVM introduces a tolerance for misclassification by allowing some points to fall within the margin or on the wrong side of the boundary, improving generalization for noisy or overlapping data. Balancing the margin width and misclassification penalty enhances the model's robustness, making Soft Margin the preferred choice for most practical machine learning problems.

Table of Comparison

| Feature | Hard Margin SVM | Soft Margin SVM |

|---|---|---|

| Definition | Maximizes margin with zero tolerance for misclassification | Allows misclassification with penalty to improve generalization |

| Use Case | Linearly separable data without noise | Noisy or overlapping data, non-strict separability |

| Regularization | Not applicable (no slack variables) | Uses slack variables and C parameter to control penalty |

| Margin | Strict maximum margin | Soft margin balancing margin width and classification error |

| Robustness | Sensitive to outliers | More robust to noise and outliers |

| Optimization | Quadratic programming without slack variables | Quadratic programming with slack variables |

Introduction to Support Vector Machines

Support Vector Machines (SVM) classify data by finding the optimal hyperplane that maximizes the margin between classes. Hard margin SVM requires data to be perfectly linearly separable, enforcing strict separation with no misclassifications. Soft margin SVM introduces a tolerance for some misclassification, allowing better generalization to non-linearly separable or noisy datasets by balancing margin maximization and classification error.

Understanding Hard Margin SVM

Hard Margin SVM is a classification technique that seeks to find the maximum margin hyperplane separating linearly separable classes without allowing any misclassifications. It requires strict adherence to correct classification of all training data points, making it sensitive to noise and outliers. This method is effective when data is clean and perfectly separable, ensuring a clear decision boundary with maximum margin.

Exploring Soft Margin SVM

Soft Margin SVM allows for some misclassification by introducing slack variables, enabling the model to handle non-linearly separable data and improve generalization. It balances maximizing the margin with minimizing classification errors, controlled by the regularization parameter C. This flexibility reduces overfitting in noisy datasets and enhances robustness compared to Hard Margin SVM.

Mathematical Formulation of Hard Margin

The mathematical formulation of the hard margin SVM aims to find a hyperplane defined by \( \mathbf{w} \cdot \mathbf{x} + b = 0 \) that perfectly separates two classes with no misclassification, subject to constraints \( y_i(\mathbf{w} \cdot \mathbf{x}_i + b) \geq 1 \) for all training examples \( i \). This optimization problem minimizes the norm \( \frac{1}{2} \|\mathbf{w}\|^2 \) to maximize the margin between the classes while maintaining zero training errors. Hard margin SVM requires linearly separable data to find a unique solution that provides maximum margin classification without slack variables.

Mathematical Formulation of Soft Margin

Soft Margin SVM introduces slack variables \(\xi_i \geq 0\) to allow some misclassification, modifying the optimization objective to minimize \(\frac{1}{2} \|w\|^2 + C \sum_{i=1}^n \xi_i\), where \(C\) controls the trade-off between margin width and classification errors. The constraints become \(y_i (w \cdot x_i + b) \geq 1 - \xi_i\), ensuring that points can lie within the margin or be misclassified but penalized proportionally to \(\xi_i\). This formulation enables greater flexibility in handling non-linearly separable data compared to the Hard Margin SVM, which requires strict separability with no slack.

Key Differences Between Hard and Soft Margin

Hard margin SVM requires data to be perfectly linearly separable without allowing any misclassifications, which can lead to overfitting when data is noisy. Soft margin SVM introduces a regularization parameter (C) that allows some misclassifications by creating a margin that balances between maximizing margin width and minimizing classification error, making it more robust to outliers. The key difference lies in the trade-off between margin maximization and tolerance for misclassification, with hard margin being strict and soft margin being more flexible.

Practical Use Cases of Hard Margin SVM

Hard margin SVM is ideal for perfectly linearly separable datasets where no misclassification is tolerated, commonly found in high-dimensional feature spaces such as text classification or biometric authentication. This approach ensures maximum margin separation, enhancing model interpretability and robustness in clean, noise-free environments. Hard margin SVM is particularly effective in scenarios with well-defined boundaries, such as spam detection or medical diagnosis with consistent and error-free training samples.

Practical Use Cases of Soft Margin SVM

Soft Margin SVM is preferred in real-world applications where data is noisy or not perfectly linearly separable, such as in image recognition or spam email classification, allowing some misclassifications to improve generalization. By introducing slack variables, Soft Margin SVM tolerates overlapping classes and balances margin maximization with classification error minimization. This flexibility makes Soft Margin SVM effective for medical diagnosis systems and financial fraud detection, where rigid separation is impractical.

Impact on Model Performance and Generalization

Hard margin SVM enforces strict separation with no tolerance for misclassification, leading to high complexity and potential overfitting on noisy data. Soft margin SVM introduces a penalty for misclassification, allowing some data points to violate the margin and improving model flexibility and generalization on real-world datasets. Choosing the appropriate margin balances model bias and variance, directly affecting prediction accuracy and robustness across different data distributions.

Choosing Between Hard Margin and Soft Margin SVM

Choosing between Hard Margin and Soft Margin SVM depends on the data's linear separability and noise level. Hard Margin SVM is ideal for perfectly linearly separable data, maximizing the margin without allowing misclassifications. Soft Margin SVM introduces a penalty parameter (C) to tolerate misclassifications, making it suitable for noisy or overlapping classes by balancing margin size and classification errors.

Hard Margin vs Soft Margin (SVM) Infographic

techiny.com

techiny.com