Cross-validation divides the dataset into multiple subsets to train and validate the model, providing robust estimates of its performance by averaging results from different folds. Bootstrap sampling involves repeatedly drawing samples with replacement from the original data to assess model stability and variance. Cross-validation emphasizes bias reduction through data partitioning, while bootstrap focuses on variance estimation by leveraging resampled datasets.

Table of Comparison

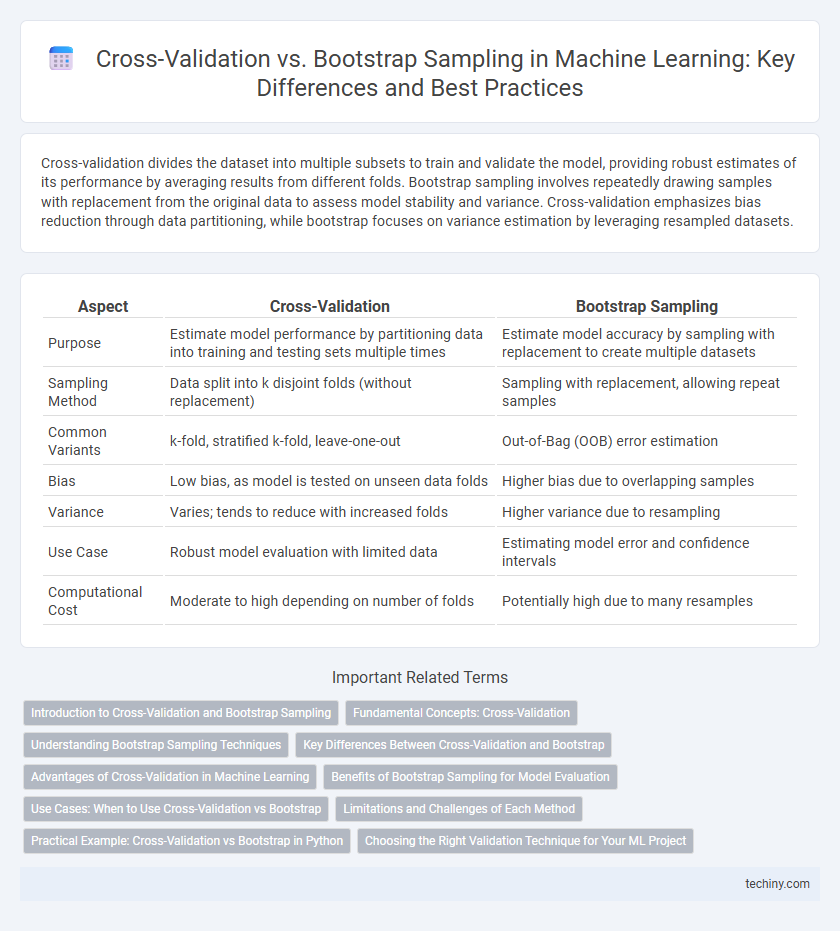

| Aspect | Cross-Validation | Bootstrap Sampling |

|---|---|---|

| Purpose | Estimate model performance by partitioning data into training and testing sets multiple times | Estimate model accuracy by sampling with replacement to create multiple datasets |

| Sampling Method | Data split into k disjoint folds (without replacement) | Sampling with replacement, allowing repeat samples |

| Common Variants | k-fold, stratified k-fold, leave-one-out | Out-of-Bag (OOB) error estimation |

| Bias | Low bias, as model is tested on unseen data folds | Higher bias due to overlapping samples |

| Variance | Varies; tends to reduce with increased folds | Higher variance due to resampling |

| Use Case | Robust model evaluation with limited data | Estimating model error and confidence intervals |

| Computational Cost | Moderate to high depending on number of folds | Potentially high due to many resamples |

Introduction to Cross-Validation and Bootstrap Sampling

Cross-validation and bootstrap sampling are key resampling techniques in machine learning used to estimate model performance. Cross-validation partitions the data into k subsets, training the model on k-1 folds and validating it on the remaining fold iteratively, which provides robust metrics for model generalization. Bootstrap sampling draws multiple samples with replacement from the dataset, enabling estimation of accuracy and variance while accounting for sample variability in model evaluation.

Fundamental Concepts: Cross-Validation

Cross-validation partitions a dataset into k subsets to systematically train and validate a machine learning model, ensuring robust performance estimation and reducing overfitting. Unlike bootstrap sampling, which relies on random sampling with replacement, cross-validation evaluates model generalization by iteratively using distinct validation folds. The k-fold cross-validation method balances bias and variance, providing reliable insight into model accuracy across diverse data subsets.

Understanding Bootstrap Sampling Techniques

Bootstrap sampling techniques involve repeatedly drawing random samples with replacement from a dataset to create multiple training sets, allowing for robust estimation of model performance and variance. Unlike cross-validation, which partitions data into distinct folds, bootstrap sampling ensures that some data points may appear multiple times within a sample, enhancing the assessment of model stability and bias. This approach is particularly effective for small datasets, providing insights into confidence intervals and reducing overfitting risks in machine learning models.

Key Differences Between Cross-Validation and Bootstrap

Cross-validation divides the dataset into multiple subsets to evaluate model performance by training and testing on different folds, providing a less biased estimate of generalization error. Bootstrap sampling randomly samples with replacement to create multiple training sets, allowing estimation of the variability and confidence intervals of model metrics. The key difference lies in cross-validation's systematic partitioning versus bootstrap's random resampling, impacting bias, variance, and computational efficiency in model evaluation.

Advantages of Cross-Validation in Machine Learning

Cross-validation provides a robust estimate of model performance by systematically partitioning data into training and testing subsets, reducing variance compared to single train-test splits. It effectively mitigates overfitting by evaluating the model on multiple folds, ensuring generalization to unseen data. Cross-validation's capacity to utilize all data points for both training and validation enhances model reliability in diverse machine learning tasks.

Benefits of Bootstrap Sampling for Model Evaluation

Bootstrap sampling enhances model evaluation by providing robust estimates of prediction error through repeated resampling with replacement, enabling the assessment of model stability and variance. It allows for constructing confidence intervals for performance metrics, which is crucial for understanding model reliability across different datasets. This method is particularly effective in small sample sizes, as it maximizes data utilization without the need for partitioning into separate training and testing sets.

Use Cases: When to Use Cross-Validation vs Bootstrap

Cross-validation is ideal for model performance evaluation when the dataset is moderately sized and requires robust estimation of generalization error, especially in supervised learning tasks. Bootstrap sampling is preferred for estimating the variability of statistics or models, particularly in small datasets where measuring the confidence intervals of predictions is crucial. Cross-validation provides a more stable error estimate for tuning hyperparameters, whereas bootstrap excels in uncertainty assessment and bias reduction for complex models.

Limitations and Challenges of Each Method

Cross-validation faces limitations such as high computational cost with large datasets and the risk of data leakage if folds are not properly shuffled, which can bias performance estimates. Bootstrap sampling challenges include increased variance in estimates due to overlap in resampled datasets and potential underrepresentation of rare instances, leading to less reliable model evaluation. Both methods require careful consideration of dataset characteristics and computational resources to ensure accurate and robust model assessment.

Practical Example: Cross-Validation vs Bootstrap in Python

Cross-validation divides data into k subsets, training the model on k-1 folds and validating on the remaining fold to estimate model performance with reduced bias, exemplified by k-fold cross-validation in Python's scikit-learn using the cross_val_score function. Bootstrap sampling, implemented via resampling with replacement, generates multiple datasets to estimate model accuracy and variability, demonstrated through the bootstrap function in Python's numpy by creating multiple samples and evaluating model predictions. Comparing results from cross_val_score and bootstrap methods highlights differences in bias and variance estimation, guiding model selection and hyperparameter tuning in practical machine learning workflows.

Choosing the Right Validation Technique for Your ML Project

Choosing the right validation technique in machine learning depends on the dataset size and the model's complexity. Cross-validation, especially k-fold, offers robust performance estimates by partitioning data into training and testing folds, making it ideal for balanced datasets. Bootstrap sampling provides variance estimates by resampling with replacement, useful for smaller datasets but may introduce bias if assumptions about data distribution are unmet.

cross-validation vs bootstrap sampling Infographic

techiny.com

techiny.com