Max pooling captures the most prominent features by selecting the highest value within a pooling window. This preserves strong responses such as edges and adds some spatial invariance in convolutional neural networks. Average pooling, on the other hand, computes the mean value of the pooling window, which gives a smoother and more generalized representation of the feature map. The choice between them depends on the task: max pooling is preferred for highlighting salient features, while average pooling is useful for retaining contextual information.

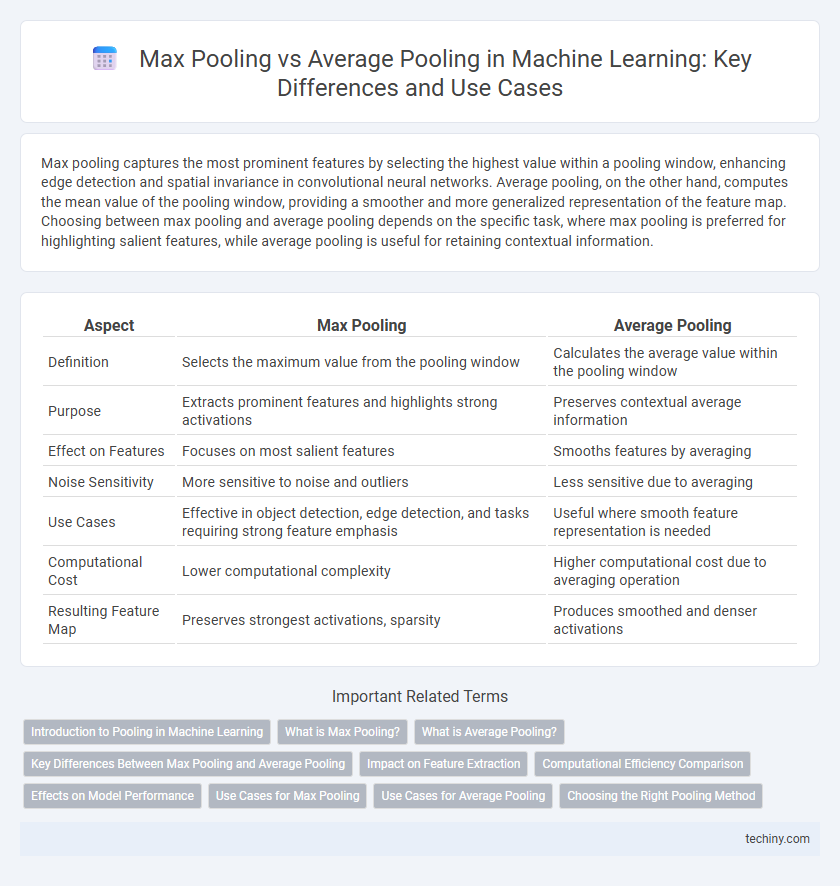

Table of Comparison

| Aspect | Max Pooling | Average Pooling |
|---|---|---|
| Definition | Selects the maximum value from the pooling window | Calculates the average value within the pooling window |
| Purpose | Extracts prominent features and highlights strong activations | Preserves the average response of the region as context |
| Effect on Features | Focuses on the most salient features | Smooths features by averaging |
| Noise Sensitivity | More sensitive to noise and outliers | Less sensitive, because averaging dilutes outliers |
| Use Cases | Object detection, edge detection, and tasks requiring strong feature emphasis | Tasks where a smooth feature representation is needed |
| Computational Cost | About the same as average pooling: roughly one comparison per value in the window | About the same as max pooling: roughly one addition per value in the window, plus one division |
| Resulting Feature Map | Keeps the strongest activations and tends to preserve sparsity | Produces smoother, denser activations |

Introduction to Pooling in Machine Learning

Pooling is a core operation in convolutional neural networks. It reduces the spatial size of feature maps, which lowers the computation needed in later layers and can help control overfitting. Max pooling selects the maximum value within a pooling window, preserving prominent features such as edges, while average pooling computes the mean value, summarizing the overall response of the region. Both methods provide some invariance to small translations and reduce dimensionality, but they differ in how they summarize each local region of the input.
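The reduction in spatial size can be computed directly from the window size and stride. The sketch below assumes a square window, the same stride in both directions, and no padding (windows that would run past the border are dropped); the function name `pooled_output_size` is hypothetical, and frameworks may round differently when padding or ceiling modes are enabled.

```python
def pooled_output_size(height, width, kernel_size, stride):
    """Spatial size of a feature map after pooling, assuming no padding.

    Uses floor((size - kernel_size) / stride) + 1 for each dimension,
    so partial windows at the right and bottom edges are discarded.
    """
    out_h = (height - kernel_size) // stride + 1
    out_w = (width - kernel_size) // stride + 1
    return out_h, out_w


# A 2x2 window with stride 2 halves each spatial dimension.
print(pooled_output_size(224, 224, kernel_size=2, stride=2))  # (112, 112)
# Overlapping 3x3 windows with stride 2.
print(pooled_output_size(5, 5, kernel_size=3, stride=2))      # (2, 2)
```

With the common 2×2, stride-2 setting, a 224×224 map becomes 112×112, so each channel keeps a quarter of its activations.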

What is Max Pooling?

Max pooling is a downsampling technique used in convolutional neural networks that selects the maximum value within a window (often called the kernel), reducing the spatial dimensions of feature maps while retaining the most prominent features. Unlike a convolution filter, the window has no learned weights. Keeping only the strongest activation in each window adds some invariance to small translations and helps extract dominant patterns, which can improve model robustness and efficiency. Max pooling is particularly beneficial in tasks that rely on edge and texture responses because it emphasizes high-intensity activations.
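To make the operation concrete, here is a minimal pure-Python sketch of 2-D max pooling on a single-channel feature map stored as a list of rows. The function name `max_pool2d`, the no-padding behavior, and the sample values are assumptions for illustration; deep learning frameworks provide optimized equivalents (for example PyTorch's `torch.nn.MaxPool2d`).

```python
def max_pool2d(feature_map, kernel_size=2, stride=2):
    """Max pooling over a 2-D feature map given as a list of rows.

    No padding: windows that would extend past the border are skipped.
    """
    height, width = len(feature_map), len(feature_map[0])
    output = []
    for top in range(0, height - kernel_size + 1, stride):
        row = []
        for left in range(0, width - kernel_size + 1, stride):
            # Collect every value inside the current window.
            window = [
                feature_map[top + i][left + j]
                for i in range(kernel_size)
                for j in range(kernel_size)
            ]
            # Keep only the strongest activation.
            row.append(max(window))
        output.append(row)
    return output


feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 2],
    [0, 2, 9, 5],
    [1, 1, 3, 4],
]
print(max_pool2d(feature_map))  # [[6, 2], [2, 9]]
```

Each output value comes from one non-overlapping 2×2 block, so the 4×4 map shrinks to 2×2 and only the largest value of each block survives.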

What is Average Pooling?

Average pooling is a downsampling technique in convolutional neural networks that calculates the mean of the values within a defined pooling window. It reduces the spatial dimensions of feature maps while preserving the overall texture and background information. Because every value in the window contributes to the result, this method is often preferred in tasks that need a smooth feature representation and less sensitivity to noise than max pooling provides.
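For comparison, the same kind of minimal sketch with the mean in place of the maximum. As before, the function name `avg_pool2d`, the no-padding behavior, and the sample feature map are illustrative assumptions rather than a framework API.

```python
def avg_pool2d(feature_map, kernel_size=2, stride=2):
    """Average pooling over a 2-D feature map given as a list of rows.

    No padding: windows that would extend past the border are skipped.
    """
    height, width = len(feature_map), len(feature_map[0])
    output = []
    for top in range(0, height - kernel_size + 1, stride):
        row = []
        for left in range(0, width - kernel_size + 1, stride):
            # Collect every value inside the current window.
            window = [
                feature_map[top + i][left + j]
                for i in range(kernel_size)
                for j in range(kernel_size)
            ]
            # Every value contributes equally to the result.
            row.append(sum(window) / len(window))
        output.append(row)
    return output


feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 2],
    [0, 2, 9, 5],
    [1, 1, 3, 4],
]
print(avg_pool2d(feature_map))  # [[3.5, 1.25], [1.0, 5.25]]
```

On this input, the bottom-right window contains a 9, but its average is only 5.25 because the smaller neighbors pull the result down.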

Key Differences Between Max Pooling and Average Pooling

Max pooling selects the maximum value from each pooling window, capturing the most prominent features and preserving edge and texture responses in convolutional neural networks. Average pooling computes the mean value within the window, providing a smoother, more generalized representation that reduces noise but may dilute important features. The choice affects model performance: max pooling is a common choice for downsampling between convolutional layers (as in VGG), while average pooling, especially global average pooling, often appears at the end of classification networks such as ResNet, where it collapses each feature map to a single value.

Impact on Feature Extraction

Max pooling enhances feature extraction by capturing the most prominent activations within a region, preserving edge and texture details crucial for tasks like object recognition. Average pooling smooths the feature map by computing the mean values, which can reduce noise but may blur important features, leading to less distinctive representations. Max pooling tends to improve model robustness in detecting salient features, while average pooling supports a more generalized feature aggregation.
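The blurring effect can be seen on two hypothetical 2×2 windows (flattened to lists) that hold the same total activation: one concentrates it in a single strong response, the other spreads it evenly. The helper `summarize` is a name chosen for this sketch.

```python
def summarize(window):
    """Return (max-pooled value, average-pooled value) for one window."""
    return max(window), sum(window) / len(window)


sharp = [0, 0, 0, 8]    # one strong, localized response (e.g. an edge)
diffuse = [2, 2, 2, 2]  # the same total activation spread evenly

for name, window in [("sharp", sharp), ("diffuse", diffuse)]:
    max_value, avg_value = summarize(window)
    print(name, "max:", max_value, "avg:", avg_value)
# sharp max: 8 avg: 2.0
# diffuse max: 2 avg: 2.0
```

Average pooling maps both windows to 2.0, so the distinction between a sharp feature and a diffuse one is lost, while max pooling keeps it. If the 8 were noise instead of a real feature, max pooling would pass it on just as faithfully, which is why it is more sensitive to outliers.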

Computational Efficiency Comparison

Max pooling and average pooling have very similar computational cost. Both visit every value in the pooling window once: max pooling performs one comparison per additional value, and average pooling performs one addition per additional value plus a single division (or multiplication by a constant) per window. This difference is usually negligible next to the cost of the convolution layers, so the choice between the two rarely has a noticeable effect on inference time or resource consumption. Average pooling's smoothing effect can therefore provide better feature generalization without a meaningful efficiency penalty.
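The operation counts can be written out for a $k \times k$ window, assuming a sequential reduction over the window and an output of size $H_{out} \times W_{out}$ with $C$ channels:

$$
\text{max pooling: } (k^2 - 1)\ \text{comparisons per window}, \qquad
\text{average pooling: } (k^2 - 1)\ \text{additions} + 1\ \text{division per window}
$$

$$
\text{total work} \propto H_{out} \, W_{out} \, C \, k^2 \quad \text{for both methods}
$$

For the common $2 \times 2$ window this is 3 comparisons versus 3 additions and 1 division, so both methods scale identically with the size of the feature map.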

Effects on Model Performance

Max pooling keeps the strongest activations, which often helps preserve critical information and can improve accuracy in tasks that depend on edge or texture detection. Average pooling smooths feature maps by averaging each window, which can reduce noise but may dilute distinctive features and lower the model's sensitivity to fine detail. Which method yields better accuracy depends on the architecture and dataset: max pooling is a common default in the intermediate layers of classification networks, whereas average pooling can be the better fit when robustness matters more than fine-grained detail.

Use Cases for Max Pooling

Max pooling is widely used in convolutional neural networks (CNNs) for tasks such as image classification and object detection, where it captures the most prominent features by selecting the maximum value from each pooling window. It makes the model more robust to small spatial variations and reduces computational cost by downsampling feature maps while preserving important edge and texture information. Max pooling is particularly effective on large inputs such as high-resolution images, where it shrinks the feature maps quickly while retaining the salient activations needed for accurate feature extraction and pattern recognition.
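As an illustration of how quickly repeated pooling shrinks feature maps, VGG-16 applies five 2×2, stride-2 max pooling layers to a 224×224 input. The sketch below tracks only the spatial side length; it assumes the convolutions between the pools preserve spatial size (as VGG's padded 3×3 convolutions do), and the function name `spatial_sizes` is hypothetical.

```python
def spatial_sizes(input_size, num_pools, kernel_size=2, stride=2):
    """Side length of a square feature map after each pooling stage.

    Assumes no padding and that layers between pools keep the size fixed.
    """
    sizes = [input_size]
    for _ in range(num_pools):
        sizes.append((sizes[-1] - kernel_size) // stride + 1)
    return sizes


sizes = spatial_sizes(224, num_pools=5)
print(sizes)  # [224, 112, 56, 28, 14, 7]

# Reduction factor in activations per channel from input to final map.
print(sizes[0] ** 2 // sizes[-1] ** 2)  # 1024
```

Each stage keeps a quarter of the activations per channel, so after five stages every channel holds 1/1024 of its original spatial positions.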

Use Cases for Average Pooling

Average pooling is often used where preserving background information and reducing noise are important, for example in image segmentation and facial recognition tasks. Because it computes the mean value within each pooling window, it produces smooth feature maps, which suits texture analysis and the detection of subtle, spread-out patterns. Average pooling is preferred when a generalized summary of a region is more useful than the single strongest activation that max pooling keeps.
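The most generalized form of average pooling is global average pooling, where the window covers an entire feature map, so each channel is reduced to a single number. Architectures such as ResNet use it just before the final classifier. Below is a minimal pure-Python sketch; the function name `global_average_pool` and the nested-list layout (channels, then rows) are assumptions for illustration.

```python
def global_average_pool(feature_maps):
    """Average each channel's whole 2-D map down to a single number.

    feature_maps: list of channels, each a list of rows.
    """
    pooled = []
    for channel in feature_maps:
        # Flatten the channel so every position contributes equally.
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


# Two hypothetical 2x3 channels.
channels = [
    [[1, 2, 3], [4, 5, 6]],
    [[0, 0, 0], [0, 0, 6]],
]
print(global_average_pool(channels))  # [3.5, 1.0]
```

The output has one value per channel regardless of the input's height and width, which lets a network feed feature maps of varying spatial size into a fixed-size classifier.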

Choosing the Right Pooling Method

Choosing the right pooling method depends on the task and the characteristics of the data. Max pooling highlights the most prominent features by selecting the maximum value within each pooling window, which often helps in image recognition by preserving edges and textures. Average pooling smooths feature maps by averaging the values in each window, which reduces noise and retains information from every position in the region but may blur important details. Max pooling is preferred for applications that need strong feature detection and spatial invariance, while average pooling suits scenarios that need feature generalization and smoother representations.

Max Pooling vs Average Pooling Infographic

techiny.com