Autoencoders and Principal Component Analysis (PCA) are both techniques used for dimensionality reduction in machine learning, with autoencoders leveraging neural networks to learn nonlinear mappings while PCA relies on linear transformations. Autoencoders can capture complex patterns and reconstruct data with higher fidelity in cases of nonlinear relationships, whereas PCA is computationally efficient and interpretable but limited to linear correlations. Choosing between the two depends on the data complexity, computational resources, and the specific application requirements for feature extraction or noise reduction.

Table of Comparison

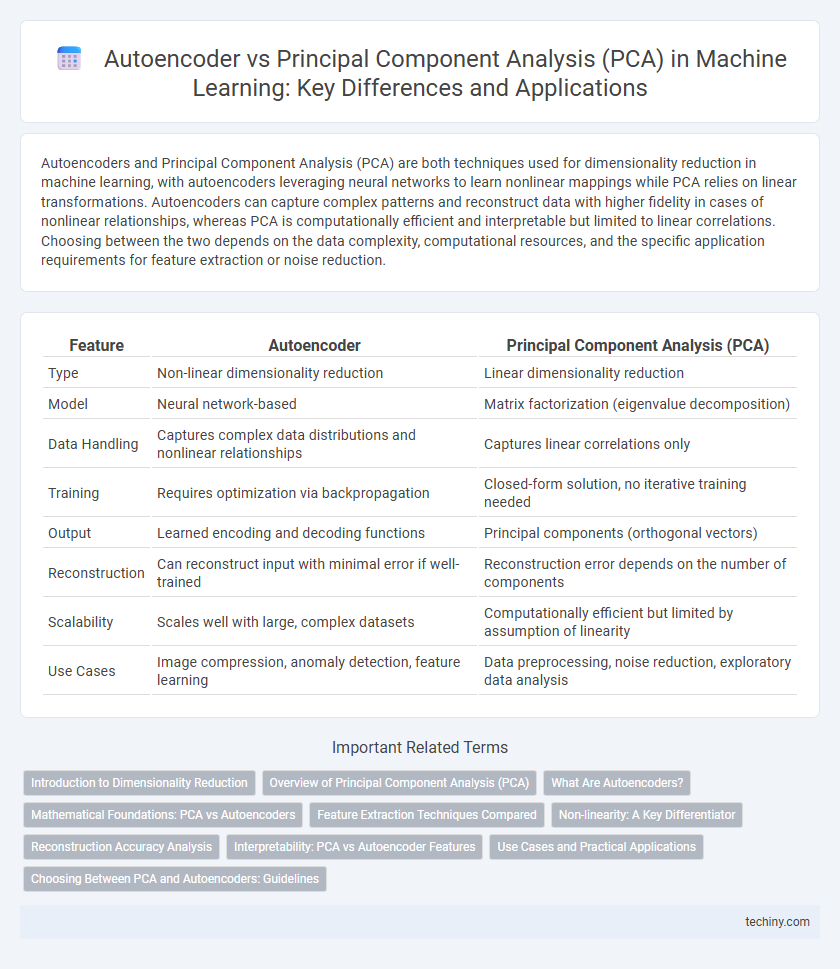

| Feature | Autoencoder | Principal Component Analysis (PCA) |

|---|---|---|

| Type | Non-linear dimensionality reduction | Linear dimensionality reduction |

| Model | Neural network-based | Matrix factorization (eigenvalue decomposition) |

| Data Handling | Captures complex data distributions and nonlinear relationships | Captures linear correlations only |

| Training | Requires optimization via backpropagation | Closed-form solution, no iterative training needed |

| Output | Learned encoding and decoding functions | Principal components (orthogonal vectors) |

| Reconstruction | Can reconstruct input with minimal error if well-trained | Reconstruction error depends on the number of components |

| Scalability | Scales well with large, complex datasets | Computationally efficient but limited by assumption of linearity |

| Use Cases | Image compression, anomaly detection, feature learning | Data preprocessing, noise reduction, exploratory data analysis |

Introduction to Dimensionality Reduction

Autoencoders and Principal Component Analysis (PCA) are prominent techniques for dimensionality reduction in machine learning, transforming high-dimensional data into lower-dimensional representations while preserving essential information. PCA is a linear method that identifies orthogonal components capturing maximum variance, ideal for datasets with linear correlations. Autoencoders leverage neural networks to learn non-linear feature encoding through reconstruction tasks, enabling more flexible and powerful dimensionality reduction for complex data structures.

Overview of Principal Component Analysis (PCA)

Principal Component Analysis (PCA) is a statistical technique used for dimensionality reduction by transforming data into a set of orthogonal components that capture the maximum variance. PCA identifies principal components through eigenvalue decomposition of the covariance matrix or singular value decomposition of the data matrix. This method preserves essential features while reducing noise and redundancy, making it effective for preprocessing in machine learning workflows.

What Are Autoencoders?

Autoencoders are neural networks designed to learn efficient data encodings by compressing input data into a lower-dimensional representation and then reconstructing the original input. They consist of an encoder that maps the input to a latent space and a decoder that attempts to reconstruct the input from this compressed representation, enabling nonlinear dimensionality reduction. Autoencoders excel in capturing complex data structures beyond the linear transformations performed by Principal Component Analysis (PCA).

Mathematical Foundations: PCA vs Autoencoders

Principal Component Analysis (PCA) relies on linear algebra, utilizing eigenvalue decomposition of the covariance matrix to identify directions of maximum variance and project data onto these principal components. Autoencoders employ nonlinear neural networks to learn efficient codings by minimizing reconstruction error through backpropagation, capturing complex data distributions beyond linear correlations. While PCA is limited to linear transformations, autoencoders flexibly model nonlinear relationships, offering superior representation learning in high-dimensional, complex datasets.

Feature Extraction Techniques Compared

Autoencoders leverage neural networks to learn nonlinear feature representations, capturing complex data patterns beyond linear correlations, unlike Principal Component Analysis (PCA) which reduces dimensionality through linear transformations preserving maximum variance. Autoencoders can model intricate structures via multiple hidden layers, enhancing reconstruction accuracy and enabling robust feature extraction for tasks like image compression and anomaly detection. PCA remains computationally efficient and interpretable for linearly separable data but lacks flexibility in handling highly nonlinear relationships that autoencoders effectively address.

Non-linearity: A Key Differentiator

Autoencoders leverage non-linear activation functions to capture complex, non-linear relationships within high-dimensional data, enabling more effective dimensionality reduction compared to Principal Component Analysis (PCA), which is limited to linear transformations. This non-linearity allows autoencoders to model intricate data structures such as images and speech more accurately. Consequently, autoencoders often outperform PCA in scenarios requiring the extraction of deep, non-linear feature representations.

Reconstruction Accuracy Analysis

Autoencoders consistently achieve higher reconstruction accuracy than Principal Component Analysis (PCA) by capturing nonlinear data relationships through deep neural network architectures. PCA relies on linear transformations, limiting its ability to represent complex data distributions, especially in high-dimensional spaces. Empirical studies demonstrate autoencoders reduce reconstruction error significantly in image and signal processing tasks compared to PCA, enhancing feature extraction and data compression quality.

Interpretability: PCA vs Autoencoder Features

Principal Component Analysis (PCA) offers highly interpretable features by projecting data onto orthogonal axes that capture maximum variance, making it easier to understand the contribution of each original variable. Autoencoders, in contrast, learn nonlinear representations through neural networks, often resulting in complex latent features that lack straightforward interpretability. Consequently, PCA is preferred for applications demanding transparent feature explanation, while autoencoders excel in capturing intricate data structures at the cost of interpretability.

Use Cases and Practical Applications

Autoencoders excel in nonlinear dimensionality reduction, making them ideal for image reconstruction, anomaly detection, and feature learning in complex datasets such as medical imaging and speech recognition. PCA is well-suited for linear feature extraction, commonly used in exploratory data analysis, noise reduction, and preprocessing in finance, genetics, and environmental data. Both techniques enhance model performance by reducing dimensionality, but autoencoders offer greater flexibility in capturing intricate data patterns across various industries.

Choosing Between PCA and Autoencoders: Guidelines

When choosing between PCA and Autoencoders, consider the data complexity and dimensionality reduction goals: PCA is effective for linear transformations and smaller datasets with clear variance directions, while Autoencoders excel in modeling non-linear relationships and large-scale data with complex patterns. Evaluate reconstruction error, interpretability, and computational resources, as Autoencoders require more training time and tuning but often yield better representations for deep learning applications. Use PCA for quick, interpretable results and Autoencoders when capturing intricate data structures or when deploying within neural network pipelines.

Autoencoder vs Principal Component Analysis (PCA) Infographic

techiny.com

techiny.com