The cold start problem in machine learning occurs when a model has little to no historical data to learn from, resulting in poor initial performance and slow adaptation. A warm start instead initializes learning from a pre-trained model or previously acquired knowledge, which typically speeds up convergence and improves early accuracy. Techniques such as transfer learning and meta-learning help overcome cold start challenges by letting models build on patterns learned elsewhere.

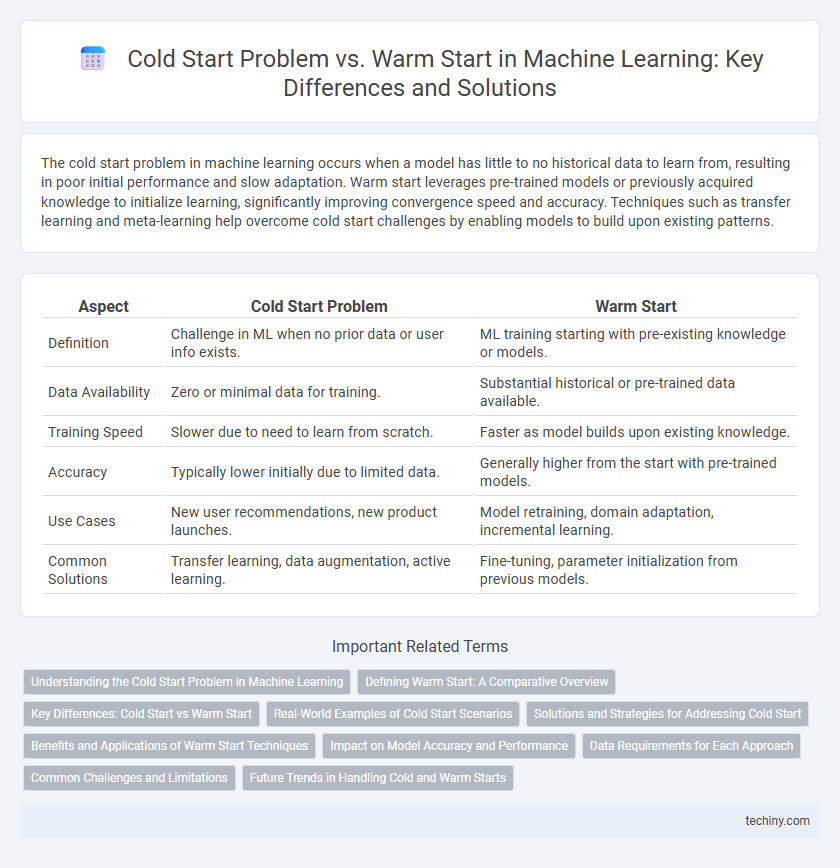

Table of Comparison

| Aspect | Cold Start Problem | Warm Start |
|---|---|---|
| Definition | Challenge in ML when no prior data or user info exists. | ML training starting with pre-existing knowledge or models. |
| Data Availability | Zero or minimal data for training. | Substantial historical data or pre-trained models available. |
| Training Speed | Slower due to need to learn from scratch. | Faster as model builds upon existing knowledge. |
| Accuracy | Typically lower initially due to limited data. | Generally higher from the start with pre-trained models. |
| Use Cases | New user recommendations, new product launches. | Model retraining, domain adaptation, incremental learning. |
| Common Solutions | Transfer learning, data augmentation, active learning. | Fine-tuning, parameter initialization from previous models. |

Understanding the Cold Start Problem in Machine Learning

The cold start problem in machine learning occurs when a model lacks sufficient data to make accurate predictions, especially in recommendation systems or user personalization. It contrasts with the warm start scenario where previous data or pretrained models are available, enabling faster adaptation and improved performance. Addressing the cold start requires techniques like transfer learning, data augmentation, or hybrid recommendation systems to overcome data scarcity and enhance model effectiveness.

Defining Warm Start: A Comparative Overview

Warm start in machine learning refers to initializing an algorithm with prior knowledge or pre-trained models, significantly reducing training time compared to cold start scenarios where models start from scratch. This approach leverages previously learned parameters, enabling faster convergence and improved performance in iterative processes such as hyperparameter tuning and transfer learning. Warm start methods are particularly effective in resource-constrained environments and applications requiring rapid adaptation to new but related tasks.
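
To make the convergence claim concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not a reference implementation: the `fit_line` helper, the toy data, the learning rate, and the tolerance are all hypothetical choices. It fits a line by gradient descent twice on the same new data, once from zero-initialized parameters (cold start) and once from the parameters of an earlier, related fit (warm start), and counts the updates each run needs.

```python
def fit_line(xs, ys, w=0.0, b=0.0, lr=0.1, tol=1e-6, max_iter=100_000):
    """Fit y = w*x + b by batch gradient descent.

    Returns (w, b, updates), where updates is the number of parameter
    updates performed before both gradient components fell below tol.
    """
    n = len(xs)
    for step in range(max_iter):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        if abs(grad_w) < tol and abs(grad_b) < tol:
            return w, b, step
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, max_iter


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
old_ys = [2.0 * x + 1.0 for x in xs]  # data the earlier model was trained on
new_ys = [2.1 * x + 0.9 for x in xs]  # slightly shifted data for the new task

# Earlier training run whose learned parameters are kept.
w_old, b_old, _ = fit_line(xs, old_ys)

# Cold start: begin from zeros. Warm start: begin from the earlier parameters.
w_cold, b_cold, cold_updates = fit_line(xs, new_ys)
w_warm, b_warm, warm_updates = fit_line(xs, new_ys, w=w_old, b=b_old)

print(f"cold start: {cold_updates} updates, warm start: {warm_updates} updates")
```

On a convex problem like this one, both runs reach essentially the same solution; the warm start only saves iterations. The saving depends on how close the old task is to the new one, so an initialization from an unrelated task may not help.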

Key Differences: Cold Start vs Warm Start

Cold start in machine learning refers to the challenge of making accurate predictions or recommendations when there is little to no prior data available for a new user, item, or model. Warm start leverages existing knowledge, such as pre-trained models or historical data, to accelerate training and improve initial performance. The key difference is the starting point: a cold start has little or no prior information to work with, whereas a warm start begins from pre-initialized parameters or transferred knowledge, which shortens convergence time and tends to improve early accuracy.

Real-World Examples of Cold Start Scenarios

Cold start problems occur when machine learning models lack sufficient initial data, commonly seen in recommendation systems for new users or products, such as Netflix recommending movies to a first-time viewer. In contrast, warm start scenarios benefit from existing user interactions or prior model training, enabling faster and more accurate predictions in applications like personalized advertising on platforms with established user profiles. Real-world cold start challenges also arise in fraud detection systems for new merchants where historical data is unavailable, highlighting the need for innovative solutions like transfer learning or hybrid models.

Solutions and Strategies for Addressing Cold Start

The cold start problem in machine learning arises from the lack of sufficient data for new users or items, which hinders accurate predictions. Solutions include content-based filtering, transfer learning, and hybrid recommendation systems that combine collaborative filtering with user or item metadata. Active learning, data augmentation, and cross-domain data can further mitigate cold start challenges and improve model performance from initial deployment.
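
A hybrid recommender of the kind described above can be sketched as a weighted blend. The sketch below is one possible design, not a standard API: `content_score`, `hybrid_score`, the Jaccard similarity, and the constant `k` are all assumptions made for illustration. The weight on the collaborative signal grows with the number of interactions, so a brand-new user is scored purely on metadata.

```python
def content_score(user_interests, item_tags):
    """Jaccard overlap between a user's stated interests and an item's tags."""
    interests, tags = set(user_interests), set(item_tags)
    if not interests or not tags:
        return 0.0
    return len(interests & tags) / len(interests | tags)


def hybrid_score(collab, content, n_interactions, k=10):
    """Blend both signals; the collaborative weight grows with interaction count.

    k is a hypothetical tuning constant: at n_interactions == k the two
    signals are weighted equally.
    """
    alpha = n_interactions / (n_interactions + k)  # 0 for a brand-new user
    return alpha * collab + (1 - alpha) * content


content = content_score(["sci-fi", "drama"], ["drama", "comedy"])

# New user: no history, so the score is purely content-based.
new_user = hybrid_score(collab=0.0, content=content, n_interactions=0)

# Established user: 90 interactions, so collaborative filtering dominates.
regular = hybrid_score(collab=0.8, content=content, n_interactions=90)

print(new_user, regular)
```

The same blend applies to new items if `n_interactions` counts the item's interactions instead of the user's.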

Benefits and Applications of Warm Start Techniques

Warm start techniques in machine learning accelerate model convergence by initializing training with pre-trained weights or parameters from related tasks, reducing the need for extensive data and computational resources. These methods enhance performance in transfer learning, hyperparameter optimization, and iterative model updates, especially in scenarios with limited new data. Applications span recommendation systems, natural language processing, and computer vision, where rapid adaptation to evolving data improves accuracy and efficiency.

Impact on Model Accuracy and Performance

Cold start problems in machine learning arise when models lack sufficient historical data, leading to lower accuracy and slower convergence during training. Warm start methods leverage previously trained model parameters or related datasets, giving the model a head start that can improve accuracy and reduce training time. The gap is typically largest early on, when a warm-started model adapts faster and predicts more reliably, and it tends to narrow as the cold-start model accumulates data.

Data Requirements for Each Approach

Cold start problems in machine learning arise from the lack of historical data, which makes it hard to generate accurate predictions or recommendations without initial user or item information. Warm start methods leverage pre-existing data, such as prior model parameters or related datasets, to accelerate training and improve performance by providing a strong initialization point. In terms of data requirements, a cold start calls for external or demographic data to compensate for the missing context, while a warm start depends on having a pre-trained model or a sufficiently large related dataset to initialize from.
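
One simple way to use external data as a stand-in for missing history is a damped mean, which shrinks an item's average rating toward a prior until enough real ratings arrive. The sketch below is illustrative only: `damped_mean`, `prior_strength`, and the sample numbers are hypothetical, and the prior could equally be a global average or the average within a demographic segment.

```python
def damped_mean(ratings, prior_mean, prior_strength=5):
    """Average rating shrunk toward prior_mean when few ratings exist.

    prior_strength acts like a number of pseudo-ratings at prior_mean,
    so its influence fades as real ratings accumulate.
    """
    total = prior_strength * prior_mean + sum(ratings)
    return total / (prior_strength + len(ratings))


prior = 3.5  # assumed average taken from external or segment-level data

no_history = damped_mean([], prior)            # falls back to the prior
two_ratings = damped_mean([5, 5], prior)       # still close to the prior
many_ratings = damped_mean([5] * 200, prior)   # dominated by real ratings

print(no_history, two_ratings, many_ratings)
```

With no ratings the estimate equals the prior, and with many ratings it approaches the plain average, which mirrors the transition from a cold start to a data-rich regime.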

Common Challenges and Limitations

The cold start problem in machine learning occurs when models lack sufficient data to make accurate predictions, often leading to poor initial performance and slow learning. In contrast, warm start techniques leverage prior knowledge or pretrained models to jumpstart the learning process, yet they may inherit biases or outdated information. Both scenarios face challenges such as data sparsity, overfitting risks, and limited generalization, complicating effective model training and deployment.

Future Trends in Handling Cold and Warm Starts

Future trends in handling cold start problems focus on leveraging advanced transfer learning and meta-learning techniques to reduce dependency on large labeled datasets, enabling models to quickly adapt to new users or tasks. Warm start approaches are evolving with continual learning frameworks that integrate previous knowledge efficiently while preventing catastrophic forgetting, enhancing model performance over time. Hybrid strategies combining reinforcement learning with active learning are emerging to optimize personalized recommendations and predictions from initial interactions.


techiny.com
