Convex loss functions ensure a single global minimum, simplifying optimization and guaranteeing convergence to the best solution in machine learning models. Non-convex loss functions, common in deep learning, contain multiple local minima and saddle points, making optimization more challenging and often requiring advanced techniques like stochastic gradient descent. Choosing between convex and non-convex loss functions depends on the model complexity and the trade-off between computational efficiency and representational power.

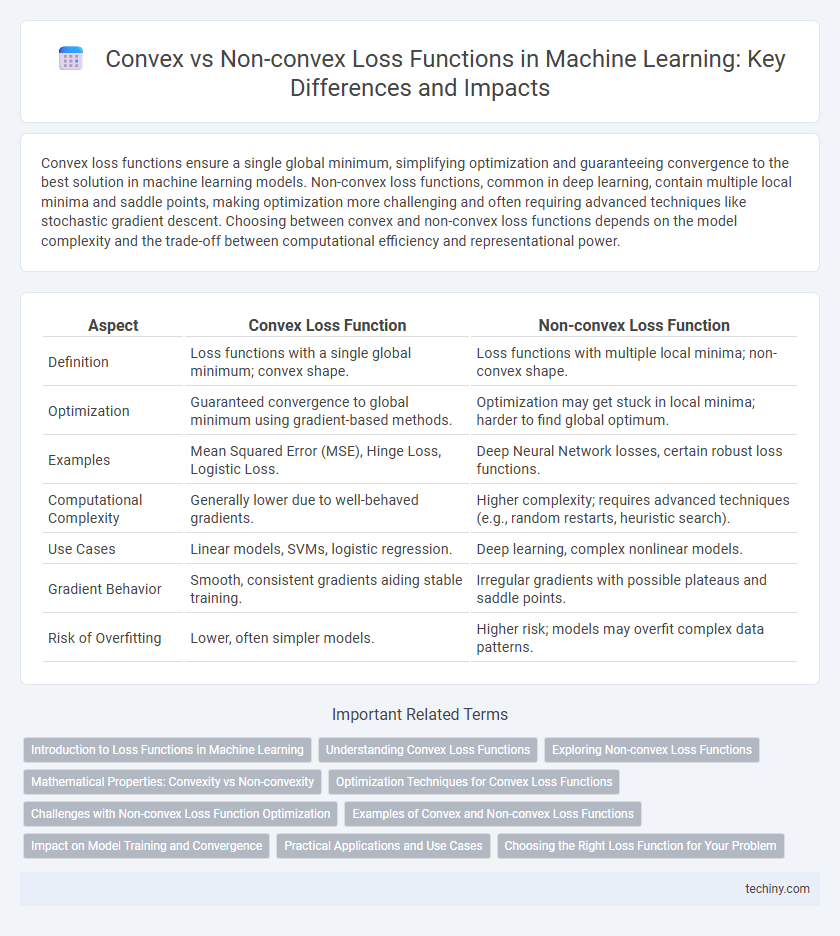

Table of Comparison

| Aspect | Convex Loss Function | Non-convex Loss Function |

|---|---|---|

| Definition | Loss functions with a single global minimum; convex shape. | Loss functions with multiple local minima; non-convex shape. |

| Optimization | Guaranteed convergence to global minimum using gradient-based methods. | Optimization may get stuck in local minima; harder to find global optimum. |

| Examples | Mean Squared Error (MSE), Hinge Loss, Logistic Loss. | Deep Neural Network losses, certain robust loss functions. |

| Computational Complexity | Generally lower due to well-behaved gradients. | Higher complexity; requires advanced techniques (e.g., random restarts, heuristic search). |

| Use Cases | Linear models, SVMs, logistic regression. | Deep learning, complex nonlinear models. |

| Gradient Behavior | Smooth, consistent gradients aiding stable training. | Irregular gradients with possible plateaus and saddle points. |

| Risk of Overfitting | Lower, often simpler models. | Higher risk; models may overfit complex data patterns. |

Introduction to Loss Functions in Machine Learning

Loss functions in machine learning measure the difference between predicted and actual values, guiding model optimization. Convex loss functions, such as mean squared error, ensure a single global minimum, facilitating efficient and reliable convergence during training. Non-convex loss functions may contain multiple local minima and saddle points, posing challenges in optimization but enabling greater model expressiveness for complex tasks.

Understanding Convex Loss Functions

Convex loss functions in machine learning ensure a single global minimum, simplifying optimization and improving convergence in training algorithms such as gradient descent. Common examples include Mean Squared Error (MSE) and Hinge Loss, which provide smooth, predictable gradients that facilitate stable updates to model parameters. Understanding convex loss functions is critical for building models that are efficient to train and less prone to getting stuck in suboptimal solutions.

Exploring Non-convex Loss Functions

Non-convex loss functions, characterized by multiple local minima and saddle points, enable modeling of complex patterns beyond the reach of convex counterparts in machine learning. They play a critical role in deep neural networks, where optimization landscapes are inherently non-convex, allowing for richer representations and improved performance on tasks like image recognition and natural language processing. Despite their challenges in convergence and optimization, advanced techniques such as stochastic gradient descent with momentum, adaptive learning rates, and ensemble methods help effectively navigate these complex loss surfaces.

Mathematical Properties: Convexity vs Non-convexity

Convex loss functions exhibit a gradient landscape with a single global minimum, ensuring stable convergence and predictable optimization behavior in machine learning models. Non-convex loss functions contain multiple local minima and saddle points, posing challenges for gradient-based algorithms to find the global optimum efficiently. The mathematical property of convexity guarantees that any local minimum is also a global minimum, whereas non-convexity lacks this assurance, leading to potential model performance variability.

Optimization Techniques for Convex Loss Functions

Optimization techniques for convex loss functions leverage their property of a single global minimum, enabling efficient convergence through algorithms such as Gradient Descent, Stochastic Gradient Descent, and Newton's Method. These methods benefit from convexity by guaranteeing that any local minimum is a global minimum, simplifying parameter updates and improving stability. Convex optimization problems for loss functions often utilize strong duality and subgradient methods to handle nondifferentiable cases, ensuring robust solutions in machine learning models.

Challenges with Non-convex Loss Function Optimization

Non-convex loss function optimization in machine learning poses significant challenges due to the presence of multiple local minima and saddle points, which complicate convergence to the global optimum. This complexity leads to unstable training dynamics and increased computational cost, often requiring advanced optimization algorithms like stochastic gradient descent with momentum or adaptive learning rates. Additionally, non-convex landscapes make gradient-based methods sensitive to initialization and hyperparameter tuning, impacting model accuracy and generalization.

Examples of Convex and Non-convex Loss Functions

Examples of convex loss functions include Mean Squared Error (MSE) and Hinge Loss, which are widely used in regression and classification tasks due to their guaranteed global minima. Non-convex loss functions, such as the Cross-Entropy Loss used in deep neural networks and the Huber Loss for robust regression, often involve multiple local minima, making optimization more challenging. The choice between convex and non-convex loss functions depends on the model complexity and the desired balance between computational efficiency and representational power.

Impact on Model Training and Convergence

Convex loss functions guarantee global optimality and faster convergence rates in model training due to their well-defined, bowl-shaped landscapes that prevent local minima entrapment. Non-convex loss functions often introduce multiple local minima and saddle points, increasing the complexity of optimization algorithms and potentially slowing convergence or causing suboptimal solutions. This distinction heavily influences the stability, efficiency, and accuracy of machine learning models during the training process.

Practical Applications and Use Cases

Convex loss functions, such as mean squared error and logistic loss, are widely used in linear regression and classification tasks due to their guarantee of convergence to a global minimum, ensuring stable and efficient training in practical applications like spam detection and credit scoring. Non-convex loss functions, often encountered in deep learning architectures like convolutional neural networks and recurrent neural networks, enable modeling of complex data patterns but require advanced optimization techniques like stochastic gradient descent with heuristics to navigate local minima, making them suitable for image recognition and natural language processing. The choice between convex and non-convex loss functions depends on the complexity of the task, computational resources, and the need for model expressiveness in real-world machine learning problems.

Choosing the Right Loss Function for Your Problem

Choosing the right loss function is crucial in machine learning as convex loss functions, like mean squared error or logistic loss, guarantee global minima and stable convergence for convex models, enhancing training efficiency. Non-convex loss functions, common in deep learning with activation functions such as ReLU or sigmoid, may result in multiple local minima, requiring careful optimization strategies like stochastic gradient descent to avoid poor solutions. Selecting a loss function depends on the problem structure, model complexity, and the desired balance between optimization reliability and representational power.

Convex Loss Function vs Non-convex Loss Function Infographic

techiny.com

techiny.com