Training error measures a model's accuracy on the data it was trained on, often showing lower values due to overfitting. Test error evaluates performance on unseen data, providing a more realistic estimate of generalization ability. Minimizing the gap between training error and test error is crucial for developing models that perform well in real-world applications.

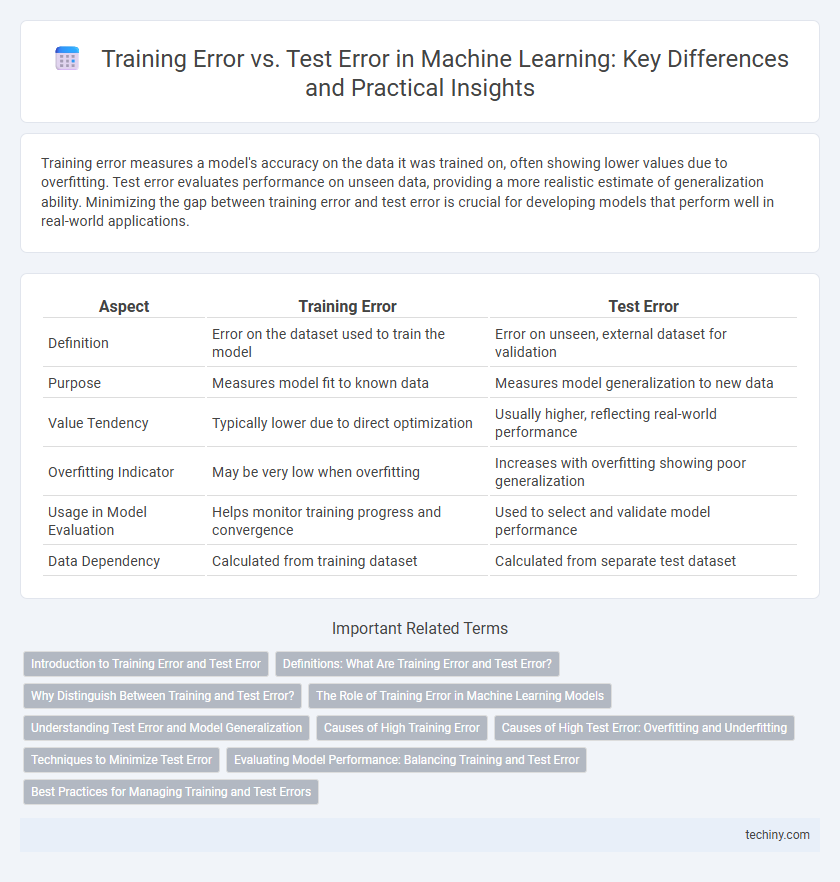

Table of Comparison

| Aspect | Training Error | Test Error |

|---|---|---|

| Definition | Error on the dataset used to train the model | Error on unseen, external dataset for validation |

| Purpose | Measures model fit to known data | Measures model generalization to new data |

| Value Tendency | Typically lower due to direct optimization | Usually higher, reflecting real-world performance |

| Overfitting Indicator | May be very low when overfitting | Increases with overfitting showing poor generalization |

| Usage in Model Evaluation | Helps monitor training progress and convergence | Used to select and validate model performance |

| Data Dependency | Calculated from training dataset | Calculated from separate test dataset |

Introduction to Training Error and Test Error

Training error measures the difference between predicted and actual outcomes on the dataset used to train the machine learning model, indicating how well the model has learned the training data. Test error evaluates the model's performance on new, unseen data, providing an estimate of its generalization ability. Monitoring both training error and test error helps identify overfitting or underfitting, essential for building robust machine learning models.

Definitions: What Are Training Error and Test Error?

Training error measures the proportion of incorrect predictions made by a machine learning model on the dataset used for training, reflecting how well the model learns from known data. Test error evaluates the model's performance on unseen data, indicating its ability to generalize beyond the training samples. Minimizing test error is crucial for developing models that perform reliably in real-world applications.

Why Distinguish Between Training and Test Error?

Distinguishing between training error and test error is crucial to evaluate a machine learning model's generalization performance accurately. Training error measures the model's accuracy on the data it was trained on, often underestimating real-world error due to overfitting. Test error assesses model performance on unseen data, providing a realistic measure of prediction accuracy and helping to detect overfitting or underfitting issues.

The Role of Training Error in Machine Learning Models

Training error measures how well a machine learning model fits the training dataset, directly reflecting the model's performance on the data it was trained on. Low training error indicates that the model has learned the underlying patterns within the training data, but it does not guarantee generalization to unseen data. Monitoring training error alongside test error helps identify overfitting, where a model performs well on training data but poorly on new, external datasets.

Understanding Test Error and Model Generalization

Test error measures a machine learning model's performance on unseen data, reflecting its ability to generalize beyond the training set. Understanding the gap between training error and test error is crucial for diagnosing overfitting or underfitting issues. Minimizing test error ensures that the model captures underlying patterns rather than noise, leading to robust generalization in real-world applications.

Causes of High Training Error

High training error in machine learning models often results from underfitting, where the model is too simple to capture the underlying patterns in the training data. Causes include insufficient model complexity, inadequate feature representation, or noisy and insufficient training data. Regularization techniques set too high can also excessively constrain the model, leading to poor fit on the training set.

Causes of High Test Error: Overfitting and Underfitting

High test error in machine learning models primarily results from overfitting or underfitting. Overfitting occurs when a model learns noise and patterns specific to the training data, causing poor generalization to unseen test data, while underfitting happens when the model is too simple to capture the underlying data distribution, leading to high bias. Effective strategies to reduce test error include model regularization, cross-validation, and ensuring appropriate model complexity to balance bias and variance.

Techniques to Minimize Test Error

Regularization methods such as L1 and L2 penalize model complexity to reduce test error by preventing overfitting during training. Cross-validation techniques provide reliable estimates of test error, guiding hyperparameter tuning to improve generalization performance. Early stopping monitors validation error to halt training before overfitting occurs, minimizing discrepancies between training error and test error.

Evaluating Model Performance: Balancing Training and Test Error

Balancing training error and test error is crucial for evaluating machine learning model performance, as low training error alone often indicates overfitting, while high test error suggests poor generalization to unseen data. Effective evaluation uses metrics such as cross-validation error and validation curves to identify the optimal trade-off, ensuring the model captures underlying patterns without memorizing noise. Monitoring convergence between training and test errors helps improve model robustness and predictive accuracy in real-world applications.

Best Practices for Managing Training and Test Errors

Minimizing training error while preventing overfitting is essential to improve model generalization on unseen data, achieved through techniques like cross-validation and regularization. Monitoring both training and test errors helps detect underfitting or overfitting, guiding parameter tuning and model complexity adjustments. Employing strategies such as early stopping and data augmentation further balances training and test errors, ensuring robust and accurate machine learning models.

Training Error vs Test Error Infographic

techiny.com

techiny.com