Principal Component Analysis (PCA) reduces data dimensionality by identifying directions of maximum variance without considering class labels, making it an unsupervised technique. Linear Discriminant Analysis (LDA) maximizes class separability by projecting features onto a lower-dimensional space using class-specific information, thus serving as a supervised method. While PCA is ideal for feature extraction in unlabeled data, LDA excels in classification tasks by enhancing discrimination between distinct classes.

Table of Comparison

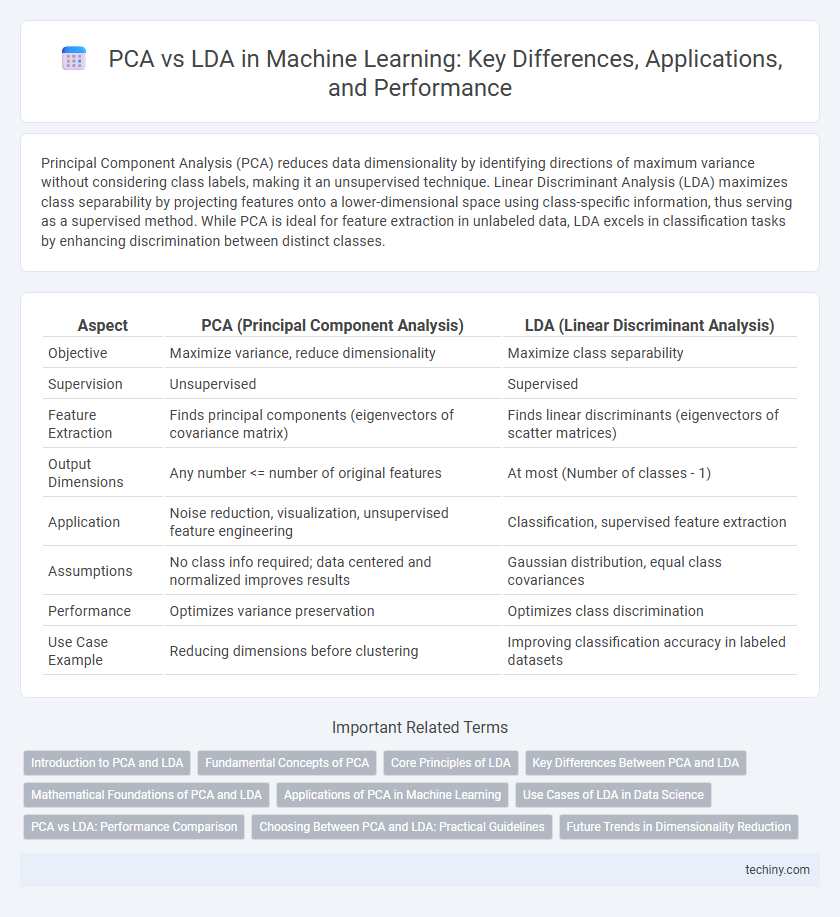

| Aspect | PCA (Principal Component Analysis) | LDA (Linear Discriminant Analysis) |
|---|---|---|
| Objective | Maximize retained variance while reducing dimensionality | Maximize class separability |
| Supervision | Unsupervised | Supervised |
| Feature Extraction | Finds principal components (eigenvectors of the covariance matrix) | Finds linear discriminants (eigenvectors derived from the between-class and within-class scatter matrices) |
| Output Dimensions | Any number up to the number of original features (and no more than the number of samples) | At most min(number of classes - 1, number of original features) |
| Application | Noise reduction, visualization, unsupervised feature engineering | Classification, supervised feature extraction |
| Assumptions | No class labels required; data must be centered, and scaling features usually improves results | Gaussian class distributions with equal class covariances |
| Performance | Optimizes variance preservation | Optimizes class discrimination |
| Use Case Example | Reducing dimensions before clustering | Improving classification accuracy in labeled datasets |

Introduction to PCA and LDA

Principal Component Analysis (PCA) is an unsupervised dimensionality reduction technique that transforms data into a set of orthogonal components, maximizing variance across features without considering class labels. Linear Discriminant Analysis (LDA) is a supervised method that seeks a feature subspace maximizing class separability by optimizing the ratio of between-class variance to within-class variance. PCA captures data variance efficiently, while LDA emphasizes discriminative information for classification tasks.

Fundamental Concepts of PCA

Principal Component Analysis (PCA) is a dimensionality reduction technique that transforms data into a new coordinate system by identifying the directions, called principal components, along which the variance of the data is maximized. PCA computes the eigenvectors and eigenvalues of the covariance matrix of the centered data: each eigenvector defines a principal component, and its eigenvalue gives the variance captured along that direction. Keeping only the components with the largest eigenvalues retains most of the variance while discarding the least informative directions. This unsupervised method emphasizes variance preservation without considering class labels, distinguishing it from supervised techniques like Linear Discriminant Analysis (LDA).
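The eigendecomposition described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a production implementation: the function name `pca_eig` and the synthetic data are assumptions made for the example.

```python
import numpy as np

def pca_eig(X, n_components):
    """Project X (n_samples, n_features) onto its top principal components."""
    # Center the data so the covariance matrix describes spread around the mean
    X_centered = X - X.mean(axis=0)
    cov = np.cov(X_centered, rowvar=False)
    # eigh is meant for symmetric matrices; it returns eigenvalues in ascending order
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order]
    explained_ratio = eigvals[order] / eigvals.sum()
    return X_centered @ components, components, explained_ratio

# Synthetic data (assumed for illustration): most variance lies along the first axis
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3)) * np.array([5.0, 1.0, 0.2])

Z, W, ratio = pca_eig(X, n_components=2)
print(Z.shape)   # projected data has 2 columns
print(ratio)     # share of total variance captured by each kept component
```

The columns of `W` are orthonormal, so the projection is a rotation followed by dropping the low-variance axes.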

Core Principles of LDA

Linear Discriminant Analysis (LDA) focuses on maximizing the ratio of between-class variance to within-class variance in feature space, enhancing class separability. It assumes normally distributed classes with identical covariance matrices, optimizing a linear combination of features for better classification. Unlike PCA, which reduces dimensionality by capturing overall variance, LDA is supervised, using class labels to improve discrimination.
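As a rough sketch of how the between-class to within-class ratio turns into a projection, the following NumPy code builds the two scatter matrices and takes the leading eigenvectors. The function name `lda_fit`, the use of a pseudo-inverse, and the synthetic three-class data are assumptions for illustration only.

```python
import numpy as np

def lda_fit(X, y, n_components):
    """Return discriminant directions and their eigenvalues for labeled data."""
    classes = np.unique(y)
    n_features = X.shape[1]
    overall_mean = X.mean(axis=0)
    S_W = np.zeros((n_features, n_features))  # within-class scatter
    S_B = np.zeros((n_features, n_features))  # between-class scatter
    for c in classes:
        X_c = X[y == c]
        mean_c = X_c.mean(axis=0)
        centered = X_c - mean_c
        S_W += centered.T @ centered
        diff = (mean_c - overall_mean).reshape(-1, 1)
        S_B += X_c.shape[0] * (diff @ diff.T)
    # Eigenvectors of pinv(S_W) @ S_B maximize between-class over within-class scatter;
    # the pseudo-inverse is a simple guard in case S_W is singular
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(S_W) @ S_B)
    eigvals, eigvecs = eigvals.real, eigvecs.real
    order = np.argsort(eigvals)[::-1][:n_components]
    return eigvecs[:, order], eigvals[order]

# Synthetic data (assumed): three Gaussian classes in four dimensions
rng = np.random.default_rng(0)
means = np.array([[0, 0, 0, 0], [4, 0, 0, 0], [0, 4, 0, 0]], dtype=float)
X = np.vstack([rng.normal(loc=m, scale=1.0, size=(50, 4)) for m in means])
y = np.repeat([0, 1, 2], 50)

W, eigvals = lda_fit(X, y, n_components=2)
Z = X @ W  # data projected onto the two discriminant directions
```

With three classes, only two eigenvalues are meaningfully greater than zero, which is why LDA yields at most (number of classes - 1) useful dimensions.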

Key Differences Between PCA and LDA

PCA (Principal Component Analysis) is an unsupervised dimensionality reduction technique that maximizes variance by projecting data onto principal components, while LDA (Linear Discriminant Analysis) is supervised and focuses on maximizing class separability by finding linear discriminants. PCA does not consider class labels and is primarily used for feature extraction and data compression, whereas LDA incorporates class information to improve classification performance. The core difference lies in PCA's variance-based projection versus LDA's discriminant-based projection, making PCA suitable for general data exploration and LDA optimal for classification tasks.

Mathematical Foundations of PCA and LDA

PCA (Principal Component Analysis) leverages eigenvalue decomposition of the covariance matrix to identify directions of maximum variance in data, transforming high-dimensional datasets into lower-dimensional spaces while preserving maximum variance. LDA (Linear Discriminant Analysis) optimizes class separability by maximizing the ratio of between-class scatter to within-class scatter through solving a generalized eigenvalue problem, focusing on discriminative features for supervised classification tasks. Both techniques rely on linear algebra and matrix computations but differ in objective functions: PCA is unsupervised variance maximization, whereas LDA is supervised class discrimination.
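Written out, the two eigenvalue problems look as follows. The notation is an assumption introduced here for illustration: $\mathbf{x}_i$ are the $n$ samples with mean $\bar{\mathbf{x}}$, $\boldsymbol{\mu}_c$ and $N_c$ are the mean and size of class $c$ out of $C$ classes, and $S_B$, $S_W$ are the between-class and within-class scatter matrices.

```latex
% PCA: ordinary eigenvalue problem on the covariance matrix
\Sigma = \frac{1}{n-1} \sum_{i=1}^{n} (\mathbf{x}_i - \bar{\mathbf{x}})(\mathbf{x}_i - \bar{\mathbf{x}})^\top,
\qquad \Sigma\, \mathbf{v}_k = \lambda_k \mathbf{v}_k

% LDA: Fisher criterion and the resulting generalized eigenvalue problem
S_B = \sum_{c=1}^{C} N_c\, (\boldsymbol{\mu}_c - \bar{\mathbf{x}})(\boldsymbol{\mu}_c - \bar{\mathbf{x}})^\top,
\qquad
S_W = \sum_{c=1}^{C} \sum_{\mathbf{x}_i \in c} (\mathbf{x}_i - \boldsymbol{\mu}_c)(\mathbf{x}_i - \boldsymbol{\mu}_c)^\top

J(\mathbf{w}) = \frac{\mathbf{w}^\top S_B\, \mathbf{w}}{\mathbf{w}^\top S_W\, \mathbf{w}},
\qquad S_B\, \mathbf{w} = \lambda\, S_W\, \mathbf{w}
```

In PCA the eigenvalue $\lambda_k$ is the variance along $\mathbf{v}_k$; in LDA the eigenvalue $\lambda$ equals the value of $J(\mathbf{w})$ attained by the corresponding direction.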

Applications of PCA in Machine Learning

PCA (Principal Component Analysis) is widely used in machine learning for dimensionality reduction, enabling efficient data visualization and noise reduction in high-dimensional datasets. It can improve model performance by producing uncorrelated features, which is useful for methods sensitive to multicollinearity, such as linear regression; decorrelated inputs can also ease optimization for neural networks. PCA also facilitates feature extraction in areas like image recognition, bioinformatics, and text processing, where it reduces computational cost while preserving most of the variance.
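The decorrelation property mentioned above is easy to check numerically. The sketch below uses synthetic data (an assumption for illustration) with two strongly correlated features and compares the correlation matrix before and after projecting onto the principal components.

```python
import numpy as np

rng = np.random.default_rng(1)
# Two strongly correlated synthetic features plus one independent feature
a = rng.normal(size=500)
X = np.column_stack([
    a,
    0.9 * a + 0.1 * rng.normal(size=500),
    rng.normal(size=500),
])

X_centered = X - X.mean(axis=0)
eigvals, eigvecs = np.linalg.eigh(np.cov(X_centered, rowvar=False))
# Reverse the column order so components are sorted by decreasing variance
scores = X_centered @ eigvecs[:, ::-1]

corr_before = np.corrcoef(X, rowvar=False)
corr_after = np.corrcoef(scores, rowvar=False)
print(np.round(corr_before, 2))  # large off-diagonal entry between features 0 and 1
print(np.round(corr_after, 2))   # off-diagonal entries are numerically zero
```

The projected features are uncorrelated by construction, since the covariance matrix of the scores is diagonal in the eigenvector basis.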

Use Cases of LDA in Data Science

Linear Discriminant Analysis (LDA) excels in supervised classification tasks, particularly in scenarios requiring dimensionality reduction while preserving class separability. It is widely used in applications such as facial recognition, medical diagnosis, and customer segmentation where labeled data guides the identification of discriminative features. Unlike Principal Component Analysis (PCA), which focuses on maximizing variance without considering class labels, LDA optimizes class separability, making it ideal for classification problems in data science.

PCA vs LDA: Performance Comparison

PCA (Principal Component Analysis) excels in unsupervised dimensionality reduction by capturing maximum variance, whereas LDA (Linear Discriminant Analysis) optimizes class separability for supervised classification tasks. PCA is the appropriate choice when the goal is compression without label information, while LDA usually yields better accuracy when labels are available and the classes are roughly linearly separable. Because LDA maximizes between-class variance relative to within-class variance, it often outperforms PCA as a preprocessing step for classification on labeled data. The main exception is when there are few samples per class relative to the number of features: the within-class scatter matrix is then poorly estimated or singular, and PCA (or PCA followed by LDA) can be the more robust option.
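One way to check this on a concrete dataset is to put each reducer in front of the same classifier and compare cross-validated accuracy. The sketch below assumes scikit-learn is installed; the choice of the bundled wine dataset, two components, logistic regression, and the helper name `mean_cv_accuracy` are all illustrative assumptions, and results will differ on other data.

```python
from sklearn.datasets import load_wine
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)  # 13 features, 3 classes

def mean_cv_accuracy(reducer):
    """Mean 5-fold accuracy of scaler -> reducer -> logistic regression."""
    model = make_pipeline(StandardScaler(), reducer, LogisticRegression(max_iter=1000))
    return cross_val_score(model, X, y, cv=5).mean()

pca_acc = mean_cv_accuracy(PCA(n_components=2))
# With 3 classes, LDA can produce at most 2 discriminant components
lda_acc = mean_cv_accuracy(LinearDiscriminantAnalysis(n_components=2))

print(f"PCA(2) + logistic regression: {pca_acc:.3f}")
print(f"LDA(2) + logistic regression: {lda_acc:.3f}")
```

Fitting the reducer inside the pipeline means it is refit on each training fold, so the labels used by LDA never leak from the held-out fold.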

Choosing Between PCA and LDA: Practical Guidelines

Choosing between Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) depends on the objective: PCA is ideal for unsupervised dimensionality reduction by capturing maximum variance, while LDA excels in supervised classification tasks by maximizing class separability. PCA is preferred when the goal is to reduce features without labeled data, whereas LDA requires labeled datasets to optimize feature extraction for predictive performance. Practical guidelines recommend using LDA for applications involving distinct class prediction and PCA for exploratory data analysis or noise reduction.
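Once a method is chosen, the next practical question is how many components to keep. A common heuristic for PCA is a cumulative explained-variance threshold, while LDA has a hard cap set by the number of classes. The helper names and the 0.95 default below are assumptions for illustration, not a fixed rule.

```python
import numpy as np

def pca_components_for_variance(X, threshold=0.95):
    """Smallest number of principal components whose cumulative
    explained-variance ratio reaches `threshold`."""
    X_centered = X - X.mean(axis=0)
    # Squared singular values are proportional to the variance of each component
    singular_values = np.linalg.svd(X_centered, compute_uv=False)
    cumulative = np.cumsum(singular_values**2) / np.sum(singular_values**2)
    k = int(np.searchsorted(cumulative, threshold)) + 1
    return min(k, len(singular_values))

def lda_max_components(n_classes, n_features):
    """Upper bound on the number of LDA discriminant components."""
    return min(n_classes - 1, n_features)

# Synthetic data (assumed): one dominant direction of variance
rng = np.random.default_rng(2)
X = rng.normal(size=(300, 3)) * np.array([10.0, 1.0, 0.2])

k = pca_components_for_variance(X, threshold=0.95)
print(k)                          # components needed for 95% of the variance
print(lda_max_components(3, 13))  # cap for a 3-class, 13-feature problem
```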

Future Trends in Dimensionality Reduction

Emerging trends in dimensionality reduction emphasize integrating Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) with deep learning frameworks to improve feature extraction efficiency and interpretability. Hybrid models combine PCA's unsupervised variance preservation with LDA's supervised class separability to address high-dimensional data challenges in complex domains like genomics and image recognition. Autoencoder-based techniques extend dimensionality reduction to nonlinear structure that PCA and LDA cannot capture, and quantum computing approaches are being explored as a possible way to accelerate the underlying linear algebra.

PCA vs LDA Infographic

techiny.com
