Pruning reduces model complexity by removing less important weights or neurons from neural networks (or branches from decision trees), improving inference speed and sometimes interpretability; unlike regularization, it typically leaves the loss function unchanged. Regularization adds penalty terms to the loss function, such as L1 or L2 norms, to prevent overfitting by constraining model parameters. Both techniques can improve generalization, but they act at different points: pruning shrinks a model's structure, while regularization shapes the parameters as they are learned.

Table of Comparison

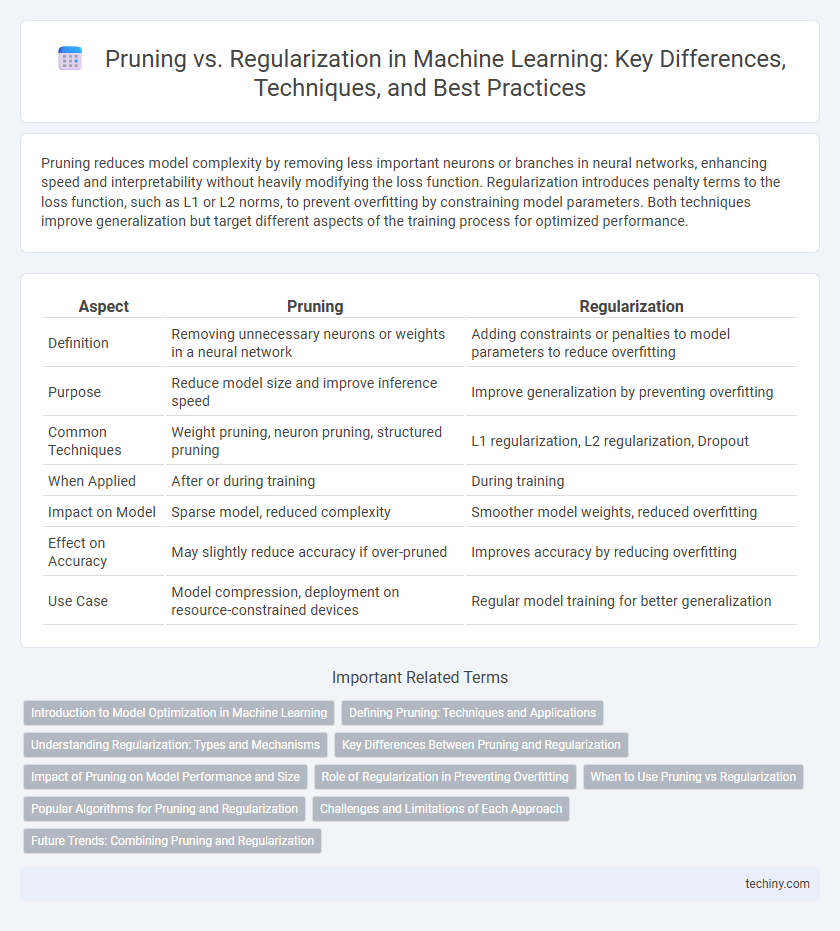

| Aspect | Pruning | Regularization |
|---|---|---|
| Definition | Removing unnecessary weights or neurons from a neural network (or branches from a decision tree) | Adding constraints or penalties to model parameters to reduce overfitting |
| Purpose | Reduce model size and improve inference speed | Improve generalization by preventing overfitting |
| Common Techniques | Weight pruning, neuron pruning, structured pruning | L1 regularization, L2 regularization, dropout |
| When Applied | After or during training | During training |
| Impact on Model | Sparse model, reduced complexity | Smaller weights (L2) or sparse weights (L1), reduced overfitting |
| Effect on Accuracy | May reduce accuracy, especially if over-pruned | Usually improves test accuracy by reducing overfitting; too strong a penalty can cause underfitting |
| Use Case | Model compression, deployment on resource-constrained devices | Routine model training for better generalization |

Introduction to Model Optimization in Machine Learning

Pruning and regularization are widely used techniques for optimizing machine learning models and preventing overfitting. Pruning reduces model complexity by removing unnecessary parameters or neurons, usually after training (or iteratively during it), which improves computational efficiency and can improve generalization. Regularization adds penalty terms such as L1 or L2 to the loss function, constraining model weights during training to improve robustness and reduce overfitting.

Defining Pruning: Techniques and Applications

Pruning in machine learning involves selectively removing parameters or nodes from a model to reduce complexity and prevent overfitting while maintaining performance. Techniques such as weight pruning, neuron pruning, and structured pruning target less important weights, neurons, or filters based on criteria like magnitude, sensitivity, or contribution. Pruning is widely applied to deep neural networks and decision trees to improve efficiency, speed up inference, and reduce memory footprint, often without significant accuracy loss.
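
The magnitude criterion mentioned above can be sketched in a few lines. This is a minimal illustration, assuming the weights are a flat Python list and that a fixed fraction (`sparsity`) is removed in a single shot; `magnitude_prune` is a hypothetical helper, not a library API. Real frameworks apply a mask per tensor and usually fine-tune the model afterwards.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest absolute values.

    Hypothetical helper: returns the pruned weights and a 0/1 keep-mask.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = round(sparsity * len(weights))
    # Indices ordered by ascending magnitude; the first n_prune are removed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    removed = set(order[:n_prune])
    mask = [0 if i in removed else 1 for i in range(len(weights))]
    pruned = [w if m else 0.0 for w, m in zip(weights, mask)]
    return pruned, mask


weights = [0.8, -0.05, 0.3, -0.9, 0.01, 0.4]
pruned, mask = magnitude_prune(weights, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.0, -0.9, 0.0, 0.4]
print(mask)    # [1, 0, 0, 1, 0, 1]
```

Keeping the mask separate from the values mirrors how pruned weights are usually held at zero during any later fine-tuning.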

Understanding Regularization: Types and Mechanisms

Regularization techniques in machine learning, such as L1 (Lasso) and L2 (Ridge) regularization, prevent overfitting by adding penalty terms to the loss function, which constrain model complexity and encourage sparsity or small weights. Dropout is another regularization method that randomly deactivates neurons during training to improve generalization and reduce co-adaptation. These mechanisms balance bias and variance, enhancing model robustness and predictive accuracy on unseen data.
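
The difference between the two penalties is easiest to see in their gradients. A minimal sketch, assuming the weights are a flat list and using a placeholder data-loss value; `penalty` and `penalty_grad` are hypothetical names. The L1 (sub)gradient has constant magnitude λ, so it keeps pushing small weights toward exactly zero, while the L2 gradient 2λw weakens as the weight shrinks.

```python
def penalty(weights, lam, kind):
    """Regularization term added to the data loss (hypothetical helper)."""
    if kind == "l1":  # Lasso: lam * sum |w|, tends to drive weights to exactly 0
        return lam * sum(abs(w) for w in weights)
    if kind == "l2":  # Ridge: lam * sum w^2, shrinks weights toward 0
        return lam * sum(w * w for w in weights)
    raise ValueError(f"unknown penalty {kind!r}")


def penalty_grad(w, lam, kind):
    """Gradient of the penalty w.r.t. one weight (L1 uses the subgradient 0 at w == 0)."""
    if kind == "l1":
        return lam * ((w > 0) - (w < 0))  # lam * sign(w)
    if kind == "l2":
        return 2 * lam * w
    raise ValueError(f"unknown penalty {kind!r}")


weights = [0.5, -2.0, 0.0]
data_loss = 1.2  # placeholder for a hypothetical model's unregularized loss
for kind in ("l1", "l2"):
    total = data_loss + penalty(weights, 0.1, kind)
    print(kind, round(total, 4), [penalty_grad(w, 0.1, kind) for w in weights])
```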

Key Differences Between Pruning and Regularization

Pruning eliminates unnecessary neurons or connections within a neural network to reduce model complexity and improve inference efficiency. Regularization, such as L1 or L2, adds a penalty term to the loss function to prevent overfitting by encouraging simpler models through weight constraints. Pruning acts on the model's structure, usually after training or between rounds of fine-tuning (removed weights are often masked to zero rather than deleted), whereas regularization changes the training objective itself so that the learned model generalizes better.

Impact of Pruning on Model Performance and Size

Pruning can substantially reduce model size by eliminating redundant or less important neurons and connections, making models better suited to resource-constrained devices. Structured pruning (removing whole neurons or filters) shrinks the dense weight matrices directly, whereas unstructured pruning saves memory and time only when the resulting sparse weights are stored and executed in a sparse format. Pruning can also improve generalization by reducing overfitting, especially in deep neural networks. However, aggressive pruning may degrade accuracy if critical parameters are removed, so the amount of pruning must be tuned carefully to balance compression against performance.
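
To see why structured pruning shrinks a model directly, the sketch below removes whole hidden neurons from a tiny two-layer network and counts the stored parameters before and after. It assumes weights are plain nested lists and ranks neurons by the L2 norm of their incoming weights, which is one common heuristic among several; `prune_neurons` and `n_params` are hypothetical helpers, and the weight values are made up.

```python
import math


def prune_neurons(w_in, b_in, w_out, keep):
    """Structured pruning of one hidden layer (hypothetical helper).

    w_in:  one row of incoming weights per hidden neuron
    b_in:  one bias per hidden neuron
    w_out: one row per output unit; column j belongs to hidden neuron j
    Keeps the `keep` neurons whose incoming weight rows have the largest L2 norm.
    """
    norms = [math.sqrt(sum(w * w for w in row)) for row in w_in]
    ranked = sorted(range(len(w_in)), key=lambda j: norms[j], reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original neuron order
    new_w_in = [w_in[j] for j in kept]
    new_b_in = [b_in[j] for j in kept]
    new_w_out = [[row[j] for j in kept] for row in w_out]
    return new_w_in, new_b_in, new_w_out


def n_params(w_in, b_in, w_out):
    """Count the weights and biases the layer pair actually stores."""
    return sum(len(r) for r in w_in) + len(b_in) + sum(len(r) for r in w_out)


w_in = [[0.9, -0.8], [0.01, 0.02], [0.5, 0.4], [-0.03, 0.0]]  # 4 hidden x 2 inputs
b_in = [0.1, 0.0, -0.2, 0.05]
w_out = [[1.0, 0.3, -0.7, 0.2]]                              # 1 output x 4 hidden
small = prune_neurons(w_in, b_in, w_out, keep=2)
print(n_params(w_in, b_in, w_out), "->", n_params(*small))  # 16 -> 8
```

Because the removed neurons disappear from both matrices, the smaller network runs on ordinary dense kernels with no sparse-format support.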

Role of Regularization in Preventing Overfitting

Regularization techniques such as L1 and L2 penalize model complexity by adding a penalty on the magnitude of the coefficients, which reduces overfitting. By limiting the effective capacity of the model, regularization improves generalization to unseen data and keeps the model from fitting noise as if it were a pattern. Unlike pruning, which simplifies the model's structure (typically after training), regularization builds complexity control into the optimization itself.
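
The shrinkage effect can be made concrete in the simplest possible case: one weight, no intercept, squared-error loss plus an L2 penalty λw². Setting the derivative to zero gives the closed form w = Σxy / (Σx² + λ), so a larger λ pulls w toward zero. The data below are made-up toy values and `ridge_1d` is a hypothetical helper.

```python
def ridge_1d(xs, ys, lam):
    """Minimize sum((y - w*x)^2) + lam * w^2 over a single weight w (no intercept).

    Derivative: -2*sum(x*(y - w*x)) + 2*lam*w = 0  =>  w = sum(x*y) / (sum(x^2) + lam)
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]  # toy data, roughly y = 2x
for lam in (0.0, 10.0, 100.0):
    print(lam, round(ridge_1d(xs, ys, lam), 4))
```

With λ = 0 this is ordinary least squares (w ≈ 1.99); as λ grows, the coefficient shrinks steadily toward zero.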

When to Use Pruning vs Regularization

Pruning is most effective for decision trees and neural networks when the goal is to reduce model complexity, preventing overfitting while preserving interpretability and performance. Regularization techniques such as L1 and L2 suit both linear models and deep neural networks: they constrain model parameters, improve generalization, and help with high-dimensional data. Use pruning when a simpler, smaller, or faster model is the priority, and apply regularization when controlling coefficient magnitudes and model stability matters most; L1 regularization additionally performs feature selection by driving some coefficients to exactly zero.
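
For decision trees, one standard pruning procedure is CART's minimal cost-complexity ("weakest-link") pruning: for each internal node t, compute g(t) = (R(t) - R(T_t)) / (|leaves(T_t)| - 1), where R(t) is the error if the subtree is collapsed into a leaf and R(T_t) is the summed error of its leaves, then collapse the node with the smallest g(t). The sketch below assumes a hypothetical dict-based tree with made-up error rates; libraries such as scikit-learn expose the same idea through the `ccp_alpha` parameter of their tree estimators.

```python
def leaves(node):
    """All leaves under `node` (a node without children is itself a leaf)."""
    if not node.get("children"):
        return [node]
    return [leaf for child in node["children"] for leaf in leaves(child)]


def internal_nodes(node):
    """Yield every node that still has children."""
    if node.get("children"):
        yield node
        for child in node["children"]:
            yield from internal_nodes(child)


def effective_alpha(node):
    """g(t) = (R(t) - R(T_t)) / (|leaves(T_t)| - 1)."""
    sub_leaves = leaves(node)
    subtree_error = sum(leaf["error"] for leaf in sub_leaves)
    return (node["error"] - subtree_error) / (len(sub_leaves) - 1)


def prune_weakest_link(root):
    """Collapse the internal node with the smallest effective alpha into a leaf."""
    weakest = min(internal_nodes(root), key=effective_alpha)
    alpha = effective_alpha(weakest)
    weakest["children"] = []
    return weakest["name"], alpha


# Toy tree: "error" = fraction of training samples misclassified (illustrative numbers).
tree = {"name": "root", "error": 0.40, "children": [
    {"name": "A", "error": 0.10, "children": [
        {"name": "A1", "error": 0.04}, {"name": "A2", "error": 0.05}]},
    {"name": "B", "error": 0.20, "children": [
        {"name": "B1", "error": 0.02}, {"name": "B2", "error": 0.03}]},
]}
name, alpha = prune_weakest_link(tree)
print(name, round(alpha, 4))  # A 0.01
```

Subtree A buys only a 0.01 error reduction for its extra leaf, so it is the first to go; repeating the step yields the nested sequence of pruned trees from which one is chosen by validation.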

Popular Algorithms for Pruning and Regularization

Popular pruning algorithms include magnitude-based pruning, which removes weights with the smallest absolute values, and structured pruning, which eliminates entire neurons or filters for model compression. Common regularization methods are L1 and L2 regularization, which add penalties to the loss function to constrain model complexity, and dropout, which randomly deactivates neurons during training to prevent overfitting. All of these improve generalization by reducing over-parameterization, and pruning additionally improves computational efficiency in deep learning frameworks.
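
Dropout is simple enough to sketch directly. The version below is "inverted" dropout: during training each activation is zeroed with probability p and the survivors are scaled by 1/(1 - p), so the expected activation is unchanged and no rescaling is needed at inference. It assumes activations are a flat list; `dropout` here is a hypothetical helper, not a framework layer.

```python
import random


def dropout(activations, p, training=True, rng=random):
    """Inverted dropout (hypothetical helper).

    Training: zero each activation with probability p, scale survivors by 1/(1-p).
    Inference: return the activations unchanged.
    """
    if not training or p == 0.0:
        return list(activations)
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # seeded only to make the example reproducible
out = dropout([1.0] * 8, p=0.5, rng=rng)
print(out)  # each entry is either 0.0 or 2.0
```

Scaling at training time (rather than at inference) is the usual design choice because it leaves the inference path untouched.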

Challenges and Limitations of Each Approach

Pruning techniques face challenges such as the risk of over-pruning, which can cause underfitting and loss of accuracy when essential neurons or connections are removed. Regularization methods like L1 and L2 require choosing the penalty strength λ: too small a value leaves complexity insufficiently reduced, while too large a value over-penalizes the weights and limits the model's expressiveness. Neither approach transfers automatically across datasets, since pruning ratios and penalty strengths tuned for one task may not suit another, so both require careful tuning and validation.
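
The usual remedy for the hyperparameter problem is to fit on a training split for each candidate λ and keep the one with the lowest validation error. A minimal sketch, reusing the one-weight ridge model and assuming made-up toy splits; `select_lambda` and the grid values are hypothetical choices.

```python
def ridge_1d(xs, ys, lam):
    """Closed-form minimizer of sum((y - w*x)^2) + lam * w^2 (single weight, no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def mse(w, xs, ys):
    """Mean squared error of the model y ~ w * x."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


def select_lambda(train, val, grid):
    """Fit on `train` for each candidate lam and keep the lowest validation MSE."""
    scores = {lam: mse(ridge_1d(*train, lam), *val) for lam in grid}
    best = min(scores, key=scores.get)
    return best, scores


train = ([1.0, 2.0, 3.0, 4.0], [2.1, 3.9, 6.2, 7.8])  # toy data
val = ([5.0, 6.0], [9.6, 11.5])
best, scores = select_lambda(train, val, grid=[0.0, 0.1, 1.0, 10.0, 100.0])
print(best)  # 1.0
```

Here the unregularized fit (λ = 0) overshoots the validation points and λ = 100 shrinks too far; the same search applies to pruning ratios.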

Future Trends: Combining Pruning and Regularization

Future trends in machine learning emphasize the integration of pruning and regularization techniques to enhance model efficiency and generalization. Combining pruning's ability to reduce model complexity by removing redundant parameters with regularization's capacity to prevent overfitting leads to more robust and scalable models. Advances in automated machine learning (AutoML) systems are expected to optimize this hybrid approach, tailoring pruning-regularization strategies dynamically during training.
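
One simple, long-standing way to combine the two is to train with an L1 penalty, which pushes unimportant weights toward zero, and then magnitude-prune whatever remains near zero. The sketch below does this for a tiny linear regression using full-batch subgradient descent; it is an illustration of the hybrid idea, not of the AutoML methods mentioned above. The data, `train_l1_linear`, `magnitude_prune`, and the values of `lam`, `lr`, and `threshold` are all hypothetical choices.

```python
def train_l1_linear(X, y, lam, lr=0.1, steps=5000):
    """Full-batch subgradient descent on (1/2n)*||y - Xw||^2 + lam*||w||_1."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(steps):
        # Residuals for the current weights (computed once per step).
        residual = [yi - sum(wj * xj for wj, xj in zip(w, xi)) for xi, yi in zip(X, y)]
        for j in range(d):
            grad = -sum(r * xi[j] for r, xi in zip(residual, X)) / n
            grad += lam * ((w[j] > 0) - (w[j] < 0))  # L1 subgradient, 0 at w == 0
            w[j] -= lr * grad
    return w


def magnitude_prune(w, threshold):
    """Zero every weight whose magnitude is below `threshold`."""
    return [wj if abs(wj) >= threshold else 0.0 for wj in w]


X = [[1, 0, 0], [0, 1, 0], [1, 1, 1], [2, 1, 0], [1, 2, 1], [0, 1, 1]]
y = [3 * a - 2 * b for a, b, _ in X]  # third feature is irrelevant by construction
w = train_l1_linear(X, y, lam=0.01)
pruned = magnitude_prune(w, threshold=0.05)
print([round(v, 3) for v in pruned])
```

With plain subgradient steps the irrelevant weight only oscillates near zero instead of landing exactly on it, which is why the final magnitude-pruning pass is still needed.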

Pruning vs Regularization Infographic

techiny.com