K-nearest neighbors (KNN) is a supervised learning algorithm used for classification and regression by identifying the closest training examples in the feature space. In contrast, k-means is an unsupervised clustering algorithm that partitions data into k clusters based on feature similarity and minimizes variance within each cluster. Both methods rely on distance metrics, but KNN predicts labels for new data points while k-means discovers inherent groupings in unlabeled datasets.

Table of Comparison

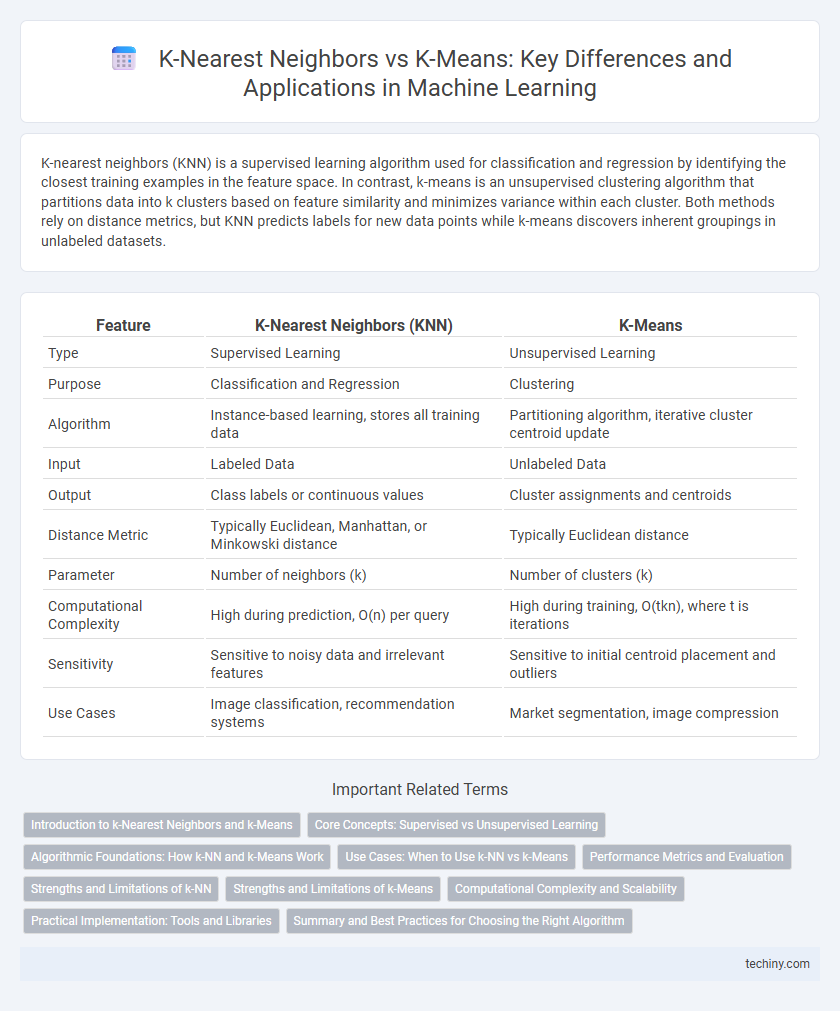

| Feature | K-Nearest Neighbors (KNN) | K-Means |
|---|---|---|
| Type | Supervised Learning | Unsupervised Learning |
| Purpose | Classification and Regression | Clustering |
| Algorithm | Instance-based learning, stores all training data | Partitioning algorithm, iterative cluster centroid update |
| Input | Labeled Data | Unlabeled Data |
| Output | Class labels or continuous values | Cluster assignments and centroids |
| Distance Metric | Typically Euclidean, Manhattan, or Minkowski distance | Typically Euclidean distance |
| Parameter | Number of neighbors (k) | Number of clusters (k) |
| Computational Complexity | Negligible training cost; high during prediction, O(n·d) per brute-force query (n samples, d features) | High during training, O(t·k·n·d), where t is the number of iterations |
| Sensitivity | Sensitive to noisy data, irrelevant features, and feature scaling | Sensitive to initial centroid placement, outliers, and feature scaling |
| Use Cases | Image classification, recommendation systems | Market segmentation, image compression |

Introduction to k-Nearest Neighbors and k-Means

K-Nearest Neighbors (KNN) is a supervised learning algorithm primarily used for classification tasks, relying on the proximity of data points to make predictions based on the majority class among the closest neighbors. K-Means is an unsupervised clustering algorithm designed to partition data into k distinct clusters by minimizing the variance within each cluster through iterative centroid updates. Both algorithms utilize distance metrics such as Euclidean distance but serve different purposes in machine learning workflows: KNN for labeled data classification and K-Means for identifying inherent groupings in unlabeled data.
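
The sketch below illustrates the majority-vote idea in plain Python. The helper names (`euclidean`, `manhattan`, `knn_predict`) and the six-point dataset are hypothetical. The neighbor search is brute force, not the indexed search a library would normally use.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    """Sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def knn_predict(train_X, train_y, query, k=3, distance=euclidean):
    """Predict the label of `query` by majority vote among its k nearest training points."""
    # Brute-force search: rank every training point by its distance to the query.
    ranked = sorted(zip(train_X, train_y), key=lambda pair: distance(pair[0], query))
    # Count labels among the k closest points. Counter.most_common keeps
    # first-encountered order on ties, so the label with the closer neighbor wins.
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical toy data: two well-separated groups labeled "a" and "b".
X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
y = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(X, y, (1.2, 1.5)))                          # a
print(knn_predict(X, y, (8.2, 8.4), k=3, distance=manhattan))  # b
```

Passing `distance=manhattan` swaps the metric without touching the voting logic. This is why the metric is usually exposed as a parameter.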

Core Concepts: Supervised vs Unsupervised Learning

K-nearest neighbors (KNN) is a supervised learning algorithm that classifies data points based on the majority label of their closest neighbors, relying on labeled training data to make predictions. K-means is an unsupervised learning technique that partitions data into k clusters by minimizing intra-cluster variance without requiring labeled inputs. Understanding the core distinction between KNN's reliance on explicit labels and K-means' focus on discovering inherent data structures is fundamental for selecting the appropriate algorithm for classification versus clustering tasks.

Algorithmic Foundations: How k-NN and k-Means Work

K-Nearest Neighbors (k-NN) operates as a supervised learning algorithm that classifies data points based on the majority label of their k closest neighbors, using distance metrics like Euclidean or Manhattan distance. K-Means, an unsupervised clustering algorithm, partitions data into k clusters by alternating two steps until the centroids stop moving: each point is assigned to its nearest centroid, and each centroid is then recomputed as the mean of the points assigned to it. Both algorithms rely heavily on distance measurements but serve fundamentally different purposes: k-NN for classification and regression, and k-Means for clustering and pattern detection.
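
The assignment and update steps of K-Means can be sketched as a minimal version of Lloyd's algorithm. The sketch makes these assumptions:

- initial centroids are drawn at random from the data;
- distances are squared Euclidean;
- the function names and toy data are hypothetical.

Production implementations add smarter seeding and multiple restarts.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm: alternate assignment and update steps until centroids stop moving."""
    rng = random.Random(seed)
    # Initialize with k distinct data points picked uniformly at random.
    centroids = rng.sample(points, k)
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        labels = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points,
        # keeping the previous centroid if a cluster ends up empty.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            new_centroids.append(mean(members) if members else centroids[j])
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return centroids, labels


data = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, labels = kmeans(data, k=2)
print(centroids, labels)  # the first three points share one label, the last three the other
```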

Use Cases: When to Use k-NN vs k-Means

k-nearest neighbors (k-NN) is ideal for classification and regression tasks where labeled data is available, such as image recognition or recommendation systems. k-means is best suited for clustering and unsupervised learning scenarios, like customer segmentation or pattern discovery in large datasets. Choose k-NN when prediction based on proximity to labeled examples is needed, and k-means when grouping data into distinct clusters without predefined labels is the goal.

Performance Metrics and Evaluation

K-nearest neighbors (KNN) classifiers are evaluated with accuracy, precision, recall, and F1-score, and KNN regressors with error metrics such as mean squared error, because predictions can be compared against known labels. K-means clustering is evaluated with internal metrics such as inertia (the within-cluster sum of squared distances), silhouette score, and the Davies-Bouldin index, which assess cluster cohesion and separation without labeled outputs. The appropriate evaluation metric therefore depends on whether the task is supervised prediction (KNN) or unsupervised clustering (K-means).
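
The sketch below computes two of the clustering metrics directly from their definitions:

- inertia;
- the mean silhouette coefficient s = (b − a) / max(a, b). Here a is a point's mean distance to its own cluster, and b is its smallest mean distance to another cluster.

The function names and data are illustrative assumptions. In practice scikit-learn's `silhouette_score` is the usual choice. The Davies-Bouldin index is omitted for brevity.

```python
import math


def inertia(points, labels, centroids):
    """Within-cluster sum of squared distances to the assigned centroid (lower is tighter)."""
    return sum(
        sum((x - c) ** 2 for x, c in zip(p, centroids[lab]))
        for p, lab in zip(points, labels)
    )


def silhouette_score(points, labels):
    """Mean silhouette coefficient with Euclidean distance; needs at least two clusters."""
    scores = []
    for i, (p, own) in enumerate(zip(points, labels)):
        # Accumulate distances from p to every other point, grouped by cluster label.
        totals, sizes = {}, {}
        for j, (q, lab) in enumerate(zip(points, labels)):
            if j != i:
                totals[lab] = totals.get(lab, 0.0) + math.dist(p, q)
                sizes[lab] = sizes.get(lab, 0) + 1
        if own not in sizes:
            scores.append(0.0)  # singleton cluster: silhouette defined as 0
            continue
        a = totals[own] / sizes[own]  # cohesion: mean intra-cluster distance
        b = min(totals[lab] / sizes[lab] for lab in totals if lab != own)  # separation
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


data = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
labels = [0, 0, 0, 1, 1, 1]
centroids = [(1.5, 4 / 3), (8.5, 25 / 3)]
print(inertia(data, labels, centroids))  # about 2.33
print(silhouette_score(data, labels))    # roughly 0.89: compact, well-separated clusters
```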

Strengths and Limitations of k-NN

K-nearest neighbors (k-NN) is simple and effective for classification tasks, especially on small to medium-sized datasets with well-separated classes. Its main strength is that it has no explicit training phase: the model simply stores the training data. New labeled examples can therefore be added without retraining, and the decision boundaries can take complex, nonlinear shapes. However, k-NN has a high computational cost at prediction time. It is also sensitive to irrelevant features and to differences in feature scale, and its performance degrades in high-dimensional spaces because of the curse of dimensionality.
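
The sensitivity to feature scale is easy to demonstrate: a feature with a much larger numeric range dominates the Euclidean distance. In this hypothetical sketch, min-max scaling changes which training point is nearest to the query. The age and income values are made up, and the helper names are illustrative.

```python
import math


def nearest_index(train_X, query):
    """Index of the training point closest to `query` under Euclidean distance."""
    return min(range(len(train_X)), key=lambda i: math.dist(train_X[i], query))


def min_max_scale(train_X, query):
    """Rescale each feature to [0, 1] using the training set's per-feature min and max."""
    columns = list(zip(*train_X))
    lows = [min(col) for col in columns]
    # A constant feature has zero range; use 1.0 to avoid dividing by zero.
    spans = [(max(col) - min(col)) or 1.0 for col in columns]

    def scale(row):
        return tuple((v - lo) / s for v, lo, s in zip(row, lows, spans))

    return [scale(row) for row in train_X], scale(query)


# Hypothetical records: (age in years, annual income in dollars).
train = [(25, 50_000), (60, 50_400)]
query = (27, 50_300)

print(nearest_index(train, query))  # 1: income differences dominate the raw distance
scaled_train, scaled_query = min_max_scale(train, query)
print(nearest_index(scaled_train, scaled_query))  # 0: after scaling, age counts too
```

The scaling statistics come from the training set only and are then applied to the query. This keeps information about new points from leaking into the model.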

Strengths and Limitations of k-Means

K-means works best on large datasets whose clusters are compact and roughly spherical, because its objective is to minimize intra-cluster variance. Each iteration is cheap, which makes it computationally efficient and scalable. Its limitations include sensitivity to the initial choice of centroids and difficulty with non-globular clusters. It also performs poorly with noisy data or outliers, which pull centroids away from the bulk of a cluster. Finally, K-means requires the number of clusters to be specified in advance, which can lead to suboptimal groupings when the true cluster count is unknown.
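
One common remedy for the initialization problem is k-means++ seeding (Arthur and Vassilvitskii). It picks each new initial centroid with probability proportional to its squared distance from the centroids already chosen. The sketch below is a minimal standard-library version with an assumed function name and toy data. Its output would seed a standard k-means loop.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_plus_plus_init(points, k, seed=0):
    """Choose k initial centroids using k-means++ seeding."""
    rng = random.Random(seed)
    centroids = [rng.choice(points)]  # first centroid: uniform at random
    while len(centroids) < k:
        # D(x)^2: squared distance from each point to its nearest chosen centroid.
        d2 = [min(squared_distance(p, c) for c in centroids) for p in points]
        if sum(d2) == 0:
            # Every point coincides with a chosen centroid; fall back to a uniform pick.
            centroids.append(rng.choice(points))
            continue
        # Sample the next centroid with probability proportional to D(x)^2,
        # so far-away points (likely in an uncovered cluster) are favored.
        centroids.append(rng.choices(points, weights=d2, k=1)[0])
    return centroids


data = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
print(kmeans_plus_plus_init(data, k=2))  # the second pick very likely lands in the other group
```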

Computational Complexity and Scalability

K-nearest neighbors (KNN) has a high prediction cost. A brute-force query computes distances to all n training samples with d features each, costing O(n·d) per query, which limits scalability on large datasets. K-means clustering with the standard (Lloyd's) algorithm costs O(t·k·n·d), where t is the number of iterations and k the number of clusters. Because this cost grows linearly with n, K-means scales to large datasets. It can be scaled further with mini-batch updates and parallelization. KNN, by contrast, relies on indexing structures such as KD-trees or ball trees, or on approximate nearest neighbor search, to reduce prediction latency.
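
Mini-batch k-means is one way to realize batch processing: each step assigns and updates centroids using only a small random sample. The sketch makes these assumptions:

- a per-centroid learning rate of 1/count, the update used in Sculley's mini-batch k-means;
- hypothetical names and parameters (`batch_size`, `n_steps`).

It omits convergence checks and the reassignment of empty clusters.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def minibatch_kmeans(points, k, batch_size=3, n_steps=50, seed=0):
    """Mini-batch k-means: update centroids from small random batches, not full passes."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    counts = [0] * k  # number of samples each centroid has absorbed so far
    for _ in range(n_steps):
        batch = rng.sample(points, min(batch_size, len(points)))
        # Cache nearest-centroid assignments for the whole batch first:
        # this costs O(b*k*d) per step instead of O(n*k*d) for a full pass.
        nearest = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            for p in batch
        ]
        for p, j in zip(batch, nearest):
            counts[j] += 1
            eta = 1.0 / counts[j]  # per-centroid learning rate shrinks over time
            # Nudge the centroid toward p; this keeps a running mean of its samples.
            centroids[j] = [(1 - eta) * c + eta * x for c, x in zip(centroids[j], p)]
    return [tuple(c) for c in centroids]


data = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
print(minibatch_kmeans(data, k=2, batch_size=3, n_steps=20))
```

With `k=1` and a batch equal to the whole dataset, the update reduces to a running mean, so the single centroid converges to the data mean. This makes a handy sanity check for the update rule.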

Practical Implementation: Tools and Libraries

K-nearest neighbors (KNN) is commonly implemented with scikit-learn in Python, which provides `KNeighborsClassifier` and `KNeighborsRegressor` for classification and regression tasks. For k-means clustering, popular tools include scikit-learn (`KMeans`, plus `MiniBatchKMeans` for large datasets) and Apache Spark's MLlib, which runs k-means in a distributed fashion on large datasets. Practitioners typically choose KNN for supervised learning problems requiring instance-based predictions, and k-means for clustering and segmentation tasks based on iterative centroid updates.

Summary and Best Practices for Choosing the Right Algorithm

K-nearest neighbors (KNN) is a supervised learning algorithm suited to classification and regression tasks with labeled data. K-means is an unsupervised clustering method for discovering patterns in unlabeled datasets. The right choice depends on whether labeled data is available, the specific problem requirements, and the computational budget. KNN works well on smaller datasets with well-defined classes, whereas k-means excels at partitioning large datasets into clusters for pattern recognition. Best practices include:

- evaluating the data structure;
- validating model assumptions;
- using cross-validation to choose the number of neighbors for KNN;
- using the elbow method (or silhouette scores) to choose the number of clusters for k-means.
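
Choosing the number of neighbors by cross-validation can be sketched with leave-one-out evaluation, a special case of cross-validation that suits tiny datasets. The helper names, candidate values, and data are hypothetical. On larger datasets, k-fold cross-validation is the usual, cheaper choice.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k):
    """Majority vote among the k training points nearest to `query` (Euclidean)."""
    neighbors = sorted(zip(train_X, train_y), key=lambda xy: math.dist(xy[0], query))[:k]
    return Counter(label for _, label in neighbors).most_common(1)[0][0]


def loocv_accuracy(X, y, k):
    """Leave-one-out accuracy: predict each point from all the others."""
    hits = 0
    for i in range(len(X)):
        train_X, train_y = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
        hits += knn_predict(train_X, train_y, X[i], k) == y[i]
    return hits / len(X)


def choose_k(X, y, candidates):
    """Candidate k with the best leave-one-out accuracy; ties go to the smaller k."""
    return max(candidates, key=lambda k: (loocv_accuracy(X, y, k), -k))


X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
y = ["a", "a", "a", "b", "b", "b"]
print(loocv_accuracy(X, y, 5))      # 0.0: with one point held out, the other class outvotes it
print(choose_k(X, y, [1, 3, 5]))    # 1
```

Breaking ties toward the smaller k is an arbitrary design choice here. For k-means, an elbow plot of inertia against the number of clusters plays the analogous role.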

k-nearest neighbors vs k-means Infographic

techiny.com