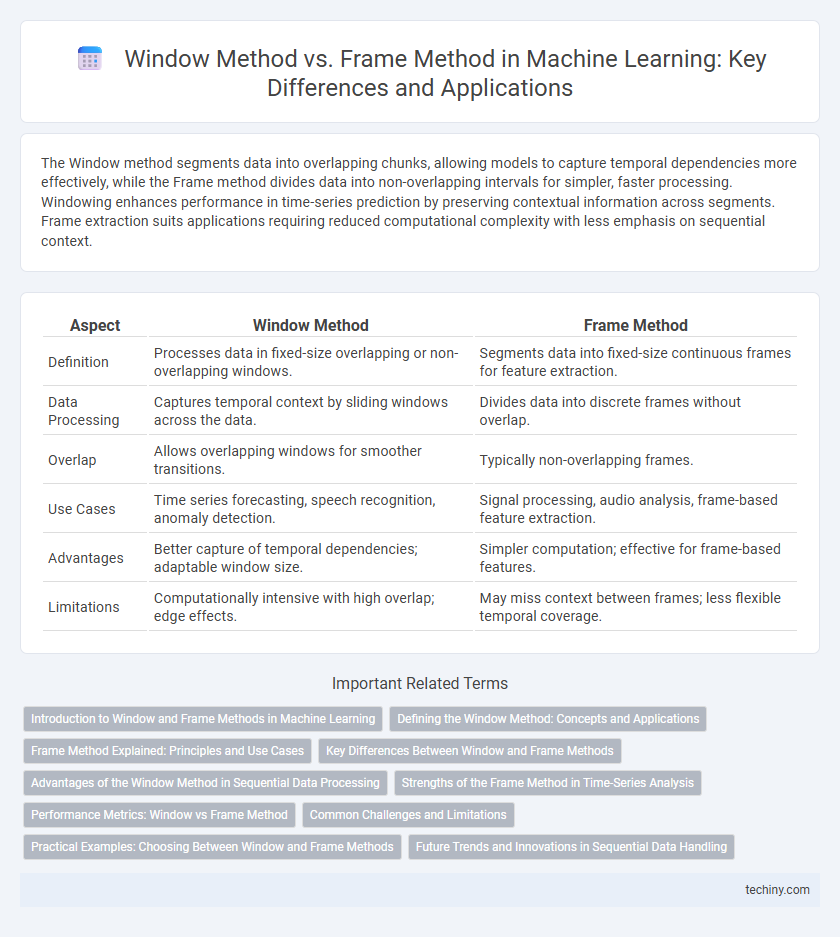

The Window method segments data into overlapping chunks, allowing models to capture temporal dependencies more effectively, while the Frame method divides data into non-overlapping intervals for simpler, faster processing. Windowing enhances performance in time-series prediction by preserving contextual information across segments. Frame extraction suits applications requiring reduced computational complexity with less emphasis on sequential context.

Table of Comparison

| Aspect | Window Method | Frame Method |
|---|---|---|
| Definition | Processes data in fixed-size overlapping or non-overlapping windows. | Segments data into fixed-size continuous frames for feature extraction. |
| Data Processing | Captures temporal context by sliding windows across the data. | Divides data into discrete frames without overlap. |
| Overlap | Allows overlapping windows for smoother transitions. | Typically non-overlapping frames. |
| Use Cases | Time series forecasting, speech recognition, anomaly detection. | Signal processing, audio analysis, frame-based feature extraction. |
| Advantages | Better capture of temporal dependencies; adaptable window size. | Simpler computation; effective for frame-based features. |
| Limitations | Computationally intensive with high overlap; edge effects. | May miss context between frames; less flexible temporal coverage. |

Introduction to Window and Frame Methods in Machine Learning

Window and frame methods in machine learning are techniques that segment sequential data into manageable subsets for analysis and model training. Window methods typically slide a fixed-size window across the data, usually with overlap, so that each segment carries context from its neighbors; this supports feature extraction in time-series prediction and natural language processing. Frame methods, by contrast, cut the data into discrete frames that are processed independently. Frames are usually fixed-size and non-overlapping, although variable-sized or event-driven frames that follow contextual shifts are also used in scenarios like speech recognition and video analysis.

Defining the Window Method: Concepts and Applications

The Window Method selects a fixed-size subset of consecutive data points and slides it across the sequence to analyze local temporal patterns or trends. Because each step touches only the samples inside the current window, the per-step cost is bounded, which makes the technique practical for streaming and real-time processing. It is widely applied in time series forecasting, anomaly detection, and signal processing, where capturing local dependencies and short-term fluctuations is critical for model performance. Window size and stride are the key parameters: the size sets how much context each segment sees, and the stride sets how far the window advances, so a stride smaller than the window size produces overlap. Together they control the balance between sensitivity to short-term changes and generalization.
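As a minimal sketch of how window size and stride interact, the following pure-Python helper extracts every full window from a sequence. The function name `sliding_windows` and its parameters are illustrative assumptions, not part of any particular library, and the choice to drop a trailing partial window is one convention among several.

```python
from typing import List, Sequence


def sliding_windows(data: Sequence[float], size: int, stride: int = 1) -> List[List[float]]:
    """Return every full window of `size` samples, advancing `stride` samples per step."""
    if size <= 0 or stride <= 0:
        raise ValueError("size and stride must be positive")
    # The last valid start index is len(data) - size; a shorter tail is dropped.
    return [list(data[start:start + size])
            for start in range(0, len(data) - size + 1, stride)]


signal = [1, 2, 3, 4, 5, 6, 7]

# stride < size: consecutive windows share samples (overlap).
overlapping = sliding_windows(signal, size=4, stride=1)

# stride == size: windows tile the data without overlap.
tiled = sliding_windows(signal, size=4, stride=4)
```

With a stride of 1 the seven samples yield four overlapping windows; with a stride equal to the window size only one full window fits and the last three samples are discarded.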

Frame Method Explained: Principles and Use Cases

The Frame Method segments data into discrete frames, each of which is treated as a self-contained unit for feature extraction. Frames are typically non-overlapping and of fixed length, though overlapping and variable-length variants exist when frame boundaries need to follow the temporal dynamics of the data. Unlike the Window Method, which advances a window in small steps, the Frame Method partitions the sequence so that each frame is summarized once. Its use cases include speech recognition, video processing, natural language processing for sentence framing, sensor data segmentation for anomaly detection, and bioinformatics sequence alignment, where contextual continuity within frames is critical.
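To make the frame idea concrete, here is a small sketch that cuts a signal into fixed-length, non-overlapping frames and summarizes each one. The helper names (`split_into_frames`, `frame_features`), the `keep_partial` flag, and the choice of mean and energy as features are all assumptions made for illustration.

```python
from typing import Dict, List, Sequence


def split_into_frames(data: Sequence[float], frame_size: int,
                      keep_partial: bool = False) -> List[List[float]]:
    """Partition `data` into consecutive frames of `frame_size` samples."""
    if frame_size <= 0:
        raise ValueError("frame_size must be positive")
    frames = [list(data[i:i + frame_size]) for i in range(0, len(data), frame_size)]
    # Optionally discard a shorter final frame so all frames have equal length.
    if frames and len(frames[-1]) < frame_size and not keep_partial:
        frames.pop()
    return frames


def frame_features(frame: Sequence[float]) -> Dict[str, float]:
    """Summarize one frame by its mean and its average energy (mean of squares)."""
    n = len(frame)
    return {
        "mean": sum(frame) / n,
        "energy": sum(x * x for x in frame) / n,
    }


signal = [0.0, 2.0, 4.0, 6.0, 1.0, 1.0, 1.0, 1.0, 9.0]
frames = split_into_frames(signal, frame_size=4)
features = [frame_features(f) for f in frames]
```

Each sample lands in at most one frame, so the feature list has one entry per frame and no sample is processed twice.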

Key Differences Between Window and Frame Methods

The key differences between the window method and the frame method lie in how they segment data and handle context. The window method slides a fixed-size window over sequential data, usually with overlap, so neighboring segments share samples and local temporal structure is analyzed at fine granularity. The frame method partitions data into frames, usually without overlap and sometimes with variable size, so each frame is an independent unit that summarizes a stretch of the signal. This distinction affects model behavior: windows favor finer temporal resolution at higher computational cost, while frames favor simpler, cheaper feature extraction at the risk of missing context that spans frame boundaries.

Advantages of the Window Method in Sequential Data Processing

The Window method captures local context by analyzing fixed-size segments that overlap, so patterns that would be split by a frame boundary are more likely to appear intact in at least one window. Overlap also smooths transitions between adjacent segments, which can make extracted features less sensitive to noise. Because all windows have the same shape, they can be batched and processed in parallel, although heavy overlap increases the total amount of computation. These properties help models represent short-range temporal dependencies in sequential machine learning tasks.
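One common way windows are used to model temporal dependencies is to turn a series into supervised (input, target) pairs for forecasting. The sketch below assumes a one-dimensional series and a hypothetical helper `make_forecast_pairs`; the `horizon` parameter is an illustrative name for how many steps ahead the target lies.

```python
from typing import List, Sequence, Tuple


def make_forecast_pairs(series: Sequence[float], window_size: int,
                        horizon: int = 1) -> List[Tuple[List[float], float]]:
    """Pair each window of past values with the value `horizon` steps after it."""
    if window_size <= 0 or horizon <= 0:
        raise ValueError("window_size and horizon must be positive")
    pairs = []
    # The target index must stay inside the series, which bounds the last start.
    last_start = len(series) - window_size - horizon
    for start in range(last_start + 1):
        window = list(series[start:start + window_size])
        target = series[start + window_size + horizon - 1]
        pairs.append((window, target))
    return pairs


series = [10, 11, 12, 13, 14, 15]
pairs = make_forecast_pairs(series, window_size=3, horizon=1)
```

Every pair shares two of its three inputs with the next pair, which is the overlap that lets a model see each value in several different contexts.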

Strengths of the Frame Method in Time-Series Analysis

The Frame method in time-series analysis keeps fixed-length segments that preserve sequential order and context within each frame. Its structured, one-pass partitioning makes feature extraction straightforward and repeatable, which helps when looking for patterns and trends in noisy data. Because non-overlapping frames share no samples, it is easier to keep training and test segments disjoint and so avoid the data leakage that overlapping windows can introduce. Short frames also let a non-stationary signal be treated as approximately stationary within each frame, a common assumption in real-world time-series and audio analysis.
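The data leakage concern can be illustrated with a chronological train/test split over segment indices. This is a sketch under stated assumptions: the helper `split_segments` is hypothetical, segments are described by `(start, end)` index pairs with an exclusive end, and any segment that straddles the split point is discarded rather than assigned to either side.

```python
from typing import List, Tuple

Segment = Tuple[int, int]  # (start, end) with end exclusive


def split_segments(n_samples: int, size: int, stride: int,
                   split_index: int) -> Tuple[List[Segment], List[Segment], List[Segment]]:
    """Assign segments to train or test; drop those that cross `split_index`."""
    train, test, dropped = [], [], []
    for start in range(0, n_samples - size + 1, stride):
        end = start + size
        if end <= split_index:
            train.append((start, end))      # entirely before the split
        elif start >= split_index:
            test.append((start, end))       # entirely after the split
        else:
            dropped.append((start, end))    # would leak samples across the split
    return train, test, dropped


# Non-overlapping frames aligned with the split: nothing straddles it.
frame_split = split_segments(n_samples=12, size=4, stride=4, split_index=8)

# Overlapping windows: one window covers samples on both sides and is dropped.
window_split = split_segments(n_samples=12, size=4, stride=2, split_index=8)
```

With frames aligned to the split point no segment has to be thrown away, whereas overlapping windows need an explicit rule like this one to keep the two sets disjoint.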

Performance Metrics: Window vs Frame Method

How data is segmented also determines how performance metrics are calculated. With the Window method, metrics are computed over fixed-size segments, which gives a consistent rolling view of model performance over time; when windows overlap, however, the same sample contributes to several windows, so per-window scores are correlated and their average need not equal the overall score. With the Frame method, non-overlapping frames count each sample once and aggregate cleanly, while overlapping or adaptive frames are more sensitive to temporal variations but complicate metric aggregation. Accuracy, precision, recall, and F1-score can therefore differ between the two methods on the same predictions, which affects how well reported results reflect a model's ability to generalize in time-series prediction.
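A small sketch shows how the same predictions can produce different aggregate scores depending on segmentation. The labels and predictions are made-up toy data, and `segment_accuracies` is a hypothetical helper that scores each full segment independently.

```python
from typing import List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of positions where prediction and label agree."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def segment_accuracies(y_true: Sequence[int], y_pred: Sequence[int],
                       size: int, stride: int) -> List[float]:
    """Accuracy of each full segment of `size` samples, stepping by `stride`."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return [accuracy(y_true[s:s + size], y_pred[s:s + size])
            for s in range(0, len(y_true) - size + 1, stride)]


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 0]  # toy predictions: positions 4 and 5 are wrong

overall = accuracy(y_true, y_pred)
per_frame = segment_accuracies(y_true, y_pred, size=4, stride=4)   # no overlap
per_window = segment_accuracies(y_true, y_pred, size=4, stride=2)  # 50% overlap

mean_frame = sum(per_frame) / len(per_frame)
mean_window = sum(per_window) / len(per_window)
```

Here the mean over non-overlapping frames matches the overall accuracy, while the mean over overlapping windows is lower because the two misclassified samples in the middle are counted in two windows each.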

Common Challenges and Limitations

Window and frame methods both face challenges with boundary handling and computational efficiency when processing sequential data. The window method depends on choosing a suitable window size: too small a window misses long-term dependencies, while too large a window blurs short-term changes, and heavy overlap leads to redundant computation. The frame method can lose information at frame boundaries, since a pattern split across two frames is fully visible in neither. Both techniques require careful tuning to balance the bias-variance trade-off and to handle noisy or incomplete data.
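The cost of redundant computation from overlap can be estimated with a short calculation. The symbols are assumptions introduced here: $N$ is the number of samples, $W$ the segment length, and $S$ the stride, with $1 \le S \le W \le N$ and partial segments at the end dropped.

$$
K = \left\lfloor \frac{N - W}{S} \right\rfloor + 1,
\qquad
\text{samples processed} = K \cdot W,
\qquad
\frac{K \cdot W}{N} \approx \frac{W}{S} \quad \text{for } N \gg W .
$$

For non-overlapping segments ($S = W$) this reduces to $K = \lfloor N / W \rfloor$, so each sample is processed at most once. As a worked example, $N = 1000$, $W = 100$, $S = 10$ gives $K = 91$ segments and $9100$ processed samples, roughly nine times the work of the $K = 10$ non-overlapping segments obtained with $S = 100$.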

Practical Examples: Choosing Between Window and Frame Methods

Choosing between Window and Frame methods depends on the nature of the time-series or sequential data. The Window method segments data into fixed-size chunks and suits uniform temporal structures, such as a sliding window over real-time sensor readings. Frame methods with adaptive boundaries handle variable-length patterns, making them suitable for tasks like speech recognition, where segment durations fluctuate.
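For the variable-length case, one simple way to obtain frames whose durations follow the data is to cut wherever activity starts and stops. The sketch below is an assumption-laden illustration, not a speech-recognition front end: `event_frames` is a hypothetical helper, and the amplitude `threshold` and `min_length` values are arbitrary.

```python
from typing import List, Sequence, Tuple


def event_frames(data: Sequence[float], threshold: float,
                 min_length: int = 1) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs, end exclusive, for runs where |sample| >= threshold."""
    frames = []
    start = None  # index where the current active run began, if any
    for i, x in enumerate(data):
        active = abs(x) >= threshold
        if active and start is None:
            start = i                        # a new run begins
        elif not active and start is not None:
            if i - start >= min_length:      # keep only runs that are long enough
                frames.append((start, i))
            start = None
    # Close a run that is still active when the data ends.
    if start is not None and len(data) - start >= min_length:
        frames.append((start, len(data)))
    return frames


signal = [0.0, 0.1, 0.9, 1.2, 0.8, 0.0, 0.05, 1.1, 0.0, 0.7, 0.9]
frames = event_frames(signal, threshold=0.5, min_length=2)
```

The resulting frames have different lengths (three samples and two samples), and the isolated spike at index 7 is ignored because it is shorter than `min_length`.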

Future Trends and Innovations in Sequential Data Handling

The Window method supports efficient pattern recognition over fixed-size data segments, while frame-based approaches with adaptive boundaries offer more flexibility for variable-length sequences. Emerging work in sequential data handling emphasizes adaptive windowing techniques and hybrid frame-window models built on deep neural networks, with the aim of improving prediction accuracy and real-time processing. Attention mechanisms and self-supervised learning are also being integrated to improve sequence representation and the scalability of sequential machine learning models.

Window method vs Frame method Infographic

techiny.com
