Data imputation involves filling in missing values in a dataset based on statistical techniques or machine learning models to maintain data integrity. Data interpolation estimates unknown data points within the range of a discrete set of known data points, often using methods like linear or spline interpolation. While both techniques address data gaps, imputation focuses on managing missing values in datasets for analysis, whereas interpolation aims to predict intermediate values in continuous data sequences.

Table of Comparison

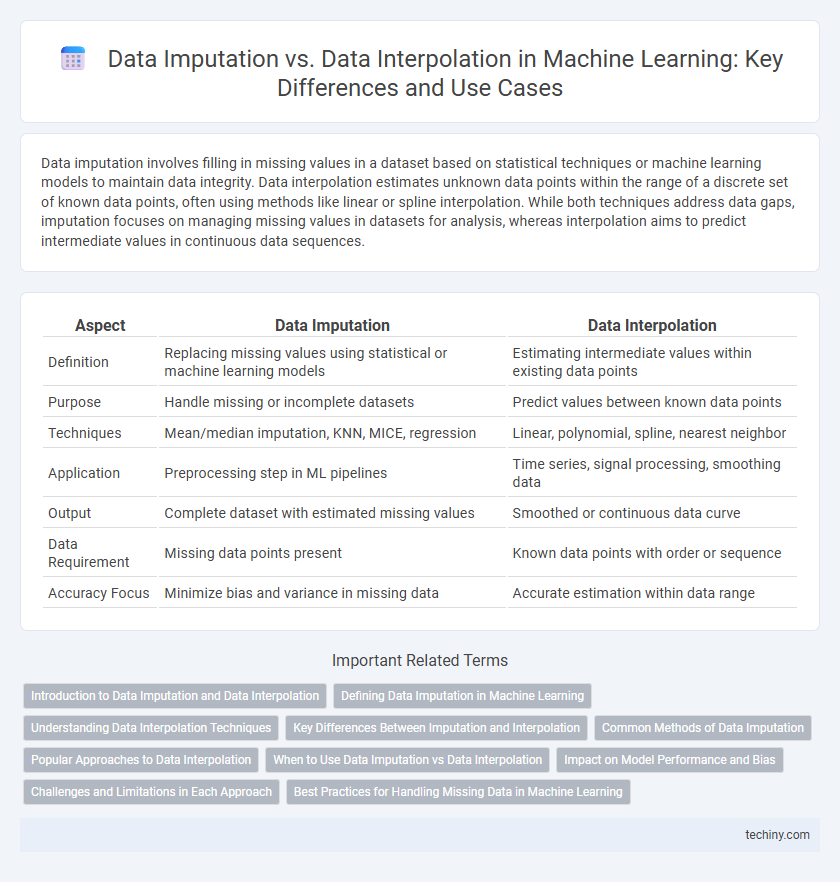

| Aspect | Data Imputation | Data Interpolation |

|---|---|---|

| Definition | Replacing missing values using statistical or machine learning models | Estimating intermediate values within existing data points |

| Purpose | Handle missing or incomplete datasets | Predict values between known data points |

| Techniques | Mean/median imputation, KNN, MICE, regression | Linear, polynomial, spline, nearest neighbor |

| Application | Preprocessing step in ML pipelines | Time series, signal processing, smoothing data |

| Output | Complete dataset with estimated missing values | Smoothed or continuous data curve |

| Data Requirement | Missing data points present | Known data points with order or sequence |

| Accuracy Focus | Minimize bias and variance in missing data | Accurate estimation within data range |

Introduction to Data Imputation and Data Interpolation

Data imputation involves replacing missing values in datasets using statistical methods such as mean, median, or model-based predictions to maintain data integrity for machine learning algorithms. Data interpolation estimates unknown data points within the range of a discrete set of known data points, commonly using linear, polynomial, or spline techniques to create continuous data representations. Both processes are crucial for preprocessing, enhancing model performance by addressing incomplete or sparse datasets.

Defining Data Imputation in Machine Learning

Data imputation in machine learning refers to the process of replacing missing or incomplete data with substituted values to maintain dataset integrity and enable accurate model training. Techniques such as mean imputation, k-nearest neighbors (KNN), and multivariate imputation by chained equations (MICE) are commonly employed to estimate these missing entries based on existing data patterns. Effective data imputation reduces bias and variance introduced by missing data, improving the performance and reliability of predictive models.

Understanding Data Interpolation Techniques

Data interpolation techniques in machine learning estimate missing data points within the range of a discrete set of known data points using methods such as linear, polynomial, or spline interpolation. These techniques assume a smooth underlying function and leverage surrounding data values to predict unknown values, enhancing dataset completeness without introducing external biases. Understanding the differences and applications of interpolation methods improves model accuracy by better handling incomplete or irregularly sampled datasets.

Key Differences Between Imputation and Interpolation

Data imputation involves filling in missing values within datasets using statistical or model-based techniques to maintain data integrity, whereas data interpolation estimates intermediate values within the range of discrete data points to create a continuous function. Imputation methods include mean, median, or predictive modeling, focusing on correcting incomplete data, while interpolation techniques like linear or spline interpolation generate new data points to support trend analysis. The key difference lies in imputation targeting missing entries for dataset completeness, whereas interpolation reconstructs continuous data patterns for smoother data representation.

Common Methods of Data Imputation

Common methods of data imputation in machine learning include mean, median, and mode substitution, which replace missing values with central tendency measures to preserve dataset integrity. Advanced techniques like k-Nearest Neighbors (k-NN) imputation and multiple imputation leverage statistical and probabilistic models to estimate missing data more accurately. Unlike data interpolation that predicts values within existing data ranges, data imputation focuses on handling missing or incomplete entries to improve model performance and reliability.

Popular Approaches to Data Interpolation

Popular approaches to data interpolation in machine learning include linear interpolation, polynomial interpolation, and spline interpolation, each designed to estimate missing values within the range of observed data points. Linear interpolation calculates values using straight-line segments between known points, ensuring simplicity and efficiency in time series and spatial data. Spline interpolation leverages piecewise polynomials for smooth curves, improving accuracy in datasets with nonlinear patterns or higher-order continuity requirements.

When to Use Data Imputation vs Data Interpolation

Data imputation is essential when handling missing data in datasets, especially in supervised machine learning tasks where accurate labels are required for training algorithms. Data interpolation is more suitable for estimating values within a sequence of data points, such as time series or spatial data, to maintain continuity and smoothness. Use data imputation for categorical or randomly missing data, and data interpolation for ordered data requiring estimation of intermediate values.

Impact on Model Performance and Bias

Data imputation methods, such as mean or median imputation, fill missing values to preserve dataset size, often reducing bias but potentially smoothing out important variance, which can affect model accuracy. In contrast, data interpolation estimates missing values based on existing temporal or spatial trends, maintaining data continuity but risking overfitting bias if the underlying patterns are not representative. Choosing between imputation and interpolation depends on the data structure and missingness mechanism, as inappropriate handling can lead to biased parameter estimates and degrade overall model performance.

Challenges and Limitations in Each Approach

Data imputation often struggles with bias introduction and can distort underlying data distributions, especially when missing values are not random, leading to inaccurate model training outcomes. Data interpolation is limited by its assumption of continuity and smoothness, which can fail in datasets with abrupt changes or non-linear patterns, resulting in oversimplified or misleading estimations. Both approaches face challenges in high-dimensional spaces where complex relationships and dependencies among variables make accurate reconstruction of missing data difficult.

Best Practices for Handling Missing Data in Machine Learning

Data imputation involves filling missing values using statistical measures such as mean, median, or model-based predictions to preserve dataset integrity, ensuring machine learning algorithms receive complete input. Data interpolation estimates missing points within existing data ranges, often used in time series or spatial data to maintain continuity and trends. Best practices include selecting imputation methods aligned with data type and missingness patterns, validating imputed data quality, and considering model robustness to handle imputed values without bias.

Data Imputation vs Data Interpolation Infographic

techiny.com

techiny.com