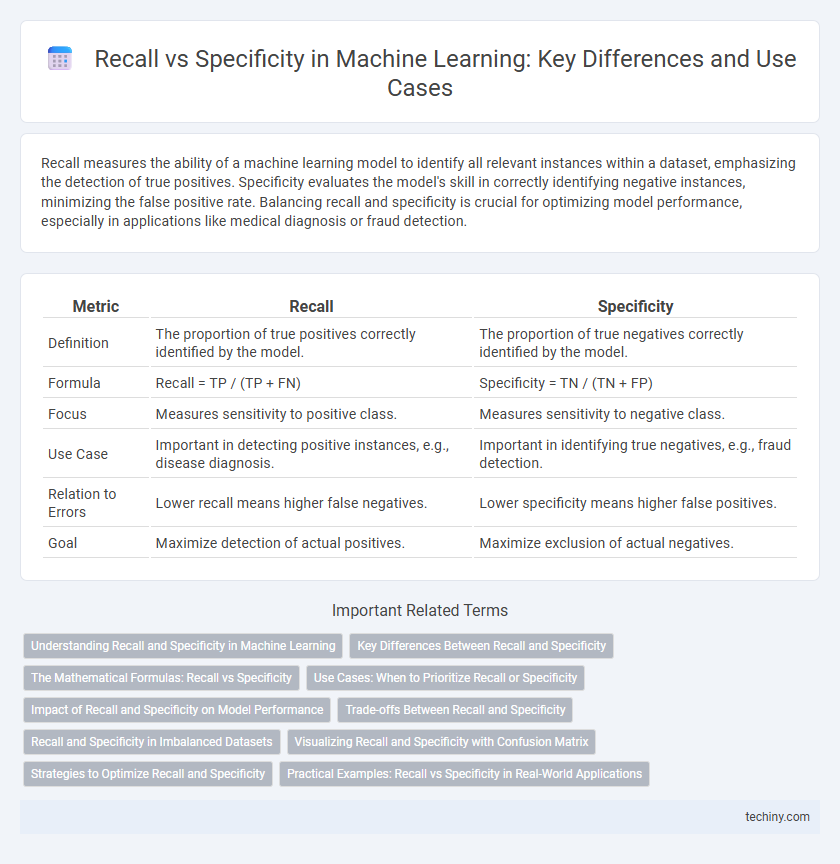

Recall measures the ability of a machine learning model to identify all relevant instances within a dataset, emphasizing the detection of true positives. Specificity evaluates the model's skill in correctly identifying negative instances, minimizing the false positive rate. Balancing recall and specificity is crucial for optimizing model performance, especially in applications like medical diagnosis or fraud detection.

Table of Comparison

| Metric | Recall | Specificity |

|---|---|---|

| Definition | The proportion of true positives correctly identified by the model. | The proportion of true negatives correctly identified by the model. |

| Formula | Recall = TP / (TP + FN) | Specificity = TN / (TN + FP) |

| Focus | Measures sensitivity to positive class. | Measures sensitivity to negative class. |

| Use Case | Important in detecting positive instances, e.g., disease diagnosis. | Important in identifying true negatives, e.g., fraud detection. |

| Relation to Errors | Lower recall means higher false negatives. | Lower specificity means higher false positives. |

| Goal | Maximize detection of actual positives. | Maximize exclusion of actual negatives. |

Understanding Recall and Specificity in Machine Learning

Recall measures the ability of a machine learning model to identify all relevant positive instances, calculated as the ratio of true positives to the sum of true positives and false negatives. Specificity, on the other hand, quantifies the model's capacity to correctly identify negative instances, expressed as the ratio of true negatives to the sum of true negatives and false positives. Balancing recall and specificity is crucial for optimizing classifier performance, especially in applications like medical diagnosis where false negatives and false positives carry significant consequences.

Key Differences Between Recall and Specificity

Recall measures the proportion of true positive instances correctly identified by a machine learning model, emphasizing sensitivity to actual positive cases. Specificity quantifies the ability of the model to correctly identify true negatives, reflecting accuracy in recognizing negative instances. The key difference lies in recall prioritizing detection of positives, while specificity focuses on correctly excluding negatives, impacting model evaluation and threshold selection.

The Mathematical Formulas: Recall vs Specificity

Recall, also known as sensitivity, is mathematically defined as TP / (TP + FN), where TP represents true positives and FN false negatives, measuring the proportion of actual positives correctly identified by the model. Specificity is calculated as TN / (TN + FP), with TN as true negatives and FP false positives, reflecting the model's ability to correctly identify negatives. These formulas quantify different aspects of classification performance, crucial in applications such as medical diagnosis and fraud detection.

Use Cases: When to Prioritize Recall or Specificity

Prioritize recall in use cases like medical diagnostics or fraud detection, where missing positive cases can lead to severe consequences or financial losses. Specificity becomes crucial in scenarios such as spam filtering or quality control, where minimizing false positives ensures efficiency and user satisfaction. Choosing between recall and specificity hinges on the relative costs of false negatives versus false positives within the application's risk framework.

Impact of Recall and Specificity on Model Performance

Recall measures a model's ability to correctly identify positive instances, directly impacting the detection rate of true positives in machine learning tasks such as disease diagnosis and fraud detection. Specificity quantifies the model's effectiveness in correctly identifying negative cases, reducing false positives and ensuring reliable exclusion of irrelevant instances. Balancing recall and specificity is crucial for optimizing overall model performance, particularly in domains where the cost of false negatives and false positives differs significantly.

Trade-offs Between Recall and Specificity

Recall measures the ability of a machine learning model to identify all relevant positive instances, while specificity quantifies how well the model correctly identifies negative instances. Enhancing recall often results in a decrease in specificity, leading to more false positives, whereas increasing specificity can reduce recall, causing more false negatives. Balancing the trade-offs between recall and specificity is crucial for optimizing model performance based on the problem context, such as prioritizing recall in medical diagnostics to avoid missing cases.

Recall and Specificity in Imbalanced Datasets

Recall measures the ability of a machine learning model to identify all relevant positive instances, making it crucial in imbalanced datasets where minority class detection is important. Specificity evaluates the proportion of true negatives correctly identified, helping to avoid false positives that can skew results in such datasets. Optimizing recall ensures better minority class recognition, while specificity maintains the reliability of negative predictions, both essential for balanced model performance in imbalanced data scenarios.

Visualizing Recall and Specificity with Confusion Matrix

Visualizing recall and specificity using a confusion matrix enhances understanding of a model's performance by clearly displaying true positives, false negatives, true negatives, and false positives. Recall, calculated as true positives divided by the sum of true positives and false negatives, measures the model's ability to identify relevant instances. Specificity, defined as true negatives over the sum of true negatives and false positives, evaluates the model's capacity to correctly reject irrelevant instances, making the confusion matrix an essential tool for balancing these metrics in classification tasks.

Strategies to Optimize Recall and Specificity

Strategies to optimize recall focus on minimizing false negatives by adjusting classification thresholds, employing balanced class weights, and using data augmentation to improve model generalization. Techniques to enhance specificity concentrate on reducing false positives through feature selection, regularization methods, and fine-tuning decision boundaries to better distinguish negative instances. Implementing cross-validation and domain-specific cost-sensitive learning further refines the balance between recall and specificity to meet application-specific requirements.

Practical Examples: Recall vs Specificity in Real-World Applications

In medical diagnostics, recall measures the ability to identify all patients with a disease, minimizing false negatives, which is critical for early treatment. Specificity evaluates how well a test correctly excludes healthy individuals, reducing false positives and unnecessary treatments, important in routine screenings. Balancing recall and specificity ensures effective decision-making, such as prioritizing recall in cancer detection while emphasizing specificity in confirming negative cases to avoid overtreatment.

recall vs specificity Infographic

techiny.com

techiny.com