Mean Squared Error (MSE) emphasizes larger errors by squaring the differences between predicted and actual values, making it sensitive to outliers in machine learning models. Mean Absolute Error (MAE) calculates the average absolute differences, providing a linear score that treats all errors equally without disproportionately penalizing large deviations. Choosing between MSE and MAE depends on the specific application, where MSE is preferred for highlighting significant errors and MAE offers robustness against noisy data.

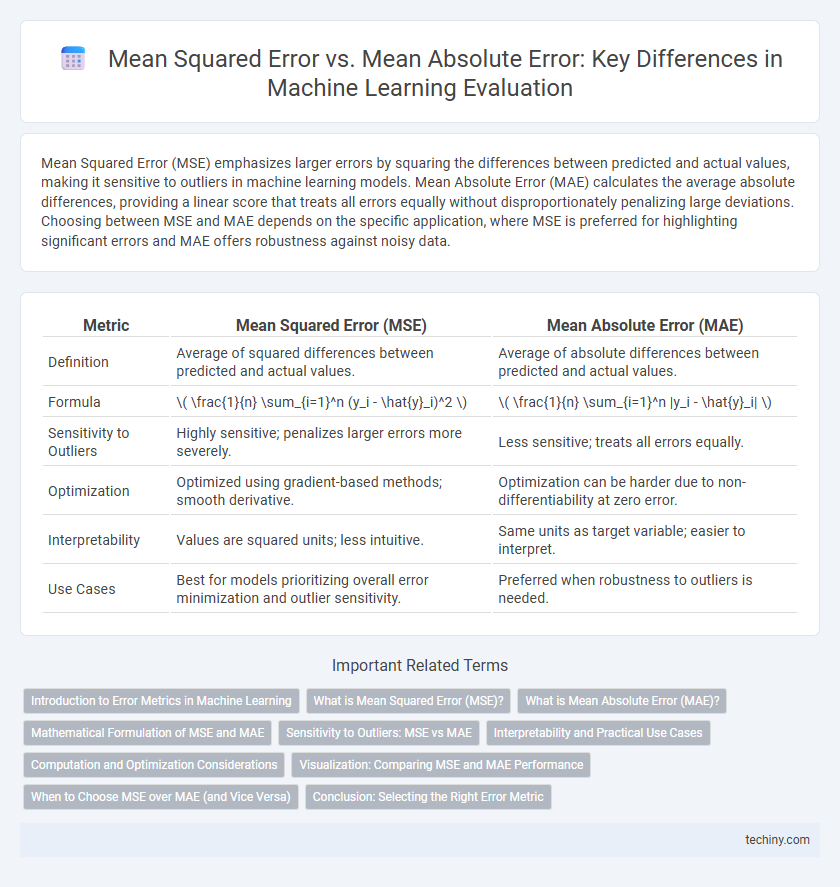

Table of Comparison

| Metric | Mean Squared Error (MSE) | Mean Absolute Error (MAE) |
|---|---|---|
| Definition | Average of squared differences between predicted and actual values. | Average of absolute differences between predicted and actual values. |
| Formula | \( \frac{1}{n} \sum_{i=1}^n (y_i - \hat{y}_i)^2 \) | \( \frac{1}{n} \sum_{i=1}^n \lvert y_i - \hat{y}_i \rvert \) |
| Sensitivity to Outliers | Highly sensitive; squaring penalizes larger errors disproportionately. | Less sensitive; each error contributes in proportion to its size. |
| Optimization | Smooth and differentiable everywhere; well suited to gradient-based methods. | Not differentiable at zero error, which can make gradient-based optimization harder. |
| Interpretability | Expressed in squared units of the target; less intuitive. | Same units as the target variable; easier to interpret. |
| Use Cases | Best when large errors are especially costly and should be penalized heavily. | Preferred when robustness to outliers is needed. |

Introduction to Error Metrics in Machine Learning

Mean Squared Error (MSE) quantifies the average squared difference between predicted and actual values, emphasizing larger errors due to its quadratic nature. Mean Absolute Error (MAE) calculates the average absolute difference, providing a linear measure of prediction accuracy less sensitive to outliers. Both metrics serve as essential tools for evaluating regression model performance, guiding the optimization process in machine learning algorithms.

What is Mean Squared Error (MSE)?

Mean Squared Error (MSE) is a widely used metric in machine learning to evaluate the accuracy of regression models by measuring the average of the squares of the errors between predicted and actual values. MSE emphasizes larger errors due to squaring, making it sensitive to outliers and useful for models where significant deviations are highly undesirable. It is also a common training loss: minimizing the squared differences, as ordinary least squares linear regression does, pushes a model to avoid large errors in particular.
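A minimal pure-Python sketch of the MSE formula follows. `mse` is a hypothetical helper written for illustration, and the three-point data set is made up; in practice a library implementation such as scikit-learn's `sklearn.metrics.mean_squared_error` would normally be used.

```python
def mse(y_true, y_pred):
    """Mean squared error: the average of squared (actual - predicted) differences."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and the same length")
    return sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / len(y_true)


# Toy data: the errors are 1, 0 and -2, so the squared errors are 1, 0 and 4.
print(mse([3.0, 5.0, 2.0], [2.0, 5.0, 4.0]))  # 1.6666666666666667
```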

What is Mean Absolute Error (MAE)?

Mean Absolute Error (MAE) measures the average magnitude of errors in a set of predictions, without considering their direction, by calculating the average absolute differences between predicted and actual values. MAE provides a straightforward interpretation of prediction accuracy, expressed in the same units as the target variable. It is often preferred in machine learning tasks where robustness to outliers is important, as it treats all errors equally without emphasizing larger deviations.
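The same toy data run through an MAE sketch shows the linear treatment of errors: the error of 2 counts as 2, not 4. As before, `mae` is a hypothetical helper for illustration, not a library function.

```python
def mae(y_true, y_pred):
    """Mean absolute error: the average of |actual - predicted| differences."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(y - y_hat) for y, y_hat in zip(y_true, y_pred)) / len(y_true)


# Toy data: the absolute errors are 1, 0 and 2.
print(mae([3.0, 5.0, 2.0], [2.0, 5.0, 4.0]))  # 1.0
```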

Mathematical Formulation of MSE and MAE

Mean Squared Error (MSE) is mathematically defined as the average of the squared differences between predicted values and actual values, expressed as \( \text{MSE} = \frac{1}{n} \sum_{i=1}^n (y_i - \hat{y}_i)^2 \), where \( y_i \) is the true value and \( \hat{y}_i \) is the predicted value. Mean Absolute Error (MAE) calculates the average of the absolute differences between predictions and actual observations, given by \( \text{MAE} = \frac{1}{n} \sum_{i=1}^n |y_i - \hat{y}_i| \). MSE penalizes larger errors more severely due to the squaring term, while MAE provides a linear score that is more robust to outliers.
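Plugging a small made-up example into both formulas, with \( y = (3, 5, 2) \) and \( \hat{y} = (2, 5, 4) \), shows the squaring at work: the single error of 2 contributes 4 to the MSE sum but only 2 to the MAE sum.

```latex
\begin{aligned}
\text{MSE} &= \frac{1}{3}\left[(3-2)^2 + (5-5)^2 + (2-4)^2\right] = \frac{1 + 0 + 4}{3} = \frac{5}{3} \approx 1.67 \\
\text{MAE} &= \frac{1}{3}\left(\lvert 3-2 \rvert + \lvert 5-5 \rvert + \lvert 2-4 \rvert\right) = \frac{1 + 0 + 2}{3} = 1
\end{aligned}
```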

Sensitivity to Outliers: MSE vs MAE

Mean Squared Error (MSE) is highly sensitive to outliers due to the squaring of error terms, which disproportionately penalizes larger errors and amplifies their impact on the overall loss. In contrast, Mean Absolute Error (MAE) grows only linearly with each error, providing a more robust measure when outliers are present because extreme values have a proportionally smaller influence on the total. This distinction makes MSE preferable for applications requiring sensitivity to large deviations, while MAE is suited for datasets with noisy or anomalous data points.
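A quick experiment makes the difference concrete. In the sketch below (made-up data, hypothetical `mse`/`mae` helpers), every prediction is first off by exactly 1; then a single prediction is made off by 11 instead. That one outlier multiplies MSE by 25 but MAE only by 3.

```python
def mse(y_true, y_pred):
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)

def mae(y_true, y_pred):
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)

y_true = [10.0, 12.0, 11.0, 13.0, 12.0]
clean = [11.0, 11.0, 12.0, 12.0, 13.0]    # every prediction off by 1
outlier = [11.0, 11.0, 12.0, 12.0, 23.0]  # last prediction off by 11 instead of 1

print(mse(y_true, clean), mae(y_true, clean))      # 1.0 1.0
print(mse(y_true, outlier), mae(y_true, outlier))  # 25.0 3.0
```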

Interpretability and Practical Use Cases

Mean Absolute Error (MAE) is the easier metric to interpret: it is expressed in the same units as the target variable, so an MAE of 5 on a price model means predictions are off by 5 currency units on average. Mean Squared Error (MSE) is expressed in squared units, which makes its magnitude harder to read directly, but its emphasis on large errors suits applications where a single large miss is costly, such as some regression tasks in finance or engineering. MAE benefits domains like healthcare or customer-satisfaction modeling, where consistent typical error matters more than occasional extreme errors. Choosing between MSE and MAE is a trade-off between penalizing large deviations and keeping the error measure easy to interpret for the use case at hand.
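The units difference can be checked by rescaling the target. In this sketch the lengths are hypothetical toy values and `mse`/`mae` are illustrative helpers; converting from meters to centimeters multiplies MAE by 100 but MSE by 100² = 10,000, because MSE lives in squared units.

```python
def mse(y_true, y_pred):
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)

def mae(y_true, y_pred):
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)

# Toy lengths in meters, then the same values converted to centimeters.
y_true_m, y_pred_m = [2, 4, 6], [3, 4, 4]
y_true_cm = [100 * y for y in y_true_m]
y_pred_cm = [100 * p for p in y_pred_m]

# MAE scales with the unit itself.
print(mae(y_true_m, y_pred_m), mae(y_true_cm, y_pred_cm))  # 1.0 100.0
# MSE scales with the square of the unit.
print(round(mse(y_true_cm, y_pred_cm) / mse(y_true_m, y_pred_m)))  # 10000
```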

Computation and Optimization Considerations

Both metrics are cheap to compute: squaring a difference and taking its absolute value each cost a single operation per prediction, so the difference in computational cost is negligible. The practical difference lies in optimization. MSE is smooth and differentiable everywhere, and its gradient is proportional to the error, so updates shrink naturally as predictions approach the targets; for linear regression, minimizing MSE even has a closed-form solution (ordinary least squares). MAE's gradient has a constant magnitude (only the sign of the error matters) and is undefined at zero error, so optimizers rely on subgradients, and with a fixed learning rate they can oscillate around the optimum and converge more slowly. These properties influence the choice of optimization techniques in machine learning workflows.
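The gradients with respect to each prediction make this visible. The sketch below uses hypothetical helpers and toy values: the MSE gradient, \( 2(\hat{y}_i - y_i)/n \), grows with the error, while the MAE gradient, \( \operatorname{sign}(\hat{y}_i - y_i)/n \), has the same magnitude for small and large errors. At exactly zero error the MAE gradient is undefined; returning 0 there is one valid subgradient choice, assumed here for illustration.

```python
def mse_grad(y_true, y_pred):
    """Gradient of MSE with respect to each prediction: 2 * (p - y) / n."""
    n = len(y_true)
    return [2 * (p - y) / n for y, p in zip(y_true, y_pred)]

def mae_grad(y_true, y_pred):
    """A subgradient of MAE with respect to each prediction: sign(p - y) / n.

    |p - y| is not differentiable at p == y; we pick 0 there (a valid subgradient).
    """
    n = len(y_true)

    def sign(x):
        return (x > 0) - (x < 0)  # 1, 0 or -1

    return [sign(p - y) / n for y, p in zip(y_true, y_pred)]

y_true = [0.0, 0.0, 0.0, 0.0]
y_pred = [0.1, 1.0, 10.0, 0.0]  # errors of increasing size, plus one exact hit
print(mse_grad(y_true, y_pred))  # [0.05, 0.5, 5.0, 0.0]
print(mae_grad(y_true, y_pred))  # [0.25, 0.25, 0.25, 0.0]
```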

Visualization: Comparing MSE and MAE Performance

Visualizing Mean Squared Error (MSE) and Mean Absolute Error (MAE) highlights key differences in error sensitivity and distribution impact in machine learning models. MSE amplifies larger errors due to squaring, resulting in a visualization with pronounced peaks for outliers, while MAE provides a linear scale that reflects the average magnitude of errors more evenly. Comparing performance through error plots or residual histograms helps identify model robustness to outliers and guides the selection of appropriate metrics for regression tasks.
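Before reaching for a plotting library, the effect can be seen numerically. This sketch computes each residual's share of the total squared error and of the total absolute error, then prints a crude text bar chart. The residuals are made up and `loss_shares` is a hypothetical helper; with one large residual, it accounts for roughly 91% of the squared-error total but only about 63% of the absolute-error total.

```python
def loss_shares(residuals):
    """Fraction of total squared error and total absolute error from each residual."""
    squared = [r * r for r in residuals]
    absolute = [abs(r) for r in residuals]
    sq_total, abs_total = sum(squared), sum(absolute)
    return [s / sq_total for s in squared], [a / abs_total for a in absolute]


residuals = [0.5, -1.0, 1.5, -0.5, 6.0]  # toy residuals (y - y_hat); the last is an outlier
sq_share, abs_share = loss_shares(residuals)

# Text "bar chart": one '#' per 5% of the respective total.
for r, s, a in zip(residuals, sq_share, abs_share):
    print(f"{r:5.1f}  MSE {'#' * round(s * 20):<20}  MAE {'#' * round(a * 20)}")
```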

When to Choose MSE over MAE (and Vice Versa)

Mean Squared Error (MSE) is preferable when large errors need to be penalized more heavily, making it ideal for models that require sensitivity to outliers and where error magnitude matters significantly. Mean Absolute Error (MAE) is better suited for datasets with outliers or when robustness is crucial, as it treats all errors linearly without disproportionately amplifying larger deviations. Choosing between MSE and MAE depends on the application's tolerance for outliers and the desired error sensitivity, with MSE benefiting regression tasks emphasizing precision and MAE favored in scenarios needing resilience to anomalies.
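One way to see what each choice implies: if a model can only predict a single constant, the constant that minimizes MSE is the mean of the targets, while the constant that minimizes MAE is the median. The sketch below confirms this by brute-force search over a grid of candidates; the targets are toy values with one outlier, and the helper names are hypothetical.

```python
import statistics

def mse_const(ys, c):
    """MSE of predicting the constant c for every target."""
    return sum((y - c) ** 2 for y in ys) / len(ys)

def mae_const(ys, c):
    """MAE of predicting the constant c for every target."""
    return sum(abs(y - c) for y in ys) / len(ys)

ys = [2.0, 3.0, 3.0, 4.0, 40.0]               # toy targets; 40.0 is an outlier
candidates = [i / 10 for i in range(0, 501)]  # 0.0, 0.1, ..., 50.0

best_mse = min(candidates, key=lambda c: mse_const(ys, c))
best_mae = min(candidates, key=lambda c: mae_const(ys, c))
print(best_mse, statistics.mean(ys))    # 10.4 10.4  (pulled toward the outlier)
print(best_mae, statistics.median(ys))  # 3.0 3.0    (unaffected by the outlier)
```

The outlier drags the MSE-optimal prediction far from the bulk of the data, which is exactly the behavior that makes MAE the more resilient choice on noisy targets.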

Conclusion: Selecting the Right Error Metric

Selecting the right error metric depends on the specific goals and nature of the machine learning model, where Mean Squared Error (MSE) penalizes larger errors more heavily, making it ideal for models requiring sensitivity to significant deviations. Mean Absolute Error (MAE) provides a more robust measure against outliers by treating all errors uniformly, which is beneficial when model interpretability and consistency across all error scales are prioritized. Understanding the trade-offs between MSE's sensitivity and MAE's robustness is crucial for optimizing model performance and aligning error measurement with business objectives.

Mean Squared Error vs Mean Absolute Error Infographic

techiny.com
