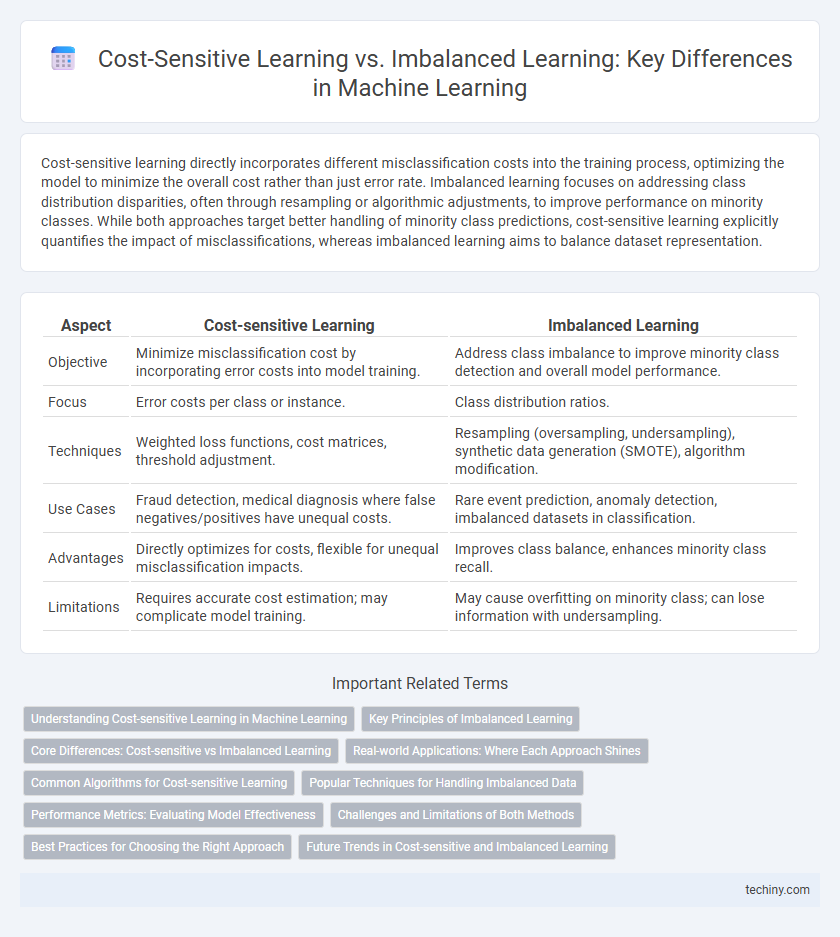

Cost-sensitive learning directly incorporates different misclassification costs into the training process, optimizing the model to minimize the overall cost rather than just error rate. Imbalanced learning focuses on addressing class distribution disparities, often through resampling or algorithmic adjustments, to improve performance on minority classes. While both approaches target better handling of minority class predictions, cost-sensitive learning explicitly quantifies the impact of misclassifications, whereas imbalanced learning aims to balance dataset representation.

Table of Comparison

| Aspect | Cost-sensitive Learning | Imbalanced Learning |

|---|---|---|

| Objective | Minimize misclassification cost by incorporating error costs into model training. | Address class imbalance to improve minority class detection and overall model performance. |

| Focus | Error costs per class or instance. | Class distribution ratios. |

| Techniques | Weighted loss functions, cost matrices, threshold adjustment. | Resampling (oversampling, undersampling), synthetic data generation (SMOTE), algorithm modification. |

| Use Cases | Fraud detection, medical diagnosis where false negatives/positives have unequal costs. | Rare event prediction, anomaly detection, imbalanced datasets in classification. |

| Advantages | Directly optimizes for costs, flexible for unequal misclassification impacts. | Improves class balance, enhances minority class recall. |

| Limitations | Requires accurate cost estimation; may complicate model training. | May cause overfitting on minority class; can lose information with undersampling. |

Understanding Cost-sensitive Learning in Machine Learning

Cost-sensitive learning in machine learning addresses the challenge of unequal misclassification costs by assigning different penalties to errors, optimizing models for minimizing overall cost rather than just error rate. Unlike imbalanced learning, which focuses on handling skewed class distributions, cost-sensitive learning directly incorporates the financial or operational impact of misclassifications into the training process. This approach improves decision-making accuracy in critical applications such as fraud detection, medical diagnosis, and risk management where the cost of false positives and false negatives varies significantly.

Key Principles of Imbalanced Learning

Imbalanced learning addresses data sets where certain classes are significantly underrepresented, focusing on techniques such as resampling, synthetic data generation, and algorithm modification to improve minority class recognition. Key principles involve maintaining a balance between sensitivity and specificity by adjusting class weights or applying threshold-moving strategies to mitigate bias toward the majority class. Effective imbalanced learning ensures improved model performance metrics like recall, precision, and F1-score for minority classes without compromising overall accuracy.

Core Differences: Cost-sensitive vs Imbalanced Learning

Cost-sensitive learning incorporates varying misclassification costs directly into the model's objective function to minimize overall cost, while imbalanced learning primarily addresses skewed class distributions by resampling or reweighting data to improve minority class predictions. Cost-sensitive methods explicitly assign different penalties for false positives and false negatives, enabling precise control over decision thresholds based on real-world consequences. In contrast, imbalanced learning techniques focus on balancing class representation or using specialized algorithms without explicit cost information, often relying on metrics like recall or F1-score for evaluation.

Real-world Applications: Where Each Approach Shines

Cost-sensitive learning excels in real-world applications where misclassification costs vary significantly, such as fraud detection and medical diagnosis, by minimizing costly errors through tailored loss functions. Imbalanced learning is particularly effective in scenarios with highly skewed class distributions, like rare event detection and fault diagnosis, ensuring minority class representation is adequately addressed. These approaches complement each other by combining cost considerations with class distribution handling for improved model performance in complex decision-making environments.

Common Algorithms for Cost-sensitive Learning

Common algorithms for cost-sensitive learning in machine learning include cost-sensitive decision trees, cost-sensitive support vector machines (SVM), and cost-sensitive neural networks, each designed to minimize misclassification costs rather than overall error rates. These algorithms incorporate cost matrices directly into their learning processes, adjusting decisions based on the varying costs of different types of errors. Techniques such as weighted loss functions and threshold tuning are frequently employed to enhance performance on datasets with unequal misclassification costs, distinguishing cost-sensitive learning from traditional imbalanced learning approaches.

Popular Techniques for Handling Imbalanced Data

Popular techniques for handling imbalanced data in machine learning include resampling methods such as oversampling the minority class with SMOTE (Synthetic Minority Over-sampling Technique) and undersampling the majority class to balance class distribution. Cost-sensitive learning incorporates misclassification costs directly into the model training process, adjusting the algorithm to penalize errors on minority classes more heavily. Ensemble methods like Balanced Random Forest and EasyEnsemble combine resampling with model aggregation to improve performance on imbalanced datasets.

Performance Metrics: Evaluating Model Effectiveness

Cost-sensitive learning incorporates misclassification costs directly into the model training, optimizing for metrics such as weighted accuracy, cost-sensitive error rate, and expected cost to better reflect real-world expenses. Imbalanced learning focuses on handling skewed class distributions by prioritizing metrics like F1-score, Area Under the Curve (AUC), Precision-Recall curves, and G-mean to evaluate model robustness across minority and majority classes. Selecting appropriate performance metrics is crucial as cost-sensitive learning emphasizes minimizing monetary loss, while imbalanced learning targets balanced accuracy and minority class recognition.

Challenges and Limitations of Both Methods

Cost-sensitive learning faces challenges in accurately estimating misclassification costs, which can lead to suboptimal model performance when costs are mis-specified or vary across applications. Imbalanced learning struggles with skewed class distributions that cause classifiers to be biased towards majority classes, often resulting in poor minority class recognition and overfitting when using resampling techniques. Both methods encounter limitations in generalizability and scalability, especially in complex or high-dimensional datasets where cost matrices or imbalance ratios are not well-defined or fluctuate dynamically.

Best Practices for Choosing the Right Approach

Cost-sensitive learning focuses on assigning different misclassification costs to classes, making it essential when false positives and false negatives have unequal consequences. Imbalanced learning addresses class distribution disparities by techniques such as resampling or synthetic data generation to improve minority class recognition. The best practice involves evaluating problem-specific cost matrices and dataset imbalance ratios before selecting an approach that optimizes predictive accuracy and minimizes overall risk.

Future Trends in Cost-sensitive and Imbalanced Learning

Future trends in cost-sensitive and imbalanced learning emphasize the integration of advanced deep learning architectures with dynamic cost adjustment to improve model robustness. Research is increasingly focusing on developing real-time adaptive algorithms that can automatically recalibrate misclassification costs based on evolving data distributions. Enhanced interpretability and the fusion of cost-sensitive techniques with synthetic data generation methods are expected to address the persistent challenge of skewed class distributions effectively.

Cost-sensitive Learning vs Imbalanced Learning Infographic

techiny.com

techiny.com