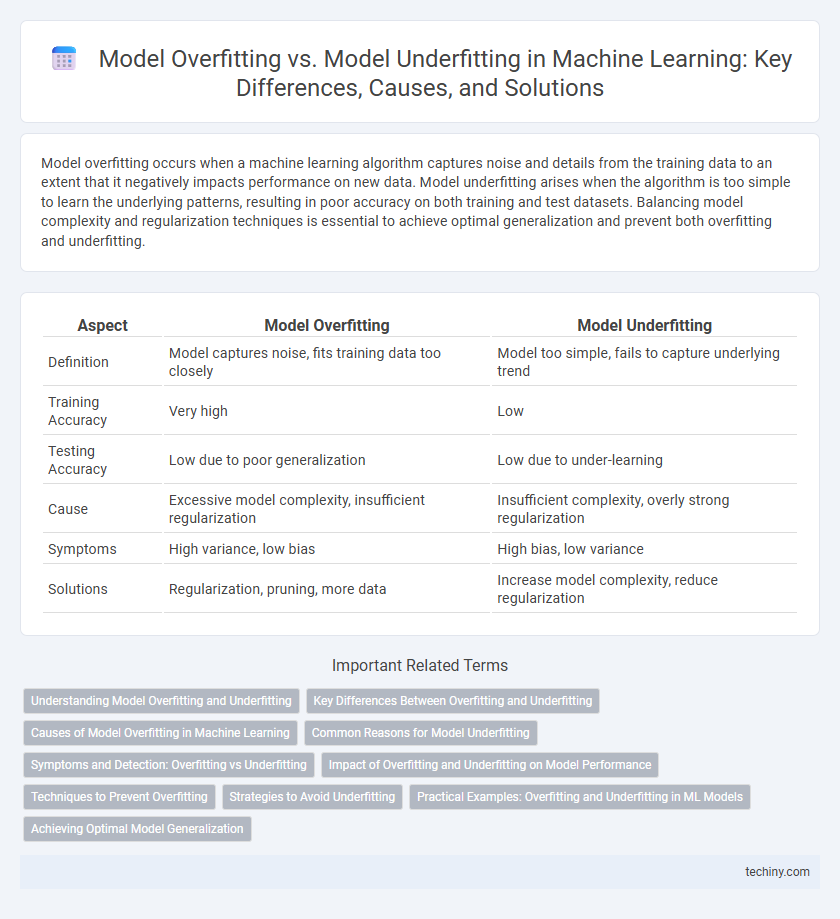

Model overfitting occurs when a machine learning algorithm captures noise and details from the training data to an extent that it negatively impacts performance on new data. Model underfitting arises when the algorithm is too simple to learn the underlying patterns, resulting in poor accuracy on both training and test datasets. Balancing model complexity and regularization techniques is essential to achieve optimal generalization and prevent both overfitting and underfitting.

Table of Comparison

| Aspect | Model Overfitting | Model Underfitting |

|---|---|---|

| Definition | Model captures noise, fits training data too closely | Model too simple, fails to capture underlying trend |

| Training Accuracy | Very high | Low |

| Testing Accuracy | Low due to poor generalization | Low due to under-learning |

| Cause | Excessive model complexity, insufficient regularization | Insufficient complexity, overly strong regularization |

| Symptoms | High variance, low bias | High bias, low variance |

| Solutions | Regularization, pruning, more data | Increase model complexity, reduce regularization |

Understanding Model Overfitting and Underfitting

Model overfitting occurs when a machine learning model captures noise and fluctuations in the training data, resulting in poor generalization to new datasets, while underfitting happens when the model is too simple to capture the underlying pattern, leading to high bias and low accuracy. Techniques such as cross-validation, regularization (L1, L2), and pruning help mitigate overfitting, whereas increasing model complexity, adding features, or reducing regularization can address underfitting. Evaluating performance metrics like training and validation error curves provides critical insight into the balance between bias and variance, guiding optimal model tuning.

Key Differences Between Overfitting and Underfitting

Overfitting occurs when a machine learning model learns the noise and details in the training data to an extent that it negatively impacts its performance on new data, exhibiting high variance but low bias. Underfitting, characterized by high bias and low variance, happens when the model is too simple to capture the underlying patterns of the data, resulting in poor performance on both training and test datasets. Key differences include overfitting's tendency to cause excellent training accuracy but poor generalization, while underfitting leads to poor accuracy across all datasets due to inadequate model complexity.

Causes of Model Overfitting in Machine Learning

Model overfitting in machine learning occurs when a model learns noise and random fluctuations in the training data rather than the underlying patterns, often due to excessive model complexity or insufficient training data. High variance in the model's predictions and overly complex architectures like deep neural networks without adequate regularization contribute significantly to overfitting. Lack of proper cross-validation techniques and failure to use regularization methods such as dropout, L1/L2 regularization, or early stopping exacerbate the risk of overfitting, leading to poor generalization on unseen data.

Common Reasons for Model Underfitting

Model underfitting occurs when a machine learning model is too simple to capture the underlying patterns in the training data, often due to insufficient model complexity or inadequate feature selection. Common reasons include using linear models on non-linear problems, insufficient training data, or excessive regularization that restricts the model's ability to learn. Underfitting results in high bias and poor performance on both training and testing datasets, signaling the need for more expressive models or enhanced feature engineering.

Symptoms and Detection: Overfitting vs Underfitting

Model overfitting is characterized by high accuracy on training data but poor generalization to unseen data, often detected by a large gap between training and validation error. Underfitting occurs when both training and validation errors remain high, indicating the model is too simple to capture the underlying data patterns. Monitoring learning curves and using techniques like cross-validation helps in diagnosing these symptoms accurately.

Impact of Overfitting and Underfitting on Model Performance

Overfitting occurs when a machine learning model captures noise instead of the underlying data pattern, leading to high accuracy on training data but poor generalization to new data. Underfitting happens when the model fails to learn the data complexity, resulting in low performance on both training and testing datasets. Both issues degrade model performance by either causing excessive variance or high bias, reducing predictive reliability.

Techniques to Prevent Overfitting

Techniques to prevent overfitting in machine learning include regularization methods such as L1 (Lasso) and L2 (Ridge) regularization, which add penalties to model complexity, effectively reducing variance. Dropout, commonly used in neural networks, randomly deactivates neurons during training to prevent co-adaptation and improve generalization. Early stopping monitors validation loss and halts training when performance degrades, avoiding excessive fitting to noise in the training data.

Strategies to Avoid Underfitting

Strategies to avoid underfitting in machine learning include increasing model complexity by choosing algorithms capable of capturing more intricate patterns, such as deep neural networks or ensemble methods like Random Forests. Enhancing feature engineering through the creation of new features or transforming existing ones helps the model better understand the underlying data distribution. Furthermore, reducing regularization strength and ensuring sufficient training epochs allow the model to learn more comprehensive representations without being overly constrained.

Practical Examples: Overfitting and Underfitting in ML Models

Overfitting occurs when a machine learning model performs exceptionally well on training data but poorly on unseen data, such as a decision tree that memorizes training examples but fails to generalize. Underfitting happens when a model is too simple to capture the underlying patterns, like a linear regression trying to fit a complex nonlinear relationship, leading to poor performance on both training and test sets. Practical examples include tuning model complexity and regularization to balance bias and variance, ensuring models generalize well beyond the training dataset.

Achieving Optimal Model Generalization

Model overfitting occurs when a machine learning algorithm captures noise and random fluctuations in the training data, resulting in high accuracy on training sets but poor performance on unseen data. Model underfitting happens when the algorithm is too simple to learn the underlying patterns in the data, leading to low accuracy on both training and test sets. Achieving optimal model generalization involves balancing bias and variance, using techniques such as cross-validation, regularization, and hyperparameter tuning to enhance predictive performance on new inputs.

model overfitting vs model underfitting Infographic

techiny.com

techiny.com