K-nearest neighbors (KNN) is a supervised learning algorithm used for classification and regression by identifying the closest data points based on distance metrics. In contrast, k-means clustering is an unsupervised learning technique that partitions data into k distinct clusters by minimizing the variance within each cluster. Both methods rely on distance calculations but serve fundamentally different purposes: KNN predicts labels for new data based on training examples, while k-means uncovers inherent groupings in unlabeled data.

Table of Comparison

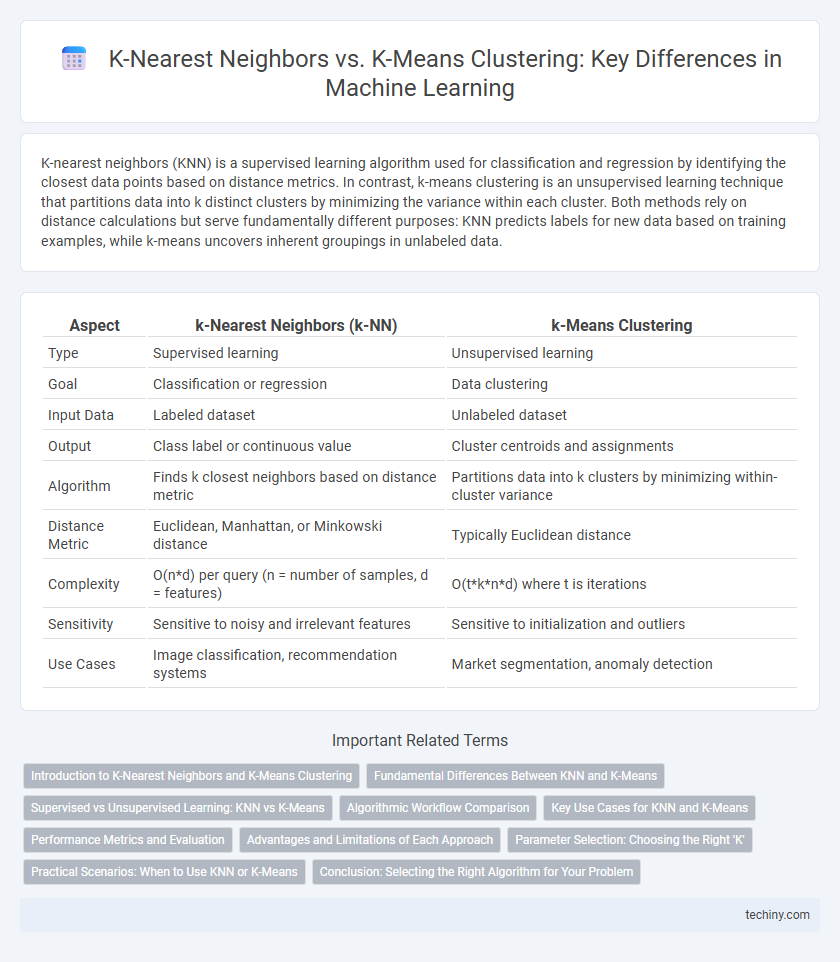

| Aspect | k-Nearest Neighbors (k-NN) | k-Means Clustering |

|---|---|---|

| Type | Supervised learning | Unsupervised learning |

| Goal | Classification or regression | Data clustering |

| Input Data | Labeled dataset | Unlabeled dataset |

| Output | Class label or continuous value | Cluster centroids and assignments |

| Algorithm | Finds k closest neighbors based on distance metric | Partitions data into k clusters by minimizing within-cluster variance |

| Distance Metric | Euclidean, Manhattan, or Minkowski distance | Typically Euclidean distance |

| Complexity | O(n*d) per query (n = number of samples, d = features) | O(t*k*n*d) where t is iterations |

| Sensitivity | Sensitive to noisy and irrelevant features | Sensitive to initialization and outliers |

| Use Cases | Image classification, recommendation systems | Market segmentation, anomaly detection |

Introduction to K-Nearest Neighbors and K-Means Clustering

K-Nearest Neighbors (KNN) is a supervised machine learning algorithm used primarily for classification and regression by identifying the closest data points in feature space based on distance metrics like Euclidean distance. K-Means clustering is an unsupervised learning technique that partitions data into k clusters by minimizing the variance within each cluster, iteratively updating centroids to represent cluster centers. Both algorithms rely on proximity but differ fundamentally in their objectives: KNN focuses on predicting labels using labeled data, whereas K-Means identifies inherent groupings without pre-assigned labels.

Fundamental Differences Between KNN and K-Means

K-nearest neighbors (KNN) is a supervised learning algorithm that classifies data points based on the labels of their closest neighbors, relying on distance metrics and labeled training data. In contrast, k-means clustering is an unsupervised learning method that partitions data into k clusters by minimizing intra-cluster variance without requiring labeled data. KNN emphasizes classification or regression tasks, while k-means focuses on discovering inherent data groupings through iterative centroid updates.

Supervised vs Unsupervised Learning: KNN vs K-Means

K-nearest neighbors (KNN) is a supervised learning algorithm that classifies data points based on labeled training examples by identifying the closest neighbors in feature space. In contrast, k-means clustering is an unsupervised learning technique that partitions unlabeled data into k distinct clusters by minimizing within-cluster variance. KNN leverages known outputs for prediction accuracy, while k-means discovers inherent structures without prior labels.

Algorithmic Workflow Comparison

K-nearest neighbors (KNN) classifies data points by identifying the closest labeled examples based on distance metrics, while k-means clustering partitions data into k clusters by minimizing intra-cluster variance through iterative centroid updates. KNN operates in a supervised learning context requiring labeled data, leveraging a straightforward prediction phase driven by neighbor voting. K-means clustering functions as an unsupervised method, iteratively refining cluster centers without prior labels, focusing on intra-cluster similarity to uncover inherent data structures.

Key Use Cases for KNN and K-Means

K-nearest neighbors (KNN) excels in classification tasks such as image recognition, fraud detection, and recommendation systems due to its instance-based learning and simplicity. K-means clustering is primarily used for unsupervised learning applications like customer segmentation, market basket analysis, and anomaly detection by grouping data points into distinct clusters. Both algorithms serve vital roles in pattern recognition but differ fundamentally in supervised versus unsupervised contexts.

Performance Metrics and Evaluation

K-nearest neighbors (KNN) performance is typically evaluated using accuracy, precision, recall, and F1-score due to its supervised learning nature, while k-means clustering relies on unsupervised metrics like silhouette score, Davies-Bouldin index, and inertia to assess cluster compactness and separation. KNN's classification results depend heavily on the choice of k and distance metrics, directly influencing true positive and false positive rates. In contrast, k-means performance metrics focus on minimizing within-cluster variance and maximizing inter-cluster distance, affecting the quality and interpretability of the clustering results.

Advantages and Limitations of Each Approach

K-nearest neighbors (KNN) excels in classification tasks with clear label boundaries, offering simplicity and intuitive results but suffers from high computational cost and sensitivity to irrelevant features in large datasets. K-means clustering efficiently partitions data into distinct groups by minimizing intra-cluster variance, making it ideal for unsupervised learning; however, it requires prior knowledge of cluster numbers and is prone to failing with non-spherical cluster shapes or noisy data. Choosing between KNN and K-means depends on whether the problem is supervised classification or unsupervised clustering, balancing accuracy needs against scalability and data structure assumptions.

Parameter Selection: Choosing the Right 'K'

In k-nearest neighbors (KNN), selecting the right 'K' balances bias and variance, where a small 'K' can lead to noisy predictions while a large 'K' smooths class boundaries. In contrast, k-means clustering requires careful choice of 'K' to optimize cluster compactness and separation, often guided by metrics like the elbow method or silhouette score. Both algorithms' performance heavily depends on this parameter, impacting classification accuracy in KNN and clustering validity in k-means.

Practical Scenarios: When to Use KNN or K-Means

K-nearest neighbors (KNN) excels in supervised learning tasks where labeled data is available and accurate classification or regression predictions are needed, such as image recognition or customer segmentation with known categories. In contrast, k-means clustering is ideal for unsupervised learning scenarios where discovering hidden patterns or grouping unlabeled data is essential, like market basket analysis or document clustering. Choosing between KNN and k-means depends on whether the problem requires classification based on proximity to labeled examples or identifying intrinsic data groupings without prior labels.

Conclusion: Selecting the Right Algorithm for Your Problem

K-nearest neighbors (KNN) excels in supervised classification and regression tasks by leveraging labeled data to predict outcomes based on proximity to known samples. K-means clustering is best suited for unsupervised learning scenarios where discovering inherent groupings without predefined labels is essential. Choosing between KNN and k-means depends on whether the problem involves labeled data for prediction or unlabeled data for pattern discovery.

k-nearest neighbors vs k-means clustering Infographic

techiny.com

techiny.com