Data leakage occurs when information from outside the training dataset is used to create the model, causing overly optimistic performance and poor generalization on new data. Data drift refers to changes in the input data distribution over time, leading to decreased model accuracy as the model encounters data it was not trained on. Monitoring both data leakage and data drift is essential for maintaining reliable and robust machine learning models in production environments.

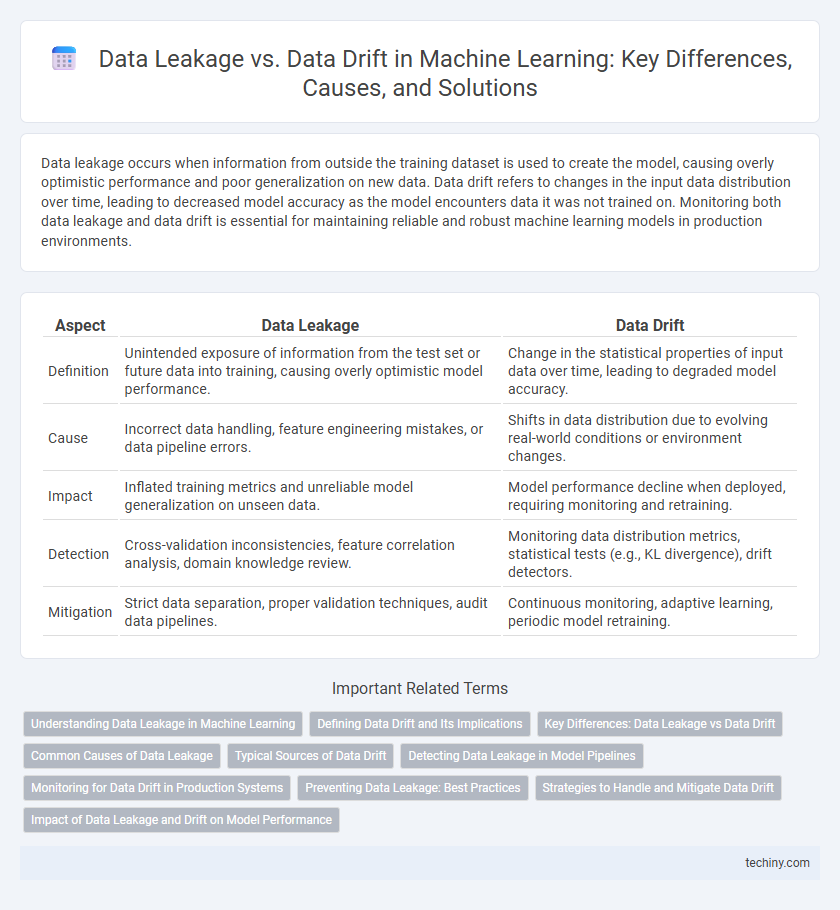

Table of Comparison

| Aspect | Data Leakage | Data Drift |

|---|---|---|

| Definition | Unintended exposure of information from the test set or future data into training, causing overly optimistic model performance. | Change in the statistical properties of input data over time, leading to degraded model accuracy. |

| Cause | Incorrect data handling, feature engineering mistakes, or data pipeline errors. | Shifts in data distribution due to evolving real-world conditions or environment changes. |

| Impact | Inflated training metrics and unreliable model generalization on unseen data. | Model performance decline when deployed, requiring monitoring and retraining. |

| Detection | Cross-validation inconsistencies, feature correlation analysis, domain knowledge review. | Monitoring data distribution metrics, statistical tests (e.g., KL divergence), drift detectors. |

| Mitigation | Strict data separation, proper validation techniques, audit data pipelines. | Continuous monitoring, adaptive learning, periodic model retraining. |

Understanding Data Leakage in Machine Learning

Data leakage in machine learning occurs when information from outside the training dataset unintentionally influences the model, leading to overly optimistic performance estimates. It often arises from including future data or target labels during training, which masks the true generalization ability of the model. Detecting and preventing data leakage is critical to ensure reliable predictions and maintain model integrity in real-world applications.

Defining Data Drift and Its Implications

Data drift refers to the gradual or sudden changes in the statistical properties of input data over time, which can degrade the performance of machine learning models. These shifts often arise from evolving user behavior, environmental changes, or updates in data collection methods, leading to inaccurate predictions or model instability. Monitoring data drift and implementing retraining strategies are essential to maintaining model accuracy and ensuring reliable decision-making in production environments.

Key Differences: Data Leakage vs Data Drift

Data leakage occurs when information from outside the training dataset is inappropriately used to create the model, causing overly optimistic performance metrics. Data drift refers to changes in the input data distribution or underlying relationships over time, which can degrade model accuracy during deployment. Unlike data leakage, which is a modeling error fixed during training, data drift requires ongoing monitoring and adaptation of the model to maintain predictive reliability.

Common Causes of Data Leakage

Data leakage commonly occurs when sensitive information or target variable data inadvertently becomes part of the training dataset, leading to overly optimistic model performance. Frequent causes include improper feature selection where future data or labels leak into training features, and insufficient separation between training and testing datasets. Preventing data leakage requires rigorous validation protocols and careful dataset partitioning during the machine learning pipeline.

Typical Sources of Data Drift

Typical sources of data drift include changes in the underlying data distributions caused by evolving user behaviors, system updates, or external factors such as seasonality and market trends. Data drift often occurs when the statistical properties of input features or target variables shift over time, leading to model performance degradation. Identifying and monitoring these changes through regular data analysis and feature distribution tracking is essential for maintaining accurate machine learning predictions.

Detecting Data Leakage in Model Pipelines

Detecting data leakage in model pipelines involves monitoring for unintended exposure of target information during training, which can falsely inflate model performance. Key techniques include rigorous separation of training and test datasets, implementation of cross-validation without overlap, and thorough feature auditing to identify variables derived from future or target data. Automated tools leveraging statistical tests and explainability methods can enhance detection by flagging anomalies indicative of leakage within complex machine learning workflows.

Monitoring for Data Drift in Production Systems

Monitoring for data drift in production systems involves continuously analyzing input data distributions to detect significant changes that may degrade machine learning model performance. Key techniques include statistical tests such as the Kolmogorov-Smirnov test, population stability index (PSI), and embedding-based similarity metrics to identify shifts in feature distributions. Implementing automated alerts and retraining pipelines ensures timely adaptation to data drift, maintaining model accuracy and reliability in dynamic environments.

Preventing Data Leakage: Best Practices

Preventing data leakage in machine learning involves strict separation of training, validation, and test datasets to ensure no information from the target variable leaks into the feature set. Techniques such as proper feature engineering pipelines, time-based splitting for time series data, and careful handling of data transformations minimize leakage risks. Regular auditing of models and datasets alongside cross-validation helps maintain data integrity and improves model generalization performance.

Strategies to Handle and Mitigate Data Drift

Detecting and addressing data drift involves continuous monitoring of model input distributions and retraining models with recent, representative datasets. Implementing automated data validation pipelines and alert systems helps promptly identify deviations in feature statistics or label distributions. Leveraging adaptive learning techniques, such as online learning or incremental model updates, ensures models remain robust against evolving data patterns.

Impact of Data Leakage and Drift on Model Performance

Data leakage introduces information into the training process that would not be available during actual model deployment, causing overly optimistic performance metrics and poor generalization to new data. Data drift occurs when the underlying data distribution changes over time, leading to degradation in model accuracy and reliability as the model encounters patterns it was not trained on. Both data leakage and data drift significantly impact model robustness, necessitating continuous monitoring and retraining to maintain optimal machine learning performance.

Data Leakage vs Data Drift Infographic

techiny.com

techiny.com