Multilayer perceptrons (MLPs) excel in modeling complex, non-linear relationships through layered neural networks, enabling them to learn high-level abstractions from large datasets. Support vector machines (SVMs) optimize classification by maximizing the margin between classes using kernel functions, making them effective for smaller, well-defined datasets with clear boundaries. While MLPs offer flexibility and scalability for deep learning tasks, SVMs provide robustness and precision in high-dimensional spaces with limited samples.

Table of Comparison

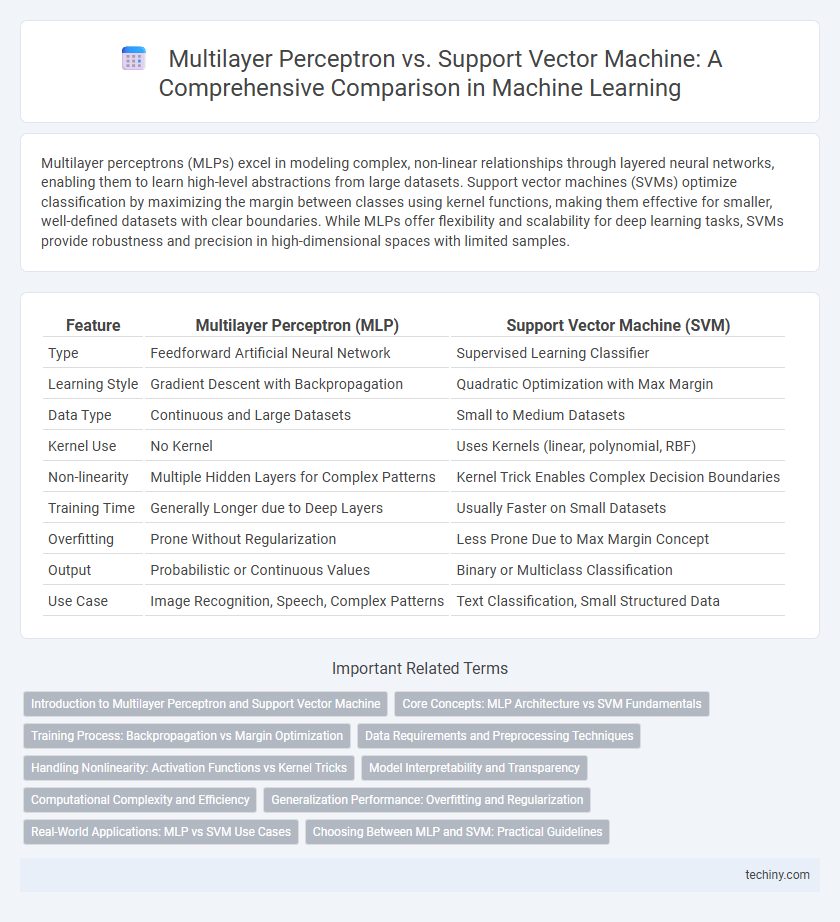

| Feature | Multilayer Perceptron (MLP) | Support Vector Machine (SVM) |

|---|---|---|

| Type | Feedforward Artificial Neural Network | Supervised Learning Classifier |

| Learning Style | Gradient Descent with Backpropagation | Quadratic Optimization with Max Margin |

| Data Type | Continuous and Large Datasets | Small to Medium Datasets |

| Kernel Use | No Kernel | Uses Kernels (linear, polynomial, RBF) |

| Non-linearity | Multiple Hidden Layers for Complex Patterns | Kernel Trick Enables Complex Decision Boundaries |

| Training Time | Generally Longer due to Deep Layers | Usually Faster on Small Datasets |

| Overfitting | Prone Without Regularization | Less Prone Due to Max Margin Concept |

| Output | Probabilistic or Continuous Values | Binary or Multiclass Classification |

| Use Case | Image Recognition, Speech, Complex Patterns | Text Classification, Small Structured Data |

Introduction to Multilayer Perceptron and Support Vector Machine

Multilayer Perceptron (MLP) is a feedforward artificial neural network model that consists of multiple layers of nodes, enabling it to learn complex patterns through nonlinear activation functions and backpropagation. Support Vector Machine (SVM) is a supervised learning algorithm that constructs hyperplanes in high-dimensional space to maximize the margin between different classes for classification tasks. Both MLP and SVM are powerful techniques used in machine learning for pattern recognition, but MLP excels in handling large datasets with complex patterns, while SVM is effective in cases with clear margin separation and smaller sample sizes.

Core Concepts: MLP Architecture vs SVM Fundamentals

Multilayer Perceptron (MLP) architecture consists of interconnected layers of neurons using nonlinear activation functions to model complex patterns through forward propagation and backpropagation for training. Support Vector Machine (SVM) fundamentals rely on finding an optimal hyperplane that maximizes the margin between different class data points in a high-dimensional feature space, often employing kernel functions for non-linear classification. The key distinction lies in MLP's deep learning approach with multiple hidden layers versus SVM's focus on margin maximization and kernel trick for classification efficiency.

Training Process: Backpropagation vs Margin Optimization

Multilayer Perceptrons (MLPs) utilize backpropagation during the training process, which involves gradient descent algorithms to minimize a loss function by adjusting network weights through multiple layers. Support Vector Machines (SVMs) rely on margin optimization, where the training aims to find the hyperplane that maximizes the margin between different classes using convex optimization techniques. While MLPs adaptively learn complex patterns with iterative weight updates, SVMs focus on finding a global optimum solution that maximizes class separation in the feature space.

Data Requirements and Preprocessing Techniques

Multilayer perceptrons (MLPs) require large amounts of labeled data and benefit from normalized input features to improve convergence during training, often employing techniques like min-max scaling or z-score normalization. Support vector machines (SVMs) perform well with smaller datasets and rely heavily on careful feature scaling, such as standardization, to maximize the margin between classes. While MLPs can handle raw input with minimal preprocessing, SVMs demand rigorous preprocessing steps including feature selection and kernel transformations to optimize model performance.

Handling Nonlinearity: Activation Functions vs Kernel Tricks

Multilayer Perceptrons (MLPs) handle nonlinearity through activation functions like ReLU, sigmoid, and tanh, which enable the network to learn complex, non-linear patterns by introducing non-linear transformations at each neuron. Support Vector Machines (SVMs) address nonlinearity using kernel tricks, such as the radial basis function (RBF) or polynomial kernels, mapping input features into higher-dimensional space to find linear separability. While MLPs learn representations through layered transformations and backpropagation, SVMs rely on kernel functions to implicitly project data, making kernel selection critical for performance with non-linear datasets.

Model Interpretability and Transparency

Multilayer Perceptrons (MLPs) offer limited model interpretability due to their complex, layered architecture and non-linear transformations, which often act as a "black box." Support Vector Machines (SVMs) provide greater transparency by relying on a subset of critical support vectors to define the decision boundary, making it easier to understand which data points influence the model. Techniques such as feature importance and margin visualization further enhance the interpretability of SVMs compared to the deep, distributed representations in MLPs.

Computational Complexity and Efficiency

Multilayer Perceptrons (MLPs) typically require more computational resources during training due to backpropagation through multiple layers, leading to longer training times especially with large datasets. Support Vector Machines (SVMs) have a computational complexity that scales between quadratic and cubic with the number of training samples, often making them less efficient on very large datasets. In practice, MLPs benefit from parallel processing on GPUs, which can offset their complexity, whereas SVMs tend to be more efficient on smaller datasets with clear margin separation.

Generalization Performance: Overfitting and Regularization

Multilayer perceptrons (MLPs) often require explicit regularization techniques such as dropout and L2 regularization to mitigate overfitting, enhancing their generalization performance on complex datasets. Support vector machines (SVMs) inherently control overfitting through the maximization of the margin between classes, providing strong generalization even in high-dimensional spaces. The choice between MLP and SVM depends on dataset size and feature complexity, with SVMs excelling in smaller, clearer margin problems and MLPs benefiting from large-scale data with appropriate regularization.

Real-World Applications: MLP vs SVM Use Cases

Multilayer perceptrons (MLPs) excel in complex, large-scale data scenarios such as image recognition, speech processing, and natural language understanding due to their deep learning architecture and ability to model non-linear relationships. Support vector machines (SVMs) are highly effective for smaller datasets with clear margin separations, commonly applied in bioinformatics, text classification, and fraud detection where precision and interpretability are crucial. While MLPs scale better with increasing data and feature complexity, SVMs often outperform in high-dimensional, sparse data contexts by maximizing the margin between classes.

Choosing Between MLP and SVM: Practical Guidelines

Multilayer Perceptrons (MLPs) excel in handling large-scale datasets with complex, non-linear patterns due to their deep learning architecture, making them suitable for image and speech recognition tasks. Support Vector Machines (SVMs) perform well with smaller, high-dimensional datasets, offering robust classification boundaries and faster training on limited data. Choosing between MLP and SVM depends on dataset size, feature complexity, and computational resources, with MLP favored for intricate pattern recognition and SVM for clear-margin classification with fewer samples.

multilayer perceptron vs support vector machine Infographic

techiny.com

techiny.com