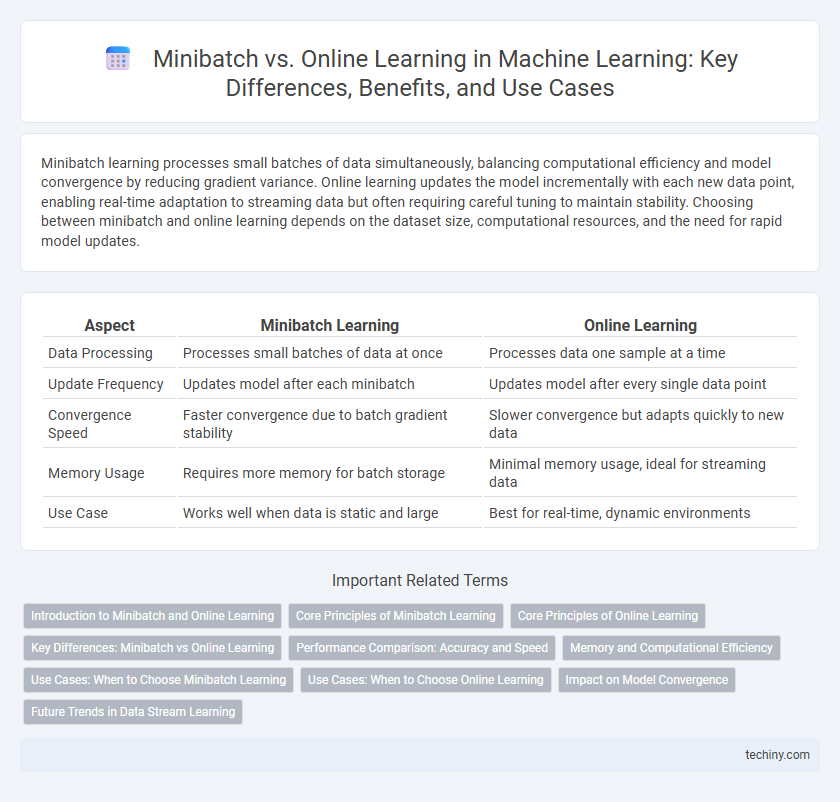

Minibatch learning processes small batches of data simultaneously, balancing computational efficiency and model convergence by reducing gradient variance. Online learning updates the model incrementally with each new data point, enabling real-time adaptation to streaming data but often requiring careful tuning to maintain stability. Choosing between minibatch and online learning depends on the dataset size, computational resources, and the need for rapid model updates.

Table of Comparison

| Aspect | Minibatch Learning | Online Learning |
|---|---|---|
| Data Processing | Processes small batches of data at once | Processes data one sample at a time |
| Update Frequency | Updates model after each minibatch | Updates model after every single data point |
| Convergence Speed | Faster convergence due to batch gradient stability | Slower convergence but adapts quickly to new data |
| Memory Usage | Requires more memory for batch storage | Minimal memory usage, ideal for streaming data |
| Use Case | Works well when data is static and large | Best for real-time, dynamic environments |

Introduction to Minibatch and Online Learning

Minibatch learning processes small subsets of data at a time, balancing computational efficiency and model convergence stability by updating weights after each subset. Online learning updates the model incrementally with each individual data sample, enabling real-time adaptation to streaming data and reducing memory requirements. Both methods optimize the training process in machine learning by addressing trade-offs between computational cost, convergence speed, and data availability.

Core Principles of Minibatch Learning

Minibatch learning processes small subsets of data called minibatches, trading computational efficiency against gradient estimation accuracy; online learning's single-sample updates correspond to the limiting case of a batch size of one. Core principles include reducing variance in gradient estimates through averaging, improving convergence stability, and enabling parallel computation on modern hardware. The result is better hardware utilization while keeping parameter updates frequent enough for training to progress quickly.
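
The averaging step described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the function name `minibatch_sgd`, the toy data, and the hyperparameters (`batch_size`, `lr`, `epochs`) are all assumptions chosen for the example.

```python
import random

def minibatch_sgd(xs, ys, batch_size=4, lr=0.05, epochs=200, seed=0):
    """Fit y = w*x + b by minibatch SGD on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # draw different batches each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Average the per-sample gradients over the batch.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            # One parameter update per minibatch.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Hypothetical noise-free toy data generated from y = 3x + 1.
xs = [i / 10 for i in range(20)]
ys = [3 * x + 1 for x in xs]
w, b = minibatch_sgd(xs, ys)
print(round(w, 3), round(b, 3))
```

Setting `batch_size=1` in this sketch turns it into per-sample updates, which is the online-style extreme of the same loop.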

Core Principles of Online Learning

Online learning processes data instances sequentially, updating the model incrementally after each example, which enables real-time adaptability and efficient handling of streaming data. It relies on gradient-based updates or loss function optimization performed one sample at a time, minimizing memory usage compared to minibatch learning. Core principles emphasize continuous model refinement, the ability to track non-stationary data distributions, and resilience to concept drift in dynamic environments.
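
As a rough sketch of per-sample updating, the example below feeds a synthetic stream to a small linear model, one observation at a time. The class `OnlineLinearRegressor`, the `stream` generator, and the abrupt slope change halfway through (a stand-in for concept drift) are all hypothetical and exist only for illustration.

```python
class OnlineLinearRegressor:
    """Linear model y = w*x + b updated one sample at a time."""

    def __init__(self, lr=0.05):
        self.w = 0.0
        self.b = 0.0
        self.lr = lr

    def predict(self, x):
        return self.w * x + self.b

    def update(self, x, y):
        """Take one SGD step on the squared error of a single sample."""
        error = self.predict(x) - y
        self.w -= self.lr * 2 * error * x
        self.b -= self.lr * 2 * error
        return error

def stream():
    # Hypothetical stream: the true slope switches from 2 to -1 halfway through.
    for t in range(4000):
        x = (t % 20) / 10
        slope = 2.0 if t < 2000 else -1.0
        yield x, slope * x

model = OnlineLinearRegressor()
for t, (x, y) in enumerate(stream()):
    model.update(x, y)  # nothing is stored besides w and b
    if t == 1999:
        w_before_drift = model.w
w_after_drift = model.w
```

In this toy setting the model first settles near a slope of 2 and then moves toward -1 after the switch, without ever revisiting old samples.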

Key Differences: Minibatch vs Online Learning

Minibatch learning processes fixed-size subsets of data, balancing computational efficiency and gradient variance reduction, whereas online learning updates model parameters one sample at a time, enabling rapid adaptation to streaming data. Minibatch training improves stability and convergence speed by averaging gradients over batches, while online learning excels in real-time scenarios with limited memory. The choice between minibatch and online learning depends on dataset size, computational resources, and the need for model responsiveness.
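
Written as update rules, the difference is compact. The notation here is an assumption introduced for illustration and does not come from the text above: $\theta_t$ denotes the parameters at step $t$, $\eta$ the learning rate, $\ell$ the per-sample loss, and $B_t$ the minibatch drawn at step $t$.

$$
\theta_{t+1} = \theta_t - \eta \,\frac{1}{|B_t|} \sum_{i \in B_t} \nabla_\theta\, \ell(\theta_t; x_i, y_i) \qquad \text{(minibatch)}
$$

$$
\theta_{t+1} = \theta_t - \eta \,\nabla_\theta\, \ell(\theta_t; x_t, y_t) \qquad \text{(online)}
$$

The online rule is the minibatch rule with $|B_t| = 1$, applied to each sample as it arrives.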

Performance Comparison: Accuracy and Speed

Minibatch learning processes fixed-size data subsets, which generally improves training speed and stability relative to online learning, where the model is updated with each data point. Minibatch methods often reach higher accuracy on static datasets because their gradient estimates are more stable, while online learning excels in scenarios requiring rapid model adaptation but may suffer from noisier updates. Which approach performs better depends on dataset size and computational resources; minibatch learning is generally preferred when parallel hardware is available and faster convergence is the goal.

Memory and Computational Efficiency

Minibatch learning processes data in fixed-size subsets, so only one batch needs to be held in RAM at a time, and each batch can be processed with vectorized operations that make good use of the hardware. Online learning updates model parameters incrementally with each individual data point, requiring minimal memory but potentially increasing computational overhead, because many small updates cannot be vectorized or parallelized as effectively. Choosing between minibatch and online learning depends on the trade-off between real-time adaptability and the benefits of batch processing for hardware acceleration and memory management.
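
One way to keep memory bounded is to cut an incoming iterable into batches lazily, so that only the current batch is ever materialized. The helper name `minibatches` below is hypothetical; `itertools.islice` is from the Python standard library.

```python
from itertools import islice

def minibatches(stream, batch_size):
    """Yield lists of up to batch_size items, holding only one batch in memory."""
    it = iter(stream)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return  # stream exhausted
        yield batch

# Usage: ten items in batches of four; the last batch is shorter.
batches = list(minibatches(range(10), 4))
print(batches)  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
```

With `batch_size=1` the same helper hands over one sample at a time, which matches the online setting.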

Use Cases: When to Choose Minibatch Learning

Minibatch learning is ideal for training deep neural networks on large datasets where hardware constraints limit memory capacity, striking a balance between computational efficiency and gradient stability. It suits scenarios like image recognition and natural language processing, where parallel processing accelerates convergence without sacrificing model accuracy. Enterprises handling extensive data streams benefit from minibatch learning by leveraging batch updates to stabilize learning dynamics while managing noise inherent in online approaches.

Use Cases: When to Choose Online Learning

Online learning is ideal for scenarios with streaming data or when computational resources are limited, enabling models to update continuously without storing the entire dataset. This approach suits real-time applications like fraud detection, recommendation systems, and adaptive control where data arrives sequentially and immediate model adjustments are critical. Minibatch learning is less practical in such dynamic environments, because waiting to accumulate a batch or to retrain delays adaptation to new data.

Impact on Model Convergence

Minibatch learning balances gradient estimation accuracy and computational efficiency by using small, fixed-size subsets of data, promoting stable and faster model convergence compared to online learning. Online learning updates the model with each individual data point, which can introduce high variance in gradient estimates and result in slower or more unstable convergence. Selecting an appropriate minibatch size optimizes convergence speed and model performance by reducing noise while maintaining timely parameter updates.
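
The noise reduction from averaging can be checked empirically. The sketch below assumes a deliberately simple setup that is not from the text above: a one-parameter loss `0.5 * (w - x)**2` with samples `x` drawn independently from a normal distribution. For independent samples, the variance of the averaged gradient should shrink roughly in proportion to `1 / batch_size`.

```python
import random
import statistics

def gradient_variance(batch_size, trials=2000, seed=0):
    """Empirical variance of the minibatch gradient of 0.5*(w - x)**2 at w = 0."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        # Per-sample gradient at w = 0 is (w - x) = -x, with x drawn from N(1, 1).
        grads = [-rng.gauss(1.0, 1.0) for _ in range(batch_size)]
        estimates.append(sum(grads) / batch_size)
    return statistics.variance(estimates)

variances = {b: gradient_variance(b) for b in (1, 4, 16, 64)}
for b, v in variances.items():
    print(f"batch_size={b:3d}  variance={v:.4f}")
```

Larger batches give quieter gradient estimates but fewer updates per pass over the data, which is the trade-off that batch-size selection has to balance.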

Future Trends in Data Stream Learning

Future trends in data stream learning emphasize hybrid approaches combining minibatch and online learning to optimize model adaptability and computational efficiency. Advances in algorithms will leverage real-time data processing with periodic minibatch updates to mitigate concept drift and improve predictive accuracy. Integration of edge computing and federated learning is poised to enhance scalability and privacy in continuous data stream environments.

minibatch vs online learning Infographic

techiny.com