Mini-batch training processes small groups of data samples in each iteration, balancing computational efficiency and model convergence speed. Online training updates model parameters after each individual data point, enabling adaptation to dynamic data streams but often requiring careful tuning to avoid noisy gradients. Choosing between mini-batch and online training depends on dataset size, computational resources, and the need for real-time model updates.

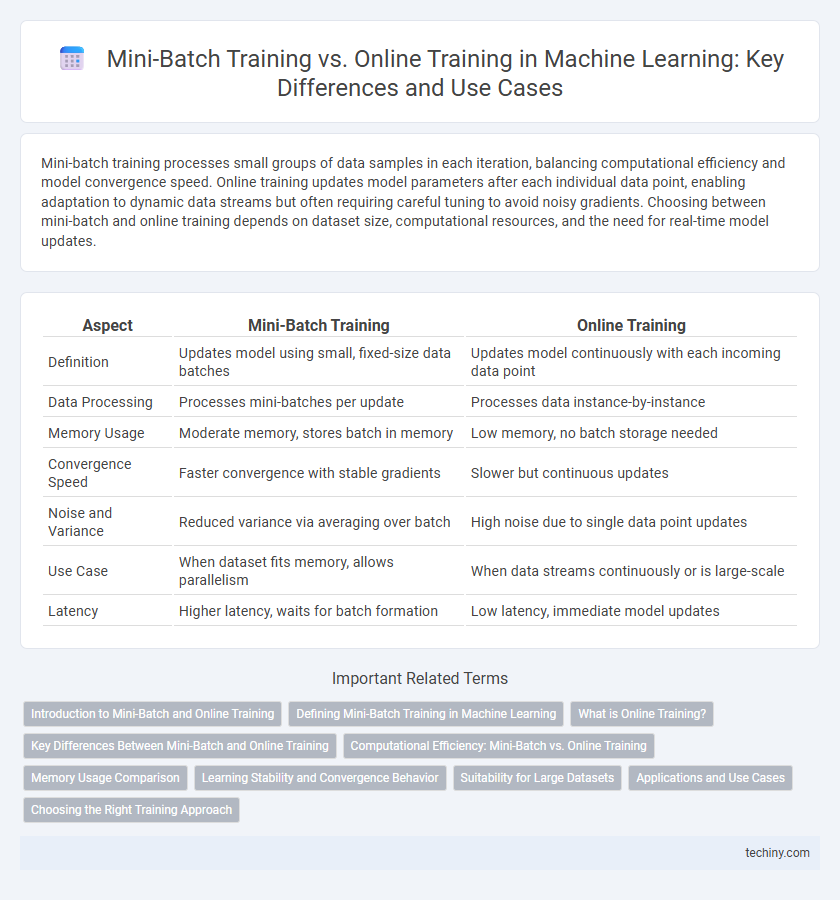

Table of Comparison

| Aspect | Mini-Batch Training | Online Training |

|---|---|---|

| Definition | Updates model using small, fixed-size data batches | Updates model continuously with each incoming data point |

| Data Processing | Processes mini-batches per update | Processes data instance-by-instance |

| Memory Usage | Moderate memory, stores batch in memory | Low memory, no batch storage needed |

| Convergence Speed | Faster convergence with stable gradients | Slower but continuous updates |

| Noise and Variance | Reduced variance via averaging over batch | High noise due to single data point updates |

| Use Case | When dataset fits memory, allows parallelism | When data streams continuously or is large-scale |

| Latency | Higher latency, waits for batch formation | Low latency, immediate model updates |

Introduction to Mini-Batch and Online Training

Mini-batch training processes a subset of the dataset in each iteration, balancing computational efficiency and gradient accuracy, making it suitable for large-scale machine learning models. Online training, also known as stochastic gradient descent (SGD), updates model parameters using one data sample at a time, allowing fast adaptation and reduced memory usage, ideal for streaming data scenarios. Both techniques enhance model convergence speed and generalization by managing the trade-off between update frequency and noise in gradient estimation.

Defining Mini-Batch Training in Machine Learning

Mini-batch training in machine learning involves dividing the training dataset into small, fixed-size batches to update model parameters iteratively, balancing between stochastic and batch gradient descent. This approach improves computational efficiency by leveraging vectorized operations and stabilizes convergence through averaged gradients, typically using batch sizes ranging from 32 to 256 samples. Mini-batch training enhances model generalization and reduces training time compared to online training, which updates the model with one sample at a time.

What is Online Training?

Online training in machine learning refers to the continuous updating of model parameters as each individual data point is received, enabling real-time learning and adaptation. Unlike mini-batch training that processes small batches of data simultaneously, online training allows immediate incorporation of new information without waiting for batch completion. This approach is highly effective for streaming data and applications requiring rapid response to evolving patterns.

Key Differences Between Mini-Batch and Online Training

Mini-batch training processes fixed-size subsets of the dataset, balancing computational efficiency and gradient estimation accuracy, while online training updates model parameters after each individual data point, enabling faster convergence but noisier gradients. Mini-batch training leverages hardware acceleration like GPUs more effectively, improving throughput, whereas online training suits streaming data or non-stationary environments requiring continuous model adaptation. The choice impacts memory usage, convergence speed, and model stability, with mini-batches offering smoother updates and online training providing responsiveness to real-time data changes.

Computational Efficiency: Mini-Batch vs. Online Training

Mini-batch training improves computational efficiency by leveraging parallel processing on GPUs, allowing simultaneous updates with multiple samples, which reduces training time compared to online training that processes one sample at a time. Online training offers faster model updates suitable for streaming data but suffers from higher variance gradients, leading to less stable convergence and increased computation per update. Mini-batch sizes between 32 and 256 balance memory usage and computational speed, optimizing throughput and enabling efficient gradient estimation for deep learning models.

Memory Usage Comparison

Mini-batch training strikes a balance between memory efficiency and computational speed by processing subsets of the dataset, requiring moderate memory allocation proportional to the batch size. Online training, or stochastic gradient descent, processes one data sample at a time, minimizing memory usage and making it suitable for streaming data and resource-constrained environments. Memory consumption in mini-batch training increases with larger batches due to storage of multiple samples and intermediate computations, whereas online training's memory footprint remains consistently low.

Learning Stability and Convergence Behavior

Mini-batch training offers improved learning stability by averaging gradients over small data batches, reducing variance and leading to smoother convergence paths compared to online training. Online training updates model parameters after each data point, causing high gradient noise that can destabilize learning but may enable faster adaptation in non-stationary environments. Convergence behavior in mini-batch training is typically more predictable and less oscillatory, making it preferable for complex models requiring stable optimization trajectories.

Suitability for Large Datasets

Mini-batch training excels in handling large datasets by dividing data into small, manageable batches that optimize computational efficiency and memory usage. This approach enables stable gradient estimation and faster convergence compared to online training, which processes one data point at a time and may struggle with noise in gradient updates. Consequently, mini-batch training is more suitable for large-scale machine learning tasks involving high-dimensional data and extensive datasets.

Applications and Use Cases

Mini-batch training excels in applications requiring stable convergence and efficient GPU utilization, such as image recognition and natural language processing tasks with large datasets. Online training suits real-time data scenarios like financial market prediction, online recommendation systems, and anomaly detection where models must update continuously. Selecting between mini-batch and online training directly influences model performance and resource allocation in time-sensitive versus large-scale batch processing environments.

Choosing the Right Training Approach

Mini-batch training balances computational efficiency and model convergence by processing small, fixed-size subsets of data, improving gradient estimation stability compared to pure online training. Online training updates model parameters with each data point, offering faster adaptation to streaming or non-stationary data but can suffer from higher gradient variance. Selecting the appropriate training method depends on dataset size, computational resources, and the need for real-time model updates.

mini-batch training vs online training Infographic

techiny.com

techiny.com