Hard margin SVM enforces a strict separation between classes, allowing no misclassification and requiring perfectly linearly separable data. Soft margin SVM introduces a tolerance level for misclassification, balancing maximizing the margin and minimizing classification errors, which improves performance on noisy or non-linearly separable datasets. This flexibility makes soft margin SVM more widely applicable in practical machine learning tasks where data often contains overlapping classes.

Table of Comparison

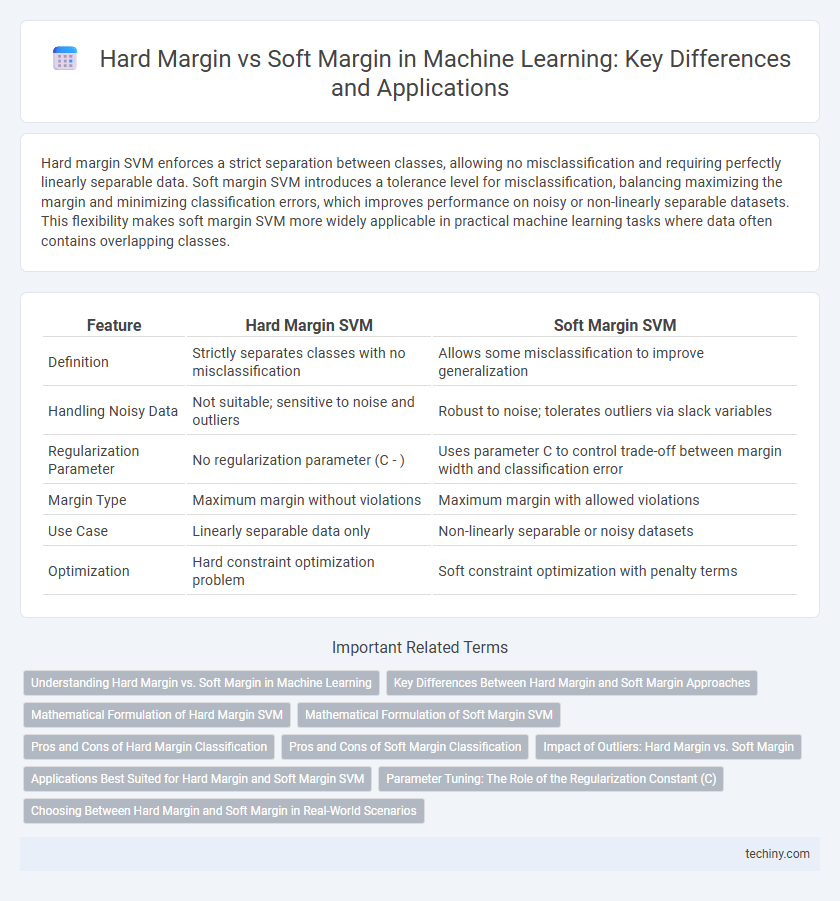

| Feature | Hard Margin SVM | Soft Margin SVM |

|---|---|---|

| Definition | Strictly separates classes with no misclassification | Allows some misclassification to improve generalization |

| Handling Noisy Data | Not suitable; sensitive to noise and outliers | Robust to noise; tolerates outliers via slack variables |

| Regularization Parameter | No regularization parameter (C - ) | Uses parameter C to control trade-off between margin width and classification error |

| Margin Type | Maximum margin without violations | Maximum margin with allowed violations |

| Use Case | Linearly separable data only | Non-linearly separable or noisy datasets |

| Optimization | Hard constraint optimization problem | Soft constraint optimization with penalty terms |

Understanding Hard Margin vs. Soft Margin in Machine Learning

Hard margin in machine learning refers to an SVM approach where the model demands perfect separation of classes with no misclassification allowed, suitable for linearly separable data. Soft margin SVM introduces a tolerance for classification errors by allowing some misclassified points through a slack variable, balancing margin maximization and error minimization. This trade-off helps soft margin models perform better on noisy or overlapping datasets, enhancing generalization compared to the rigid hard margin.

Key Differences Between Hard Margin and Soft Margin Approaches

Hard margin SVM requires data to be perfectly linearly separable, maximizing the margin without allowing any misclassifications, which can lead to overfitting in noisy datasets. Soft margin SVM introduces a regularization parameter, allowing some misclassifications to achieve better generalization and robustness on non-linearly separable or noisy data. The key difference lies in the flexibility of soft margin to balance margin maximization and classification errors, optimizing model performance on real-world datasets.

Mathematical Formulation of Hard Margin SVM

Hard Margin SVM aims to find a hyperplane that perfectly separates data points with a maximum margin by solving the optimization problem min_w ||w||^2 subject to y_i(w*x_i + b) >= 1 for all i. This formulation enforces strict constraints, allowing no misclassification or margin violations, which requires data to be linearly separable. The objective function minimizes the norm of the weight vector w, maximizing the margin between classes while ensuring all samples lie outside the margin boundaries.

Mathematical Formulation of Soft Margin SVM

Soft Margin SVM mathematically introduces slack variables \( \xi_i \geq 0 \) to the optimization problem, allowing some misclassification or margin violations while minimizing \( \frac{1}{2} \|w\|^2 + C \sum_{i=1}^n \xi_i \), where \(w\) is the weight vector and \(C\) controls the trade-off between margin width and classification error. The constraints \( y_i (w \cdot x_i + b) \geq 1 - \xi_i \) ensure that data points can lie within the margin boundary or be misclassified depending on the slack values. This formulation balances margin maximization and error minimization, enabling better generalization in non-linearly separable or noisy datasets.

Pros and Cons of Hard Margin Classification

Hard margin classification in machine learning enforces a strict separation of data points with zero tolerance for misclassification, making it ideal for perfectly linearly separable datasets. Its main advantage is simplicity and strong generalization on clean data, but it lacks robustness to noise and outliers, which can cause overfitting and poor performance on real-world data. The inability to handle data overlap limits its use in practical scenarios where soft margin classifiers, allowing some misclassifications, offer more flexibility and better resilience.

Pros and Cons of Soft Margin Classification

Soft margin classification in machine learning allows some misclassifications to achieve better generalization on noisy or non-linearly separable data. It provides flexibility in capturing data patterns but may sacrifice perfect separation, leading to potential errors on training samples. This balance reduces overfitting risk and enhances model robustness in complex real-world scenarios.

Impact of Outliers: Hard Margin vs. Soft Margin

Hard margin support vector machines (SVM) strictly separate classes with a clear boundary, making them highly sensitive to outliers, which can distort the decision boundary and lead to poor generalization. Soft margin SVM introduces a tolerance for misclassification, allowing some data points to lie within the margin or on the wrong side of the hyperplane, thus enhancing robustness against outliers. This flexibility improves model performance on real-world, noisy datasets by balancing margin maximization and classification error.

Applications Best Suited for Hard Margin and Soft Margin SVM

Hard margin SVMs are best suited for applications with perfectly separable data and minimal noise, such as text classification or image recognition tasks where clear boundaries exist. Soft margin SVMs perform better in real-world scenarios with overlapping classes and noisy data, making them ideal for bioinformatics, speech recognition, and financial modeling. The choice between hard and soft margin directly impacts model robustness and generalization in various machine learning applications.

Parameter Tuning: The Role of the Regularization Constant (C)

The regularization constant (C) plays a critical role in parameter tuning by controlling the trade-off between maximizing the margin and minimizing classification errors in support vector machines. A high value of C results in a hard margin, prioritizing exact classification of training data with less tolerance for misclassification, while a low C value leads to a soft margin allowing more misclassifications for greater generalization. Careful tuning of C optimizes model performance by balancing bias and variance, directly influencing the decision boundary's flexibility and robustness.

Choosing Between Hard Margin and Soft Margin in Real-World Scenarios

Choosing between hard margin and soft margin in machine learning depends on the dataset's noise level and linear separability; hard margin suits perfectly separable and noise-free data, while soft margin accommodates overlap and outliers by allowing some misclassifications. Soft margin optimization introduces a regularization parameter (C) to balance margin maximization and classification error, crucial for generalization in real-world noisy environments. Selecting the appropriate margin impacts model robustness, preventing overfitting in complex data distributions and ensuring effective support vector machine (SVM) performance.

hard margin vs soft margin Infographic

techiny.com

techiny.com